One fine day I was setting up a nested encrypted container protected by a hardware crypto key, trying to figure out how you could get to the data if the key was pulled from the machine. The more I thought about it, the clearer the answer became: you can’t. Right then I was asked to comment on a document—essentially a field manual for police forensic experts on finding digital evidence. I was so impressed that, without asking the authors for formal permission, I decided to write an article based on it. The nested containers and the hardware key went on the back burner, and I sat down to study the techniques that, according to the police, most suspects use to cover their tracks and hide evidence.

Moving data to a different folder

No, we’re not talking about “hiding” a stash of porn pics in a folder called “Strength of Materials term papers.” We’re talking about a naive but fairly effective way to hide evidence by simply moving certain data to another location on the disk.

Is this obvious nonsense or just extreme naïveté? As simplistic as this method may seem, it can actually work for rare and exotic artifacts—like Windows Jump Lists (do you even know what those are?) or a WhatsApp message database. An examiner may simply not have the time or persistence to comb through an entire machine looking for a database from… well, from what exactly? There are hundreds, if not thousands, of messaging apps—good luck guessing which one the suspect used. And remember, each app has its own file paths, filenames, and even database formats. Manually hunting for them on a system with tens of thousands of folders and hundreds of thousands of files is a losing battle.

Obviously, this way of hiding evidence will only work if the case has nothing to do with information security. In such cases the investigator has little reason to scrutinize the contents of the suspect’s hard drive, and the analysis becomes a mere formality. In those and only those situations, the method (let’s call it the “Elusive Joe” method) can work.

Using “secure” communication methods

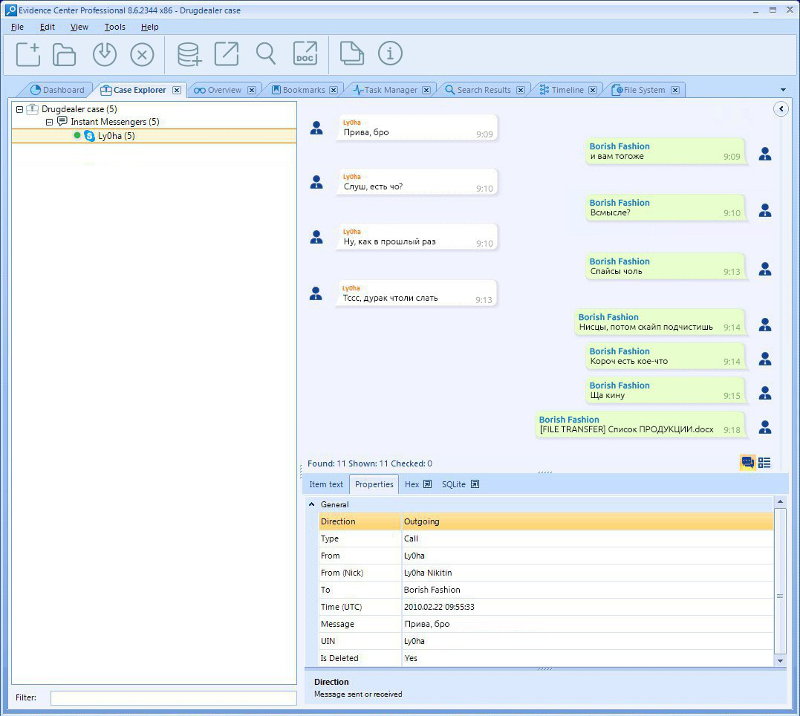

This point really impressed me. Surprisingly, modern criminals have a solid grasp of security. They understand quite well how chat messages are transmitted between participants, where they’re stored, and how to delete them.

The document details the avenues investigators can use to gain access to chats. These include analyzing the freelist space in SQLite databases, issuing lawful requests to messaging providers (for example, Microsoft can readily provide Skype conversation logs since they’re stored on the company’s servers), and even contacting smartphone manufacturers—recalling the case where BlackBerry helped Canadian police track down a drug ring that had decided to use the company’s proprietary messenger on the legacy BlackBerry OS platform.
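To make the freelist idea concrete: when rows or tables are deleted, SQLite usually does not shrink the file but puts the freed pages on a freelist, and the database header records where that list starts and how many pages it holds. The sketch below is not taken from the police document. It only reads those two header fields (byte offsets 32 and 36 in the documented SQLite file format), and the function names are illustrative.

```python
import struct

SQLITE_MAGIC = b"SQLite format 3\x00"


def parse_freelist_header(header: bytes) -> dict:
    """Extract freelist fields from the first 100 bytes of a SQLite file."""
    if len(header) < 100 or header[:16] != SQLITE_MAGIC:
        raise ValueError("not a SQLite 3 database header")
    # Page size: 2-byte big-endian integer at offset 16 (the value 1 means 65536).
    (page_size,) = struct.unpack(">H", header[16:18])
    if page_size == 1:
        page_size = 65536
    # Offset 32: page number of the first freelist trunk page (0 if none).
    # Offset 36: total number of freelist pages.
    first_trunk, free_pages = struct.unpack(">II", header[32:40])
    return {
        "page_size": page_size,
        "first_freelist_trunk_page": first_trunk,
        "freelist_pages": free_pages,
    }


def read_freelist_header(path: str) -> dict:
    """Convenience wrapper: read the header straight from a database file."""
    with open(path, "rb") as f:
        return parse_freelist_header(f.read(100))
```

A non-zero `freelist_pages` value only says that freed pages exist. Whether they still hold old messages depends on the application, for example on whether it enables SQLite's secure-delete mode or vacuums the database.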

In this context, a curious case comes to mind that I heard about at a law enforcement event. U.S. police detained a man suspected of large-scale drug trafficking. He was fairly tech-savvy and used Apple iMessage as his only means of communication. iMessage chats aren’t stored on Apple’s servers, and the suspect meticulously deleted his message history after each session. His iPhone backup contained nothing of interest.

However, when the police examined his computer (the suspect had a Mac), they hit the jackpot: the machine contained hundreds of thousands of messages the suspect—by then clearly a criminal—didn’t even know existed. What helped convict the drug dealer was a then-new Apple feature, Continuity, which synced iMessage across all devices registered to the same Apple ID.

As an aside, the speaker complained that none of the iMessage database analysis tools available at the time could handle the sheer volume of messages; they kept crashing, so the police had to write their own utility to parse the bloated database.

The takeaway? There isn’t one: unless you’re an IT professional, you can’t really be expected to know about stuff like this.

Renaming files

Another naive way to hide evidence is by renaming files. As simple as it sounds, renaming, say, the encrypted database of a secure messenger to something like C:\ can easily let it slip past even a careful examiner. After all, Windows directories contain thousands of files; finding something out of place among them, especially if it doesn’t stand out by size, is not a task you can realistically do by hand.

How are such files found? It’s understandable that a layperson might not know there’s an entire class of specialized tools built specifically to locate these kinds of files on a suspect’s disks (and disk images). Investigators don’t just search by filename; they use a comprehensive approach, analyzing artifacts—such as entries in the Windows Registry—from installed applications, then pivoting to the file paths those applications have accessed.

Another popular way to locate renamed files is content carving—deep, content-based scanning. The same idea, better known as signature scanning, has been used since the earliest days by practically all antivirus engines. Carving lets you analyze not only the contents of files on the disk, but also the disk’s raw data itself (optionally limited to allocated areas) at a low level.
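A minimal sketch of the signature idea, assuming a tiny hand-picked table of well-known file headers: the file's name and extension are ignored, and only its first bytes are checked. The table and function names are illustrative and do not come from any particular forensic product.

```python
from typing import Optional

# A few well-known file headers ("magic bytes"); real tools know thousands.
SIGNATURES = {
    b"SQLite format 3\x00": "SQLite database",
    b"\xff\xd8\xff": "JPEG image",
    b"\x89PNG\r\n\x1a\n": "PNG image",
    b"PK\x03\x04": "ZIP container (also DOCX, XLSX, APK)",
    b"%PDF-": "PDF document",
}


def identify(data: bytes) -> Optional[str]:
    """Return a label for the content, judged only by its leading bytes."""
    for magic, label in SIGNATURES.items():
        if data.startswith(magic):
            return label
    return None


def identify_file(path: str) -> Optional[str]:
    """Identify a file by content; whatever it has been renamed to is irrelevant."""
    with open(path, "rb") as f:
        return identify(f.read(32))
```

Note the limitation: a fully encrypted database typically has no recognizable header, so a check like this returns nothing for it. That is one reason examiners also lean on application artifacts rather than on signatures alone.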

Is it worth renaming files? That’s just security by obscurity—at best it might slow down a very lazy investigator.

Deleting files

I’m not sure how “naive” it is these days to try to hide evidence by simply deleting files. The thing is, files deleted from conventional hard drives are usually fairly easy to recover using well-known signature-based file carving: the disk is scanned block by block—focusing on areas not occupied by existing files or other filesystem structures—and each block is analyzed against a set of criteria (for example, whether it looks like the header of a specific file format, a fragment of a text file, and so on). With this kind of scanning, which can be performed fully automatically, the chances of successfully recovering deleted files—at least partially—are quite high.
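The block-by-block scan described above can be sketched as follows. This is a deliberately simplified illustration with several assumptions: the whole image fits in memory, files start on block boundaries, files are not fragmented, and only JPEG is handled. The names `carve_jpegs`, `block_size` and `max_size` are made up for the example.

```python
JPEG_HEADER = b"\xff\xd8\xff"  # start-of-image marker plus first marker byte
JPEG_FOOTER = b"\xff\xd9"      # end-of-image marker


def carve_jpegs(image: bytes, block_size: int = 512, max_size: int = 10_000_000):
    """Scan a raw image block by block and cut out anything that looks like a JPEG.

    Returns a list of (offset, data) tuples.
    """
    carved = []
    for offset in range(0, len(image), block_size):
        # Does this block start with a JPEG header?
        if image[offset:offset + len(JPEG_HEADER)] != JPEG_HEADER:
            continue
        # Look for the first end-of-image marker within a sane distance.
        end = image.find(JPEG_FOOTER, offset + len(JPEG_HEADER), offset + max_size)
        if end != -1:
            carved.append((offset, image[offset:end + len(JPEG_FOOTER)]))
    return carved
```

Real carvers are far more careful: they validate the internal structure of each candidate, cope with embedded thumbnails and fragmentation, and restrict the scan to unallocated space when asked to.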

You might think deleting files is the classic, naive way to cover your tracks. That’s not the case when those files are on SSDs.

Here we should dig a bit deeper into how deletion (and later reading) of data works on SSDs. You’ve probably heard about the “garbage collector” and the TRIM function, which help modern SSDs maintain high write performance, especially when rewriting data. The TRIM command is issued by the operating system; it tells the SSD controller that certain data blocks have been freed and are no longer in use. The addresses in the command look “physical” to the operating system, but they are really logical block addresses that the controller maps to actual flash cells.

The controller’s task now is to erase (wipe the data in) the specified blocks, preparing them so new data can be written to them quickly.

But erasing data is a very slow process, and it runs in the background when the drive isn’t busy. What if a write command arrives right after TRIM, targeting that same “physical” block? In that case, the controller instantly swaps it for a clean block by updating the address translation table. The block that was scheduled for erasure gets a new physical location or is moved into the unaddressable spare (overprovisioned) area.

Trick question: if the controller hasn’t yet physically erased data from TRIMmed blocks, can a signature-based scan find anything in the SSD’s free space?

Correct answer: in most cases, if you try to read from a block that has been issued a TRIM, the controller will return either zeros or some other data pattern unrelated to the block’s original contents. This is due to how modern SSD protocols define controller behavior for post-TRIM reads. There are three possibilities: Undefined (the controller may return the block’s original contents; rarely seen in modern SSDs), DRAT (Deterministic Read After TRIM—returns a fixed data pattern; common in consumer drives), and DZAT (Deterministic Zeroes After TRIM—always returns zeros; often found in RAID/NAS/server-oriented models).

Thus, in the overwhelming majority of cases, the controller returns data that has nothing to do with what is actually stored in the flash cells. As a result, you usually won’t be able to recover deleted files from an SSD, even just seconds after they’re removed.
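The behaviour described above can be illustrated with a toy model. This is not how any real controller is implemented. It is a sketch that assumes a DZAT-style drive, a trivial address translation table and a background eraser that is run by hand, and the class and method names are invented for the example.

```python
class ToySSD:
    """Toy SSD controller that returns zeros for trimmed blocks (DZAT-like)."""

    def __init__(self, num_blocks: int, block_size: int = 16):
        self.block_size = block_size
        self.flash = [bytes(block_size)] * num_blocks  # physical blocks
        self.mapping = {}                              # logical -> physical
        self.free = list(range(num_blocks))            # clean physical blocks
        self.pending_erase = []                        # trimmed, not yet erased

    def write(self, lba: int, data: bytes) -> None:
        data = data.ljust(self.block_size, b"\x00")[: self.block_size]
        old = self.mapping.get(lba)
        if old is not None:
            self.pending_erase.append(old)  # old copy is erased later
        phys = self.free.pop(0)             # always write to a clean block
        self.flash[phys] = data
        self.mapping[lba] = phys

    def trim(self, lba: int) -> None:
        # Only the translation table changes; the flash is untouched for now.
        phys = self.mapping.pop(lba, None)
        if phys is not None:
            self.pending_erase.append(phys)

    def read(self, lba: int) -> bytes:
        phys = self.mapping.get(lba)
        if phys is None:
            return bytes(self.block_size)   # deterministic zeros after TRIM
        return self.flash[phys]

    def garbage_collect(self) -> None:
        # Background erase: wipe queued blocks and return them to the free pool.
        while self.pending_erase:
            phys = self.pending_erase.pop()
            self.flash[phys] = bytes(self.block_size)
            self.free.append(phys)
```

In this model, writing `b"secret"` to logical block 0 and then calling `trim(0)` makes `read(0)` return zeros immediately, although the bytes are still sitting in `flash` until `garbage_collect()` runs. A signature scan through the normal read interface therefore sees nothing.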

Data in the cloud

Data in the cloud? You might think no criminal today would be that careless—and you’d be wrong. People routinely forget to disable iCloud Photos, OneDrive, or Google Drive sync, and even more obscure syncing—like the option (which iOS doesn’t even expose; maybe that’s why it’s overlooked?) that sends iPhone call logs, both regular and FaceTime, straight to Apple’s servers. I’ve already cited examples involving a left-on Continuity feature and BlackBerry Messenger.

I don’t have much to add here, except that data stored in these companies’ clouds is handed over to law enforcement with minimal resistance.

Using removable media

Using encrypted USB drives to store data tied to illegal activity seems like a brilliant idea to criminals. In their minds, there’s no need to delete anything—just pull the drive out of the computer, and no one will ever access the data (assuming the protection is strong). That’s the naive criminal’s logic.

Why “naive”? Because most everyday users have no idea about the traces left behind by almost any interaction with USB devices. For example, there was once an investigation into the distribution of child sexual abuse material (CSAM). The perpetrators used only external storage (ordinary USB flash drives); nothing was stored on the computers’ internal drives.

The perpetrators overlooked two key points. First, Windows logs USB device connections in the Registry; if you don’t clear it, that data can linger for a very long time. Second, when you browse images using Windows Explorer, the system automatically generates (and saves) thumbnail previews, typically under %LocalAppData%\. By analyzing those thumbnails and correlating the USB device IDs with the confiscated hardware, investigators were able to prove the defendants’ involvement in the alleged crime.

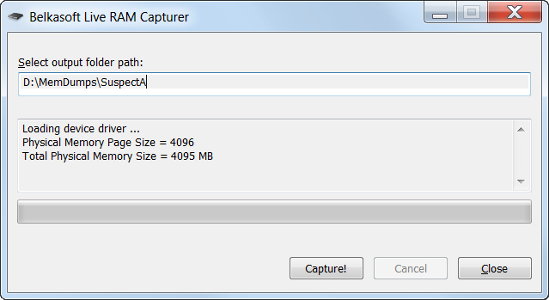
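To give a feel for the correlation step: Windows keeps a record of USB mass-storage devices under the `HKLM\SYSTEM\CurrentControlSet\Enum\USBSTOR` Registry key, where each device appears under a name encoding its vendor, product and revision, with the serial number as a subkey. The sketch below only parses such names and compares serial numbers. The sample identifiers are invented, and the function names are illustrative rather than part of any real tool.

```python
def parse_usbstor_id(device_id: str) -> dict:
    """Split a USBSTOR-style key name such as
    'Disk&Ven_SanDisk&Prod_Cruzer_Blade&Rev_1.26' into its fields."""
    parts = device_id.split("&")
    fields = {"type": parts[0]}
    for part in parts[1:]:
        key, sep, value = part.partition("_")
        if sep:
            fields[key.lower()] = value
    return fields


def match_devices(registry_entries, seized_serials):
    """Return registry entries whose serial number belongs to a seized device.

    registry_entries: iterable of (device_id, serial) pairs read from the Registry.
    seized_serials:   serial numbers of the confiscated drives.
    """
    seized = {s.upper() for s in seized_serials}
    hits = []
    for device_id, serial in registry_entries:
        # Windows often appends '&0' (or similar) to the serial in the subkey name.
        base = serial.split("&")[0].upper()
        if base in seized:
            hits.append((parse_usbstor_id(device_id), base))
    return hits
```

For example, with the hypothetical entry `("Disk&Ven_SanDisk&Prod_Cruzer_Blade&Rev_1.26", "4C53000123&0")` and a seized drive with serial `4c53000123`, `match_devices` reports one hit for a SanDisk Cruzer_Blade.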

What about encryption? That’s not straightforward either. First, there are specialized tools that let you capture a computer’s RAM dump and extract cryptographic keys used to access encrypted volumes (including BitLocker To Go, popular among naive offenders). One such tool is Elcomsoft Forensic Disk Decryptor, which can analyze the dump in a fully automated mode. You can create a RAM image using the free utility Belkasoft RAM Capturer.

Second, it’s no secret that many encrypted containers automatically escrow their encryption keys to the cloud. When you enable FileVault 2, Apple repeatedly informs you that you can recover access to the volume via iCloud; by contrast, when encrypting a volume with BitLocker Device Protection, Microsoft quietly creates an escrowed key in the user’s Microsoft Account. Those keys are available directly on the user account page.

How do you access the account? If the computer is set up to log in with a Microsoft Account (rather than a local Windows account), an offline brute-force attack can recover the password, which (surprise!) is the same as the password for the online Microsoft Account.

Final thoughts: Nested encrypted containers with a hardware key

It might look like an unbreakable defense. Our hypothetical user Vasya can chuckle and rub his hands, confident that his data is now perfectly safe.

In theory—yes. In practice… well, there are legal nuances. Here’s a clear example.

The defendant, accused of downloading and possessing child sexual abuse material, has been incarcerated in a U.S. prison for more than two years. The formal charge is his refusal to disclose the passwords to encrypted external storage (NAS) devices that the court believes contain such material.

Whether it’s stored there or not is unknown; no corresponding content was found in the defendant’s possession. But the charges are very serious, and apparently that’s reason enough to dispense with such trifles as the presumption of innocence and the right against self-incrimination. So the defendant is in jail and will stay there until he reveals the passwords—or dies of old age or for some other reason.

Not long ago, civil rights advocates filed an appeal arguing that, under the statute in question (refusal to cooperate with the investigation), the maximum term of confinement is 18 months. The appeal was rejected by the court even though the judge called the defense’s arguments “interesting and wide-ranging”. The more serious the charge, the more readily judges will look the other way—at anything, including the letter of the law.

Still want to play hide-and-seek with the law? I don’t have good news for you…