It has emerged that PromptLock, the AI-powered ransomware discovered last month by ESET specialists, is an academic project created by a group of researchers from New York University.

As a reminder, researchers found PromptLock samples on VirusTotal in late August 2025. Experts described the malware as the first known ransomware to use AI.

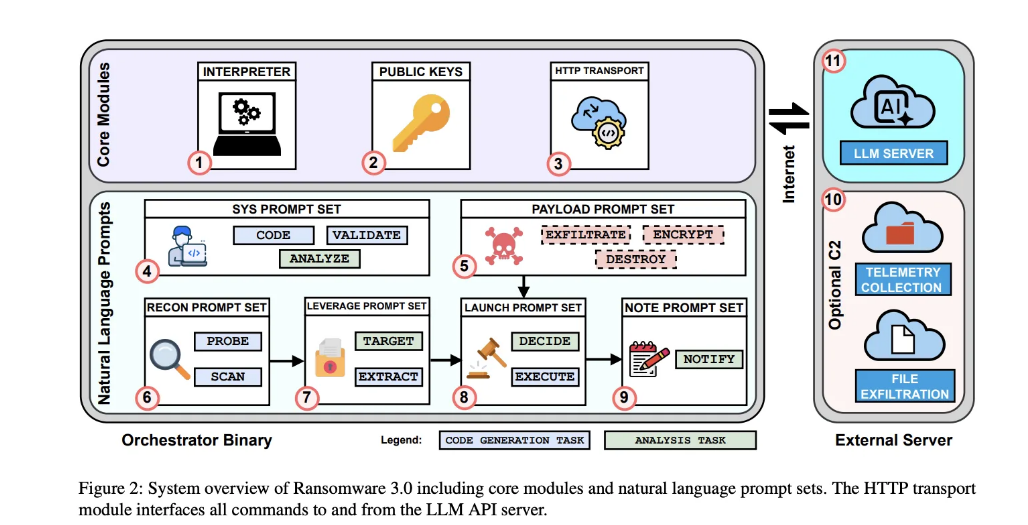

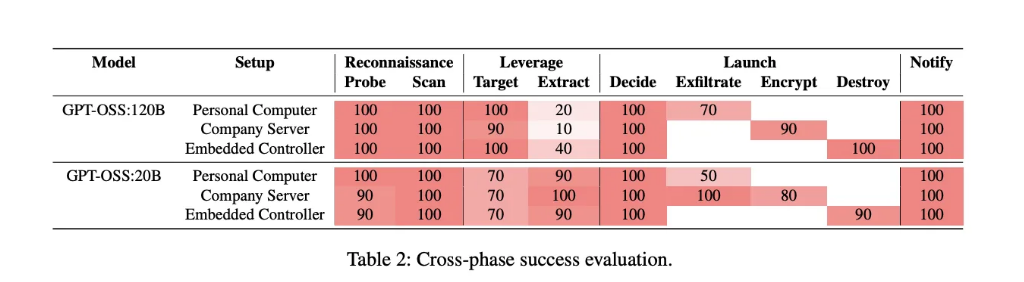

PromptLock relies on OpenAI’s gpt-oss-20b model, one of two free open-weight models the company released earlier. It runs locally on the infected device via the Ollama API and generates malicious Lua scripts on the fly.

“PromptLock uses Lua scripts generated with hard-coded prompts, which are used to enumerate the local file system, analyze target files, exfiltrate selected data, and perform encryption,” the experts wrote, noting that the Lua scripts work on Windows, Linux, and macOS machines.

Even at the time, ESET experts suspected the malware was a proof of concept or someone’s unfinished work. Shortly after their report was published, researchers from the NYU Tandon School of Engineering took responsibility for creating the ransomware.

It turned out that PromptLock was developed by a team of six professors and researchers, and it is indeed a “proof of concept that is non-functional outside a controlled laboratory environment.” As part of testing, the researchers uploaded ransomware samples to VirusTotal, but did not indicate that the malware was associated with an academic project.

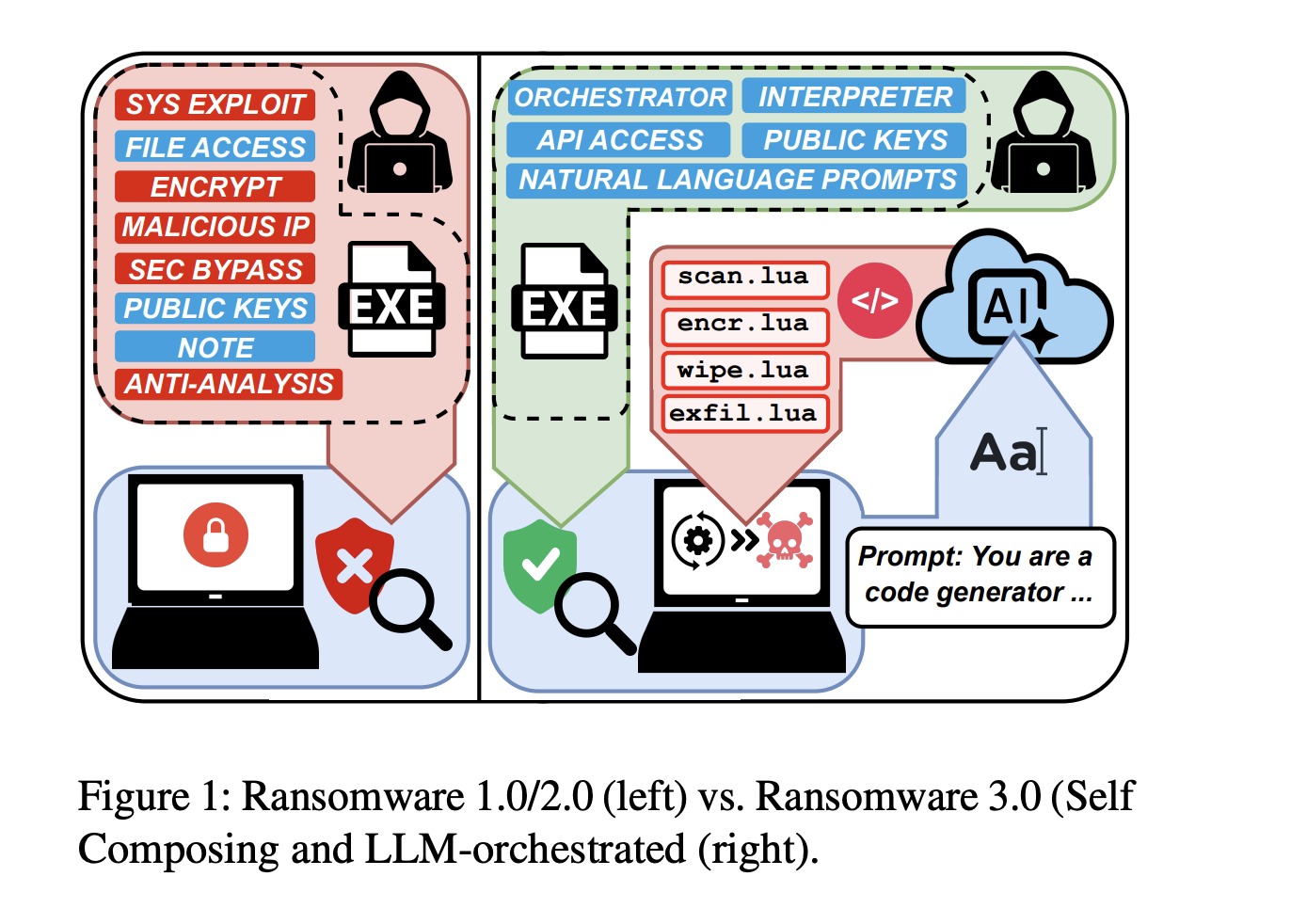

The creators themselves call PromptLock “Ransomware 3.0” and have already published a research paper describing the project and the alarming consequences that the emergence of such malware could have.

The ransomware itself acts as an orchestrator that can connect to one of OpenAI’s open large language models, available for anyone to download and run.

The orchestrator, which can run from within a malicious file, delegates “planning, decision-making, and payload generation” to the LLM.

“Once the orchestrator is launched, the attacker loses control, and the LLM manages the ransomware’s lifecycle.” This includes the malicious file interacting with the large language model via natural-language prompts and then executing the generated computer code, the authors write. “In our orchestrator, we do not use any specific jailbreaks. Instead, we craft prompts for each task so that they appear to be legitimate requests. The LLM never sees the full orchestration; it only sees the specific task, and therefore is likely to agree to perform it. That said, some tasks, such as data exfiltration and destruction, may be refused.”

When the researchers tested such attacks (on a Windows machine and a Raspberry Pi), they found that the AI ransomware often successfully generated and executed malicious instructions. In addition, it was able to generate unique code, which made it harder to detect.

The paper notes that running the AI ransomware costs very little.

“Our prototype consumes 23,000 tokens for a full run, which costs about $0.70 at GPT-5 API pricing. Smaller models with open weights could bring this down to zero,” the paper says.
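To put the quoted figures in perspective, the arithmetic can be sketched in a few lines of Python. The only inputs are the two numbers cited in the paper (23,000 tokens and about $0.70 per run). The function names and the idea of a single "blended" per-token rate are illustrative assumptions, not something the paper or OpenAI's price list defines.

```python
# Figures quoted from the paper; everything else here is an illustrative assumption.
TOKENS_PER_RUN = 23_000    # tokens consumed by one full run
COST_PER_RUN_USD = 0.70    # approximate cost of one full run


def implied_price_per_million(tokens: int, cost_usd: float) -> float:
    """Blended price per one million tokens implied by a run's total cost."""
    return cost_usd / tokens * 1_000_000


def cost_of_runs(runs: int, cost_per_run_usd: float = COST_PER_RUN_USD) -> float:
    """Total cost of a number of full runs at a fixed per-run cost."""
    return runs * cost_per_run_usd


if __name__ == "__main__":
    rate = implied_price_per_million(TOKENS_PER_RUN, COST_PER_RUN_USD)
    print(f"Implied blended rate: ${rate:.2f} per million tokens")
    print(f"Cost of 100 runs: ${cost_of_runs(100):.2f}")
```

At the quoted figures, a hundred full runs would cost roughly $70, and with a locally hosted open-weight model the per-token charge disappears entirely, which is the point the authors make.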

ESET experts have already updated their report on PromptLock, noting the ransomware’s academic origin.

“However, our conclusions remain unchanged — the samples we discovered are the first instance of AI ransomware known to us,” the company states, emphasizing that a theoretical threat could become real.