Generally speaking, parsers are excellent bug-hunting tools. And the more complex is the processed format, the more interesting things you can find there. Take, for instance, XML. It’s widely used to describe documents and presentations, SOAP and RSS, etc., etc. And concurrently it’s marred by countless problems: plenty of attacks exploit XML vulnerabilities, including 20-year-old XML bombs (billion laughs attack), XXE attacks, and errors in some XML implementations enabling you to escape from the sandbox in iOS 13 and read other people’s SMS messages.

www

In January 2016, LiveOverflow posted a short video showing how to create an executable file that cannot be opened in GDB and Radare2: Uncrackable Program? Finding a Parser Differential in loading ELF. This video inspired me to start this research.

Language-Theoretic Security and polyglot files

Parsers, as their name suggests, perform parsing of a certain language that is defined by a certain grammar. And this applies not only to programming languages, but to file formats and protocols as well.

The langsec website is dedicated to Language-Theoretic Security. Numerous researchers have contributed to this domain of science, including Sergey Bratus (some exciting features of ELFs and so-called weird machines) and Meredith L. Patterson (analysis of protocols and grammar parsers).

So what is langsec about? In an interview to IOHK, Meredith Patterson explained that langsec began with a discussion that, according to the theory of formal languages, filters based on regular expressions will never overcome SQL injections.

Another aspect examined by the langsec guys is the identification of inconsistencies between theory and practice, namely problems in the implementation of various formats and how they can be exploited. One of the main problems is adherence to Postel’s law, which states:

Be conservative in what you send, be liberal in what you accept.

Initially, this approach was supposed to ensure the reliability of TCP. But today, this principle introduced in the early days of the Internet can result in interesting incidents. Take, for instance, so-called polyglot files. After all, any file is just a set of bytes, and their interpretation depends on the parser. And some parsers are very tolerant to various deviations from specifications: they either try to restore missing data from the context or ignore clearly invalid values stored in individual fields.

This makes it possible, for instance, to combine a script containing a Python web server (and other stuff) with a valid PDF file. This can be done pretty easily due to some implementation features of this standard (e.g. in practice, PDF magic is not required to appear at offset zero and can be even absent in some cases).

The creation of such files is a good exercise for your brains; in addition, they can be used to bypass file-type filters during system testing. Numerous studies are dedicated to such files; there is even a special format αcτµαlly pδrταblε εxεcµταblε that intricately combines PE, ELF, Mach-O, sh, and bootsector. According to its author, this format can be run without modification on Linux, Mac, Windows, BSD, and even on BIOS.

info

Plenty of information on polyglot files can be found in repositories of Ange Albertini, the author of Corkami also known as the file format guy. And this title is fully deserved: in his repositories, you can find posters with visual representations of many formats: executable files, documents, pictures, archives, etc., as well as practical notes.

The PoC||GTFO repository contains plenty of useful articles (in addition to those dedicated to polyglot files). Beware: a PDF file with his articles is actually more than just a PDF!

Overall, it’s quite fun to abuse parsers using polyglot files. But now let’s discuss a more targeted evil and then try to put it into practice.

Parse tree differential attack

For the first time, this attack was mentioned in a 2010 presentation on protocol grammars delivered by Meredith Patterson and Len Sassaman. It was called “Parse tree differential attack” and targeted X.509 parsers (to be addressed in more detail later).

In the study “Security Applications of Formal Language Theory” published in 2013, the authors examine this attack thoroughly.

info

As far as I understand, the term “parser differentials” that has existed for more than ten years isn’t commonly used outside the langsec community – even though numerous attack descriptions can be classified this way.

Parse tree differential attacks are truly amazing. Their main idea is as simple as ABC: two implementations of parsers designed for the same format ‘see’ the same input data differently. The consequences of this seemingly innocent feature, especially in combination with other vulnerabilities, can be absolutely unpredictable. Let’s examine a few examples.

Requests smuggling in GitLab and Zoom

HTTP requests smuggling is an attack targeting parser differentials. Such attacks exploit the fact that two servers differently parse the same HTTP request passing through them. In fact, they ‘see’ different things in the same input data, which makes it possible to ‘smuggle’ a second request into the first one.

This vulnerability (CVE-2020-6833) existed in GitLab: gitlab-workhorse and gitlab-rails parsed the same HTTP file save request differently. Exploitation of this vulnerability enabled the attacker to read files (see the GitLab blog for more detail).

But the requests smuggling attack is not limited to HTTP requests. In the report XMPP Stanza Smuggling or How I Hacked Zoom, Ivan Fratrik from Project Zero discusses the CVE-2022-22784 vulnerability making it possible to insert tags into messages (stanza) due to parser errors. Potentially, this enables the attacker to forge server messages, redirect connections, and cause memory corruption problems, which couldn’t be achieved by other means. In the end, the researcher managed to execute arbitrary code during Zoom auto-update as a result of server spoofing.

Psychic Paper and CVE-2022-42855

In January 2023, another study by the same researcher from Project Zero was published. In this article, Ivan Fratrik mentions a variant of Psychic Paper: an attack making it possible to execute files with unsigned entitlements on macOS – while the system believes that everything is OK. This happens because the operating system contains as many as four PLIST (i.e. oldie-goodie XML) parsers that don’t produce perfectly identical results in certain cases. An incredible solution to that problem was – what would you think? – the creation of a fifth parser.

Later, Apple decided to switch to a binary DER format instead of text XML, but even in this case, Fratrik managed to find functions in libCoreEntitlements that parse DER elements in different ways (even though he was unable to achieve the Psychic Paper effect).

Trusted X.509 certificates for an arbitrary domain

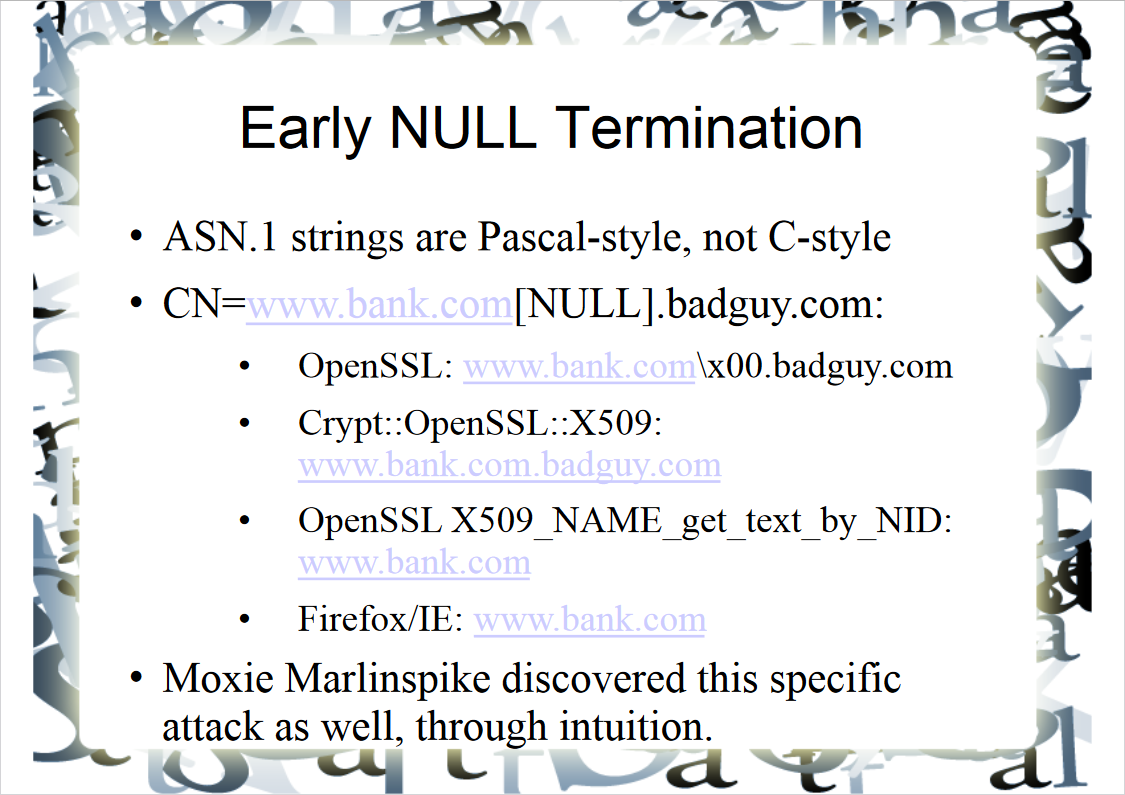

In 2010, in a talk entitled Towards a formal theory of computer insecurity: a language-theoretic approach, Len Sassaman and Meredith Patterson described the above-mentioned attack that targets the processing of X.509 certificates by browsers: the browser could display a wrong domain to the user (i.e. not the one the certificates were issued to) due to the incorrect parsing of the CN (Common Name) field containing a null terminator.

The certification authority won’t allow someone to request a certificate for, let’s say, www. if the requester doesn’t own such a domain. But the researchers asked themselves: what happens if you specify www. in CN?

Due to incorrect handling of the null terminator, browsers ‘see’ the string www. and display it to the user, although the certificate (signed by a trusted authority!) was issued to a completely different domain. The researchers claim that such an outrageousness wouldn’t be possible if all implementations were parsing this field in the same way.

ELF: execute impossible to parse

Alejandro Hernández, aka nitr0us, from IOactive followed a similar logic. Back in 2012, he decided to find a reliable way to prevent the analysis of binaries since runtime anti-debugging techniques can be bypassed. In the GDB version that was actual at that time (7.5.1), nitr0us found a bug in the DWARF processing procedure: an attempt to load a specially crafted ELF to the debugger caused its crash as a result of null pointer dereference.

In addition to GDB, nitr0us found a bug in IDA Pro 6.3 resulting in an internal error and subsequent program closedown, which made file analysis impossible. Needless to say, that the ELF was running smoothly on the OS and performed its functions. More information can be found in the IOactive post Striking Back GDB and IDA debuggers through malformed ELF executables.

ELF parsers

Now that you have realized that something is rotten in the world of parsers, let’s get closer to the subject and see how are things going with the Executable and Linkable Format (ELF). Have you ever wondered how many parsers designed for it operate in Linux?

OS kernel

An ELF parser exists in the Linux kernel and in other operating systems that can interact with such files. It ensures that such system calls as execve(/execveat(do their job properly so that you can launch your favorite browser and watch funny videos with cats read articles.

In essence, kernel performs the following operations:

- checks for permission to run the file;

- checks whether its format is supported as executable;

- depending on the format, selects the so-called interpreter. In the case of dynamically linked ELFs, this is

ld; while and in the case of scripts that begin with a shebang (#!), this is what is specified in the first string (/,bin/ sh /, or any other path – you can experiment with this at your leisure);usr/ bin/ env python3 - in the case of an ELF, prepares memory segments as specified in its program headers;

- if the ELF is dynamic, loads all the required libraries that are also ELF files (and the same steps are performed for them as well); and

- sets the pointer of the current instruction of the process that has called

execve*(to the entry point.)

Memory dumps that are created in Linux when, for instance, abort( is called or SIGABRT received also appear not out of thin air: the kernel kindly packs them into an ELF file whose type is ET_CORE instead of ET_EXEC/ET_DYN.

One might think that the mechanism that runs custom binaries in the OS has been perfectly polished over the decades of this format’s existence: its first specification (for Unix System V) goes back to 1989. But no! In 2014, IOActive has identified a problem in OpenBSD 5.5 that makes it possible to cause a kernel panic using a specially crafted file.

System utilities

A number of system utilities from the Binutils set, including readelf, objdump, as, gcc, and ld, use the GNU BFD Library to parse ELF files (i.e. at the lower level, there is only one parser). But as soon as the logic of these utilities comes into play, differences can appear.

Debuggers, decompilers, disassemblers, and emulators

When you debug an ELF file, say in GDB, it goes through at least two parsers: (1) the debugger parser (to load, for instance, available symbols from the file or debug info if available); and (2) the kernel parser (to run this ELF). If you use extensions, then something else can come into play (e.g. elftools in the case of pwndbg).

Various tools (e.g. IDA, Ghidra, Radare2, or rizin) also parse file headers to show you the entry point, segments, and, if possible, display debug-friendly names of functions and variables. In angr, executable files are loaded by CLE that internally uses elftools. In LIEF, custom code is responsible for this.

In addition, executable files are parsed by antivirus engines in the course of analysis; sometimes, this results in amusing incidents when an entire architecture becomes excluded from further scanning.

Looking for differences

As you can see, there are plenty of parsers everywhere. But how to start searching for parser differentials between the OS and its apps when the number of potential targets is so high? As always, first, you have to decide what you want. Your primary object of interest is an ELF file that is smoothly executed by the OS, but cannot be opened in debuggers and disassemblers.

It is known that sections info isn’t required for an ELF to execute; accordingly, the OS disregards this information (see my previous article). By contrast, analysis tools use it when they try to read names of symbols and sections in a file. In other words, it makes sense to try fuzzing section headers.

info

Important: my goal was not to search for 0-day vulnerabilities in reverse engineering tools. Instead, I wanted to figure out whether they can be tested using a bunch of ELF files. Therefore, the analyzed sample isn’t very large.

Fuzzers

Similar to any other format, ELF files can be fuzzed in different ways. Let’s start with the simplest one.

LiveOverflow wrote a script that assigns a random value to a random byte within a file; as a result of this simple operation, you get a workable file that cannot be opened in GDB and Radare2. Interestingly, in 2020, a couple of problems associated with the processing of PE and ELF headers were identified in Radare2 using a similar fuzzer. These vulnerabilities have been assigned identifiers CVE-2020-16269 and CVE-2020-17487.

Another possible option is to use AFL++. This approach is undoubtedly much better than simple brute-forcing of bytes and it makes it possible to track coverage in the program under test. But for the purposes of this research, I intend to test a number of different tools using the same set of ELFs and find among them one that breaks all these tools – and concurrently runs smoothly on the OS. Unfortunately, this is not exactly the use case AFL++ is designed for.

In addition, if I know which ELF parser I want to fuzz (or, to be specific, what the tested parser pays attention to in the parsed file), then I should be able to specify the task for the fuzzer more precisely. Do I want to corrupt Program Header Table (PHT), or Section Header Table (SHT), or ELF’s own header, or maybe all of them together? It seems that the best way is to use a grammar-based fuzzer. But this is a separate complex topic that goes far beyond the scope of this article. For the purposes of my research, I’m better off corrupting just section headers in an ELF, and that’s it.

Fortunately, there is a fuzzer optimally suited for this task.

Melkor

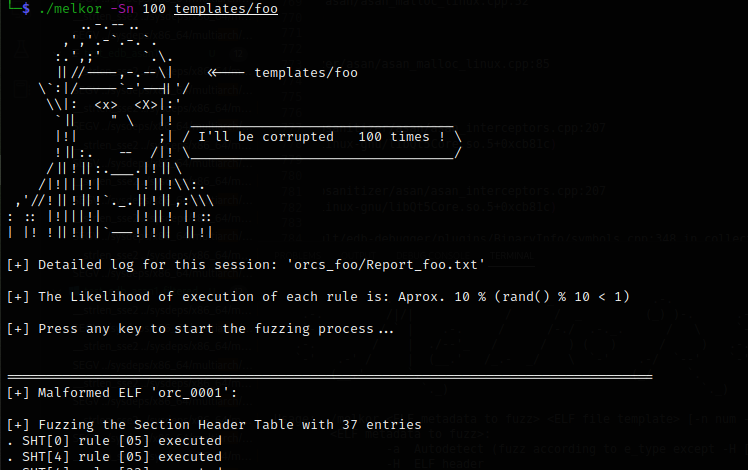

This tool was created by nitr0us (who is already familiar to you) to corrupt ELF in different ways (depending on the task), and it bears the proud name Melkor. Despite its age, I managed to compile and run it virtually with no problems – after all, the format hasn’t changed over time. The only significant limitation of Melkor is that it supports only the x86 architecture (32/64 bit).

Its repository contains a couple of template ELF files that can be used as input for fuzzing. You can specify which headers in the input ELF you want to fuzz: PHT, SHT, symbols table, relocations table, etc., which is very convenient if you know exactly what you need.

So, I instruct Melkor to generate malformed ELF files by fuzzing only section headers in the template file foo stored in the templates folder. The generated files will be saved in the orcs_foo/ folder.

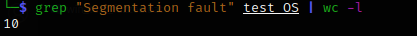

Another advantage of this fuzzer is the presence of a ready-made script, test_fuzzed., that can be used to run a bunch of generated files on the tested tool (be it an OS or a debugger). Let’s run the malformed ELFs on the OS using the command ./. Most of these files have run and finish without errors. Interestingly, some files finish with segmentation faults, but for the purposes of this research, they are irrelevant since they haven’t crashed the system.

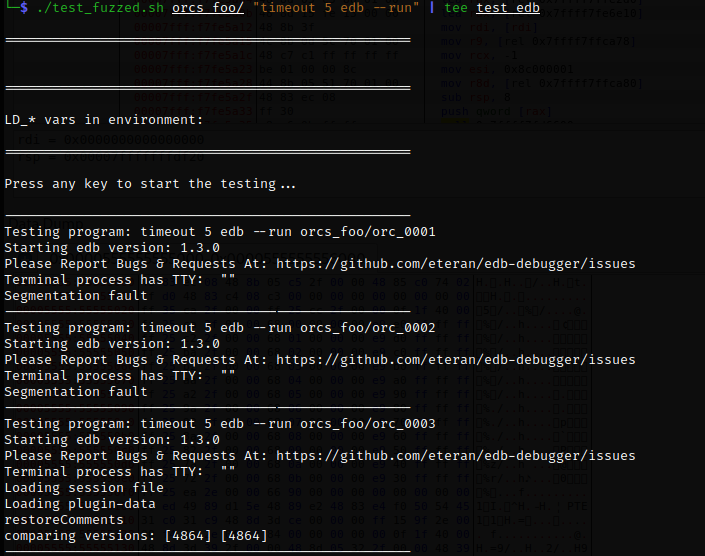

Testing EDB

Now let’s see the reaction of debuggers. When such tools encounter ‘bad’ files, they display error messages and wait for user instructions, which isn’t very handy when you analyze their behavior using a large number of files. I am going to use the following trick: to avoid the need to close the debugger manually every time, I launch it with the timeout command. It will complete the test case execution while I can sit back and have a cup of coffee.

EDB (1.3.0) stumbled on the first two ELF files; while on the third one, it suggested to debug the file, but was closed due to a timeout, and then the testing continued. Amazing!

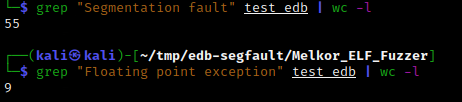

After running a hundred corrupted ELFs in EDB, I got 55 segfaults and 9 divide-by-zero exceptions (yep, despite its name, SIGFPE (or floating point exception) is also generated in Linux when an integer is divided by zero).

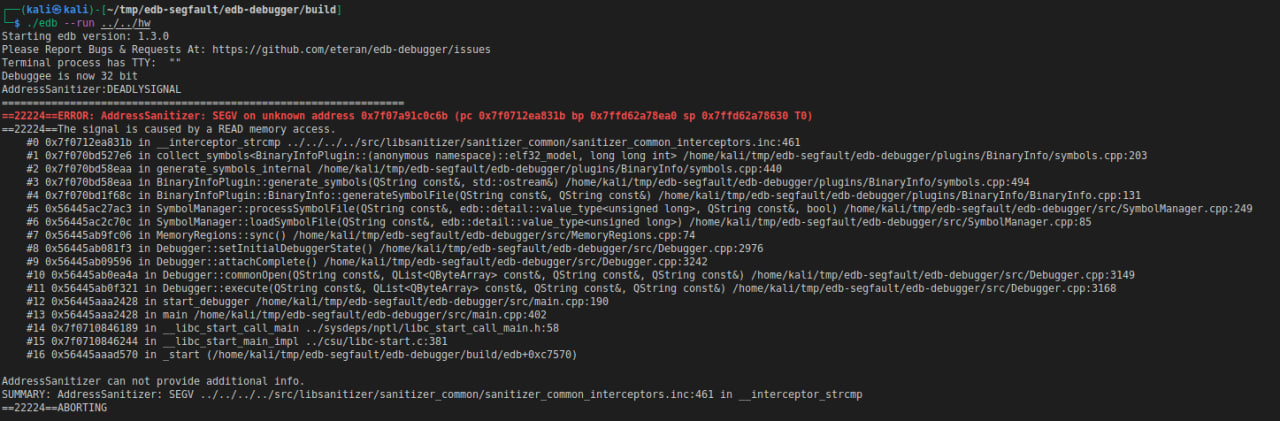

Further investigation that granted me a truly unique experience (imagine: debugging a debugger in another debugger) showed that these problems originate from the BinaryInfo plugin. It parses sections to collect information on symbols prior to running the tested ELF for debugging. In the first case, the validity of the pointer to the string containing the section name wasn’t checked, and it could point beyond the mappings of the read file. In the second case, it didn’t check the element size prior to counting the number of elements in sections containing element tables (e.g. the symbol table).

For your information: both bugs have already been fixed.

By the way, when you analyze crashes, it’s very convenient if the build of the tested software includes sanitizers. For instance, if you build EDB with AddressSanitizer (ASAN), you’ll get a handy backtrace that can be examined in logs of the above-mentioned ./, which is much easier than examining each case manually in the debugger.

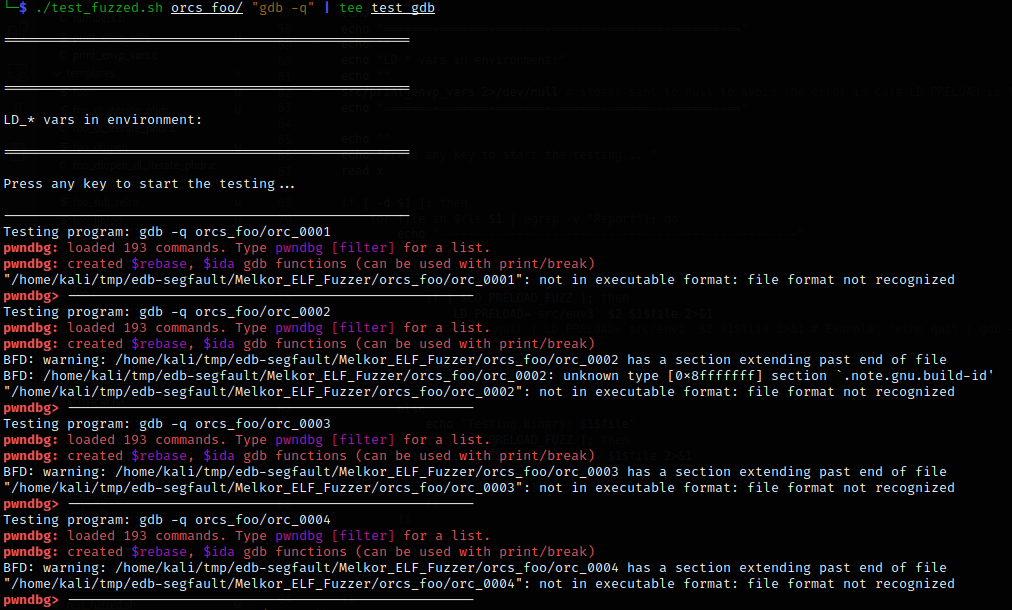

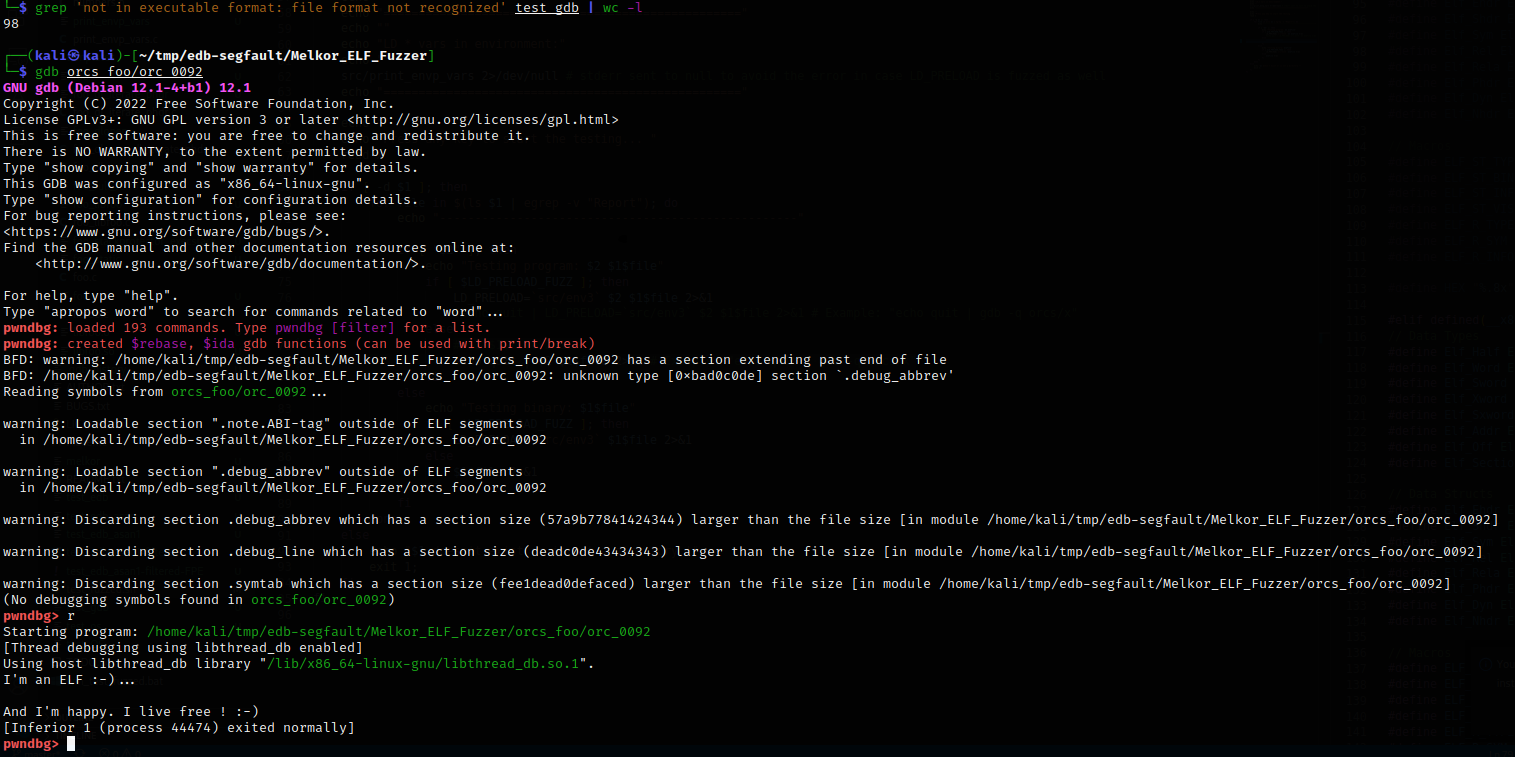

Testing GDB

Since nitr0us had tested GDB as well, he took into account that it will wait for user input if an error occurs during file opening. Accordingly, instead of using the timeout trick, you can uncomment line 80 instead of 79 in ./ so that it looks as follows:

# $2 $1$file 2>&1echo quit | $2 $1$file 2>&1 # Example: "echo quit | gdb -q orcs/x"Now let’s run ./.

In 98 cases out of 100, GDB refused to open a file with the message not . In one case, it reported: Can't . And one file it managed to open – albeit with issues. But unlike EDB, there were no crashes.

Overall, GDB demonstrates quite a mature behavior on a hundred corrupted ELFs: at least, it doesn’t crash on them. On the other hand, it completely refuses to deal with them, which is exactly what anti-debugging guys want.

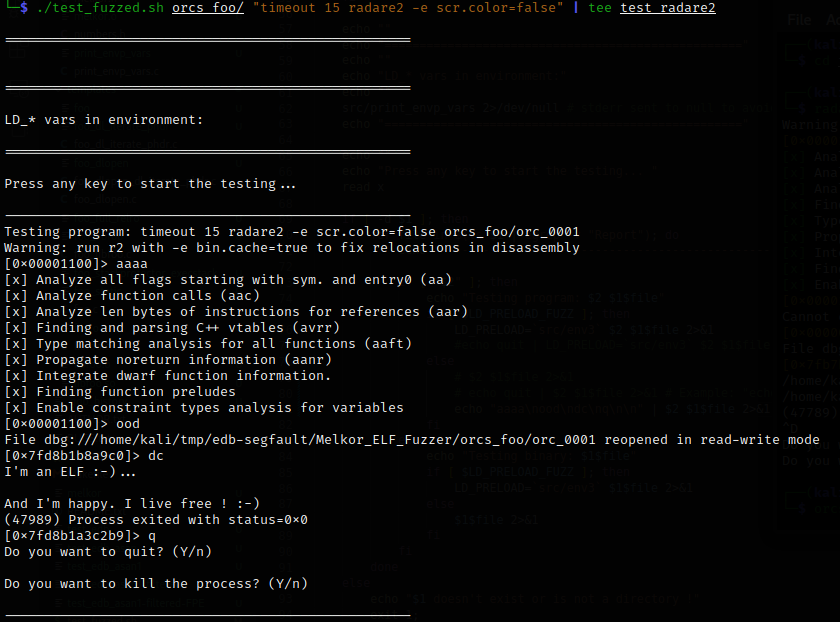

Radare2: r2hang

To automate file analysis, debug launch, and closedown of Radare2 in case of any problems, I replace the 80th string in the ./ script with the following one:

echo "aaaanoodndcnqnn" | $2 $1$file 2>&1

I leave timeout in the start command – just in case, to avoid getting stuck on something. Also, I disable color output (-e ) so that escape sequences that encode the colors don’t interfere with the subsequent log analysis. After that, I start testing.

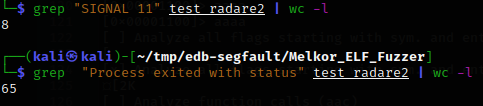

Radare2 managed to open most of the corrupted ELF files without significant problems; unlike EDB, it didn’t crash on any of them. It stayed stuck for a while prior to starting debugging some files, but ultimately opened them. Eight files finished with segfaults (on the OS, 10 files finished with segfaults), and 65 files ended with some code (i.e. were actually run for debugging).

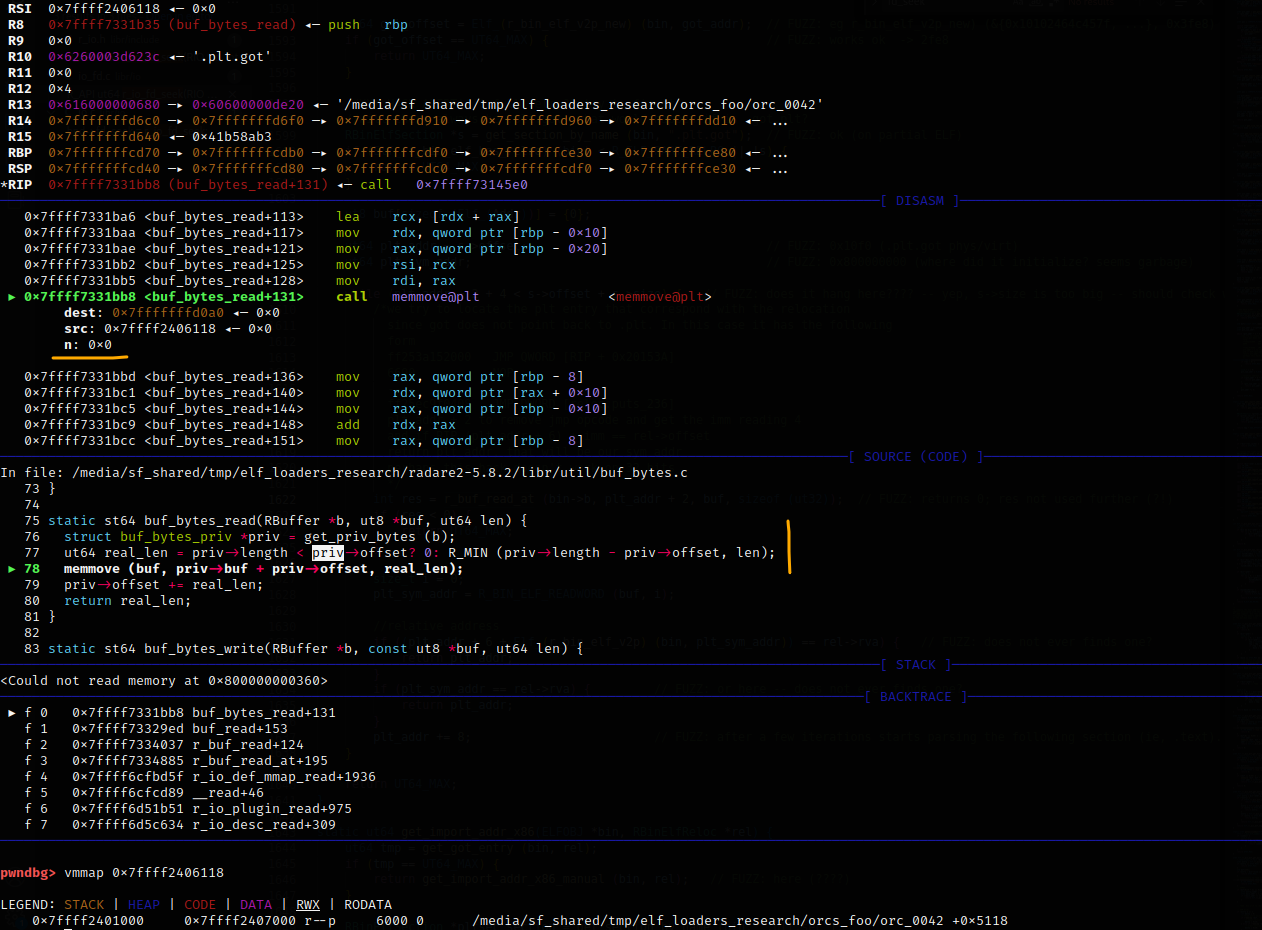

In other words, nearly a quarter of the tested files caused problems. In most cases, Radare2 got stuck when it tried to open an ELF file, which consumed all the CPU horsepower, and finished due to the timeout. To find out what makes it stuck, you can attach to its process with GDB.

To investigate this problem, I made a debug build of the latest version available on GitHub, made sure that it also gets stuck, and performed line-by-line Radare2 debugging, including source code examination and analysis of backtraces and structures describing ELF files inside Radare2.

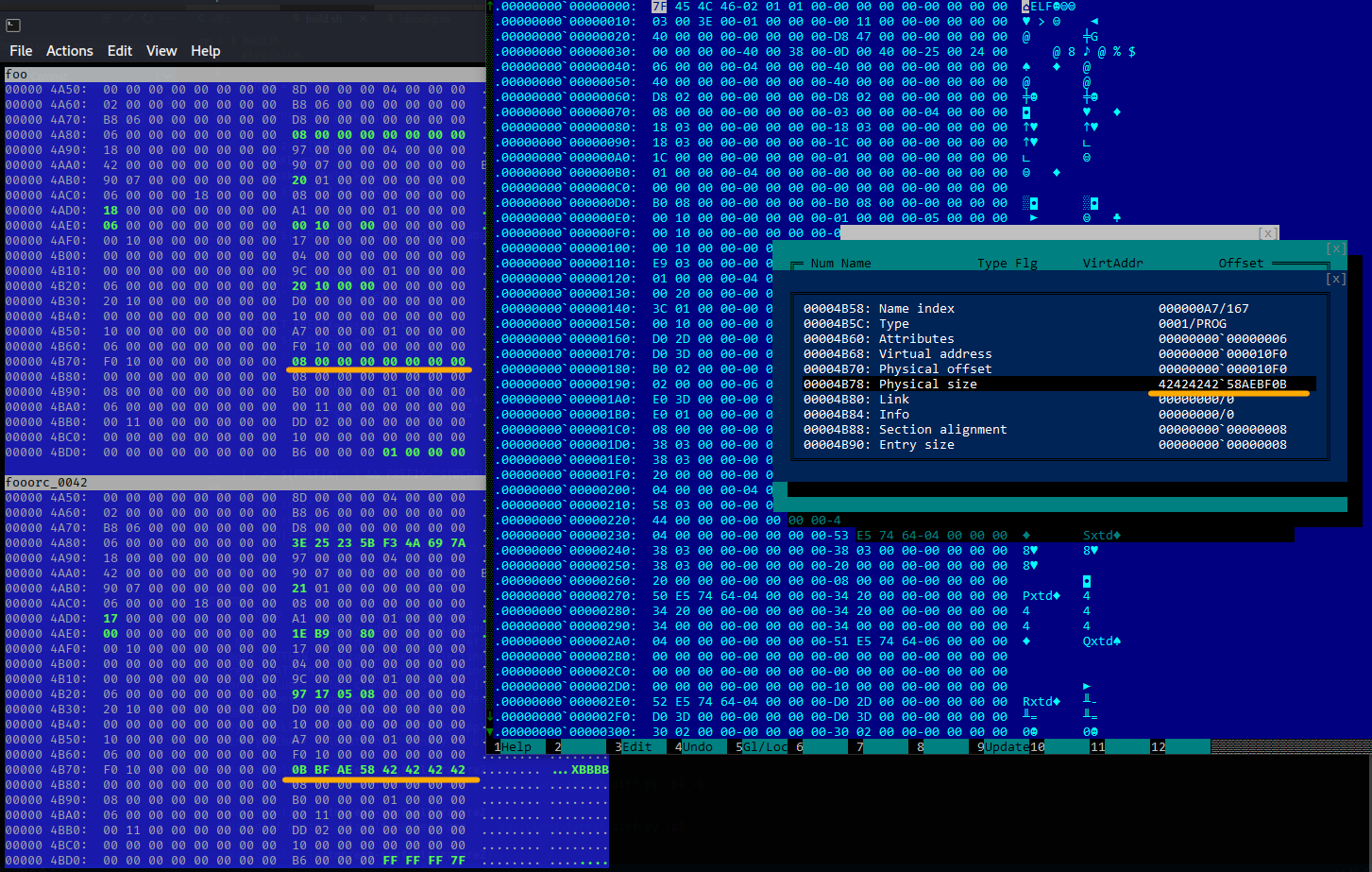

To localize the problem, headers of the ELF binary that makes Radare2 stuck were compared with the original foo from the Melkor repository used to generate the 100 test files. On the left is the diff between the contents of their section header tables (SHT) in vbindiff; while on the right, the same data for one of the sections are presented in Hiew. As you can see, Melkor decided to create a section (to be specific, .) of enormous size.

It turned out that the problem was in one missing check that should be performed when the ELF parser in Radare2 goes through the elements of the . section trying to find the offset of the plt_addr symbol in it. The program didn’t check whether the section whose size is defined in the ELF headers (Physical in Hiew, see the screenshot above) fits within the tested file. Accordingly, Radare2 iterated through the file from the beginning of . (in this particular case, 0x10f0) to the place where it thought the end was located according to headers in the SHT. In this example, this is a very large number. To exit this loop, the program had iterate over zillions of elements:

0x4242424258aebf0b/8 = 596806425961158625By the way, this bug has also been fixed.

It must be noted that no actual reading occurred beyond the boundaries of the buffer storing the ELF contents (which could potentially result in a segfault when the debugger goes beyond the mapping boundaries) because the required checks are present in functions called later. As a result, after reaching the end of the buffer, Radare2 was simply reading 0 bytes at a time until meeting the exit condition (i.e. iterate the entire section by its size).

Interactive Disassembler

The last tool that was tested in the framework of this research is IDA. For this purpose, the creator of Melkor crafted a batch file called win_test_fuzzed.. In his research, nitr0us notes that you have to close popping-up windows manually, which isn’t very convenient… Alas, I have no choice but to accept this.

Nitr0us fuzzed the IDA demo, but I am going to test IDA Free 8.2 for Linux. If error windows pop up, I will act like a regular user: unthinkingly agree with everything.

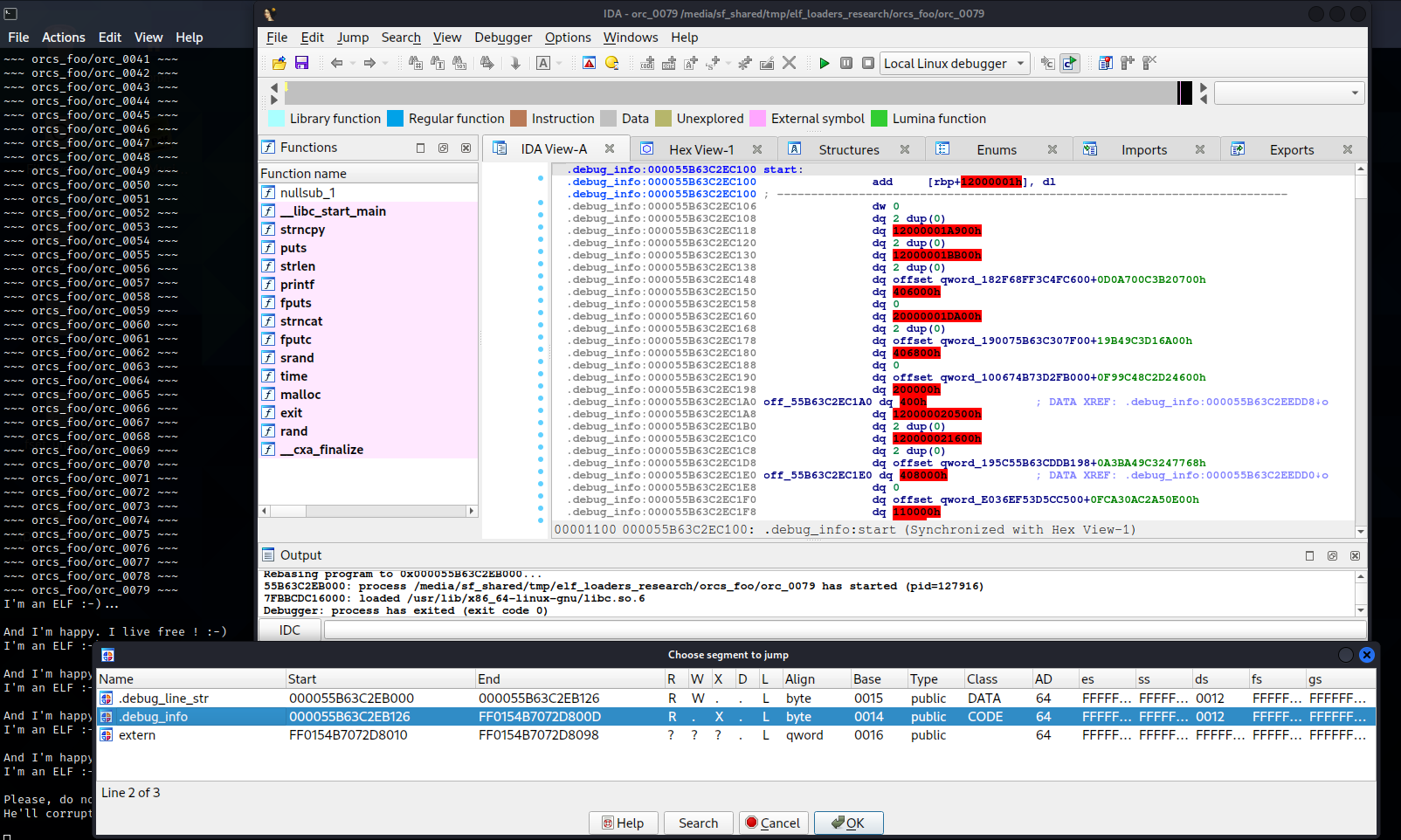

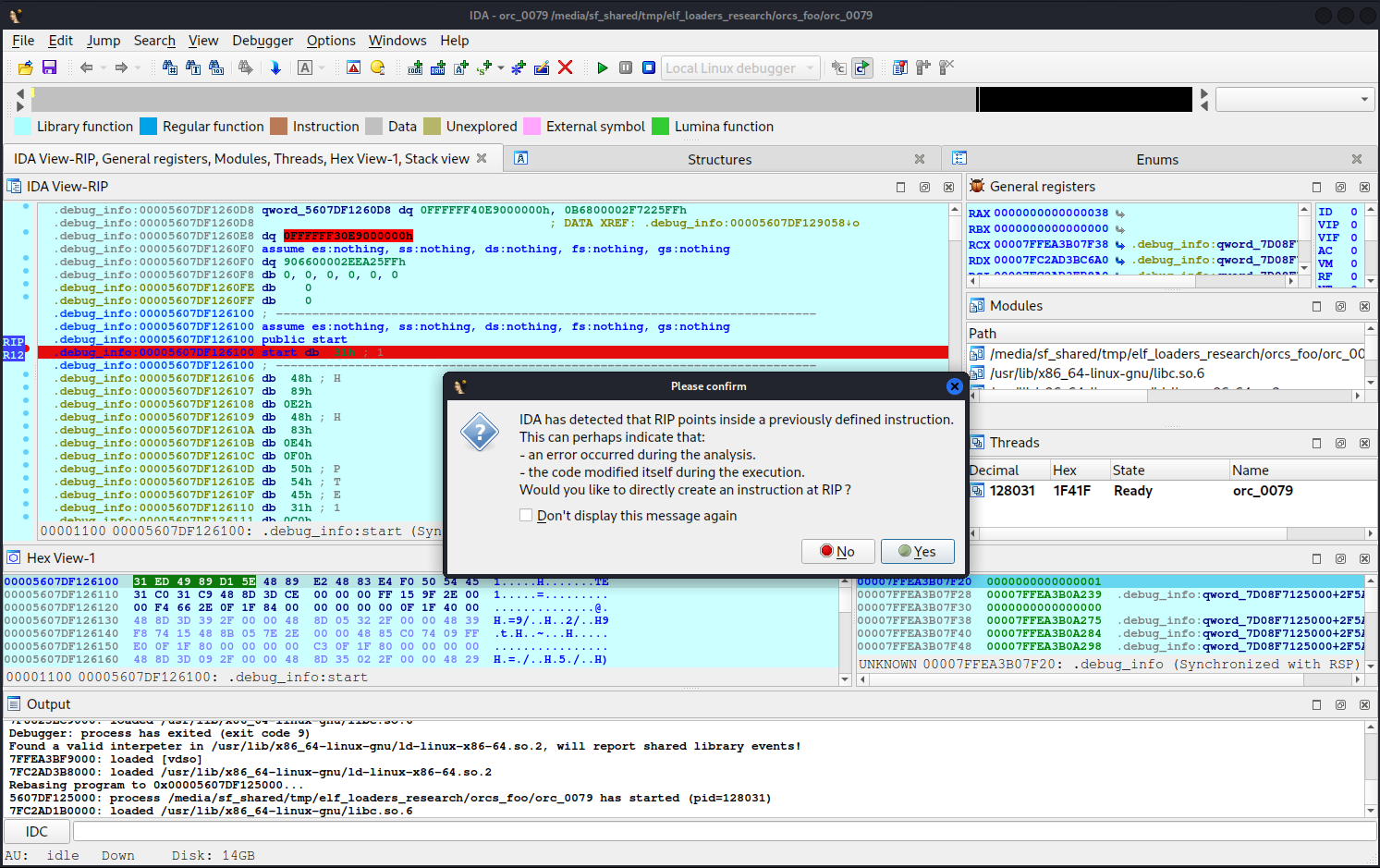

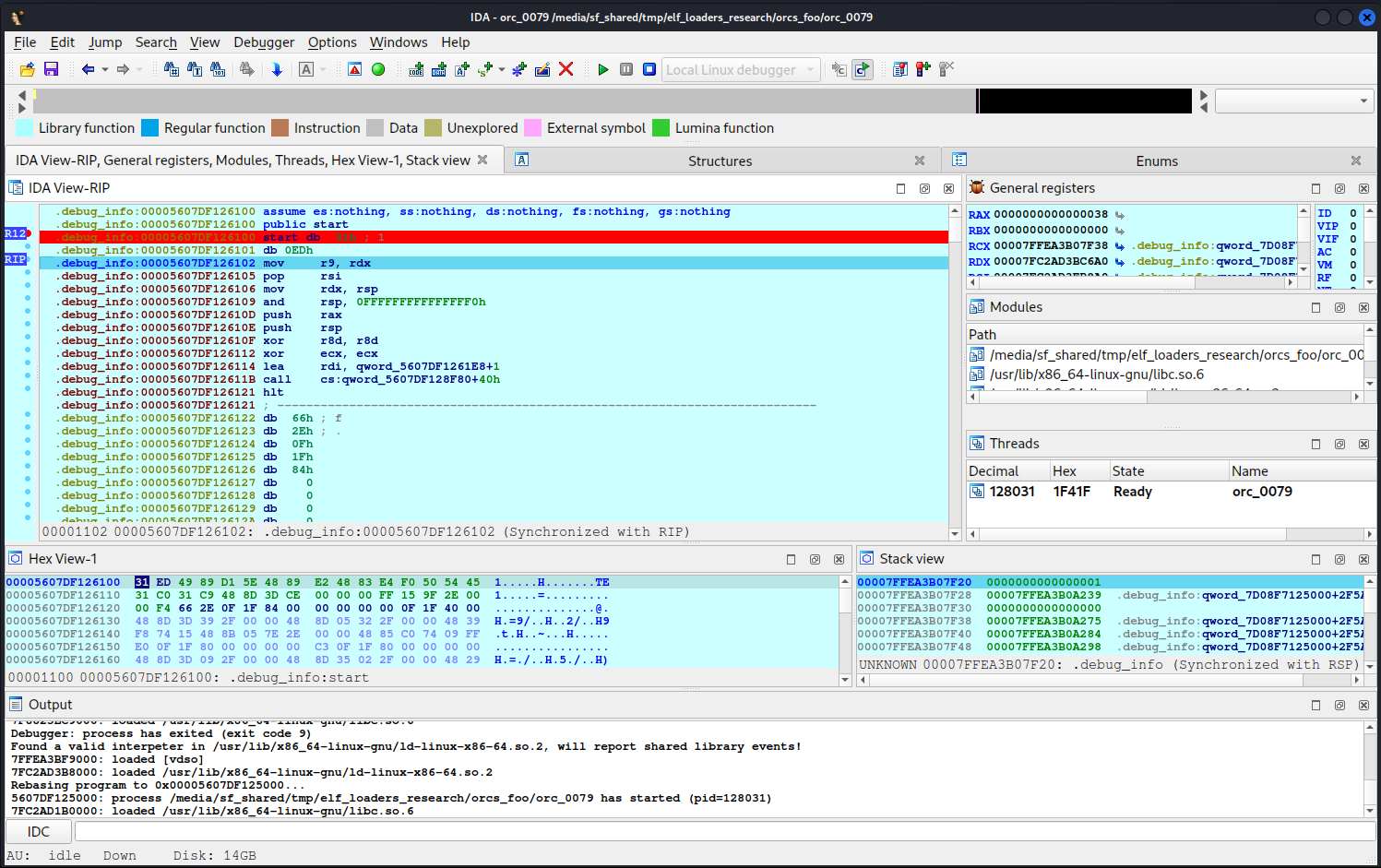

Overall, IDA did quite well. It checks offsets and sizes of sections, their overlaps, and validity of attributes and handles invalid values in an appropriate way. However, some ELFs turned out to be too tough for IDA. In one of the files, it incorrectly maps the contents of sections into segments, shows a very strange entry point, and the list of available segments also leaves multiple questions. On the other hand, the F9 key successfully runs this ELF for debugging, and it exits with code 0.

Furthermore, you can set a breakpoint at the entry point (pay no regard to its appearance!); as a result, during the debugging, IDA will indeed stop at the start of the binary, and when you press F7 (single step), it will suggest to convert the data into code.

Interestingly, if you accept this suggestion, you’ll see something suspiciously similar to setting the registers before the __libc_start_main( call.

In total, IDA showed such behavior with 2 ELFs out of 100.

Conclusions

Once upon a time, a wise man told me: the first and foremost you have to do when you intent to hack something is to perceive the attack surface. Amazingly, but just a hundred corrupted ELF files turned out to be sufficient to exploit parser differentials in various tools and prevent analysis of workable files – provided that you know what to pay attention to.

Various parsers represent an invaluable field for experimentation. Implementations of standards contain errors and don’t parse identically; while formats often contain redundant information. A brilliant example of this is information about ELF sections: as a result, the same files are smoothly run by the OS (which ignores this information), but cannot be analyzed by tools that somehow rely on it. The langsec leitmotif confirms this:

Ambiguity is insecurity.As shown above, the easiest task was to prevent GDB from parsing test files. Using these files, I was able to crash EDB and freeze Radare2. And although IDA managed to open most of the binaries, its output for two of them contained outright nonsense.

The bugs identified in EDB and Radare2 have been localized and fixed. I think this is a good result for a research project inspired by an eight-minute video!

www

In addition to sources listed in the text, the following links contain useful information that will help you to dig deeper into hacking tools designed for work with ELF files:

- tmp.out – an ezine devoted to ELF files and reversing; and their repository with a huge list of references;

- repository of elfmaster Ryan O’Neill, an ELF guru;

- PoC||GTFO – Emulation, vintage computing, modern heap corruption exploitation, systems internals, and everything in between;

- How programs get run: ELF binaries [LWN.net];

- How does the Linux kernel run a program and other articles about Linux by 0xAX; and

- “Weird Machines” in ELF: A Spotlight on the Underappreciated Metadata.