Factor One: Happenstance

Think back to 2013. Qualcomm’s lineup featured the highly successful Snapdragon 800, built on the company’s own 32-bit Krait 400 cores. That chip (and its successor, the Snapdragon 801) powered dozens—if not hundreds—of different models. At launch, Qualcomm’s flagship had no real competition: solutions based on ARM’s Cortex-A15 cores were extremely power-hungry and couldn’t compete with Qualcomm’s four custom Krait cores. Everything looked great—Qualcomm was king of the hill and just needed to keep evolving a winning architecture. What could possibly go wrong?

But first things first. In 2011, ARM Holdings announced the ARMv8 architecture, which, among other things, made it possible to accelerate certain specialized computations in hardware. One example is bulk data encryption, which, jumping ahead, is now used in practically every smartphone. The first ARM-designed cores implementing this architecture, the Cortex‑A53 and Cortex‑A57, were announced in 2012. At the time, ARM projected that processors based on the new cores would not hit the market until 2014. But Apple, which holds an ARM architectural license, beat everyone to it, almost a year ahead of the competition.

So, in September 2013 Apple released the iPhone 5s. Alongside the Touch ID fingerprint sensor and the Secure Enclave security subsystem, it was the first phone on the market with a 64‑bit processor, the Apple A7 (ARMv8). The new chip impressed in Geekbench: in single‑threaded tests its two cores scored about 1.5× higher than Qualcomm’s Krait 400 cores, while multi‑threaded performance was roughly on par with the quad-core Krait.

The expanded ARMv8 instruction set arrived at exactly the right time. The Secure Enclave, which debuted in the iPhone 5s, handles data encryption among other things, and from Apple’s perspective the move to a 64-bit architecture was perfectly logical: only ARMv8-class cores offered instructions for accelerating bulk encryption (the ARMv8 Cryptography Extensions), something Apple had already been relying on for quite some time. These new cores later let Apple achieve unprecedented speeds when accessing encrypted data. By contrast, the Nexus 6, released a year later on the 32-bit Qualcomm Snapdragon 805 (ARMv7), showed abysmal bulk-crypto performance: access to encrypted data was 3–5 times slower than access to unencrypted data.
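
To make the Cryptography Extensions a little more concrete: on Linux-based systems, including Android, an arm64 kernel advertises them as flags such as `aes`, `pmull`, `sha1` and `sha2` on the `Features` line of `/proc/cpuinfo`, while ARMv7 cores like Krait do not list them. The minimal Python sketch below assumes a readable `/proc/cpuinfo` and simply looks for those flags; the function names are illustrative, not part of any library.

```python
"""Sketch: does this ARM CPU advertise the ARMv8 Cryptography Extensions?

Assumes a Linux-based system (such as Android) with a readable /proc/cpuinfo.
On arm64 kernels the "Features" line lists hardware capabilities such as
"aes", "pmull", "sha1" and "sha2"; ARMv7 cores such as Krait lack them.
"""

from pathlib import Path

CRYPTO_FLAGS = {"aes", "pmull", "sha1", "sha2"}


def cpu_features(cpuinfo_text: str) -> set[str]:
    """Collect every flag listed on the 'Features' lines of /proc/cpuinfo."""
    features: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "Features":
            features.update(value.split())
    return features


def has_crypto_extensions(cpuinfo_text: str) -> bool:
    """True only if all of the crypto-related flags are present."""
    return CRYPTO_FLAGS <= cpu_features(cpuinfo_text)


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        print("ARMv8 crypto extensions:", has_crypto_extensions(path.read_text()))
    else:
        print("/proc/cpuinfo not found; not a Linux system")
```

On x86 machines the flags live on a `flags` line instead, so the check simply reports `False` there.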

Early on, many consumers and even experts dismissed 64-bit smartphone processors as pure marketing. Qualcomm’s executives said as much, at least in their public statements.

In 2014, the iPhone 6 launched with the A8 chip, which also implements the ARMv8 instruction set. How did Qualcomm respond? With only a minor update. The market was dominated by phones running the Snapdragon 801 (32-bit, ARMv7), and the new Snapdragon 805 used a refined version of the same Krait design (Krait 450) paired with a more powerful GPU. Apple’s processors proved faster than their Qualcomm counterparts in both single-threaded and multi-threaded workloads, and in specific use cases, such as bulk encryption, they beat the competition by a wide margin. Qualcomm tried to play it cool, but OEMs kept up the pressure, demanding a competitive SoC, and Qualcomm had no choice but to join the race.

In 2015, Apple released the iPhone 6s with the A9, while Qualcomm rolled out the Snapdragon 810 and its cut-down sibling, the Snapdragon 808. These processors were Qualcomm’s answer to its partners’ demands. However, the company’s lack of experience with 64-bit chip design backfired: both processors turned out to be deeply flawed. From day one they tended to draw too much power, overheat, and throttle, so much so that after a few minutes of use their sustained performance was barely better than the Snapdragon 801’s.

So what can we conclude from all this? Just one thing: Apple caught the industry off guard by rolling out cores with a new architecture at a time, and in a market, where they seemed unnecessary. As a result, Qualcomm was left playing catch-up, and Apple gained a head start of about 18 months. Why did that happen?

To answer that, we need to look at how mobile processors are developed.

Factor Two: Different Development Cycles

So, we’ve established that Apple managed to pull ahead, outpacing competitors by about eighteen months. How did that happen? The reason lies in the difference between Apple’s development cycles and those of Android smartphone manufacturers.

As is well known, Apple tightly controls iPhone development and manufacturing, starting from the lowest level—the CPU design. And while until recently it licensed GPU cores from Imagination Technologies, the company has preferred to design its CPU cores in-house.

What does Apple’s development cycle look like? Based on an ARM architectural license, they design a CPU compatible with the target instruction set (ARMv8). At the same time, they develop the smartphone that will use that chip. In parallel, they build all the necessary drivers and the OS, and optimize the whole stack. It all happens within one company: OS engineers have no trouble accessing driver source code, and driver developers can talk directly to the people who designed the processor.

The manufacturing lifecycle for Android devices is completely different.

First and foremost, ARM—the company behind the eponymous instruction set and processor architectures—comes into play. ARM designs the reference CPU cores. Back in 2012, it announced the Cortex‑A53 cores, which went on to power the vast majority of smartphones released in 2015, 2016, and 2017.

Hold on—2012? That’s right: 64-bit Cortex-A53 cores were announced in October 2012. But a core architecture is one thing; actual processors are another. ARM Holdings doesn’t manufacture chips—it offers reference designs to partners but doesn’t ship SoCs itself. Before a smartphone based on any given architecture can reach the market, someone has to design and produce a complete system-on-chip (SoC).

Despite public statements by its own representatives, in 2013 Qualcomm was working full tilt on a 64-bit processor. There wasn’t time to design a custom CPU core, so they had to use what was available: ARM’s big.LITTLE architecture of the day, pairing “little” Cortex-A53 cores (a solid choice) with “big” Cortex-A57 cores (controversial in terms of power efficiency and thermal throttling).

The first Qualcomm processors based on these cores were announced in 2014. But a processor is only part of the equation! You still need a chassis, a screen, and more. All of that is made by OEMs—the companies that actually design and manufacture smartphones. And that takes time—a lot of it.

Finally, the operating system. To bring up Android on a device, you need a driver package for the new chipset. Those drivers are developed by the chipset vendor (e.g., Qualcomm) and supplied to smartphone makers for integration. OEMs also need time to study, adapt, and integrate these drivers.

And that’s still not the end of it! Even a finished smartphone with a working build of Android still has to be certified in one of Google’s labs for compatibility with the Android Compatibility Definition Document (CDD). That takes time too, and time is exactly what everyone involved is already desperately short of.

In other words, there’s nothing surprising about the fact that smartphones with the Snapdragon 808/810 only appeared in 2015. Qualcomm’s first 64-bit flagship chips lagged Apple’s SoCs by roughly a year and a half. That’s a historical fact—and a real advantage for Apple.
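
The core of this argument is arithmetic: when each party can only start after the previous one delivers, the stage durations add up, whereas a single company that works on chip, phone, drivers and OS in parallel pays roughly for its longest stage. The toy Python model below illustrates that difference. The stage names follow the article, but every month value is a hypothetical placeholder, not a real schedule, and the "fully parallel" figure is an idealization of the integrated flow described above.

```python
"""Toy model: serial multi-company pipeline vs. an idealized parallel one.

Stage names follow the article; all month values are HYPOTHETICAL placeholders
chosen only to illustrate the arithmetic, not real development schedules.
"""

from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    months: int  # hypothetical duration


# Android path: each party starts only after the previous one has delivered.
ANDROID_STAGES = [
    Stage("ARM reference core", 12),
    Stage("SoC design by chipset vendor", 12),
    Stage("OEM hardware and driver integration", 6),
    Stage("Compatibility certification", 2),
]


def serial_time(stages: list[Stage]) -> int:
    """Stages run one after another, so total time is the sum."""
    return sum(stage.months for stage in stages)


def parallel_time(stages: list[Stage]) -> int:
    """Idealized full overlap: total time is the longest single stage."""
    return max(stage.months for stage in stages)


if __name__ == "__main__":
    print("serial:", serial_time(ANDROID_STAGES), "months")
    print("fully parallel:", parallel_time(ANDROID_STAGES), "months")
```

Real projects overlap partially, so actual lead times fall somewhere between the two figures; the point is only that serial hand-offs compound.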

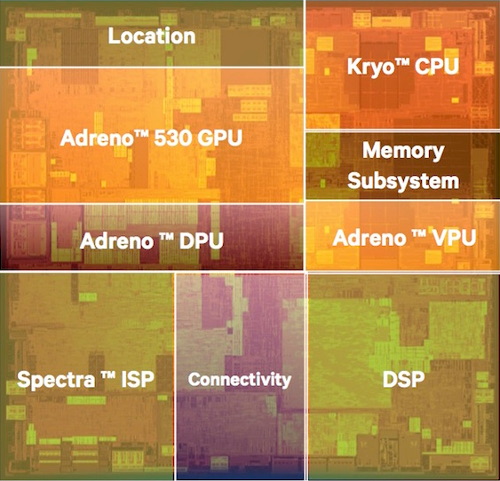

In 2015, a drawn-out development cycle and partner demands backfired on Qualcomm—their first attempt was a flop. Still, the company managed to redeem itself with the Snapdragon 820. But was it already too late?

Factor Three: Size Matters

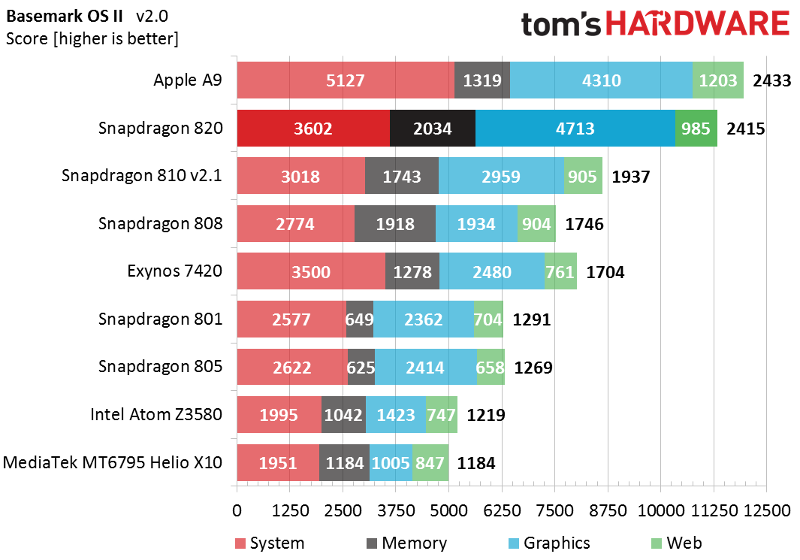

Consider the table comparing the two most recent generations of Apple and Qualcomm processors.

| Processor | A10 Fusion | A11 Bionic | Snapdragon 820 | Snapdragon 835 |
|---|---|---|---|---|
| Process node | 16 nm | 10 nm | 14 nm | 10 nm |
| CPU | 64-bit quad-core: 2x Hurricane @ 2.34 GHz + 2x Zephyr | 64-bit hexa-core: 2x Monsoon + 4x Mistral | 64-bit quad-core: 2x Kryo @ 1.59 GHz + 2x Kryo @ 2.15 GHz | 64-bit octa-core: 4x Kryo 280 @ 2.45 GHz + 4x Kryo 280 @ 1.9 GHz |
| Core management | Cluster-based | Per-core | Per-core | Per-core |
| GPU | 6-core | 3-core | Adreno 530 | Adreno 540 |
| Geekbench single-core | 3438 | 4216 | 1698 | 1868 |
| Geekbench multi-core | 5769 | 10118 | 3996 | 6227 |

Data from the Geekbench website
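
The ratios discussed next can be recomputed directly from the table. A minimal Python sketch, using only the Geekbench scores listed above and pairing each Apple chip with the Qualcomm flagship of the same generation:

```python
"""Recompute Apple-vs-Qualcomm Geekbench ratios from the table above."""

SCORES = {
    # chip: (single-core, multi-core)
    "A10 Fusion": (3438, 5769),
    "A11 Bionic": (4216, 10118),
    "Snapdragon 820": (1698, 3996),
    "Snapdragon 835": (1868, 6227),
}

# Each Apple chip paired with the Qualcomm flagship of the same generation.
PAIRS = [("A10 Fusion", "Snapdragon 820"), ("A11 Bionic", "Snapdragon 835")]


def ratios(apple: str, qualcomm: str) -> tuple[float, float]:
    """Return (single-core ratio, multi-core ratio) of Apple over Qualcomm."""
    a_single, a_multi = SCORES[apple]
    q_single, q_multi = SCORES[qualcomm]
    return a_single / q_single, a_multi / q_multi


for apple, qualcomm in PAIRS:
    single, multi = ratios(apple, qualcomm)
    print(f"{apple} vs {qualcomm}: single {single:.2f}x, multi {multi:.2f}x")
```

This prints 2.02x and 1.44x for the previous generation and 2.26x and 1.62x for the current one.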

What does this table show? Apple’s single‑core performance is more than twice Qualcomm’s, and its multi‑core performance is roughly 1.4× higher in the previous generation and about 1.6× higher in the current one. Why is that? We can try to find an answer in the next table.

| Processor | A10 Fusion | A11 Bionic | Snapdragon 820 | Snapdragon 835 |
|---|---|---|---|---|
| Process node | 16 nm | 10 nm | 14 nm | 10 nm |
| Die area | 125 mm² | 87.66 mm² | 113.7 mm² | 72.3 mm² |

If we set aside the A10 Fusion and Snapdragon 820, which use different process nodes, we can compare the die sizes of the A11 Bionic and Snapdragon 835, both built on 10 nm. Apple’s chip has about 1.2× the die area of Qualcomm’s. What does that mean in practice? Room for more transistors and a more advanced core microarchitecture. Specifically, researchers found that the A11 Bionic’s “little” CPU cores are several times larger than the small A53-class cores (or rather Kryo 280, as Qualcomm brands them) used in the Snapdragon 835. This suggests that even the A11’s little cores use out-of-order execution, delivering higher IPC than the in-order A53 cores.

The die size of a processor directly affects its price. At the same process node, a larger die means a higher manufacturing cost, because fewer dies fit on each wafer. Which brings us to the next factor: how much it costs the manufacturer to produce the CPU.
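
To see how die area turns into cost, here is a rough sketch using the common first-order dies-per-wafer approximation, DPW ≈ π(d/2)²/S − πd/√(2S), for wafer diameter d and die area S. The assumptions are ours, not the article’s: a 300 mm wafer, equal wafer cost for both 10 nm processes (in reality they come from different foundries), and no defects, scribe lines or edge exclusion.

```python
"""Rough dies-per-wafer estimate for the two 10 nm chips in the table.

First-order approximation: DPW = pi*(d/2)^2 / S - pi*d / sqrt(2*S).
ASSUMPTIONS (not from the article): 300 mm wafer, equal wafer cost for both
chips, and no yield loss, scribe lines or edge exclusion.
"""

import math

WAFER_DIAMETER_MM = 300.0  # assumed standard wafer size


def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = WAFER_DIAMETER_MM) -> int:
    """Gross candidate dies per wafer, ignoring yield."""
    radius = wafer_diameter_mm / 2
    gross = math.pi * radius**2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(gross - edge_loss)


A11_AREA, SD835_AREA = 87.66, 72.3  # die areas in mm^2, from the table

a11 = dies_per_wafer(A11_AREA)
sd835 = dies_per_wafer(SD835_AREA)
print(f"area ratio: {A11_AREA / SD835_AREA:.2f}x")
print(f"A11: {a11} dies/wafer, Snapdragon 835: {sd835} dies/wafer")
print(f"cost-per-die ratio at equal wafer cost: {sd835 / a11:.2f}x")
```

Under these assumptions the A11 yields roughly 735 candidate dies per wafer against about 899 for the Snapdragon 835, so each A11 die costs around 22% more before yield is even considered.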

Factor Four: Cost

According to an Android Authority report, the CPU core area of the Apple A10 Fusion is twice that of its closest competitor, the Snapdragon 820.

“Apple’s advantage is that it can afford to spend money to increase the die size of a processor built on the latest 16 nm FinFET process. A few extra dollars won’t materially affect the final device cost—and Apple will be able to sell far more $600 devices thanks to the resulting performance,” writes Linley Gwennap, director of The Linley Group.

Indeed, an extra five or six dollars won’t move the needle on the iPhone’s final price: it amounts to about 1% of a $600 device. But if those five or six dollars can double the device’s performance compared to Android rivals, that’s a compelling argument in Apple’s favor.

Why can’t Qualcomm pull this off? Because the Android SoC pipeline has too many stakeholders. ARM designs the CPU cores and licenses them to Qualcomm, which builds complete chips and sells them to Android OEMs. Those OEMs are locked in cutthroat price competition, so every dollar matters. They want the cheapest possible SoCs (which is why those archaic, low-power A53-based designs are still surprisingly popular), and Qualcomm has to play along. Both Qualcomm and ARM also need their margins, so a chip comparable to Apple’s would cost an OEM even more than it costs Apple. Few OEMs could afford to buy such chips in volume, and lower volumes would push the unit price up further still. That’s exactly what happened with the MediaTek Helio X30: demand was weak, and only two phones ever shipped with it.
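
The margin argument can be illustrated with a toy calculation: each party in the chain applies its own markup on top of what came before, so the markups multiply. All figures below are hypothetical placeholders (the article gives no cost data), and treating the core-licensing share as a simple markup on cost is a simplification; real royalties are usually a percentage of the chip’s selling price.

```python
"""Toy illustration of stacked markups in a multi-party SoC supply chain.

All figures are HYPOTHETICAL placeholders; the article gives no cost data.
Modeling the licensing share as a markup on cost is a simplification.
"""

from functools import reduce


def price_after_markups(base_cost: float, markups: list[float]) -> float:
    """Apply successive markups (0.5 means +50%) to a base manufacturing cost."""
    return reduce(lambda price, markup: price * (1 + markup), markups, base_cost)


DIE_COST = 30.0  # hypothetical cost of a large, fast SoC, in dollars

# Vertically integrated vendor: nobody else marks up its own chip.
in_house = price_after_markups(DIE_COST, [])
# Merchant path: hypothetical licensing share, then hypothetical chip-vendor markup.
merchant = price_after_markups(DIE_COST, [0.05, 0.60])

print(f"in-house: ${in_house:.2f}, sold to an OEM: ${merchant:.2f}")
```

With these made-up numbers the same silicon costs an OEM $50.40 instead of $30.00; the exact values are meaningless, but the multiplication is why stacked margins hurt large, expensive dies the most.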

You could argue that Samsung and Huawei have their own processor lines, Exynos and Kirin respectively. But Huawei doesn’t design its own CPU or GPU cores: it takes off‑the‑shelf ARM Cortex CPU cores and ARM Mali GPUs and packages them as “its own” chips. Naturally, those CPU cores can’t outperform what ARM itself offers. Samsung, on the other hand, has tried to follow Apple’s path with custom CPU cores, though their performance hasn’t strayed far from that of stock ARM cores.

Factor Five: A Matter of Control

Last year, Apple made an interesting move: it deliberately dropped support for 32-bit apps in iOS 11. As it happened, that’s also the OS version that launched with the new iPhone lineup: 8, 8 Plus, and X. What does that mean for performance?

Dropping support for 32-bit code brings a lot of benefits. The instruction decoders and execution units get simpler, and fewer transistors are needed. Where do those savings go? You can use them to shrink the die (directly reducing cost and power), or keep the die size and power roughly the same and spend the transistors on other blocks to boost performance. Most likely the latter is what happened, and the A11 Bionic may have gained an extra 10–15% in performance from ditching 32-bit support alone.

Is something similar possible in the Android world? Yes, but only partially, and not anytime soon. Only from August 2019 will Google Play require developers who publish new apps or app updates to include 64‑bit versions of any native libraries. (Not all Android apps, and in fact not even most, use native libraries at all; many consist only of bytecode executed by the runtime.) For reference, Apple introduced a similar requirement in February 2015: once again a head start, this time of four and a half years.
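
What the Play requirement checks for can be sketched in a few lines. An APK is a ZIP archive, and its native libraries sit under `lib/<abi>/`, for example `lib/armeabi-v7a/` (32-bit ARM) and `lib/arm64-v8a/` (64-bit ARM). The minimal Python sketch below lists the ABIs an APK ships and flags 32-bit ones without a 64-bit counterpart; the helper names and the `check_apk.py` script name are hypothetical, and this is not Google’s own validation tool.

```python
"""Sketch: find 32-bit native libraries in an APK that lack a 64-bit counterpart.

An APK is a ZIP archive; native libraries live under lib/<abi>/, e.g.
lib/armeabi-v7a/ (32-bit ARM) or lib/arm64-v8a/ (64-bit ARM).
Helper names are illustrative, not an official tool.
"""

import sys
import zipfile

# Each 32-bit ABI mapped to the 64-bit ABI expected alongside it.
ABI_PAIRS = {"armeabi": "arm64-v8a", "armeabi-v7a": "arm64-v8a", "x86": "x86_64"}


def abis_from_names(names: list[str]) -> set[str]:
    """Collect ABI directory names from ZIP entries shaped like lib/<abi>/<name>.so."""
    abis: set[str] = set()
    for name in names:
        parts = name.split("/")
        if len(parts) == 3 and parts[0] == "lib" and parts[2].endswith(".so"):
            abis.add(parts[1])
    return abis


def missing_64bit(abis: set[str]) -> set[str]:
    """Return the 32-bit ABIs present without their 64-bit counterpart."""
    return {abi for abi in abis if abi in ABI_PAIRS and ABI_PAIRS[abi] not in abis}


def check_apk(path: str) -> set[str]:
    """Open an APK and report 32-bit ABIs that have no 64-bit partner."""
    with zipfile.ZipFile(path) as apk:
        return missing_64bit(abis_from_names(apk.namelist()))


if __name__ == "__main__":
    if len(sys.argv) < 2:
        print("usage: check_apk.py app.apk")
    else:
        gaps = check_apk(sys.argv[1])
        print("OK" if not gaps else f"missing 64-bit builds for: {sorted(gaps)}")
```

An app with no `lib/` entries at all (pure bytecode) produces an empty result, which matches the note above that such apps are unaffected.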

Factor Six: Optimization and Use of Available Resources

Optimization is the bedrock of performance. Historically, Apple has excelled at it (people who complain about older devices slowing down after updating to the latest iOS don’t realize what kind of hell that same underpowered hardware would be if it had to run Android). Android, by contrast, is… uneven. Varied. You might even say all over the place, sometimes spectacularly so.

Stock Android builds, such as those on Google Nexus and Pixel phones and on devices from Motorola and Nokia, tend to run quite fast on modern hardware. But even here not everything is perfect. For example, the Google (Motorola) Nexus 6 suffered from astonishingly poor storage I/O caused by a botched encryption implementation: Google’s engineers failed to use the Snapdragon 805’s hardware crypto accelerator and then claimed that “the software implementation is better”. In a separate article, we took a deep look at the Nexus 6’s encrypted read/write performance and compared it to the iPhone 5s. Here are the numbers:

- Nexus 6 — sequential read, unencrypted data: 131.65 MB/s

- Nexus 6 — sequential read, encrypted data: 25.17 MB/s (39 MB/s after the Android 7 update)

- iPhone 5s — sequential read, encrypted data: 183 MB/s
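
The gap is easier to appreciate as ratios. A minimal Python sketch using only the three measurements listed above (in MB/s):

```python
"""Ratios behind the Nexus 6 and iPhone 5s sequential-read figures above (MB/s)."""

NEXUS6_UNENCRYPTED = 131.65
IPHONE5S_ENCRYPTED = 183.0
NEXUS6_ENCRYPTED = {"before Android 7": 25.17, "after Android 7": 39.0}


def encryption_ratios(nexus6_encrypted: float) -> tuple[float, float]:
    """Return (Nexus 6 encryption slowdown, iPhone 5s lead over the encrypted Nexus 6)."""
    slowdown = NEXUS6_UNENCRYPTED / nexus6_encrypted
    iphone_lead = IPHONE5S_ENCRYPTED / nexus6_encrypted
    return slowdown, iphone_lead


for label, speed in NEXUS6_ENCRYPTED.items():
    slowdown, lead = encryption_ratios(speed)
    print(f"{label}: encrypted reads {slowdown:.1f}x slower; iPhone 5s {lead:.1f}x faster")
```

This gives an encryption penalty of about 5.2x before the Android 7 update and 3.4x after it, the “3–5 times” mentioned earlier, while the iPhone 5s reads encrypted data about 7.3x and 4.7x faster, respectively.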

Impressive, isn’t it? With comparable hardware, Google’s own engineers (Google, not the usual inept OEMs!) somehow managed to ship a blunder like this in a reference device that was supposed to champion secure encryption for the masses. Would you be surprised to learn that other vendors struggle with optimization too? They do. The fully loaded HTC U Ultra (Snapdragon 821) manages to stutter and overheat during routine tasks; it feels like the CPU is doing at least twice the work it should. And as for Samsung phones, which still find ways to lag over trivial things even on the fastest available hardware—there’s hardly any need to elaborate.

Factor Seven: Display Resolution

There’s one more point worth mentioning: display resolution. As you know, standard iPhone models come with HD-class screens (1334 × 750), while the Plus versions use Full HD. Android manufacturers using Qualcomm’s flagship chipsets, alas, tend to opt for QHD (2560 × 1440) displays; a flagship with a mere Full HD screen is actually uncommon.

Why “alas”? Because on IPS displays up to and including 5.7 inches, Full HD is more than enough. For AMOLED screens, which, first, use a PenTile subpixel layout and, second, may support Google VR headsets (by the way, what percentage of users actually found that useful?), you can still make a case for QHD resolution.

A bit of an outlier is the iPhone X with a 2436 × 1125 display—though in practice that’s not far from Full HD. For comparison, the Samsung Galaxy S8 screen is 2960 × 1440, which is about one and a half times as many pixels as the iPhone X.

Now imagine we’re comparing an iPhone 8 with its HD resolution to, say, a Nokia 8 with QHD. Picture it? The Nokia has to push almost four times as many pixels as the iPhone, which inevitably impacts both power consumption and performance—at least in tests that render to the screen. I’m not trying to justify the dated displays Apple stubbornly puts in devices approaching a thousand dollars; I’m simply pointing out that, all else being equal, devices with lower-resolution screens will be faster and more power-efficient than smartphones with QHD displays.
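
The pixel counts behind these comparisons are simple multiplication. In the sketch below, the iPhone 8’s 1334 × 750 panel stands in for the “HD” screen, and the Nokia 8’s QHD panel is taken as 2560 × 1440:

```python
"""Pixel counts behind the resolution comparison above."""

RESOLUTIONS = {
    "iPhone 8": (1334, 750),
    "Full HD": (1920, 1080),
    "iPhone X": (2436, 1125),
    "Nokia 8 (QHD)": (2560, 1440),
    "Galaxy S8": (2960, 1440),
}


def pixels(device: str) -> int:
    """Total pixel count of a display."""
    width, height = RESOLUTIONS[device]
    return width * height


def pixel_ratio(a: str, b: str) -> float:
    """How many times more pixels display a has than display b."""
    return pixels(a) / pixels(b)


for a, b in [("Galaxy S8", "iPhone X"), ("Nokia 8 (QHD)", "iPhone 8"), ("iPhone X", "Full HD")]:
    print(f"{a} vs {b}: {pixel_ratio(a, b):.2f}x the pixels")
```

It prints 1.56x for the Galaxy S8 over the iPhone X, 3.68x for the Nokia 8 over the iPhone 8, and 1.32x for the iPhone X over Full HD.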

Vendors seem to have caught on as well. Take the Sony Xperia Z5 Premium: its screen (an IPS panel—useless for VR, by the way) claims a physical 4K resolution (actually not; even here the marketers fudged it), but the effective, or logical, resolution is “just” Full HD. That let the manufacturer mislead buyers while avoiding a massive performance hit. Samsung took a similar route by allowing reduced logical resolution on high‑DPI screens. Clearly, marketing’s priorities clash with the interests of both users and the company’s own developers.

Bottom line: Does your phone really need 64-bit?

Do we really need 64-bit processors in mobile devices? After all, 32-bit code has its own advantages. Pointers take 4 bytes instead of 8, so data structures are more compact and programs are less demanding on RAM and cache; 32-bit ARM code can also use the compact Thumb-2 instruction encoding. Simpler 32-bit cores can also get by with shorter pipelines, which may deliver performance gains in certain scenarios.
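
The memory argument is easiest to see on a pointer-heavy structure. The sketch below models natural C alignment (each field aligned to its own size, the struct padded to its largest field) for a hypothetical linked-list node, `struct { int32_t value; void *next; }`, with 4-byte pointers on 32-bit ARM and 8-byte pointers on 64-bit ARM.

```python
"""Sketch: how pointer width changes the size of a simple C-style struct.

Models natural alignment: each field aligned to its own size, struct padded
to a multiple of its largest field. The node layout is a HYPOTHETICAL example:
struct { int32_t value; void *next; }.
"""


def struct_size(field_sizes: list[int]) -> int:
    """Size in bytes of a struct whose fields have the given sizes (and alignments)."""
    offset = 0
    for size in field_sizes:
        offset = (offset + size - 1) // size * size  # pad to the field's alignment
        offset += size
    largest = max(field_sizes)
    return (offset + largest - 1) // largest * largest  # tail padding


def list_node_size(pointer_size: int) -> int:
    """An int32 payload followed by a 'next' pointer."""
    return struct_size([4, pointer_size])


for bits in (32, 64):
    node = list_node_size(bits // 8)
    print(f"{bits}-bit: {node}-byte node, {1_000_000 * node / 2**20:.1f} MiB per million nodes")
```

The same node doubles from 8 to 16 bytes: 4 extra bytes for the wider pointer plus 4 bytes of alignment padding.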

Some of these benefits will remain mostly theoretical, but many modern workloads can’t do without ARMv8 instruction set support: think bulk encryption, real-time HDR merging, and plenty of other under-the-hood tasks. Either way, CPU vendors have already moved to 64-bit cores with ARMv8 support, and that’s a done deal.

But smartphone makers aren’t in a hurry to adopt 64-bit OS builds.

There has never been a single Windows 10 Mobile smartphone that ran the OS in 64‑bit mode. The Lumia 950 (Snapdragon 808), Lumia 950 XL (Snapdragon 810), and even the relatively recent Alcatel Idol 4 Pro (Snapdragon 820) all use a 32‑bit build of Windows 10 Mobile.

Android phone makers are no exception. For example, Lenovo, which sells smartphones under the Motorola brand, ships a “proper” 64-bit Android build on only a few devices: the Moto Z flagships (the standard model and the Force variant) and the Moto Z2 Force. All the other devices, including the budget Moto G5 on the Snapdragon 430 and the newer sub-flagship Moto Z2 Play on the Snapdragon 626, run in 32-bit mode.

A number of devices from other manufacturers (for example, the BQ Aquaris X5 Plus) run the powerful Snapdragon 652 in 32-bit mode. Needless to say, those devices aren’t taking full advantage of the available hardware capabilities.
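
You can check which mode a particular phone runs in. Since Android 5.0, the system property `ro.product.cpu.abilist` lists the ABIs a build supports, for example `arm64-v8a,armeabi-v7a,armeabi` on a 64-bit build; a 64-bit-capable SoC running a 32-bit build reports only 32-bit ABIs. The minimal Python sketch below assumes `adb` is installed and a device with USB debugging enabled is connected; the helper names are our own.

```python
"""Sketch: check whether an Android build runs a 64-bit userspace.

Since Android 5.0, ro.product.cpu.abilist lists the ABIs a build supports,
e.g. "arm64-v8a,armeabi-v7a,armeabi" on a 64-bit build. Assumes adb is on
PATH and a device with USB debugging is connected; helper names are ours.
"""

import shutil
import subprocess

ABIS_64 = {"arm64-v8a", "x86_64"}


def runs_64bit(abilist: str) -> bool:
    """True if any ABI in the comma-separated list is a 64-bit one."""
    abis = {abi.strip() for abi in abilist.split(",") if abi.strip()}
    return bool(abis & ABIS_64)


def read_abilist(timeout_s: float = 10.0) -> str:
    """Read the property from the connected device via adb."""
    result = subprocess.run(
        ["adb", "shell", "getprop", "ro.product.cpu.abilist"],
        capture_output=True, text=True, check=True, timeout=timeout_s,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    if shutil.which("adb") is None:
        print("adb not found on PATH")
    else:
        try:
            abilist = read_abilist()
        except (subprocess.CalledProcessError, subprocess.TimeoutExpired):
            print("no device reachable via adb")
        else:
            print(abilist, "->", "64-bit" if runs_64bit(abilist) else "32-bit")
```

On a device like the Snapdragon 652-based phone mentioned above, a 32-bit build would report only `armeabi-v7a` and `armeabi`, even though the SoC itself is 64-bit capable.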

On the other hand, Apple isn’t perfect either. Even 64-bit apps compiled to native code, because of backward-compatibility requirements, have to stick to the instruction set available on the company’s earliest 64-bit chips—the 2013 Apple A7. By contrast, Android’s ART bytecode compiler (used since Android 5) doesn’t have this limitation: it compiles app bytecode into optimized native code that can leverage all instructions supported by the device’s current hardware.

Well, we’ll live with what we’ve got. If you want top-tier CPU core performance and guaranteed optimization, go with Apple. If you’ll accept roughly the same thing at 1.5–2x lower performance and a correspondingly lower price, pick from the many Android phone makers.