Let’s consider several possible solutions.

- Oracle Clusterware for Oracle Unbreakable Linux is fairly expensive; it really only makes sense if you’re working with Oracle products.

- Veritas InfoScale Availability is a commercial solution, formerly known as Veritas Cluster Server. A VCS Access license for a four‑node cluster runs about $20–30k.

- Red Hat Cluster Suite is open source, but using it properly requires wading through a lot of documentation, which will take a significant amount of time.

I want to show how to build application-level clustering with high availability quickly, on a tight budget, and without deep expertise in clustering. In my view, the best option is to use Corosync with Pacemaker. This combo is free, easy to learn, and fast to roll out.

Corosync is software that lets you build a unified cluster from multiple physical or virtual servers. Corosync monitors and disseminates the state of all members (nodes) in the cluster.

This product allows you to:

- Monitor application status

- Notify applications when the cluster’s active node changes

- Broadcast identical messages to processes on all nodes

- Provide access to a shared database for configuration and metrics

- Send notifications about changes made in the database

Pacemaker is a cluster resource manager. It lets you run and manage services and resources within a cluster of two or more nodes. In short, its key advantages are:

- Detects and remedies failures at the node and service level.

- Storage-agnostic: forget about shared storage like a bad dream.

- Resource-agnostic: if you can script it, you can cluster it.

- Supports STONITH (Shoot-The-Other-Node-In-The-Head): a failed or unresponsive node is fenced (typically powered off or reset) so that it cannot touch cluster resources until it rejoins in a healthy state.

- Supports quorum-based and resource-driven clusters of any size.

- Works with virtually any redundancy topology.

- Can automatically replicate its configuration to all cluster nodes—no manual edits on each node.

- Lets you define resource start order and colocation (which resources must run together on the same node).

- Supports advanced resource types: clones (one resource running on many nodes) and multi-state roles (master/replica, primary/secondary), which is relevant for DBMSs like MySQL, MariaDB, PostgreSQL, and Oracle.

- Provides a unified CRM cluster shell with scripting support.

The idea is to build the cluster with Corosync and use Pacemaker to manage and monitor the resources running on it.

In theory

Let’s tackle the following tasks.

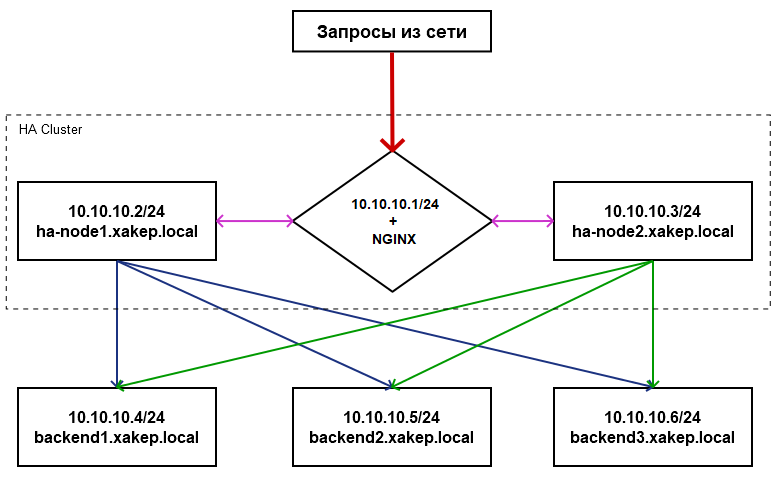

- Deploy a two-node high-availability cluster to handle client requests, using a shared virtual IP address and the Nginx web service.

- Test the failure of one node in the cluster and verify that resources fail over to the surviving node when the active node goes down.

- Target failure-detection time on the active node: 30 seconds.


Before starting any work, I strongly recommend drawing a diagram of the network nodes and how they interact. It will make the overall design easier to grasp and clarify how the nodes communicate and operate.

Practical implementation

It’s time to put the plan into action. In my example, I used Red Hat Enterprise Linux 7. You can use any other Linux distribution—the principles of building the cluster are the same.

First, install the packages required for the software to function correctly on both nodes (perform the installation on each node).

$ sudo yum install -y python3-devel ruby-devel gcc libffi-devel fontconfig coreutils rubygems gcc-c++ wget

The next command also needs root privileges, but run it from a root shell rather than through sudo: when it is run via sudo, the installer script doesn’t work correctly and the hacluster user isn’t created.

$ yum install -y pacemaker pcs resource-agents corosync

Verify that the hacluster (Pacemaker) user exists, then set its password:

$ grep hacluster /etc/passwd

hacluster:x:189:189:cluster user:/home/hacluster:/sbin/nologin

$ sudo passwd hacluster

Log in to the nodes as the hacluster user. If you get an access error for a node (Error: ), the Linux account most likely has restrictions that block the use of remote shells. Lift the sudo restriction for the duration of the installation.

Once you see the following, you can move on:

$ sudo -l

User

(ALL) NOPASSWD: ALL

Now, on both nodes, verify that corosync, pacemaker, and pcs are installed, then enable the pcsd configuration daemon to start at boot and start it.

$ sudo yum info pacemaker pcs resource-agents corosync

$ sudo systemctl enable pcsd.service; sudo systemctl start pcsd.service

Authenticate the cluster nodes as the hacluster user (pcs prompts for that user’s password):

$ sudo pcs cluster auth ha-node1 ha-node2 -u hacluster

Create a two-node cluster:

$ sudo pcs cluster setup --force --name HACLUSTER ha-node1 ha-node2

Enable and start the cluster services on every node:

$ sudo pcs cluster enable --all; sudo pcs cluster start --all

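For a two-node setup, enable STONITH (fencing). It’s used to forcibly power off nodes that fail to stop their workloads cleanly. Note that while STONITH is enabled, Pacemaker will not start any resources until at least one fence device is configured; if you are only experimenting without fencing hardware, set this property to false instead. Also configure the cluster to ignore loss of quorum, since a two-node cluster cannot keep a majority once one node is down.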

$ sudo pcs property set stonith-enabled=true

$ sudo pcs property set no-quorum-policy=ignore
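To see why quorum is ignored in a two-node cluster, it may help to spell out the majority rule that quorum is based on. The sketch below assumes plain majority voting with one vote per node; the function names `quorum_votes` and `has_quorum` are made up for illustration and are not part of pcs or Corosync.

```shell
# Votes needed for a majority, assuming one vote per node.
quorum_votes() {
    local nodes=$1
    echo $(( nodes / 2 + 1 ))
}

# Succeeds if the surviving nodes still hold a majority.
# Usage: has_quorum TOTAL_NODES ALIVE_NODES
has_quorum() {
    local total=$1 alive=$2
    [ "$alive" -ge "$(quorum_votes "$total")" ]
}

# Compare a two-node and a three-node cluster after losing one node.
for total in 2 3; do
    if has_quorum "$total" $(( total - 1 )); then
        echo "$total nodes, 1 down: quorum kept"
    else
        echo "$total nodes, 1 down: quorum lost"
    fi
done
```

With two nodes, the survivor holds one vote out of the two required, so under this rule it would stop running resources unless the policy tells it to carry on.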

Check the cluster status on both nodes. The output should look like this:

Cluster name: HACLUSTER

Stack: corosync

Current DC: ha-node2 (version 1.1.18-11.el7_5.3-2b07d5c5a9) - partition with quorum

Last updated: Wed Oct 17 13:12:00 2018

Last change: Wed Oct 17 13:10:47 2018 by root via cibadmin on ha-node1

2 nodes configured

0 resources configured

Online: [ ha-node1 ha-node2 ]

No resources

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled
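If you want to script this check instead of reading the output by eye, something along these lines could work. It is only a sketch: it assumes the status output contains an `Online: [ ... ]` line in the format shown above, and the helper names `online_nodes` and `all_nodes_online` are hypothetical.

```shell
# Print one node name per line from the "Online: [ ... ]" line
# of cluster status output read on stdin.
online_nodes() {
    awk '/^ *Online:/ {
        for (i = 1; i <= NF; i++)
            if ($i != "Online:" && $i != "[" && $i != "]") print $i
    }'
}

# Succeed only if every node given as an argument is listed as online.
# Reads cluster status output on stdin.
all_nodes_online() {
    local online node
    online=$(online_nodes)
    for node in "$@"; do
        echo "$online" | grep -qxF -- "$node" || return 1
    done
}

# Sample line copied from the status output above; on a live cluster
# you would pipe the real status output into the function instead.
sample='Online: [ ha-node1 ha-node2 ]'
if echo "$sample" | all_nodes_online ha-node1 ha-node2; then
    echo "both nodes online"
fi
```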

Add the virtual IP address and nginx as resources (hopefully you remember the 30‑second timeout), then query the cluster status.

$ sudo pcs resource create virtual_ip ocf:heartbeat:IPaddr2 ip=10.10.10.1 cidr_netmask=24 op monitor interval=30s

$ sudo pcs resource create nginx ocf:heartbeat:nginx op monitor interval=30s timeout=60s

$ sudo pcs status

Full list of resources:

virtual_ip (ocf::heartbeat:IPaddr2): Started ha-node1

nginx (ocf::heartbeat:nginx): Started ha-node1

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled
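Nothing in the two resource definitions ties nginx to the node that holds the virtual IP, so Pacemaker is free to place them on different nodes. A common way to keep them together is a colocation constraint plus an ordering constraint, roughly as sketched below. These constraints are an addition to the walkthrough, not part of the original setup. The `run` helper and the `APPLY` variable are made up for this example: by default the script only prints the commands, and it executes them when `APPLY=1` is set.

```shell
# Print each command; execute it only when APPLY=1 is set.
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

# Keep nginx on whichever node currently holds the virtual IP.
run sudo pcs constraint colocation add nginx with virtual_ip INFINITY

# Bring the virtual IP up before starting nginx.
run sudo pcs constraint order virtual_ip then nginx
```

Running the script once without `APPLY=1` lets you review the commands before they touch the cluster configuration.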

Take the first node offline for a few minutes, then check the status on the second node to confirm that the resources have moved to it.

Full list of resources:

virtual_ip (ocf::heartbeat:IPaddr2): Started ha-node2

nginx (ocf::heartbeat:nginx): Started ha-node2

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled
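To get a rough feel for how long clients are affected during such a failover, you could poll the virtual IP from a third machine while the first node goes down. The helper below is a minimal sketch; the function name `count_failed_probes` is hypothetical, and the curl command in the comment assumes nginx answers plain HTTP on the virtual IP used in this article.

```shell
# Poll a probe command until it succeeds and print how many attempts failed.
# Gives up (and returns 1) after MAX failed attempts.
# Usage: count_failed_probes MAX INTERVAL_SECONDS COMMAND [ARGS...]
count_failed_probes() {
    local max=$1 interval=$2 failed=0
    shift 2
    while [ "$failed" -lt "$max" ]; do
        if "$@" >/dev/null 2>&1; then
            echo "$failed"
            return 0
        fi
        failed=$(( failed + 1 ))
        sleep "$interval"
    done
    echo "$failed"
    return 1
}

# Example (start it right after taking the active node down):
#   count_failed_probes 120 1 curl -fs --max-time 1 http://10.10.10.1/
# With a one-second interval, the printed number approximates the
# outage in seconds as seen by a client.
```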

Once the first node is available again, manually move the virtual IP and Nginx back to it:

$ sudo pcs resource move virtual_ip ha-node1; sudo pcs resource move nginx ha-node1

All that’s left is to check the cluster status and verify that the address is assigned to the first node.

$ sudo pcs resource show

virtual_ip (ocf::heartbeat:IPaddr2): Started ha-node1
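Keep in mind that `pcs resource move` works by adding a location constraint that pins the resource to the target node, and that constraint stays in place after the move. If you want Pacemaker to place the resources freely again, you can remove it with `pcs resource clear`, roughly as follows. As before, this is a sketch: the `run` helper and the `APPLY` variable are made up for the example, and the script only prints the commands unless `APPLY=1` is set.

```shell
# Print each command; execute it only when APPLY=1 is set.
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

# List the current constraints, including any left behind by a move.
run sudo pcs constraint

# Remove the constraints created by the earlier move commands.
run sudo pcs resource clear virtual_ip
run sudo pcs resource clear nginx
```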

You can view the list of all supported services with the command $ .

Nginx‑based clustering

Nginx must be installed and configured on both nodes. Connect to the cluster and create the following resource:

$ sudo pcs cluster auth ha-node1 ha-node2 -u hacluster

$ sudo pcs resource create nginx ocf:heartbeat:nginx op monitor interval=30s timeout=60s

Here we define a service resource that is checked every 30 seconds; if a check gets no response within one minute, the resource is considered failed and Pacemaker recovers it, failing over to the other node if necessary.
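The monitor interval and timeout together determine how quickly a failure is noticed. As a rough upper bound, assume the service breaks right after a successful check and the next check hangs until it times out; the failure then goes unnoticed for about one interval plus one timeout. The sketch below just does that arithmetic. The function name `worst_case_detection` is made up, and the model is a simplification rather than a description of Pacemaker's exact scheduling.

```shell
# Rough upper bound, in seconds, on how long a failure can go unnoticed:
# one full monitor interval plus one full monitor timeout.
worst_case_detection() {
    local interval=$1 timeout=$2
    echo $(( interval + timeout ))
}

# Compare a 30-second and a 60-second timeout with a 30-second interval.
for timeout in 30 60; do
    echo "interval=30s timeout=${timeout}s: up to $(worst_case_detection 30 "$timeout")s"
done
```

If detection really has to happen within 30 seconds, this suggests shortening both the interval and the timeout rather than the interval alone.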


To remove a node from the cluster, use the command $ .

Conclusion

We’ve built a simple yet highly fault-tolerant cluster for the application’s frontend. Remember: building a cluster is a creative endeavor, and it all comes down to your ingenuity and imagination. For a sysadmin, there’s no such thing as an impossible task!