Voice Synthesis

The human voice is produced by the movement of the vocal cords, tongue, and lips. A computer, by contrast, only has numbers representing the waveform captured by a microphone. So how does a computer generate the sound we hear through speakers or headphones?

Text-to-Speech

One of the most popular and well-studied ways to generate speech is to convert the target text directly into audio. The earliest systems of this kind would literally concatenate individual letters into words, and words into sentences.

As speech synthesizers evolved, the building blocks shifted from sets of individually recorded phonemes (the individual sounds of speech) to syllables, and eventually to full words.

The advantages of such programs are obvious: they’re easy to develop, use, and maintain; they can pronounce any word in the language; and they behave predictably—all of which once made them attractive for commercial use. But the voice quality produced by this approach leaves much to be desired. We all remember the hallmarks of such generators: emotionless delivery, misplaced stress, and words—even individual sounds—sounding chopped up and disconnected.

From sounds to speech

Building speech from sounds rather than letters quickly supplanted the first approach because it better mimics human speech: we produce sounds, not letters. That is why systems based on the International Phonetic Alphabet (IPA) produce higher-quality, more natural-sounding speech.

This method is built on pre-recorded studio samples of individual sounds that are stitched together into words. Compared to the earlier approach, the quality is notably better: instead of simply splicing audio tracks, it uses sound-blending techniques based on mathematical models as well as neural networks.
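
The simplest form of sound blending is a crossfade at the joint between two recorded units. The sketch below is only an illustration under stated assumptions: the function name `crossfade_concat`, the linear fade, and the toy sample values are hypothetical and not taken from any particular synthesizer.

```python
def crossfade_concat(a, b, overlap):
    """Join two sample lists, blending the last `overlap` samples of `a`
    with the first `overlap` samples of `b` using a linear crossfade."""
    if overlap < 0 or overlap > min(len(a), len(b)):
        raise ValueError("overlap must fit inside both units")
    if overlap == 0:
        return list(a) + list(b)  # plain splice, the joint may click
    head = list(a[:len(a) - overlap])
    tail = list(b[overlap:])
    blended = []
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)  # weight of `b` rises across the joint
        blended.append((1 - w) * a[len(a) - overlap + i] + w * b[i])
    return head + blended + tail


unit_a = [1.0] * 8  # stand-in for one recorded sound unit
unit_b = [0.0] * 8  # stand-in for the next unit
joined = crossfade_concat(unit_a, unit_b, overlap=4)
```

With `overlap=0` the function degenerates into the plain splicing of the earlier approach, which is where the chopped-up sound comes from.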

Speech-to-Speech

A relatively new approach is entirely based on neural networks. The WaveNet autoregressive architecture, developed by DeepMind researchers, makes it possible to convert audio or text directly into new audio without relying on pre-recorded building blocks (research paper).

The key to this technology is a stack of dilated causal convolutions rather than recurrent units such as LSTM: each output sample depends only on earlier samples, and because the dilation doubles from layer to layer, a few layers are enough to take a long stretch of past audio into account.

Overall, this architecture works with any type of audio waveform, whether it’s music or human speech.
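
In the published WaveNet design, the long context comes from stacked dilated causal convolutions. The sketch below shows only that one idea on plain Python lists; the function names and unit weights are illustrative assumptions, and the gated activations, residual connections, and trained weights of the real network are left out.

```python
def causal_dilated_conv(x, w_past, w_now, dilation):
    """Kernel-size-2 causal convolution: y[t] = w_past*x[t-d] + w_now*x[t].
    Samples before the start of the signal are treated as zero."""
    y = []
    for t in range(len(x)):
        past = x[t - dilation] if t - dilation >= 0 else 0.0
        y.append(w_past * past + w_now * x[t])
    return y


def receptive_field(dilations, kernel_size=2):
    """Number of input samples that can influence one output sample."""
    return 1 + (kernel_size - 1) * sum(dilations)


dilations = [2 ** i for i in range(10)]  # 1, 2, 4, ..., 512
signal = [1.0] + [0.0] * 15              # unit impulse at t = 0
out = signal
for d in dilations[:4]:                  # four layers: dilations 1, 2, 4, 8
    out = causal_dilated_conv(out, 1.0, 1.0, d)
```

After four layers the single impulse has spread over 16 samples, which matches `receptive_field([1, 2, 4, 8])`; ten layers with doubling dilation reach 1024 samples.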


There are several projects built on top of WaveNet.

- A WaveNet for speech denoising: removing noise from voice recordings

- Tacotron 2 (Google blog post): predicting a mel spectrogram from text and generating audio from it with a WaveNet vocoder

- WaveNet Voice Enhancement: enhancing voice quality in recordings

To synthesize speech, these systems use text-to-phoneme converters and prosody generators (stress, pauses) to create a natural-sounding voice.
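
A text-to-phoneme front end can be pictured as a dictionary lookup plus a few rules. The following is a toy sketch, not the front end of any of the systems above: the two-word lexicon uses ARPAbet-style symbols for illustration, and the `<pause>` marker and the spell-it-out fallback are hypothetical.

```python
import re

# Toy pronunciation lexicon in ARPAbet-style symbols (illustrative entries only).
LEXICON = {
    "hello": ["HH", "AH", "L", "OW"],
    "world": ["W", "ER", "L", "D"],
}
PUNCTUATION = set(".,!?;")
PAUSE = "<pause>"  # hypothetical marker a prosody stage could act on


def text_to_phonemes(text):
    """Map text to phoneme symbols, inserting a pause marker at punctuation.
    Unknown words are spelled out letter by letter as a crude fallback."""
    symbols = []
    for token in re.findall(r"[a-z']+|[.,!?;]", text.lower()):
        if token in PUNCTUATION:
            symbols.append(PAUSE)
        elif token in LEXICON:
            symbols.extend(LEXICON[token])
        else:
            symbols.extend(token.upper())
    return symbols


phonemes = text_to_phonemes("Hello, world.")
```

A production converter would replace the lexicon with a full pronunciation dictionary and a learned model for unknown words; the sketch only shows where pauses and phonemes enter the sequence.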

This is state-of-the-art speech synthesis: it doesn’t just splice or blend raw audio snippets, it generates smooth transitions between them, inserts pauses between words, and modulates pitch, volume, and timbre to achieve natural pronunciation—or any other desired effect.

Generating a Fake Voice

For the most basic kind of identification I discussed in my previous article, almost any method will do — a particularly lucky hacker might even get by with five seconds of raw recorded voice. But to bypass a more robust system—for example, one built on neural networks—we’ll need a true, high-quality voice generator.

How Voice Cloning Works

Building a convincing, voice-for-voice clone with a WaveNet-based approach takes a lot of work: you need a large corpus of text read by two different speakers and time-aligned so that every sound in one recording lines up with the same sound in the other, which is hard to pull off. There is, however, another way.

Building on the same principles as speech synthesis, you can achieve an equally realistic reproduction of all vocal characteristics. There is a voice-cloning program that works from a short speech sample, and that is the one we will use.

The program itself is composed of several key components that run in sequence, so we’ll break it down step by step.

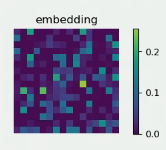

Voice Encoding

Every person’s voice has a range of characteristics—often subtle and hard to hear, but important. To reliably distinguish one speaker from another, it’s best to build a specialized neural network that learns its own feature sets for different individuals.

This encoder not only supplies the voice features needed for voice conversion later on, but also lets you compare the generated output against the target voice.
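
Comparing two voices then reduces to comparing two feature vectors. A common choice for that is cosine similarity; the sketch below assumes the encoder returns a fixed-length list of floats, and the function names and the `0.75` threshold are hypothetical values chosen for illustration.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length feature vectors."""
    if len(u) != len(v):
        raise ValueError("embeddings must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_u * norm_v)


def same_speaker(emb_a, emb_b, threshold=0.75):
    """Hypothetical decision rule: accept when similarity reaches the threshold."""
    return cosine_similarity(emb_a, emb_b) >= threshold
```

For example, `same_speaker([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])` is true because the vectors point in the same direction, while two orthogonal vectors score zero.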

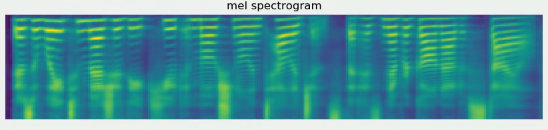

Spectrogram Generation

Using these features, the system converts text into a mel-spectrogram. The synthesizer is built on Tacotron 2 and leverages WaveNet.

The generated spectrogram contains all the information about pauses, sounds, and pronunciation, with all precomputed voice characteristics already embedded in it.
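
The "mel" in mel-spectrogram refers to a frequency scale that is roughly linear at low frequencies and logarithmic at high ones, similar to how we perceive pitch. The sketch below uses one widely used conversion formula (constants 2595 and 700); the helper `mel_band_centers` and its parameter values are assumptions made for illustration, not settings from the tool described here.

```python
import math


def hz_to_mel(f_hz):
    """Convert a frequency in hertz to mels."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_centers(n_bands, f_min, f_max):
    """Center frequencies (Hz) of bands spaced evenly on the mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_bands + 1)
    return [mel_to_hz(lo + step * (i + 1)) for i in range(n_bands)]


centers = mel_band_centers(n_bands=8, f_min=0.0, f_max=8000.0)
```

The band centers crowd together at low frequencies and spread out at high ones, so a mel-spectrogram spends most of its resolution where speech carries the most information.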

Audio Synthesis

Now a different neural network—based on WaveRNN—will gradually synthesize an audio waveform from the mel-spectrogram. That waveform is then played back as the final sound.

The synthesized audio preserves all the key traits of the base voice and, while it’s not without its challenges, can recreate the person’s original voice for any text.
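
The three stages can be wired together as follows. This is only a structural sketch: `clone_voice` and the three `stub_*` functions are hypothetical placeholders that stand in for the trained encoder, synthesizer, and vocoder networks, and they produce meaningless numbers.

```python
from typing import Callable, List

Embedding = List[float]
MelSpectrogram = List[List[float]]
Waveform = List[float]


def clone_voice(
    reference_audio: Waveform,
    text: str,
    encoder: Callable[[Waveform], Embedding],
    synthesizer: Callable[[str, Embedding], MelSpectrogram],
    vocoder: Callable[[MelSpectrogram], Waveform],
) -> Waveform:
    """Run the stages in order: voice features, mel-spectrogram, waveform."""
    embedding = encoder(reference_audio)  # voice encoding
    mel = synthesizer(text, embedding)    # spectrogram generation
    return vocoder(mel)                   # audio synthesis


# Stand-in stages so the wiring can be exercised without any trained model.
def stub_encoder(audio: Waveform) -> Embedding:
    return [sum(audio) / len(audio)]


def stub_synthesizer(text: str, emb: Embedding) -> MelSpectrogram:
    return [[emb[0]] * 4 for _ in text.split()]


def stub_vocoder(mel: MelSpectrogram) -> Waveform:
    return [value for frame in mel for value in frame]


demo = clone_voice([0.5, 0.5], "two words", stub_encoder, stub_synthesizer, stub_vocoder)
```

Because the text only enters at the second stage, the same voice embedding can be reused for any number of sentences.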

Putting the Method to the Test

Now that we know how to create a convincing voice imitation, let’s put it into practice. In a previous article, I discussed two very simple yet effective methods of speaker identification: analyzing mel-frequency cepstral coefficients (MFCCs) and using neural networks trained to recognize a specific individual. Let’s see how well we can fool these systems with spoofed recordings.

Let’s take a five-second recording of a male voice and use our tool to generate two clips. The original and the resulting clips can be downloaded or listened to.

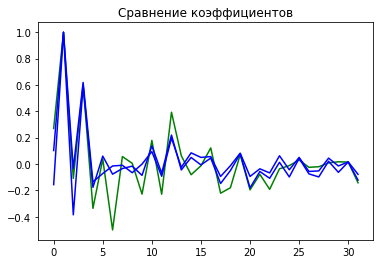

We’ll compare these recordings using Mel-frequency cepstral coefficients (MFCCs).

The difference in the coefficients is also evident in the numbers:

Synthesis_1 - original: 0.38612951111628727

Synthesis_2 - original: 0.3594987201660116
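
The article does not state how these scores were computed. One plausible way to reduce two MFCC matrices to a single number is to average each coefficient over time and take the root-mean-square difference of the averages; the sketch below shows that assumption and will not necessarily reproduce the figures above. The function names and the tiny input matrices are hypothetical.

```python
import math


def mean_vector(frames):
    """Average each MFCC coefficient over all frames (rows)."""
    n = len(frames)
    return [sum(frame[i] for frame in frames) / n for i in range(len(frames[0]))]


def mfcc_distance(frames_a, frames_b):
    """Root-mean-square difference between time-averaged MFCC vectors."""
    mean_a, mean_b = mean_vector(frames_a), mean_vector(frames_b)
    if len(mean_a) != len(mean_b):
        raise ValueError("both recordings need the same number of coefficients")
    squared = sum((a - b) ** 2 for a, b in zip(mean_a, mean_b))
    return math.sqrt(squared / len(mean_a))


# Made-up matrices: rows are frames, columns are coefficients.
original = [[1.0, 2.0], [3.0, 4.0]]
synthetic = [[2.0, 3.0], [2.0, 3.0]]
score = mfcc_distance(original, synthetic)
```

Averaging over time makes the score insensitive to what was said and how long it took, which suits speaker comparison but hides frame-level artifacts.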

How would a neural network respond to such a convincing fake?

Synthesis_1 - original: 89.3%

Synthesis_2 - original: 86.9%

It turned out we could fool the neural network, though not flawlessly. Robust security systems—like those used in banks—will likely detect the forgery, but a human, especially over the phone, would struggle to tell a real caller from a computer-generated impersonation.

Key Takeaways

Faking a voice isn’t as hard as it used to be, which opens up big opportunities not just for hackers but for creators as well: indie game developers can get high-quality, low-cost voice-over, animators can voice their characters, and filmmakers can produce convincing documentaries.

High-quality speech synthesis is still in its early days, but its potential is already breathtaking. Soon, every voice assistant will have its own distinctive voice—not metallic and cold, but rich with emotion and nuance; support chats will stop being frustrating, and you’ll be able to have your phone handle unpleasant calls for you.