The Root of the Problem

Initially, the Google Project Zero blog reported the successful implementation of three variants of attacks against the CPU cache:

- Bounds check bypass (variant 1)

- Branch target injection, BTI (variant 2)

- Rogue data cache load (variant 3)

Formally, they were described as two related vulnerabilities: Spectre (variants 1 and 2) and Meltdown (variant 3). Real-world exploitation scenarios depend on the CPU microarchitecture and the operating system, impacting hardware vendors, cloud providers, and the Linux community to varying degrees.

The researchers had known about the issue for a long time. On June 1, 2017, they submitted their reports to Intel, AMD, and ARM, promising not to publish the details until the vendors released patches. However, a leak forced them to disclose the details earlier.

At a conceptual level, these vulnerabilities share many traits. Both make it possible to conduct a side-channel attack by exploiting weaknesses in the physical implementation of processors. This class of attacks was first described in detail by Paul Kocher in 1996 in the context of popular cryptosystems. He also co-authored the Meltdown and Spectre research.

To date, researchers have demonstrated three basic ways to exploit these vulnerabilities, which we’ll examine in more detail below. The key point is that in every variant, the ultimate target of the attack is the cache. Using various tricks, the CPU is forced to cache the value of a byte from a memory address that the malicious process isn’t allowed to access.

Note: the malware does not read the data itself (it cannot read arbitrary locations in the cache). Instead, it recovers inadvertently cached bytes within its own address space by simply measuring read latencies across different rows of a pre-allocated array.
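The readout step can be illustrated with a toy model. The sketch below is not an exploit and touches no real hardware: the cache is simulated as a Python set, and the latency constants `HIT_CYCLES` and `MISS_CYCLES` as well as the helper names are assumptions chosen purely for illustration.

```python
# Toy model of the cache-timing readout. The "cache" is a set of row indices
# and the latencies are made-up constants, not measurements.
HIT_CYCLES = 40     # assumed latency of a cached read
MISS_CYCLES = 300   # assumed latency of a read served from main memory
ROWS = 256          # one probe-array row per possible byte value


def read_latency(cache: set[int], row: int) -> int:
    """Return the simulated latency of reading one row of the probe array."""
    return HIT_CYCLES if row in cache else MISS_CYCLES


def recover_byte(cache: set[int]) -> int:
    """Time every row and return the index of the fastest one."""
    timings = [read_latency(cache, row) for row in range(ROWS)]
    return min(range(ROWS), key=timings.__getitem__)


# Suppose the CPU was tricked into caching the row selected by the byte 0x4B.
simulated_cache = {0x4B}
print(hex(recover_byte(simulated_cache)))  # 0x4b
```

The attacker never reads the secret itself; it only learns which of its own 256 rows answers quickly.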

At their core, Meltdown and Spectre both belong to the class of timing attacks. The key difference between them is how they cause data from protected memory pages to be cached. Meltdown exploits the delay in exception handling, while Spectre deceives the branch predictors. To understand these details, it helps to review the basics of how a CPU works.

A Ticking Time Bomb

The idea of out-of-order instruction execution was proposed by IBM in 1963. Everyday users didn’t encounter it until 1995, with the introduction of the Pentium Pro. Since then, the simple in-order pipeline has largely faded from high-performance designs, and nearly all modern desktop and server processors use architectures with out-of-order and speculative execution mechanisms.

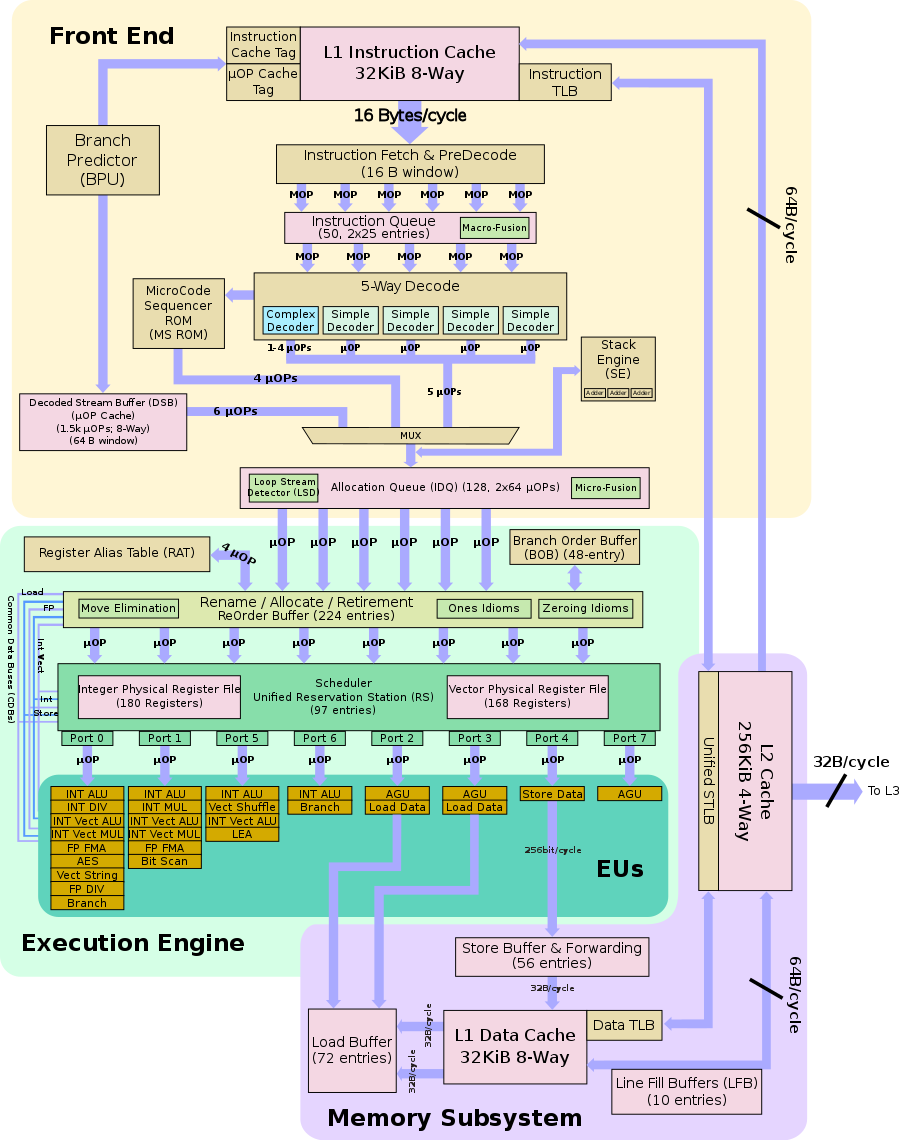

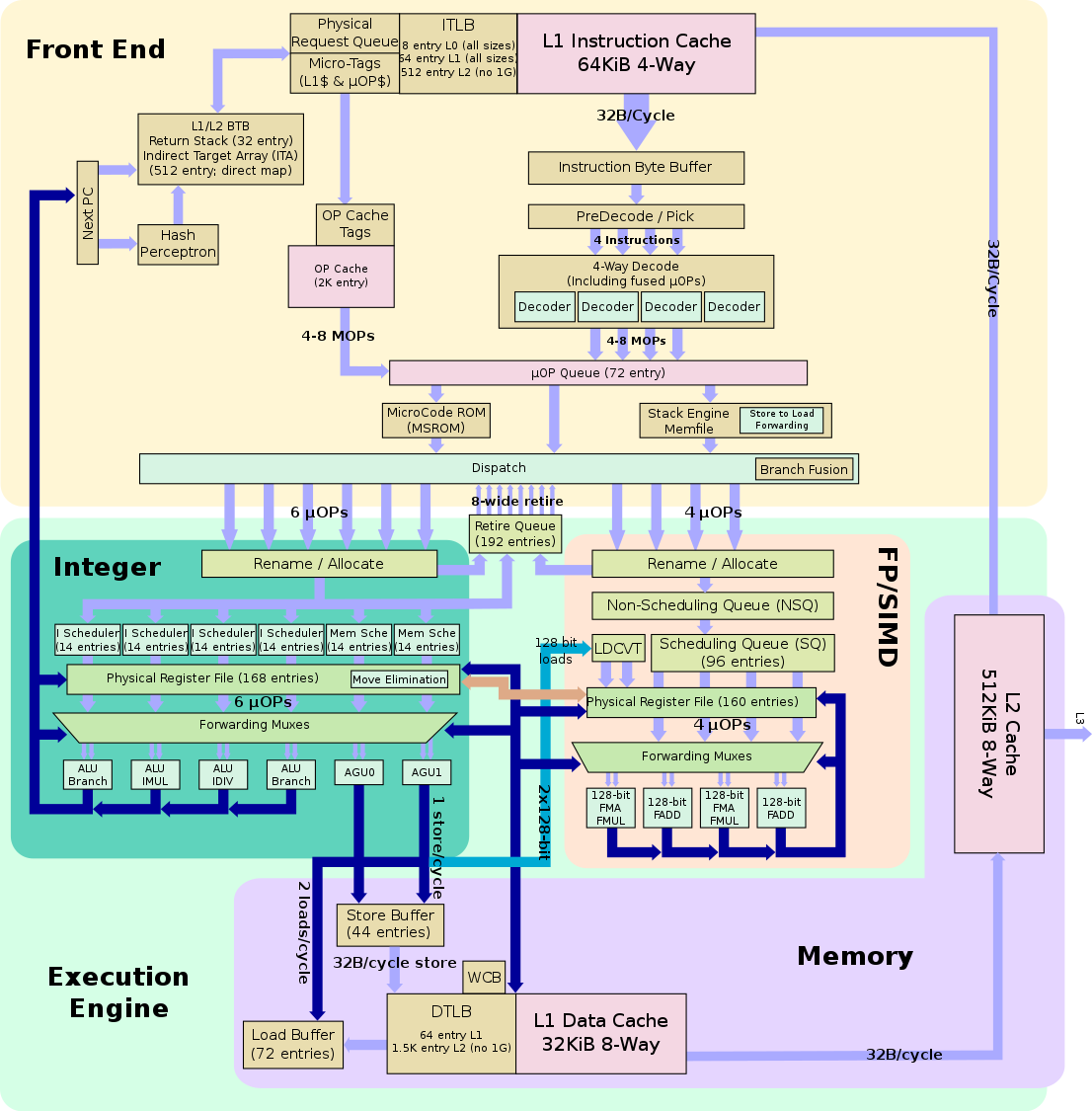

How does it actually work? To a programmer, it looks simple: you feed in an instruction stream and get the results out. But inside the CPU there’s a lot of magic. A modern core uses a multi-stage pipeline with non-linear execution paths. Incoming instructions are cached and decoded several per clock cycle. They’re broken down into micro-operations (micro-ops), which are reordered in a dedicated buffer (ROB, Reorder Buffer). None of these micro-ops operates directly on physical memory addresses or the processor’s physical registers. The memory management unit translates virtual addresses and enforces access permissions.

After the ROB, micro-ops are sent to the reservation station, where they’re processed in parallel across different execution units. In addition, modern CPUs use multiple types of caches to speed up access to data that originally resides in comparatively slow main memory. Simple instruction caches appeared as early as 1982, and data caches have been used since 1985. Today both are considered part of the basic level-one (L1) cache, with general-purpose L2 and L3 caches layered beyond that.

These architectural enhancements were designed to reduce the idle time of individual execution units. While one unit waits for its instruction’s data to be fetched from main memory, another processes subsequent instructions in parallel. The MMU (Memory Management Unit) and the overall pipeline architecture help with this: the pipeline tries to stay ahead by speculatively prefetching the most frequently used data into cache.

The key point in this scheme is that in most processors, all checks on whether instructions are allowed to execute—and even enforcement of memory access separation in the MMU—happen only at the final stage of instruction processing, during retirement, when intermediate results are committed in program order. Forbidden operations are discarded (they trigger an exception), while data that wasn’t ultimately used remains in the cache. That’s the crux of the problem.
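A minimal sketch of that ordering problem, assuming a hypothetical `ToyCore` class: the load fetches and caches the data first, and the permission check only fires afterwards. Real pipelines are far more complex; the class, its fields, and the addresses are illustrative assumptions.

```python
# Toy model of a load whose permission check is deferred until retirement.
# ToyCore and its fields are hypothetical and exist only for illustration.
class ToyCore:
    def __init__(self, memory: dict[int, int], protected: set[int]) -> None:
        self.memory = memory          # address -> byte value
        self.protected = protected    # addresses the current process may not read
        self.cache: set[int] = set()  # addresses whose data is currently cached

    def load(self, address: int) -> int:
        # Execution stage: the data is fetched and cached before any check.
        value = self.memory[address]
        self.cache.add(address)
        # Retirement stage: only now is the access validated.
        if address in self.protected:
            raise PermissionError(f"access to {address:#x} denied")
        return value


core = ToyCore(memory={0x1000: 7, 0x2000: 42}, protected={0x2000})
try:
    core.load(0x2000)
except PermissionError:
    pass  # the architectural result is discarded...
print(0x2000 in core.cache)  # True: ...but the cache footprint remains
```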

Intel CPUs with long pipelines (excluding Itanium and the earliest Atom cores) are the biggest offenders when it comes to late condition resolution and deep speculative run-ahead along branch paths. But AMD and even ARM are affected too — exploits for them just need a more precise timer, different array sizes, and alternative cache-eviction techniques. Meltdown hasn’t been convincingly reproduced on those platforms yet, but Spectre has.

For more than two decades, leading CPU designers prioritized instruction throughput over security, assuming they could perform checks at retirement, after a speculative pass through the pipeline. In one form or another, out-of-order/speculative execution is now used in nearly all high-performance processors. Because functional units work in parallel, there are always delays in exception handling, MMU latency, and cache flushing; these effects are simply more pronounced on the Core microarchitecture, making them easier to exploit.

Meltdown

This vulnerability was independently reported by researchers from Google Project Zero, Cyberus Technology, and Graz University of Technology (Austria), as well as the aforementioned cryptographer Paul Kocher. Together, they identified a general technique to bypass the isolation between user and kernel address spaces in memory.

On January 3, 2018, they published details of the “Rogue Data Cache Load” vulnerability CVE-2017-5754, which was dubbed Meltdown because it “melts” the isolation between memory pages. It breaks a fundamental security mechanism by exploiting out-of-order execution and speculative cache loads.

Here’s how the attack works.

- The malware starts by flushing the CPU caches.

- It allocates an array in RAM of 256 × 4096 bytes, that is, 256 rows of 4 KB each. Each row index corresponds to a possible byte value (0–255). The array won’t be in cache due to its size and because it hasn’t been touched yet.

- The trojan declares a variable `tmp` and attempts to load into it the byte stored at a restricted address belonging to another process. This access is illegal, but while the MMU resolves the fault, the pipeline speculates and pulls the requested data into the cache.

- The trojan then uses `tmp` as an index into its array. That dependent access shouldn’t be allowed to retire either, but the check is again deferred, and the corresponding cache line for that array row is fetched speculatively.

- Both operations will ultimately be blocked and raise an exception, but before that, due to speculative/out-of-order execution, the forbidden instructions execute transiently and the requested data ends up cached.

- Once the MMU reports the protected access, the CPU refuses to commit those results to the trojan, but it does not evict the data from the cache.

- Before the exception is handled, the trojan performs a legitimate step: it scans the previously allocated array, timing the load of each row. Only the row whose index matches the cached value returns quickly; the others are much slower because they come from main memory.

By repeating the attack for additional addresses, you can reconstruct, within the trojan’s address space, a complete copy of the password, key, or other memory-resident data whose access is otherwise blocked.
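The steps above can be tied together in a simulation. This is a sketch under heavy assumptions, not a working exploit: the "protected memory" is just a bytes object that the attacker code never reads directly, the cache is a set of probe-array offsets, the fault is modeled as a Python exception, and the names `ToyCPU`, `transient_probe`, and `leak_byte` are hypothetical.

```python
# Simulation of the attack loop. No real memory protection is bypassed here.
PAGE = 4096          # one probe-array row per page, as in the steps above
HIT, MISS = 40, 300  # assumed latencies in cycles


class ToyCPU:
    def __init__(self, protected: bytes) -> None:
        self._protected = protected              # stands in for inaccessible memory
        self.cached_offsets: set[int] = set()    # probe-array offsets in the cache

    def flush(self) -> None:
        self.cached_offsets.clear()

    def transient_probe(self, address: int) -> None:
        """Illegal load plus dependent probe access, then the deferred fault."""
        tmp = self._protected[address]           # executes transiently
        self.cached_offsets.add(tmp * PAGE)      # probe[tmp * PAGE] is cached
        raise PermissionError("fault delivered at retirement")

    def timed_read(self, row: int) -> int:
        return HIT if row * PAGE in self.cached_offsets else MISS


def leak_byte(cpu: ToyCPU, address: int) -> int:
    cpu.flush()
    try:
        cpu.transient_probe(address)
    except PermissionError:
        pass                                     # toy stand-in for surviving the fault
    return min(range(256), key=cpu.timed_read)   # the fastest row reveals the byte


cpu = ToyCPU(protected=b"hunter2")
print(bytes(leak_byte(cpu, a) for a in range(7)))  # b'hunter2'
```

Each call to `leak_byte` recovers one byte, which is why the real attack has to be repeated address by address.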

Formally, this doesn’t violate any security rules. The trojan only reads from its own address space and gets denied when it tries to access other processes’ memory. The trick is that the exception isn’t handled immediately, and the requested data still gets cached. By measuring the timing of reads to its own data, the trojan can infer which value the CPU cached—even though it wasn’t allowed to read it directly.

Spectre

The Spectre vulnerability was jointly described by ten authors. Among them are the Meltdown researchers, whose work was complemented by experts from Rambus’s cryptography division and several universities.

This attack is more versatile and can be carried out in several ways. The authors outline the two most obvious ones:

- Bounds Check Bypass CVE-2017-5753

- Branch Target Injection CVE-2017-5715

Earlier, when outlining the CPU architecture, we intentionally skipped two important components—the branch prediction unit and the branch target predictor—as they aren’t essential for understanding the Meltdown attack. However, various Spectre exploitation scenarios hinge on manipulating these units.

If the instruction stream contains a conditional branch (if, switch) and there are idle execution units in the pipeline (which is almost always), the possible branch paths start being processed before the condition is actually evaluated. This saves time because evaluating the condition can take hundreds of CPU cycles just to load the data, which may be sitting in RAM—or even paged out to disk in swap.

It’s faster for the CPU to predict the most likely branch ahead of time, execute it speculatively, and then either retire it as a whole or discard the mispredicted path if the guess was wrong. Meanwhile, anything that got pulled into the cache stays there.

The problem is that branch prediction relies on a statistical model. The processor tracks the outcomes of common conditional checks and, based on that history, chooses the branch that’s statistically more likely, then speculatively executes the instructions along that path.
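One textbook way to model such history-based prediction is a two-bit saturating counter. Real predictors are proprietary and far more elaborate, so the sketch below is an assumption used only to show how accumulated history outweighs a single contrary outcome.

```python
# A two-bit saturating counter, a classic textbook branch-direction predictor.
# It is an illustrative assumption, not the design of any particular CPU.
class TwoBitPredictor:
    def __init__(self) -> None:
        self.state = 1  # states 0-1 predict "not taken", 2-3 predict "taken"

    def predict(self) -> bool:
        return self.state >= 2

    def update(self, taken: bool) -> None:
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


predictor = TwoBitPredictor()
for _ in range(5):
    predictor.update(taken=True)   # the branch is taken five times in a row
predictor.update(taken=False)      # a single contrary outcome
print(predictor.predict())         # True: history still favours "taken"
```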

Those instructions could be anything, including reading a key from a known memory address. The trojan can’t perform that operation itself, but it can induce the legitimate program to cache the key simply by training the CPU’s branch predictor.

Modern software contains lots of boilerplate, so in a target application—say, a popular password manager—you can usually find a standard snippet that takes user input and performs a conditional branch.

In the first Spectre variant, you craft malware that contains a code fragment similar to the victim’s. It “trains” the branch predictor by repeatedly exercising a condition equivalent to the branch used in the target program.

In our (malicious) program, we can set things up so the chosen condition always evaluates to true. Once enough branch history accumulates, the branch predictor will start treating it as the common case—the condition that usually evaluates to true.

While the target program’s instructions are being processed, the trained branch predictor will behave accordingly. If a false condition is encountered (for example, an incorrect master password), the CPU will still start executing the “true” branch speculatively. It will eventually correct itself and flush the pipeline, but the mistakenly requested data (such as the decryption key) will remain in the cache.

The way the malware pulls data from the cache is similar to Meltdown, with one key difference: the trojan never touches the memory region it isn’t allowed to access. Instead, it trains the branch predictor so that a legitimate program speculatively brings the sensitive data into the cache before it has a chance to perform the usual check.
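The first variant can be sketched as a simulation. Everything here is hypothetical: the `ToyVictim` class, the memory layout, and the two-bit counter standing in for the branch predictor. As a simplification, the predictor is trained by feeding the victim valid indices rather than by a look-alike fragment in the attacker's own code.

```python
# Simulation of bounds-check bypass. The cache is a set of probe-array rows.
MEMORY = bytes([1, 2, 3, 4]) + b"KEY!"   # public 4-byte array, then a secret
ARRAY_LEN = 4                            # legal indices are 0..3


class ToyVictim:
    def __init__(self) -> None:
        self.counter = 0                      # two-bit counter, >= 2 predicts "in bounds"
        self.cached_rows: set[int] = set()    # probe-array rows present in the cache

    def lookup(self, index: int) -> None:
        """Models `if (index < ARRAY_LEN) touch(probe[array[index] * 4096])`."""
        in_bounds = index < ARRAY_LEN
        predicted_in_bounds = self.counter >= 2
        if predicted_in_bounds or in_bounds:
            # Runs either legitimately or speculatively; the cache cannot tell.
            self.cached_rows.add(MEMORY[index])
        # The real outcome resolves later and updates the predictor.
        if in_bounds:
            self.counter = min(3, self.counter + 1)
        else:
            self.counter = max(0, self.counter - 1)


victim = ToyVictim()
for i in range(8):
    victim.lookup(i % ARRAY_LEN)         # training: only valid indices
victim.cached_rows.clear()               # flush the probe array
victim.lookup(4)                         # out of bounds: lands on the first secret byte
print(chr(min(victim.cached_rows)))      # K
```

Without the training loop the same out-of-bounds call leaves the cache empty, which is the whole point of priming the predictor first.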

The second Spectre variant looks very similar. The difference is that instead of training the branch direction predictor, you train the branch target predictor, which has its own buffer. In effect, the attack drives the cache via the branch target buffer (BTB). The BTB supplies predicted virtual target addresses for indirect branches. Those branches are taken under a typical pattern, which we replicate in our code to trigger the misprediction.

After enough repetitions, the buffer gets trained, and the branch target predictor assumes that this code fragment (a “gadget,” in the authors’ terms) always performs an indirect jump to the specified address—and it starts prefetching data from that location into the cache.
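A toy branch target buffer shows why training from another piece of code can work at all. The sketch assumes the buffer is indexed by the low 12 bits of the branch address; that width, the class `ToyBTB`, and every address below are hypothetical.

```python
from typing import Optional

# Toy branch target buffer (BTB). The point is only that two branches at
# different addresses can share one entry when the index uses few bits.
BTB_INDEX_BITS = 12  # assumed index width


class ToyBTB:
    def __init__(self) -> None:
        self.entries: dict[int, int] = {}

    @staticmethod
    def index(branch_address: int) -> int:
        # Only the low bits select the entry, so distinct branches may alias.
        return branch_address & ((1 << BTB_INDEX_BITS) - 1)

    def train(self, branch_address: int, target: int) -> None:
        self.entries[self.index(branch_address)] = target

    def predict(self, branch_address: int) -> Optional[int]:
        return self.entries.get(self.index(branch_address))


btb = ToyBTB()
GADGET = 0x7F00_1234                     # hypothetical gadget address
attacker_branch = 0x0040_0ABC
victim_branch = 0x5555_0ABC              # same low 12 bits as the attacker's branch

for _ in range(100):
    btb.train(attacker_branch, GADGET)   # attacker repeats its own indirect jump

print(hex(btb.predict(victim_branch)))   # 0x7f001234
```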

Because branch predictors and caches exist in virtually all CPUs, Spectre affects an even wider range of architectures. So far, only MIPS and Elbrus lack a practical demonstration, but that doesn’t mean they’re immune: the attack scenario may simply be less obvious.

Conspiracy Theories

Some commentators consider the discovered vulnerability a Trojan-style backdoor planted in processors years ago. As one illustrative account put it, the key requirement for an effective implant is its ubiquity. In the case of Meltdown and Spectre, that requirement is more than fulfilled.

The authors of the study concede they’re probably not the first to notice this behavior. For example, in fall 2008, Nikolay Likhachev (aka Kris Kaspersky) was preparing to present at the Hack In The Box conference about a vulnerability he had discovered that allowed compromising Intel-based computers regardless of the operating system. Sounds familiar, doesn’t it?

“Processors have design flaws that let you exploit vulnerabilities both locally, at the keyboard, and remotely, regardless of what updates or applications are installed,” he wrote in the abstract. However, the talk was never delivered. Kris flew to the United States, went to work for McAfee, an Intel-controlled company, and later died in a crash under mysterious circumstances.

If we sharpen Occam’s razor a bit, another point stands out: it’s long been assumed that out-of-order (speculative) execution undermines the fundamental privilege separation known as protection rings. In this security model, certain CPU instructions can only be executed in the most privileged Ring 0, where the OS kernel rules (along with advanced debuggers like SoftICE and kernel-level rootkits).

The next rings, numbered 1 and 2, have lower privileges. They’re intended for drivers as well as hypervisors, emulators, sandboxes, and other virtualization systems. What actually runs there depends on the OS. For example, Windows 7 doesn’t use rings 1 and 2 at all—it only uses rings 0 and 3.

In any OS, all user processes run in an unprivileged protection ring (typically the outermost one). In a bare-metal system they reach system services and physical devices through drivers, and in a virtualized setup through the hypervisor when they need to execute privileged instructions. The kernel validates system calls (syscalls) and either services or rejects the application’s requests. To keep the data needed for these checks close at hand, it’s often mapped into a virtual-memory range adjacent to the user process’s address space. Those pages are marked with the kernel/supervisor privilege bit, so code running in userspace can’t read them directly.

For example, the kernel will prevent a trojan or other malware process from reading RAM at addresses previously allocated to the Chrome browser process. The trojan stays confined to its sandbox and can’t just snatch passwords from the browser. To pull that off, it would need some workaround, such as a separate loader that injects a DLL into the Chrome process and escalates the trojan’s privileges, bypassing the sandbox, UAC, and other defenses. That’s hard to do on an arbitrary system, so it’s usually easier to rely on social engineering and trick the user into performing the needed steps manually. This was the primary attack method before Meltdown and Spectre.

Thanks to these new vulnerabilities, the trojan no longer needs to take the long way or count on user carelessness. Instead, it abuses out-of-order (speculative) CPU execution to pull a copy of data from any protected address into its own memory space.

Vendor Responses and Stopgap Patches

The reaction to the news of fundamental vulnerabilities in processors was striking. Intel CEO Brian Krzanich learned about the issue in the summer of 2017 and began selling most of his company stock. By November 2017, he was down to only the mandatory stake he was contractually required to hold. Intel representatives initially denied any problems with their CPUs, then called them insignificant, and later—reluctantly—acknowledged them, publishing an evolving list of processors for which the vulnerability was confirmed.

AMD stated that architectural differences in its processors make it unlikely that Meltdown and Spectre variant 2 can be executed under real-world conditions. As a result, the company recommends installing patches from OS and software vendors, but only to address the potential Spectre variant 1 vulnerability. The main argument: the researchers did not test Meltdown on AMD systems, and no one else has reported issues.

Microsoft has released a patch for Windows 10. For other versions of Windows, you can manually download the update packages here, but hold off for now.

People are already reporting that KB4056892 causes boot hangs on many PCs with AMD processors (notably the Athlon 64 X2 series). You can roll it back (if you previously created a restore point) and then disable automatic updates via the Group Policy Editor (gpedit).

On Linux, the Meltdown issue was partially addressed with the KPTI patches. They’re not recommended for systems with AMD CPUs—you never know.

A more comprehensive approach to rethinking address space isolation mechanisms in Linux is discussed here.

Apple issued a succinct statement saying that “…all Mac and iOS systems are vulnerable, though no real-world attacks have been observed so far. As a precaution, download software only from trusted sources such as the App Store.” That advice would be reasonable—if not for the fact that Trojans have already been found in the App Store.

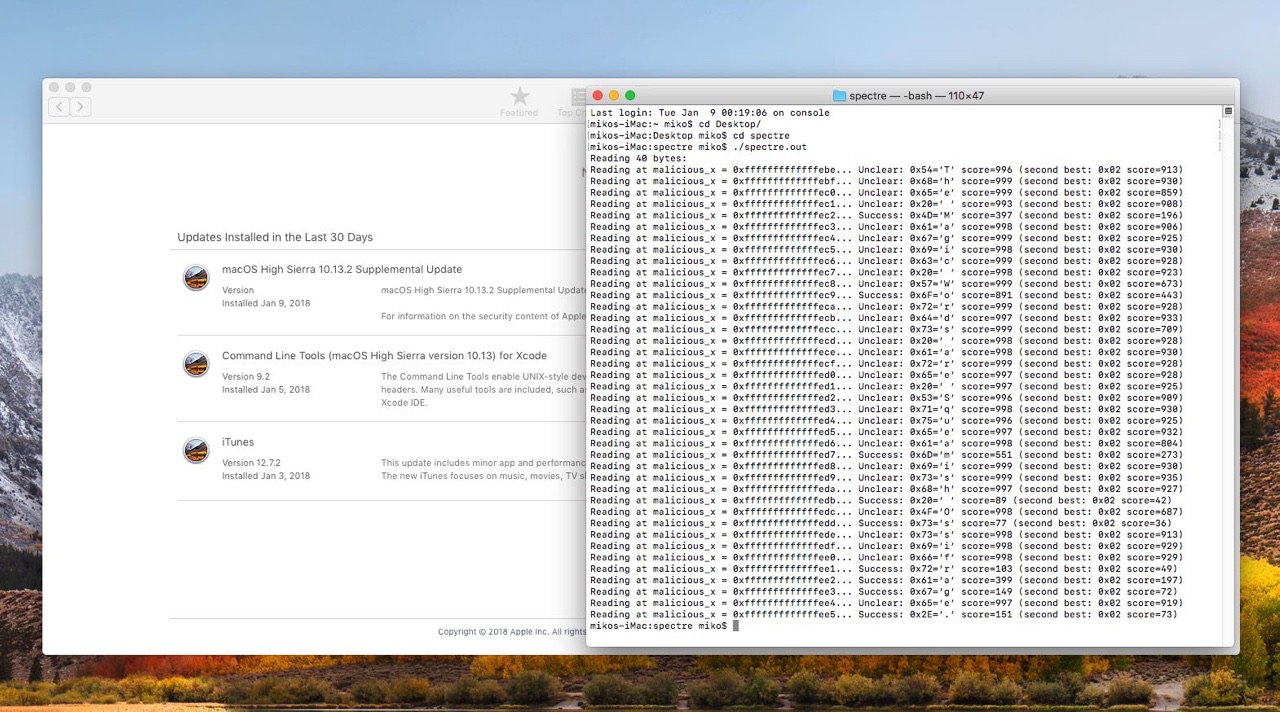

Updates are available for iOS 11.2, macOS 10.13.2, and Safari; however, even after installing the latest patches on macOS 10.13.2, the Spectre exploit still works.

It’s generally believed that watchOS doesn’t need patching, since Apple Watch uses the Apple S1 processor, and so far no Meltdown or Spectre variant has been shown to work on it.

Google cited its Project Zero work and released Android updates. As usual, how soon these reach smartphones and tablets outside the Nexus and Pixel lines depends on third-party manufacturers. Meanwhile, the company postponed Chrome browser fixes until January 23, the release date for version 64.

ARM has released a list of vulnerable SoCs, including a table of models with confirmed attack scenarios and links to patches.

It’s easy to see that this list excludes older ARM cores without out-of-order execution. As expected, they are immune to Meltdown and Spectre, since these attacks don’t work without out-of-order execution. Examples include the popular Cortex-A7, Cortex-A53, and the ARM11 family. The Raspberry Pi 2 Model B is built on the A7, while the newer 3 Model B uses the A53. Other Raspberry Pi models use ARM11, which also executes instructions in order.

In mobile devices, Cortex-A7 cores are found in SoCs from Qualcomm (MSM8226, MSM8626, Snapdragon 200, 210, and 400), MediaTek (MT6572, MT6582, MT6589, MT6592), Broadcom (BCM23550, BCM2836), and Allwinner (A20, A31, A83T).

Qualcomm’s Snapdragon 410, 412, 415, 425, 430, 435, 610, 615, 616, and 625—along with many other popular low- and mid-range chips—are based on the Cortex‑A53. Faster SoCs from various vendors already mix older (largely unaffected) cores with newer, vulnerable ones. For example, the Snapdragon 808 combines A53 and A57 cores. On the A57 cores, researchers were able to reproduce both Spectre scenarios and a modified Meltdown variant that ARM calls “Variant 3a.”

IBM acknowledged the issue and promised to release firmware updates on January 9 for systems with processors from the Power family.

Nvidia has released security updates for its GPU drivers for Windows, Linux, FreeBSD, and Solaris.

Mozilla has released a hotfix for Firefox, but candidly warns it’s only a stopgap. Chrome’s developers are preparing a similar workaround to make browser-based exploitation harder. In the meantime, users are advised to enable Site Isolation.

One way to check whether your computer is vulnerable to Meltdown and Spectre is to run a ready-made Windows PowerShell cmdlet, or a similar Python script on Linux.

Here’s another simple checker for Linux.
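Separately from the linked tools, Linux kernels from 4.15 onward (and some distribution backports) report mitigation status in files under `/sys/devices/system/cpu/vulnerabilities`. The sketch below only reads those files; it is not one of the checkers mentioned above, the function name `read_status` is an assumption, and on kernels without that directory it simply reports nothing.

```python
from pathlib import Path

# sysfs directory where newer kernels expose per-vulnerability status files
SYSFS_DIR = Path("/sys/devices/system/cpu/vulnerabilities")


def read_status(directory: Path = SYSFS_DIR) -> dict[str, str]:
    """Map each vulnerability file name to the kernel's one-line status."""
    if not directory.is_dir():
        return {}  # older kernel or non-Linux system: nothing to report
    return {
        entry.name: entry.read_text().strip()
        for entry in sorted(directory.iterdir())
        if entry.is_file()
    }


if __name__ == "__main__":
    for name, status in read_status().items():
        print(f"{name}: {status}")
```

The output reflects what the running kernel believes about its own mitigations, so it complements rather than replaces an actual exploit test.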

Antivirus Compatibility Issues

Windows patches are clashing with many antivirus and security products, leading to system instability, BSODs (Blue Screens of Death), and other nasty side effects. The root cause is that some AV vendors rely on questionable techniques—essentially the same kinds of abuses the new patches are designed to stop. For example, many AVs bypass Kernel Patch Protection, install their own hypervisors to intercept system calls (syscalls), and make unsafe assumptions about memory addresses.

In the end, Microsoft’s engineers solved the issue in an unusual way — they required antivirus vendors to add a registry entry that signals to Windows Update that everything is “all clear” and updates can proceed without issues:

Key="HKEY_LOCAL_MACHINE" Subkey="SOFTWARE\Microsoft\Windows\CurrentVersion\QualityCompat" Value="cadca5fe-87d3-4b96-b7fb-a231484277cc" Type="REG_DWORD" Data="0x00000000"

Initially, this registry entry was required only to install the Meltdown and Spectre patches, but then Microsoft engineers decided to tighten the screws even further. If the registry entry is missing, Windows will not install any updates at all, not just the Meltdown and Spectre fixes. In effect, users now have to choose what matters more: OS updates or a sketchy antivirus.

A regularly updated list of antivirus products that are compatible and incompatible with Windows, maintained by security professionals, is available here.

If the real issue is in CPUs, why are we patching operating systems, drivers, and apps?

The researchers explain that all the released patches are just software band-aids that make it harder to exploit the vulnerabilities—more precisely, the known exploit scenarios. Truly fixing these security holes requires a hardware change: shipping new processors without out-of-order instruction execution.

Clearly, even if it happens, it won’t be anytime soon. The development cycle for new CPU cores is far too long to allow rapid fixes—especially changes this drastic.

So vendors do what can be done quickly: rewrite the software. For example, Linux no longer maps kernel memory into the address space of user processes. This mitigates Meltdown, but it makes all system calls slower.
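The idea behind that change can be shown with a toy model in which page tables are plain dictionaries. The page numbers, frame numbers, and table names are hypothetical, and the real patches keep a small kernel entry stub mapped, which this sketch ignores.

```python
# Toy illustration of kernel page-table isolation. Each table maps a virtual
# page number to a (frame, supervisor_only) tuple; all numbers are made up.
USER_PAGES = {0x10: (0x200, False), 0x11: (0x201, False)}
KERNEL_PAGES = {0xFFFF0: (0x001, True), 0xFFFF1: (0x002, True)}

# Before the patch: one table per process. Kernel pages are mapped and
# protected only by the supervisor bit, which is checked late.
pre_patch_table = {**USER_PAGES, **KERNEL_PAGES}

# After the patch: the table active in user mode omits kernel pages, and the
# full table is switched in on every kernel entry (hence the slower syscalls).
user_mode_table = dict(USER_PAGES)
kernel_mode_table = {**USER_PAGES, **KERNEL_PAGES}


def translatable(table: dict, page: int) -> bool:
    """A transient load can only fetch data if a translation exists at all."""
    return page in table


print(translatable(pre_patch_table, 0xFFFF0))   # True
print(translatable(user_mode_table, 0xFFFF0))   # False
```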

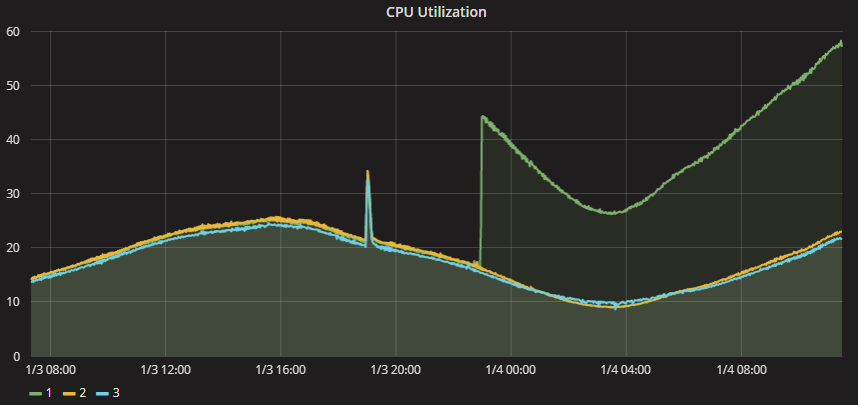

Depending on the specifics of a given system, the available patches slow down all operations that involve kernel transitions. For home users, such calls make up a small portion of workloads, so the slowdown is minor (around 2–3% in most scenarios). For enterprise customers running databases and virtualization platforms, performance degradation can reach up to 30%.
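A back-of-the-envelope model shows why the same patch can cost one workload a few percent and another tens of percent. Both inputs below are hypothetical, not measurements: the fraction of runtime spent in kernel transitions, and an assumed factor by which those transitions become slower.

```python
def relative_runtime(kernel_fraction: float, transition_slowdown: float) -> float:
    """Runtime after patching, relative to 1.0 before patching.

    kernel_fraction: share of the original runtime spent in kernel transitions.
    transition_slowdown: assumed factor by which those transitions get slower.
    """
    return (1 - kernel_fraction) + kernel_fraction * transition_slowdown


# Hypothetical desktop workload: 2% of time in kernel transitions, 2x slower.
print(round(relative_runtime(0.02, 2.0), 2))  # 1.02

# Hypothetical I/O-heavy server workload: 30% of time in kernel transitions.
print(round(relative_runtime(0.30, 2.0), 2))  # 1.3
```

The slowdown scales with how often the workload crosses into the kernel, which matches the gap between home and enterprise figures quoted above.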

Intel and Microsoft avoided making official comments on the issue for a long time, but eventually acknowledged the problem. An official post on Microsoft’s blog by Terry Myerson, Executive Vice President of the Windows and Devices Group, states that users on older operating systems and processors will be hit the hardest.

- Windows 10 users on newer CPUs (2016-era, Skylake, Kaby Lake and later) will barely notice any slowdown—the impact is measured in just a few milliseconds.

- Some Windows 10 users on older CPUs (2015-era, including Haswell and earlier) may see noticeable regressions in certain benchmarks and even a visible drop in overall system performance.

- Windows 8 and Windows 7 users (on 2015-era CPUs, including Haswell and older models) will almost certainly experience a significant performance hit.

- On Windows Server (regardless of CPU), the performance impact will also be substantial, especially for applications with heavy I/O workloads.

Intel CEO Brian Krzanich also addressed the issue during his keynote at the Consumer Electronics Show in Las Vegas. He emphasized that there is a performance impact, but it depends heavily on processor load and the specific workloads the CPU is running. Krzanich assured that Intel’s engineers are doing everything possible to minimize this impact.

As you’ve probably noticed, Intel and Microsoft avoid quoting hard numbers—and understandably so, given the wide variance. Still, for those who want specifics, we’ll provide a few examples.

Red Hat’s developers estimate a performance hit of 1–20%, with the exact impact depending on a variety of factors.

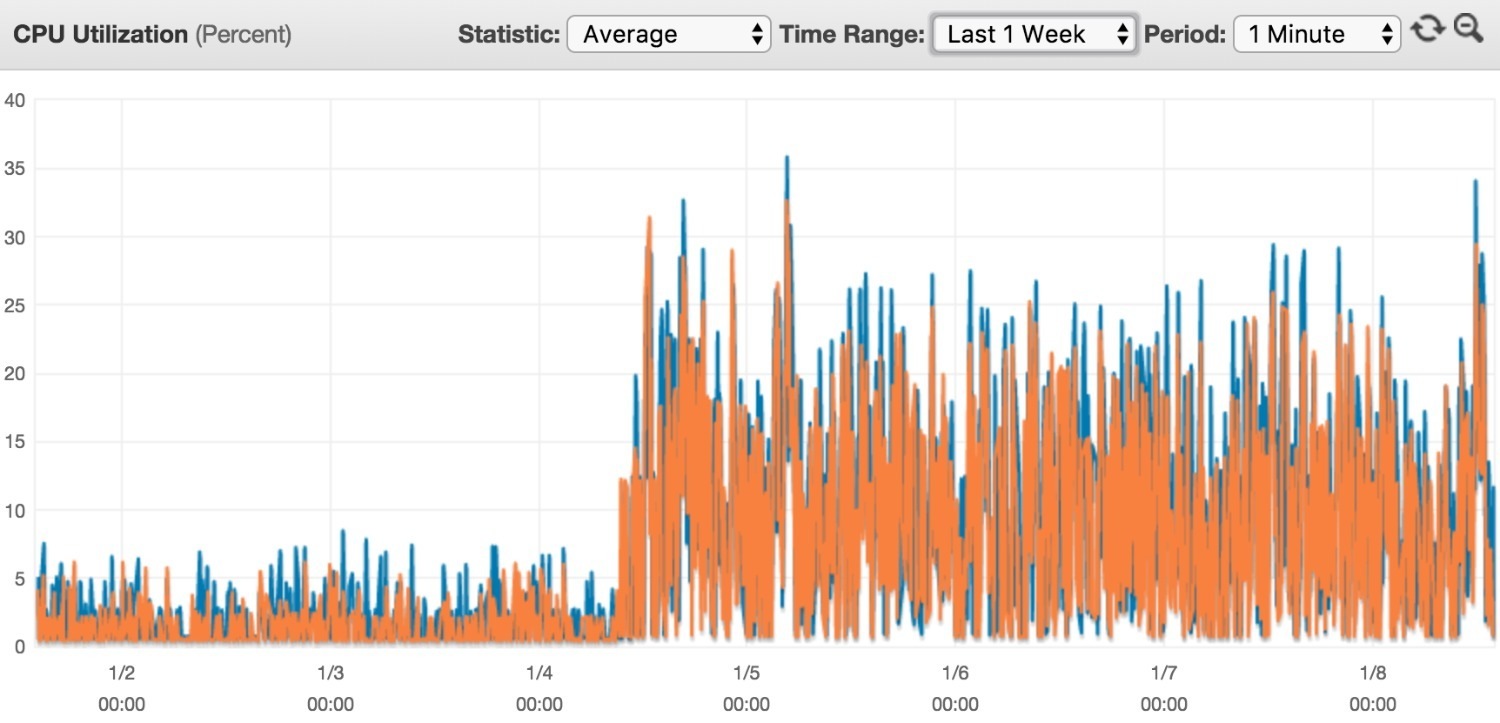

Epic Games and Branch Metrics reported that the Meltdown and Spectre patches have already caused serious operational issues. For example, the chart below shows that after installing the updates, load on one of the servers for the popular online shooter Fortnite jumped from 20% to 60%.

Housemarque Games also reported similar issues: the load on the Nex Machina servers increased by 4–5 times.

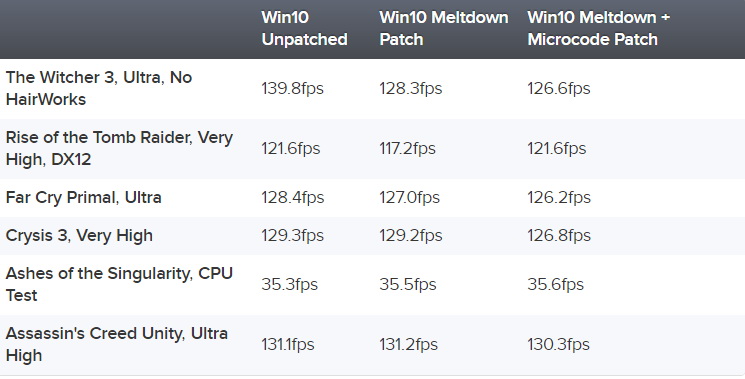

Nevertheless, many researchers say that regular users are unlikely to notice any slowdown at all. Gamers in particular worry about potential FPS drops, but according to Eurogamer’s recent testing, the impact is negligible. Testers at Dark Side of Gaming have reached similar conclusions.

You can also check out tests from the teams at TechSpot and ComputerBase, and simply Google for benchmarks of specific processors and use cases, since pretty much everyone jumped into benchmarking after the patches were released.

Conclusion

These days, proof‑of‑concept exploits for newly discovered vulnerabilities show up on GitHub quickly. For example, here’s a Meltdown exploit in C, and here’s a well‑commented Spectre exploit.

Whether to install the updates is up to you. The challenge in assessing the risk is that neither Meltdown nor Spectre leaves any traces in the system, so attacks are essentially undetectable (aside from direct checks against known signatures). The authors present three attack scenarios, but in theory there are many more.