
The full code of the resulting proof-of-concept is available in my GitHub repository.

A few words about LKM rootkits

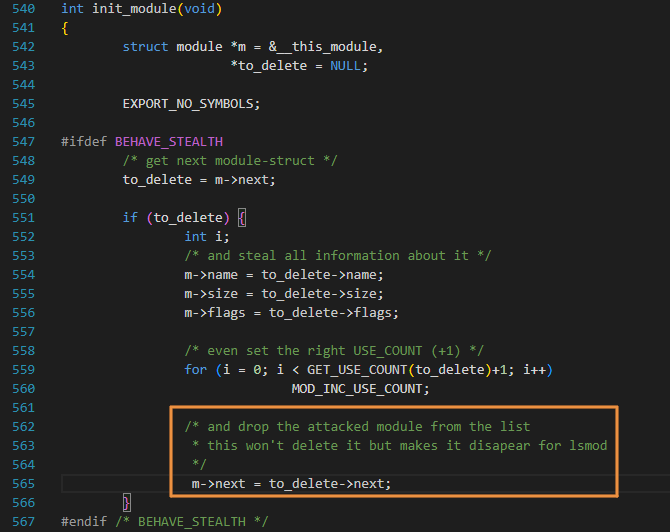

Since ancient times, kernel-level Linux rootkits (implemented as Loadable Kernel Modules, or LKMs) have been using the same mechanism to avoid detection: they simply remove their module descriptors (struct module) from the linked list of loaded kernel modules, which is, predictably, called modules. This trick prevents them from being displayed in procfs (/) and in the lsmod output, and also protects them from being unloaded with rmmod (the kernel thinks that no such module is loaded, so there is nothing to unload).

After removing themselves from the module list, some rootkits also overwrite certain artifacts in memory to make their traces harder to find. For example, starting with version 2.5.71, when an entry is removed from a linked list, Linux sets its next and prev pointers to LIST_POISON1 and LIST_POISON2 (originally 0x00100100 and 0x00200200; on modern x86-64 kernels, 0xdead000000000100 and 0xdead000000000122).

This helps catch bugs and can also be used to detect dangling descriptors of LKM rootkits that were previously removed from the module list. Of course, a smart rootkit will overwrite such ‘screaming’ values with something less noticeable, thus bypassing the check. For instance, KoviD LKM, created in 2022, hides itself this way.
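To make the check concrete, here is a minimal userland sketch (not code from the PoC) that tests a pair of list pointers for the poison values. It assumes x86-64, where the kernel adds POISON_POINTER_DELTA (0xdead000000000000) to the base constants 0x100 and 0x122; the macro and helper names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* x86-64 values: base constant + POISON_POINTER_DELTA (0xdead000000000000).
 * Early kernels used 0x00100100 / 0x00200200 instead. */
#define POISON_DELTA        0xdead000000000000ULL
#define LIST_POISON1_X86_64 (POISON_DELTA + 0x100)
#define LIST_POISON2_X86_64 (POISON_DELTA + 0x122)

/* Hypothetical helper: does this list_head look like it went through list_del()? */
static bool looks_unlinked(uint64_t next, uint64_t prev)
{
    return next == LIST_POISON1_X86_64 && prev == LIST_POISON2_X86_64;
}
```

A rootkit that rewrites both fields with plausible values passes this test, which is exactly the point of the KoviD trick.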

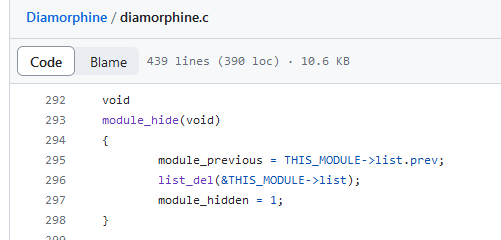

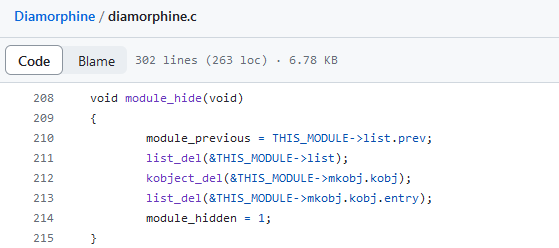

But even after being removed from the list of modules, rootkits can still be detected in sysfs, specifically in /. This pseudofile is even mentioned in the documentation of the Volatility Framework, which is used to analyze memory dumps. Analyzing it also makes it possible to detect careless rootkits. The documentation claims that the developers had never encountered a rootkit that deletes itself from both places; however, the above-mentioned KoviD LKM does exactly that. What’s even funnier: the very first committed version of Diamorphine already removed itself not only from the list of modules, but from sysfs as well.

KoviD, on the other hand, uses sysfs_remove_file() and sets its state to MODULE_STATE_UNFORMED. This constant indicates a ‘suspended’ state in which the kernel is still initializing and loading the module; accordingly, such a module cannot be unloaded without unpredictable and irreversible consequences for the kernel. This trick helps fool anti-rootkit tools (e.g. rkspotter) that rely on __module_address() while enumerating virtual memory contents (to be discussed below): this function ignores modules in the MODULE_STATE_UNFORMED state and returns NULL for their addresses.

Searching for LKM rootkit descriptors in RAM

This paper discusses techniques used to search for rootkits in the RAM of a ‘live’ system and in the kernel virtual address space. In theory, such a search can be performed not only by a kernel module, but also by a hypervisor (which is actually better from the protection rings perspective). But I am going to examine only the first variant since it’s easier in terms of PoC implementation and closer to the original. Also, hash-based malware detection in memory is beyond the scope of this article: my research is focused on aspects applicable specifically to LKM rootkits, not to malware in general. Primarily, it’s about research PoCs.

Original module_hunter

Back in 2003, an article by madsys called Finding hidden kernel modules (the extrem way) was published in Phrack #61 (Linenoise section); it described a technique used to search for LKM rootkits in memory. It was the epoch of kernels 2.2-2.4 and 32-bit computers; now Linux 6.14 is on the horizon, and it’s really difficult to find operational x86-32 hardware (which is currently suitable only for experiments).

So, it was a long time ago, and plenty of features have been removed from the kernel or added to it over the last 20 years. In addition, the kernel is well-known for its unstable internal API, and the original source code from Phrack now predictably refuses to compile for many reasons. But if you understand the very essence of the proposed idea, it can still be successfully implemented for modern hardware.

That Phrack article leaves aside many aspects, and without proper background, the author’s logic behind the solution may not be immediately clear. In general, the proposed method is somewhat similar to wandering through the RAM contents in the dark by touch: you walk through the memory region where module descriptors are allocated, and as soon as something resembling a valid struct is found, you output the contents of the potential fields in accordance with the known descriptor structure.

For example, you know that a pointer to the init function should be at a certain offset, while the sizes of various sections of the loaded module, its current state code, etc. are located at other known offsets. This means that the range of plausible values at such offsets is limited, and you can estimate whether the current address could be the beginning of struct module. In other words, you can devise checks that filter out obvious garbage and maximize the chance of finding what you are looking for.
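The idea can be modeled in userland on an ordinary buffer. In the sketch below, struct fake_module is a hypothetical, heavily simplified stand-in for struct module (the real layout depends on the kernel version and configuration), and the buffer plays the role of the memory region being walked. No address-validity checks are needed here because the whole buffer is readable.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical, simplified stand-in for struct module. */
struct fake_module {
    uint32_t state;      /* enum module_state: 0..3 */
    uint32_t pad;
    uint64_t init;       /* pointer to the init function */
    uint64_t size;       /* e.g. core_layout.size */
    char     name[56];
};

/* Plausibility check on the bytes at a candidate address. */
static int plausible(const unsigned char *p)
{
    struct fake_module m;
    memcpy(&m, p, sizeof(m));   /* avoids unaligned and aliasing problems */
    return m.state <= 3 && m.init != 0 && m.size != 0 && m.size % 4096 == 0;
}

/* Walk the buffer "by touch" with a fixed stride; return the offset of the
 * first candidate, or -1 if nothing plausible was found. */
static long scan(const unsigned char *buf, size_t len, size_t stride)
{
    for (size_t off = 0; off + sizeof(struct fake_module) <= len; off += stride)
        if (plausible(buf + off))
            return (long)off;
    return -1;
}
```

In the real tool, each probe must additionally be preceded by a check that the address is mapped, which is what most of this article is about.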

As you understand, since that Phrack publication, not only internal kernel functions have changed, but also a bunch of structures. The original implementation by madsys only checked whether the module name field contains normal text. On x86-64, you cannot afford this: the virtual address space is much larger and holds many more kinds of structures; as a result, a huge amount of data in memory would satisfy such a simple condition.
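For comparison, here is roughly what a name-only test looks like. This is my own userland sketch rather than madsys’s code, and it assumes the 64-bit MODULE_NAME_LEN of 56 bytes (64 - sizeof(unsigned long)). Note how little it takes to pass: any short printable string, even a single "{" character, is accepted.

```c
#include <ctype.h>
#include <stdbool.h>
#include <stddef.h>

#define MODULE_NAME_LEN_64 56   /* 64 - sizeof(unsigned long) on 64-bit */

/* Accept a non-empty run of printable characters terminated by NUL
 * within the name field. */
static bool name_looks_valid(const char *name)
{
    for (size_t i = 0; i < MODULE_NAME_LEN_64; i++) {
        unsigned char c = (unsigned char)name[i];
        if (c == '\0')
            return i > 0;
        if (!isprint(c))
            return false;
    }
    return false;   /* no terminator inside the field: not a C string */
}
```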

Another problem solved in module_hunter is checking whether the virtual address currently under investigation is mapped to physical memory. If it is, the module can access this address without the risk of a panic that would drag the entire system down. This check also has to be reworked since it’s architecture-specific.

rkspotter and problem with __module_address()

I had to find a way to traverse memory without crashing the system. And then I came across the above-mentioned rkspotter. It checks for several hiding techniques used by LKM rootkits, which allows it to succeed even if one of its detection methods fails. The problem, however, is that this anti-rootkit relies on the __module_address() function, which was removed from the list of exported functions in 2020 and isn’t available to modules since Linux 5.4.118.

The idea of rkspotter is as follows: the program traverses the module mapping space (the memory region where LKMs get loaded) and uses this function to find out which module the current address belongs to. For a given address, __module_address() immediately returns a pointer to the respective module descriptor, so information about an LKM can be obtained from a single address. Operations such as checking whether the virtual address translation is valid are performed under the hood.
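Conceptually, the lookup answers the question "which module’s memory contains this address?". The userland model below illustrates it with a linear search over a hypothetical array of module ranges; the kernel uses a more efficient lookup structure, so treat this only as a sketch of the semantics. It does reproduce one detail that matters for rootkit hunting: as in the kernel’s implementation, a module in the MODULE_STATE_UNFORMED state is treated as if it were not there.

```c
#include <stddef.h>
#include <stdint.h>

/* Same order as the kernel's enum module_state. */
enum mod_state { MOD_LIVE, MOD_COMING, MOD_GOING, MOD_UNFORMED };

/* Hypothetical record: where a module lives in memory. */
struct mod_range {
    const char *name;
    uint64_t base, size;
    enum mod_state state;
};

/* Find the module whose memory contains addr; UNFORMED modules are hidden. */
static const struct mod_range *lookup(const struct mod_range *mods, size_t n,
                                      uint64_t addr)
{
    for (size_t i = 0; i < n; i++) {
        const struct mod_range *m = &mods[i];
        if (addr >= m->base && addr - m->base < m->size)
            return m->state == MOD_UNFORMED ? NULL : m;
    }
    return NULL;
}
```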

Of course, I could just copy-paste __module_address(), but out of curiosity, I wanted to reincarnate madsys’s original idea. What other pitfalls, or rather exciting challenges, lay ahead on the way to a new implementation?

What has to be fixed

To write a new working tool, I have to examine everything that has changed in the kernel over the past 20 years and is related to dangling LKM descriptors. In other words, I have to fix all the compilation errors that would be encountered along the way.

Overall, my objectives are roughly as follows:

- fix calls to altered kernel APIs. The original code is actually very short, and the only kernel API it uses relates to procfs; so, this task won’t take much time;

- identify the fields in struct module that are most suitable for detecting a structure removed from the general list of modules;

- examine and take into account changes in memory management in x86-64 compared to i386; and

- take into account that on the 64-bit architecture, virtual address space is distributed completely differently, and it’s incomparably greater: 128 TB for the kernel part and the same for the user space vs. 1 GB and 3 GB, respectively, on the 32-bit architecture (by default).

Time to move on to the most exciting part!

Reincarnating module_hunter

Fields, fields…

When you are dealing with x86-64, a simple module name validity check isn’t enough. Experiments showed that additional checks are required; otherwise, the output would contain too much unnecessary information. False positives suck. After examining the typical contents of various fields, I selected the following checks:

- the memory that corresponds to the state field should contain one of the values valid for this field: MODULE_STATE_LIVE, MODULE_STATE_COMING, MODULE_STATE_GOING, or MODULE_STATE_UNFORMED;

- the values in the init and exit fields should point to the module mapping space (on x86-64) or be NULL;

- at least one of the init, exit, list.next, and list.prev fields must not be NULL;

- list.next and list.prev are canonical: either NULL or LIST_POISON1/LIST_POISON2 (but this isn’t certain and may be omitted later); and

- the module size (core_layout.size for versions older than 6.4) must be non-zero and a multiple of PAGE_SIZE.

Important: the above list isn’t carved in stone and can be adjusted in the future when more sophisticated rootkits appear or for systems where it’s not sufficient for some reason.
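The checks listed above can be combined into a single predicate. The sketch below is a userland illustration under several assumptions: the field values have already been copied into a hypothetical struct candidate, the module area bounds are the x86-64 defaults without KASLR (0xffffffffa0000000 to 0xffffffffff000000), and the poison values are the x86-64 ones. A kernel implementation would use MODULES_VADDR, MODULES_END, PAGE_SIZE, and LIST_POISON1/LIST_POISON2 instead of these constants.

```c
#include <stdbool.h>
#include <stdint.h>

/* Illustrative x86-64 constants (assumed layout, no KASLR). */
#define MOD_VADDR 0xffffffffa0000000ULL
#define MOD_END   0xffffffffff000000ULL
#define PG_SIZE   4096ULL
#define POISON1   0xdead000000000100ULL
#define POISON2   0xdead000000000122ULL

/* Hypothetical snapshot of the struct module fields being inspected. */
struct candidate {
    uint32_t state;                 /* enum module_state */
    uint64_t init, exit;            /* function pointers */
    uint64_t list_next, list_prev;  /* list.next / list.prev */
    uint64_t size;                  /* core_layout.size (pre-6.4) */
};

static bool in_modules(uint64_t p) { return p >= MOD_VADDR && p < MOD_END; }
static bool ptr_ok(uint64_t p)     { return p == 0 || in_modules(p); }
static bool list_ok(uint64_t p)    { return p == 0 || p == POISON1 || p == POISON2; }

static bool looks_like_module(const struct candidate *c)
{
    if (c->state > 3)                               /* LIVE..UNFORMED only */
        return false;
    if (!ptr_ok(c->init) || !ptr_ok(c->exit))       /* module area or NULL */
        return false;
    if (!c->init && !c->exit && !c->list_next && !c->list_prev)
        return false;                               /* all NULL: garbage */
    if (!list_ok(c->list_next) || !list_ok(c->list_prev))
        return false;                               /* the optional check */
    return c->size != 0 && c->size % PG_SIZE == 0;
}
```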

While you are still searching for an efficient combination of checks, false positives caused by various garbage in memory look something like this:

    . . .
    [ 5944.085392] nitara2: 0xffffffffc011c300 “serio_raw”
    [ 5944.085435] nitara2: 0xffffffffc0120ff0 “{”
    [ 5944.085512] nitara2: 0xffffffffc0129680 “i2c_piix4”
    . . .
    [ 5944.087444] nitara2: 0xffffffffc01fd040 “fb_sys_fops”
    [ 5944.089131] nitara2: 0xffffffffc02affb0 “`”,
    [ 5944.089342] nitara2: 0xffffffffc02c30c0 “cfg80211”
    [ 5944.091874] nitara2: 0xffffffffc03cb700 “kvm”
    . . .
    [ 5944.094188] nitara2: 0xffffffffc04be0c0 “nitara2”
    [ 5944.670378] nitara2: end check (total gone 66060288 steps)

Virtual memory in x86

Now that the fields required to detect an unlinked structure have been identified, it’s time to find out which part of the kernel virtual address space should be searched.

The original module_hunter traversed through the vmalloc area: in the past, memory for modules and their descriptors was allocated there. Its size on x86-32 was only 128 MB, which significantly reduced the number of addresses for enumeration (and time required for this) compared to the full address space of 4 GB (even if you only take its kernel part of 1-2 GB).

In the x86-64 address space, the separate virtual memory area allocated for kernel modules (already mentioned above) is 1520 MB in size (the same for 48- and 57-bit addresses); it’s called the module mapping space. Its virtual addresses are bounded by the MODULES_VADDR and MODULES_END macros. Further experiments showed that both module descriptors and module code and data end up in this area. Great! No need to go through the huge virtual address space whose size is measured in terabytes.
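A quick sanity check of the numbers, assuming the default x86-64 layout without KASLR from the kernel’s memory-map documentation (module mapping space from 0xffffffffa0000000 up to 0xffffffffff000000). With other configurations the start of the area can differ, which is another reason to rely on the macros. The helper name scan_steps() is hypothetical.

```c
#include <stdint.h>

/* Assumed x86-64 module mapping space bounds (no KASLR). */
#define MOD_VADDR 0xffffffffa0000000ULL
#define MOD_END   0xffffffffff000000ULL

/* Number of candidate addresses to probe for a given stride. */
static uint64_t scan_steps(uint64_t start, uint64_t end, uint64_t stride)
{
    return (end - start) / stride;
}
```

With an 8-byte stride, that is 199,229,440 candidate addresses: a lot, but tiny compared with the terabytes of the full kernel half of the address space.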

Mapping physical pages and paging levels

Here is the stumbling block that hindered implementing the kernel memory traversal concept on modern systems.


If terms like “multilevel page tables” or paging levels make no sense to you, but you are still eager to delve deeper into architectural stuff, you can check, for instance, OSDev or Linux documentation. The most difficult – and rewarding! – task is to examine implementations for different processor types.

In the days of yore, madsys described the problem as follows:

By far, maybe you think: “Umm, it’s very easy to use brute force to list those evil modules”. But it is not true because of an important reason: it is possible that the address which you are accessing is unmapped, thus it can cause a paging fault and the kernel would report: “Unable to handle kernel paging request at virtual address”.

This problem could be solved without delving into the magic of virtual-to-physical address translation: like rkspotter, I could use __module_address(). Unfortunately, this function doesn’t fit for two reasons: (1) on modern kernels, it is no longer exported, so it can’t be used elegantly; and (2) it isn’t really suitable for the original objective. In addition, without it, my code will be more self-sufficient and less likely to break on future kernels.

Overall, it makes sense to check the availability of a memory page on my own. To do this, it’s necessary to understand how the check is performed when the processor accesses a virtual address, and what structures are involved in this process.

In reality, data accessed by a program must physically reside somewhere. When a virtual address is accessed, that address must be translated into a physical address in RAM so that the hardware knows what data to read. Right now, your computer can simultaneously run several programs whose code starts somewhere around 0x400000 – but in physical memory, these data are completely different and located at different physical addresses.

The memory management unit (MMU) handles all this behind the scenes. It may be absent (e.g. in embedded systems that don’t need it), as was often the case in the past. The MMU makes it possible to implement virtual address spaces on a device (i.e. to protect the memory of some programs from others). Without this protection, the executable memory of an operating system could easily be overwritten from a user app; this would either crash the system or enable some exciting tricks (e.g. intercepting system calls or interrupts).

When an MMU is present, each user program believes that the entire address space belongs solely to it; accordingly, it can’t overwrite anyone else’s memory, and no one can overwrite its memory. This is the essence of a process’s virtual address space. On different platforms, it’s divided between the user app and the kernel in different proportions. The kernel part is mapped to the same physical pages in all processes, since there is only one kernel in the system. The same applies to shared libraries, since there is no need to multiply copies of the same object in limited physical memory.


For instance, the highest valid userspace address in x86-64 with four-level paging is 0x00007fffffffffff, while the lowest kernel address is 0xffff800000000000. The hole between them is huge (16 million terabytes); addresses within it are called non-canonical (i.e. they are invalid for certain hardware configurations, although, in theory, they could be used). Unused bits in virtual addresses made it possible to introduce features such as Pointer Authentication Code (PAC) on ARM and Linear Address Masking (LAM, PDF) on Intel.
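Canonicality is easy to test: all unused upper bits must repeat the highest implemented bit. A minimal sketch, assuming va_bits is 48 for four-level and 57 for five-level paging (the function name is mine):

```c
#include <stdbool.h>
#include <stdint.h>

/* Canonical: bits 63..(va_bits-1) are either all zeros or all ones. */
static bool is_canonical(uint64_t addr, unsigned va_bits)
{
    uint64_t top = addr >> (va_bits - 1);                   /* bits 63..va_bits-1 */
    uint64_t all_ones = (UINT64_C(1) << (65 - va_bits)) - 1;
    return top == 0 || top == all_ones;
}
```

Incidentally, the x86-64 LIST_POISON values (0xdead000000000100 and 0xdead000000000122) are non-canonical on purpose, so any dereference faults immediately.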

Paging levels

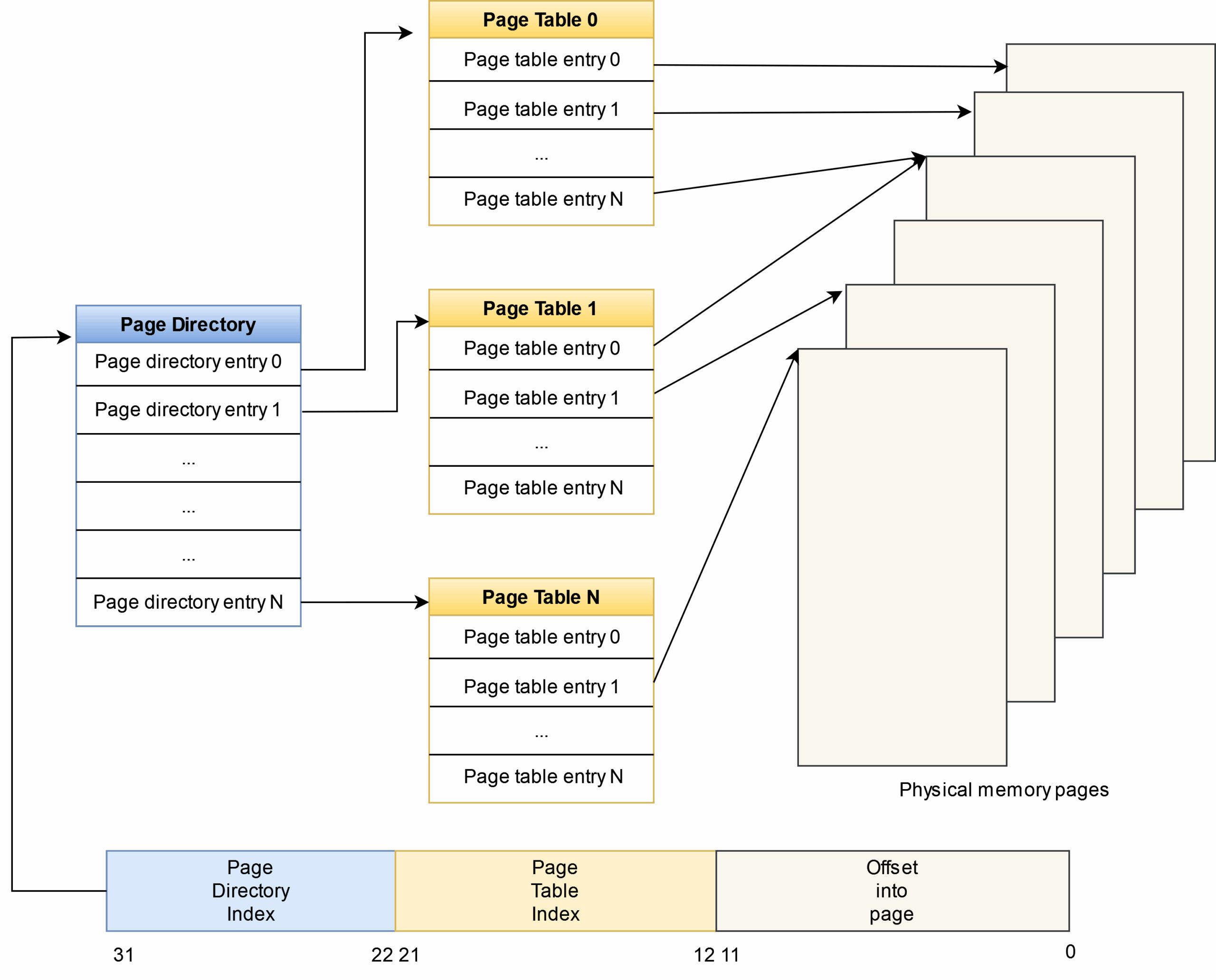

Let’s start with a simpler topic and find out how a 32-bit address is translated using two-level paging that provides 4 GB per virtual address space. The address is divided into three parts that represent an index in the Page Directory Table, an index in the Page Table, and an offset within the page.
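The split can be written out directly. A small sketch for classic non-PAE i386 with 4 KB pages (the struct and function names are illustrative):

```c
#include <stdint.h>

/* Two-level i386 paging without PAE: 10 + 10 + 12 bits. */
struct va32_parts { uint32_t pde_idx, pte_idx, offset; };

static struct va32_parts split_va32(uint32_t va)
{
    struct va32_parts p = {
        .pde_idx = va >> 22,            /* bits 31..22: page directory index */
        .pte_idx = (va >> 12) & 0x3ff,  /* bits 21..12: page table index */
        .offset  = va & 0xfff,          /* bits 11..0: offset within the page */
    };
    return p;
}
```

The page table index computed here equals (va & 0x003ff000) >> 12, an expression that appears in madsys’s code.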

The physical address of the desired page will be obtained only at the last stage of walking through page tables. Also, as you can see in the diagram below, several page table entries (PTE) can correspond to the same pages in RAM. For example, this feature is used to allocate zeroed pages to a process (see ZERO_PAGE).

Some pages of the virtual address space may have no physical mapping at all (i.e. no physical pages correspond to their page table entries). If such a memory area is accessed, a page fault occurs; for a user process, it ends with a segmentation fault. In other cases, a mapping could exist, but the page might not be present in physical memory at the moment (e.g. it could be swapped out to disk due to a memory shortage).


Sometimes the page at address zero (the one that occupies virtual addresses 0...) can be mapped and valid. As a result, the null pointer is treated as a regular one and can be dereferenced without any segfaults or panics. At some point, this feature facilitated the exploitation of a kernel vulnerability.

Table entries (i.e. PTEs/PDEs) contain various information about the page: whether it is currently present in memory, what the access rights to it are, whether it belongs to user space or the kernel, etc. Definitions of the various bits can be found in the kernel source code, but keep in mind that they vary between architectures.
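As an x86 example, a few of these bits are decoded below. The bit positions match the kernel’s x86 _PAGE_BIT_* definitions as far as I know, but the macro names and helpers are mine, the 52-bit physical address width is an assumption, and other architectures lay out their entries differently.

```c
#include <stdbool.h>
#include <stdint.h>

/* Selected x86 page-table entry bits. */
#define PTE_PRESENT  (1ULL << 0)   /* page is in physical memory */
#define PTE_RW       (1ULL << 1)   /* writable */
#define PTE_USER     (1ULL << 2)   /* accessible from user mode */
#define PTE_ACCESSED (1ULL << 5)
#define PTE_DIRTY    (1ULL << 6)
#define PTE_NX       (1ULL << 63)  /* no-execute (x86-64 and PAE only) */

/* Physical frame address: bits 12..51 (assuming 52-bit physical addresses). */
static uint64_t pte_frame(uint64_t e)
{
    return e & 0x000ffffffffff000ULL;
}

/* Present, kernel-only, and executable. */
static bool pte_is_kernel_exec(uint64_t e)
{
    return (e & PTE_PRESENT) && !(e & PTE_USER) && !(e & PTE_NX);
}
```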

Accessing the right address without crashes

In module_hunter, the following piece of code checks whether a mapping actually exists:

    int valid_addr(unsigned long address)
    {
        unsigned long page;

        if (!address)
            return 0;

        page = ((unsigned long *)0xc0101000)[address >> 22]; // pde
        if (page & 1) {
            page &= PAGE_MASK;
            address &= 0x003ff000;
            page = ((unsigned long *) __va(page))[address >> PAGE_SHIFT]; // pte
            if (page)
                return 1;
        }

        return 0;
    }

For those who have no idea of how memory works on i386, constants like 0xc0101000 and 0x003ff000 resemble some dark magic. The first value can be Googled, but if you don’t understand the process, it won’t really help.

As you can see from the diagram above, the anti-rootkit expects to find the page directory (i.e. the table of Page Directory Entries (PDEs)) at the address 0xc0101000: ten more years had to pass before address randomization appeared in the kernel. Then the tool reads the entry whose index is obtained by shifting the target virtual address right by 22 bits: as the diagram shows, the high-order part of the address (bits 22-31) is the index in the page directory.

If the obtained PDE is valid, it, in turn, points to a page table. More precisely, the code doesn’t just compare the PDE with zero: if (page & 1) tests the page presence bit (PAGE_PRESENT, bit 0).

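Next, the flag bits are cleared from the PDE with PAGE_MASK, and __va() converts the resulting physical address of the page table into a kernel virtual address. Bits 12-21 of the desired address serve as the index in the page table; in the code above, they are extracted with a bitwise AND with 0x003ff000 followed by a shift by PAGE_SHIFT (12 bits). Reading the entry at this index yields either the respective PTE or zero (if this virtual address isn’t mapped to any physical address in the context of your process).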
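To see the whole walk in one place, here is a userland model of the same logic. It is only an illustration: the page directory is passed in instead of being read from the hard-coded 0xc0101000, and a small array of fake "physical frames" together with the hypothetical toy_va() helper stands in for physical memory and __va(). Like the original, it treats any non-zero PTE as mapped; a stricter version would test the present bit at this level too.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define ENTRIES     1024          /* entries per i386 table */
#define NFRAMES     8             /* size of the toy "physical memory" */
#define PRESENT     0x1u
#define PAGE_MASK32 0xfffff000u

/* Toy physical memory: frame number -> one 4 KB table of 1024 entries. */
static uint32_t frames[NFRAMES][ENTRIES];

/* Hypothetical stand-in for __va(): physical address -> readable pointer. */
static const uint32_t *toy_va(uint32_t phys)
{
    uint32_t frame = phys >> 12;
    return frame < NFRAMES ? frames[frame] : NULL;
}

/* Same steps as valid_addr(), with the page directory passed in. */
static bool toy_valid_addr(const uint32_t *pgdir, uint32_t address)
{
    const uint32_t *pt;
    uint32_t entry;

    if (!address)
        return false;
    entry = pgdir[address >> 22];               /* PDE: bits 31..22 */
    if (!(entry & PRESENT))
        return false;
    pt = toy_va(entry & PAGE_MASK32);           /* drop flags, "map" the table */
    if (!pt)
        return false;
    entry = pt[(address & 0x003ff000u) >> 12];  /* PTE: bits 21..12 */
    return entry != 0;                          /* non-zero PTE, as in the original */
}
```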

To support larger address spaces, more paging levels can be required. A diagram showing how an address is processed at three levels can be found in the kernel documentation. Depending on the hardware, several Page Directories can be used instead of the one in the above example. Accordingly, more tables have to be traversed before the desired physical page can be reached. Address translation can be performed, for example, in the following order (in Linux terminology):

- Page global directory (PGD);

- P4D (in the case of five-level paging);

- Page upper directory (PUD);

- Page middle directory (PMD); and

- Page table.

According to the documentation, a kernel built with five-level page table support will still run on hardware with only four paging levels; in this case, the P4D level is folded at runtime.
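On x86-64 with 4 KB pages, each level indexes a 512-entry table, so every level consumes 9 bits above the 12-bit page offset. The sketch below (function name mine) produces the indices from the top level down: with levels = 4, the shifts are 39, 30, 21, and 12; with levels = 5, an extra top-level index is taken from bits 48-56.

```c
#include <stdint.h>

/* Fill idx[] with per-level table indices, top level first.
 * Assumes 4 KB pages and levels == 4 or 5; returns the number of indices. */
static int split_va64(uint64_t va, int levels, unsigned idx[5])
{
    int n = 0;
    for (int shift = 12 + 9 * (levels - 1); shift >= 12; shift -= 9)
        idx[n++] = (unsigned)((va >> shift) & 0x1ff);  /* 9 bits per level */
    return n;
}
```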

Important: when you access each subsequent paging level, you have to check for its presence in memory. This can be done using the following macros:

- pgd_present()

- p4d_present()

- pud_present()

- pmd_present()

- pte_present()

They check whether the page present bit is set, and, depending on the current level, some other bits can be checked as well. A full check at each level can be performed as follows:

    struct mm_struct *mm = current->mm;

    pgd = pgd_offset(mm, addr);
    if (!pgd || pgd_none(*pgd) || !pgd_present(*pgd))
        return false;

    p4d = p4d_offset(pgd, addr);
    . . .

Something very similar can be found in the kernel code:

- in kern_addr_valid() (which was removed a couple of years ago); and

- in dump_pagetable(), spurious_kernel_fault(), and mm_find_pmd().

With regards to different numbers of paging levels, the value used by the current kernel can be found in the CONFIG_PGTABLE_LEVELS option. Support for five-level paging was introduced in 2017 in versions 4.11-4.12 (see the commit and an article on LWN).
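Here is a userland model of such a walk: starting from the top table, it checks the present bit at every level before descending, and it works for any number of levels. It is a toy: entries hold ordinary pointers tagged with bit 0 instead of physical frame numbers, so no __va()-style conversion is needed, and it ignores huge-page entries, which a real walker has to treat as leaves. All names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define PRESENT_BIT 0x1ULL
#define NENTRIES    512

/* Toy table: each entry is 0 or (pointer to next-level table | PRESENT_BIT). */
typedef struct table { uint64_t e[NENTRIES]; } table_t;

/* Walk 'levels' tables top-down, refusing to descend into a missing level,
 * the way pgd_present() ... pte_present() guard a real page-table walk. */
static bool toy_walk(const table_t *top, uint64_t va, int levels)
{
    const table_t *t = top;
    for (int l = levels - 1; l >= 0; l--) {
        uint64_t entry = t->e[(va >> (12 + 9 * l)) & 0x1ff];
        if (!(entry & PRESENT_BIT))
            return false;          /* hole at this level: stop here */
        if (l == 0)
            return true;           /* leaf PTE is present */
        t = (const table_t *)(uintptr_t)(entry & ~PRESENT_BIT);
    }
    return false;
}
```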

Proof-of-Concept

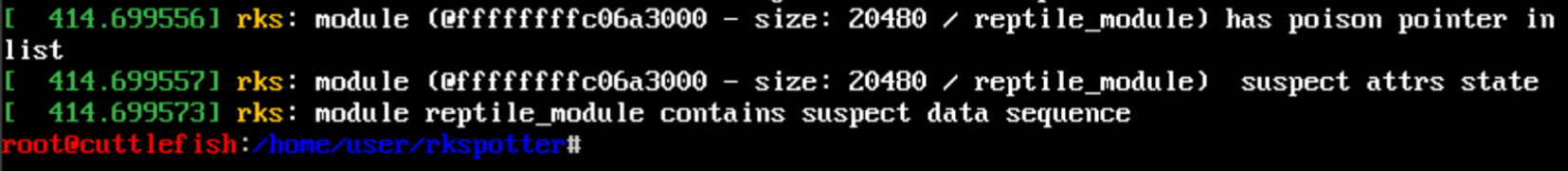

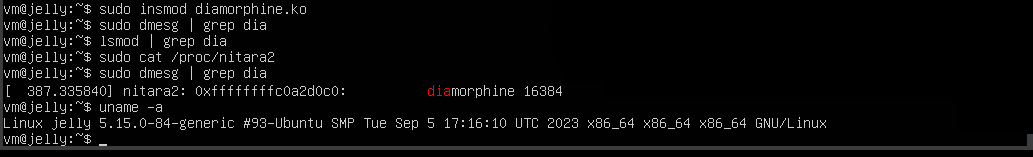

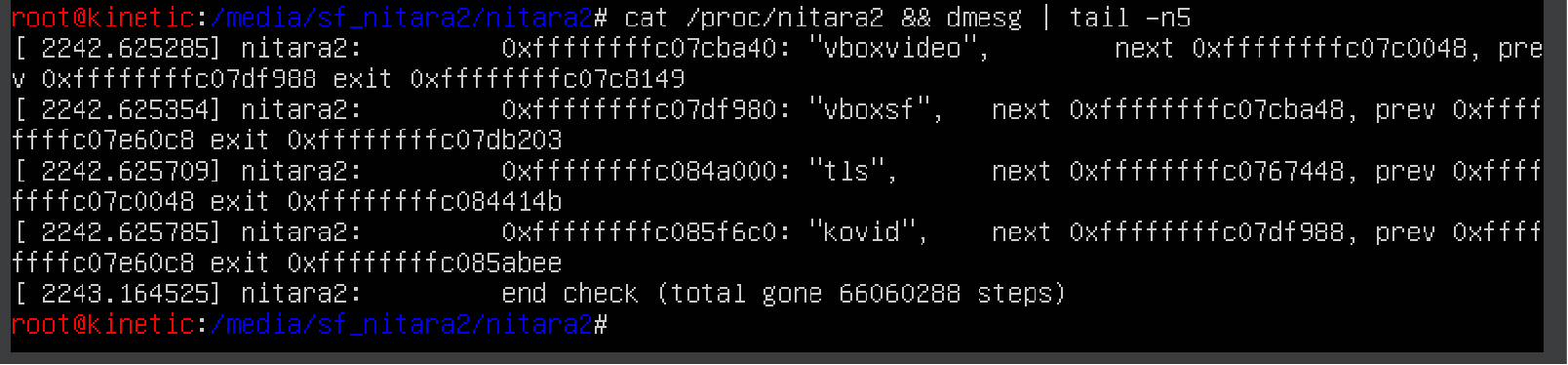

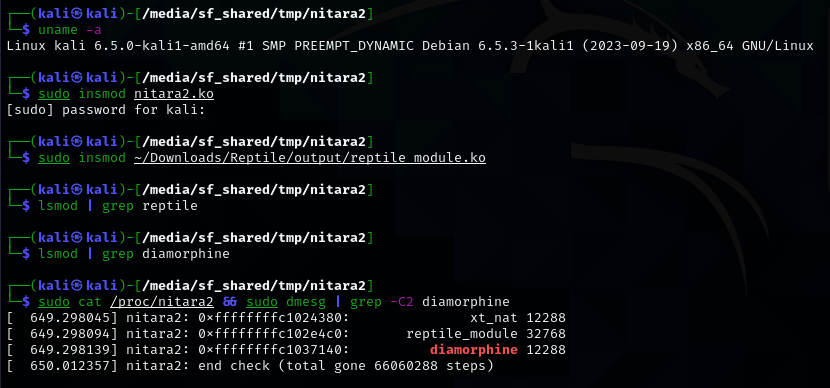

Let’s test the resulting tool on several systems. If the PoC interaction interface seems weird to you, don’t judge it too harshly: in the original utility, it was exactly the same.

The lsmod output, as expected, doesn’t show anything suspicious, but my reincarnated tool detects the lurking enemy.

Outro: portability

If you take the current version of the code, remove the check for CONFIG_X86_64, and compile it, let’s say, on Raspberry Pi with Linux 6.1, it would build as normal and even run. But it won’t detect anything, which isn’t surprising: the program cannot adequately operate on other architectures due to differences in their virtual address spaces. For instance, AArch64 features not only a different memory layout (e.g. its memory area for kernel modules is 2 GB in size instead of 1520 MB), but also a different number of paging levels. It varies from two to four depending on the page size and the number of address bits used, which, in turn, affects non-canonical address areas that are checked by the anti-rootkit.
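To illustrate how the number of levels depends on these parameters, here is the formula that, as far as I know, the arm64 kernel uses in its ARM64_HW_PGTABLE_LEVELS() macro. Each level resolves page_shift - 3 bits (a table entry is 8 bytes), so the level count is the ceiling of (va_bits - page_shift) / (page_shift - 3). The function name is mine.

```c
/* AArch64 translation levels for a given VA width and page size
 * (page_shift = 12, 14 or 16 for 4 KB, 16 KB or 64 KB pages).
 * (va_bits - 4) / (page_shift - 3) is an integer form of
 * ceil((va_bits - page_shift) / (page_shift - 3)). */
static int arm64_levels(int va_bits, int page_shift)
{
    return (va_bits - 4) / (page_shift - 3);
}
```

For example, 4 KB pages with 39-bit addresses give three levels, while 64 KB pages with 42-bit addresses give only two.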

With regard to compatibility with different kernel versions, the code runs successfully on several kernels (4.4, 5.14, 5.15, and 6.5 on x86-64). Of course, this doesn’t guarantee that it won’t crash on some interim release or at some point in the future. If you encounter such failures, feel free to open an issue on GitHub!