It has emerged—or more precisely, been officially confirmed—that Western Digital has for a long time been quietly shipping SMR (Shingled Magnetic Recording) drives, flatly refusing to disclose this to bewildered and angry owners of RAID arrays that fell apart as a result. What’s at the heart of the scandal, why did shingled recording provoke such a backlash, and how do WD and Seagate differ in their approaches? Let’s unpack it.

The Shingled Drive Scandal

If you haven’t been closely following data storage news, some recent developments may have slipped under the radar. Here’s the timeline.

April 2

It started with research by Christian Franke. In a detailed write-up with tests and reports, he showed that some WD Red drives marketed for multi-bay NAS use actually employ undocumented SMR (Shingled Magnetic Recording) and explained the specific issues this causes in arrays. Because of the well-known characteristics of SMR, mixing SMR disks with “conventional” CMR (Conventional Magnetic Recording) drives led to array degradation and the SMR disks dropping out. Worse, rebuilding such arrays often proved impossible because the SMR drive would repeatedly drop out during recovery.

This study wasn’t the first, second, or even the tenth warning sign. Users had been asking “is this SMR?” for quite some time. For example, the Reddit thread “WD Red 6tb WD60EFAX SMR? Bad for Existing raid in DS918+ with WD Red 6tb WD60EFRX PMR drives?” was already discussing the issue. If you look, you can find dozens of similar discussions on Reddit.

It would be fine if the manufacturer disclosed this behavior. It would even be acceptable if they simply answered “yes” to a direct question: does model X use SMR? Unfortunately, the only response users received was: “We do not disclose details of the internal functioning of our drives to end customers.” Here’s a quote from their support response:

I understand your concern regarding the PMR and SMR specifications of your WD Red drive.

Please be informed that the information about the drive is whether use Perpendicular Magnetic Recording (PMR) or Shingled Magnetic Recording (SMR), is not something that we typically provide to our customers. I am sorry for the inconvenience caused to you.

What I can tell you that the most products shipping today are Conventional Recording (PMR). We began shipping SMR (Shingled Magnetic Recording) at the start of 2017. For more information please refer the link mentioned below.

Here’s another version of the answer—the same thing, just worded differently.

We have received your inquiry whether internal WD Red drive WD40EFAX would use SMR technology. I will do my best here to assist and please accept our sincere apologies for the late reply.

Please note that information on which of our drives use PMR or SMR is not public and is not something that we typically provide to our customers. What we can tell you is that most WD products shipping today are Conventional Recording (PMR) — please see additional information below. However, we began shipping SMR (Shingled Magnetic Recording) at the start of 2017.

Source

In short: WD treats the choice between PMR and SMR as non-public information and does not share it with customers. It says only that most of its shipping products use conventional recording, and that it began shipping SMR drives in early 2017.

This is roughly the response Christian Franke received. He didn’t stop there, and ended up getting the following reply:

Just a quick note. The only SMR drive that Western Digital will have in production is our 20TB hard enterprise hard drives and even these will not be rolled out into the channel.

All of our current range of hard drives are based on CMR Conventional Magnetic Recording.

With SMR Western Digital would make it very clear as that format of hard drive requires a lot of technological tweaks in customer systems.

With regards,

Yemi Elegunde

Enterprise & Channel Sales Manager UK

Western Digital®

WDC UK, a Western Digital company

The response claims that the only WD drive with SMR is a 20 TB model intended for data centers. According to a WD representative, all other WD drives use CMR.

Further attempts to get any clear information resulted in a proposal to “discuss the issue with engineers and hard drive specialists via a teleconference.” The teleconference, however, never happened. The users turned to journalists.

April 14

So Christian reached out to journalists at the industry publication Blocks & Files. They investigated the issue and published the article “Western Digital admits 2TB-6TB WD Red NAS drives use shingled magnetic recording.”

Why is SMR in NAS‑class drives a problem? The article gives several examples. In particular, users replacing failed 6 TB WD Red drives with the newer WD60EFAX models saw unusually long rebuild times for SHR‑1 and RAID 5 arrays—ranging from two to eight days. Some rebuilds failed outright: the new drive was simply kicked out of the array as if it were defective. Clearly, the new drives perform far worse than the previous model and, in some cases, don’t accomplish the intended task at all. It’s a straightforward case of misleading consumers—yet to this day Western Digital representatives have refused to comment on the situation.
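To put those rebuild times in perspective, here is a rough back-of-the-envelope sketch. It assumes (my assumption, not a figure from the article) that a rebuild writes the replacement drive's full 6 TB once, and it uses decimal units; the function name is made up for illustration.

```python
SECONDS_PER_DAY = 86_400

def implied_mb_per_s(capacity_tb: float, days: float) -> float:
    """Average write speed (MB/s) needed to write capacity_tb in the given number of days."""
    # 1 TB = 1,000,000 MB in decimal units (assumption: vendor-style capacities)
    return capacity_tb * 1_000_000 / (days * SECONDS_PER_DAY)

for days in (2, 8):
    print(f"{days} days -> {implied_mb_per_s(6, days):.1f} MB/s")
```

Under these assumptions, a two-day rebuild works out to roughly 35 MB/s on average and an eight-day rebuild to roughly 9 MB/s, far below what a healthy 3.5-inch drive sustains on sequential writes.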

For the first time, journalists managed to get a clear, concrete answer from Western Digital: “Current WD Red 2–6 TB models use drive-managed SMR (DMSMR). WD Red 8–14 TB drives are based on CMR. … You’re right that we don’t specify the recording technology in the WD Red documentation. … In our testing of WD Red drives, we did not observe RAID rebuild issues attributable to SMR.”

In its defense, WD offered the following argument: “In typical home and small-business NAS environments, workloads are bursty, which leaves enough time for garbage collection and other maintenance tasks.” Reporters at Blocks & Files countered that many NAS workloads are anything but “typical” as the vendor defines them. The controversy continued to unfold.

April 15

The article hit a nerve—many users have been frustrated about this for a long time. The wave of online coverage that followed the original piece prompted Blocks & Files to continue the investigation.

The article Shingled hard drives have non-shingled zones for caching writes describes the tape-like layout of shingled storage and explains that every SMR drive includes a buffer that uses conventional magnetic recording (CMR). There’s a direct analogy to modern SSDs: you have slower TLC or even QLC NAND, but part of it is used as a pseudo‑SLC cache to absorb writes. Likewise, SMR hard drives include CMR regions used to accelerate writes. As a result, when a user tests the drive with a popular tool like CrystalDiskMark, it creates an impression that everything is normal: the drive appears to read and write data without any surprises.

The surprise shows up once the amount of data written exceeds the size of the CMR area or the disk fills up, forcing the drive to compact data on the fly. In a NAS, this can happen in at least two scenarios: during a rebuild of a RAID 5–class array and similar setups that use parity, and when writing a large volume of data (for example, creating and saving a regular backup). In these cases, the host-visible write speed drops dramatically—by several times, or even by one to two orders of magnitude. In my own tests, the write speed when overwriting a full drive fell to 1–10 MB/s while writing a single 1.5 TB file (a backup of my system). I find that speed unacceptable.
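The effect of overflowing the CMR area can be approximated with a very simple two-speed model. This is only a sketch: the 30 GB cache size and the 180 MB/s fast path are hypothetical values chosen for illustration, the 10 MB/s slow path is the upper end of what I measured, and the model ignores any background flushing the drive does while idle.

```python
def write_seconds(total_gb: float, cache_gb: float,
                  fast_mb_s: float, slow_mb_s: float) -> float:
    """Seconds to write total_gb when only the first cache_gb go at full speed."""
    fast_part_gb = min(total_gb, cache_gb)      # absorbed by the CMR area
    slow_part_gb = total_gb - fast_part_gb      # written while the drive compacts
    return fast_part_gb * 1000 / fast_mb_s + slow_part_gb * 1000 / slow_mb_s

def average_mb_s(total_gb: float, cache_gb: float,
                 fast_mb_s: float, slow_mb_s: float) -> float:
    """Host-visible average throughput over the whole write session."""
    return total_gb * 1000 / write_seconds(total_gb, cache_gb, fast_mb_s, slow_mb_s)

# Hypothetical drive: 30 GB CMR area, 180 MB/s into the cache, 10 MB/s once it is full.
small = average_mb_s(20, 30, 180, 10)      # fits in the cache
backup = average_mb_s(1500, 30, 180, 10)   # a 1.5 TB backup file
print(f"20 GB write:   {small:.1f} MB/s")
print(f"1500 GB write: {backup:.1f} MB/s")
```

With these made-up parameters, a short benchmark-sized write sees the full 180 MB/s, while the 1.5 TB backup averages barely above the slow-path speed. That is why a quick benchmark run tells you almost nothing about how the drive behaves under a real backup.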

In the same article, the author described what happens when you try to rebuild a RAID 5/6 array with a replacement drive that uses SMR. The heavy random I/O quickly overflows the drive’s CMR cache; the controller can’t keep up and starts returning service-unavailable/busy errors. After a short time (about forty minutes), the drive becomes completely unresponsive, and the RAID controller drops it from the array and marks it as failed.

Interestingly, nothing like this happens with other array types—RAID 0/1—or when creating a new RAID 5/6 array. It looks like Western Digital’s engineers simply didn’t test the new drives in a RAID 5/6 rebuild scenario, sticking to only the most basic use cases.

April 15

In another article, “Seagate ‘submarines’ SMR into 3 Barracuda drives and a Desktop HDD,” the reporters kept pushing the SMR story, reporting that Seagate had been doing the same.

Seagate has long used SMR in its 2.5-inch drives, as well as in the Archive series and desktop Barracuda models. The company has never concealed this. At the same time, Seagate’s NAS-oriented drives (the IronWolf and IronWolf Pro lines) do not use SMR, which the company has confirmed. So there’s no scandal here: anyone who does even minimal due diligence can tell exactly which drive they’re buying and for what purpose. Seagate documents SMR specifics in the technical documentation for the respective drives; we’ll come back to that later—let’s return to the timeline for now.

April 16

The next day, reporters found that some Toshiba drives also use SMR: “Toshiba desktop disk drives have shingles too.” I doubt many people will care, since Toshiba’s share of 3.5-inch drives is negligible. Still, it’s worth checking which Toshiba models use SMR. As of now, that includes the 4 TB and 6 TB 3.5-inch Toshiba P300 Desktop PC and DT02 drives, as well as all 2.5-inch MQ04-generation models.

April 20

Western Digital is clearly on edge. In the article “SMR in disk drives: PC vendors also need to be transparent” (https://blocksandfiles.com/2020/04/20/western-digital-smr-drives-statement/), the company published an official response claiming there’s nothing wrong with the drives if you use them correctly. A more recent version is on the Western Digital blog (https://blog.westerndigital.com/wd-red-nas-drives/). The takeaway is that rebuilding RAID 5/6 arrays with WD Red drives counts as improper use, so don’t do that. And NAS and RAID are not the same thing, so don’t conflate them. If you want to put a drive in a NAS as part of a RAID 5/6 array, you should buy something higher-end: say, from the Ultrastar DC line, or WD Gold, or at least WD Red Pro. Seriously, that’s exactly what it says: “If you are encountering performance that is not what you expected, please consider our products designed for intensive workloads. These may include our WD Red Pro or WD Gold drives, or perhaps an Ultrastar drive.” I won’t reproduce the entire output of Western Digital’s PR department here; you can read it via the link above.

April 21

As you’d expect, Seagate—the main competitor—weighed in. In the article Seagate says Network Attached Storage and SMR don’t mix, a company representative emphasizes that Seagate has never used shingled magnetic recording (SMR) in its IronWolf and IronWolf Pro NAS drives and does not recommend using SMR drives in network storage.

That said, as the earlier Blocks & Files report showed, Seagate did not clearly flag the use of shingled magnetic recording (SMR) to buyers of some Barracuda desktop drives either, which came as an unpleasant surprise to a lot of users.

April 23

Journalists couldn’t ignore WD’s blog post. The article Western Digital implies WD Red NAS SMR drive users are responsible for overuse problems asks some very reasonable questions: how could a user—even in theory—know about potential issues if WD kept the very fact that SMR was used in NAS drives under wraps? And if you’re going to accuse users of “misusing” the drives, then please formally define the “correct” and “incorrect” use cases for each specific model. In the older WD60EFRX, for example, RAID 5/6 rebuilds were a “proper” use case, while in the newer WD60EFAX they became “improper.” That’s acceptable—if you tell customers. But customers weren’t informed, and WD declined to answer the puzzled users’ questions.

April 24

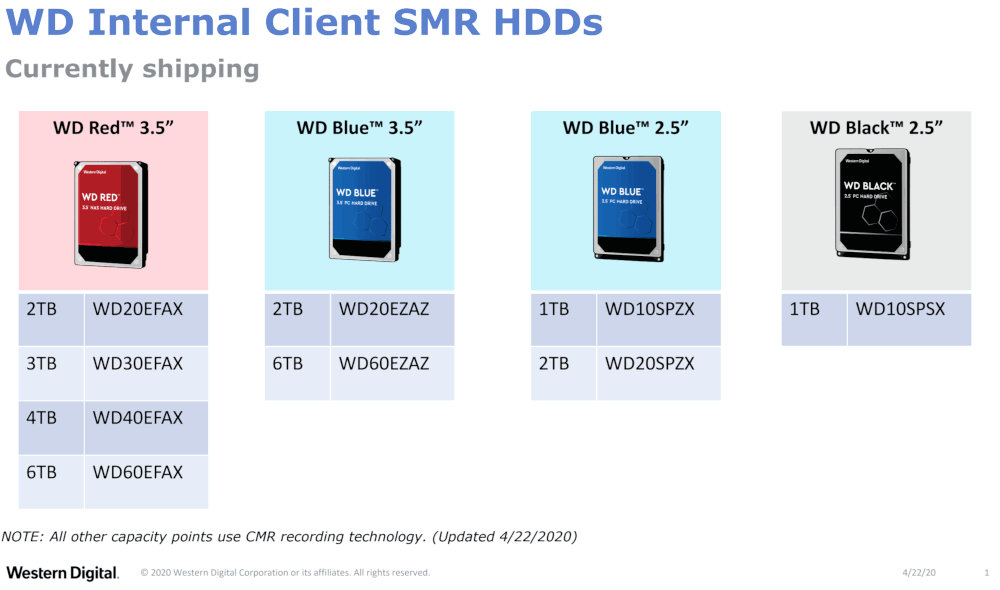

Western Digital has conceded: the company has published a complete list of 3.5-inch drives that use SMR.

Shingled Magnetic Recording (SMR) is still used in WD Red drives intended for NAS, but after unprecedented public pressure the manufacturer—grudgingly and with caveats—agreed to stop keeping it under wraps. Now you can make an informed choice: buy an older WD Red model without SMR, or a newer one with SMR. Or switch to a competitor that doesn’t use SMR in NAS drives at all. Or go for a helium-filled drive with a capacity of 8 TB or more.

While the public is busy celebrating, let’s take a look at drives that use shingled magnetic recording (SMR): are they truly all bad, or is the cost savings justified in some scenarios?

Seagate’s SMR: the same old problem

Seagate is the simplest case. If you buy a Seagate drive, you’re likely getting a shingled magnetic recording (SMR) model. As of today (this could change, and quickly), here’s how Seagate’s lineup looks.

The Seagate Archive and Barracuda Compute families are typically SMR (shingled magnetic recording). Drives of this type are used in the Expansion Desktop and Backup Plus Hub external models at capacities up to and including 8 TB.

The Barracuda Pro lineup doesn’t use SMR. Also, all Seagate drives 10 TB and up use conventional magnetic recording (CMR), which explains the notable price gap between Seagate’s 8 TB and 10 TB external models.

All NAS drives in the IronWolf and IronWolf Pro lineups use conventional magnetic recording (CMR).

What is shingled magnetic recording (SMR)? A detailed illustrated article is available on Seagate’s website—I highly recommend checking it out.

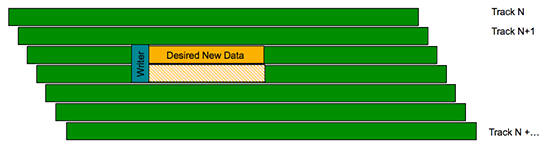

In shingled recording, tracks are written with overlap—like roof shingles—rather than strictly side by side, which increases data density. Using shingled recording can lower manufacturing costs and produce a quieter, lighter drive by requiring fewer spinning platters and read/write heads.

What about the reliability of SMR drives? When the technology first entered the market, users had significant concerns about their long-term reliability. Today, it’s fair to say that, when used in the typical role of archival storage, SMR drives are no less reliable than same-capacity CMR drives—and in some cases may even be more reliable thanks to a simpler mechanical design.

Seagate’s take on SMR keeps write speeds high during the initial fill of the drive. But once the drive is full and you start overwriting data—a very common pattern for recurring backups—the rewrite speed drops dramatically compared to writing to a fresh drive. It’s similar to the early SSDs: writing to an empty block is fast, but updating a single byte requires reading an entire (and fairly large) block, modifying it, and writing it back. With SMR, to overwrite a single track you first have to read all the subsequent tracks in the shingled band, then write the target track and restore the following tracks from the buffer.

Here’s what Seagate, the manufacturer, has to say on the matter:

SMR comes with a drawback: if you need to overwrite or update part of the data, you have to rewrite not only that fragment but also the data on the subsequent tracks. Because the write head is wider than the non-overlapped portion of a track, it encroaches on neighboring tracks, which then must be rewritten as well (see Fig. 3). As a result, changing data on a “lower” track forces you to fix the nearest shingled track, then the next one, and so on—potentially until the entire platter has been rewritten.

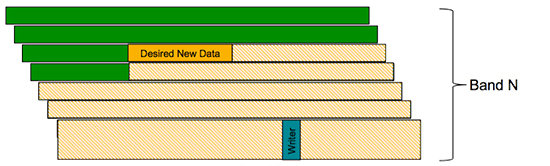

For this reason, the tracks on an SMR drive are grouped into small sets called bands. Only the tracks within the same band overlap one another (see Fig. 4). Thanks to this grouping, when some data needs to be updated you don’t have to rewrite the entire platter—only a limited number of tracks—significantly simplifying and speeding up the process.
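The read-modify-write cycle inside a band can be illustrated with a toy model. This is a sketch under simplifying assumptions of my own: each write clobbers exactly one following track, a band is just a Python list, and the class and method names are made up for illustration rather than taken from any drive firmware.

```python
class ShingledBand:
    """Toy model of one SMR band: writing track i damages track i + 1."""

    def __init__(self, track_count: int):
        self.tracks = [f"old-{i}" for i in range(track_count)]
        self.physical_writes = 0

    def _write_track(self, index: int, data: str) -> None:
        self.tracks[index] = data
        self.physical_writes += 1
        # The wide write head overlaps the next track in the band and destroys it.
        if index + 1 < len(self.tracks):
            self.tracks[index + 1] = None

    def update(self, index: int, data: str) -> None:
        """Safely update one track: buffer the following tracks, then rewrite them."""
        saved = self.tracks[index + 1:]          # read the rest of the band into a buffer
        self._write_track(index, data)           # write the target track
        for position, old in enumerate(saved, start=index + 1):
            self._write_track(position, old)     # restore each following track in order


band = ShingledBand(track_count=5)
band.update(2, "new")
print(band.tracks)            # all five tracks intact, track 2 replaced
print(band.physical_writes)   # three track writes for one logical update
```

In this model, updating track `i` of an `n`-track band always costs `n - i` physical track writes, so the closer the change is to the start of the band, the more expensive it gets. Keeping bands small bounds that cost, which is exactly the point of the grouping described above.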

This design causes overwrite speeds to drop from a nominal 180 MB/s down to 40–50 MB/s. You can try to work around it by enabling write caching and writing in large blocks at least as big as a single band. For example, a LaCie 2.5″ 4 TB test unit wrote to a blank drive at about 130 MB/s. After the first full write, sustained write speed fell to 20–40 MB/s; notably, neither formatting nor repartitioning helped. The 5 TB version performed even worse, with overwrite speeds dropping below 10 MB/s. Enabling write caching and using large write blocks helped somewhat, but even then the original high write speed could not be restored.

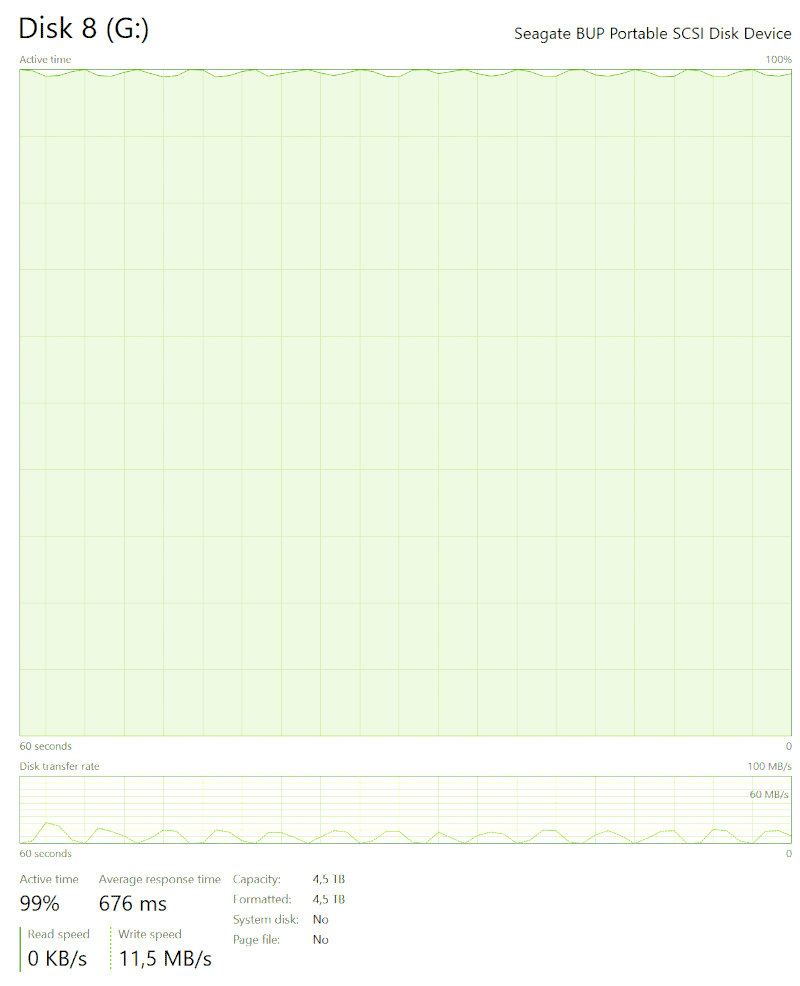

I’ll illustrate how SMR behaves during overwrites. A caveat: you’ll only see numbers like these after the entire drive has been filled at least once and the amount written in a single session exceeds the size of the CMR cache (and neither wiping nor reformatting the drive will improve performance). In the first screenshot (write caching disabled), the write speed swings between 0 and 10 MB/s. That speed reveals what’s happening under the hood: the controller reads a band (a block of shingled tracks) into a buffer, modifies one track, then writes the entire band back to disk. For the next track, it does the same thing again. One Reddit user described the result like this: “People say SMR is slow, but few realize how slow it really is. Imagine trying to push an elephant through a keyhole. Got it? Now imagine someone on the other side is pushing back. That’s an SMR write.”

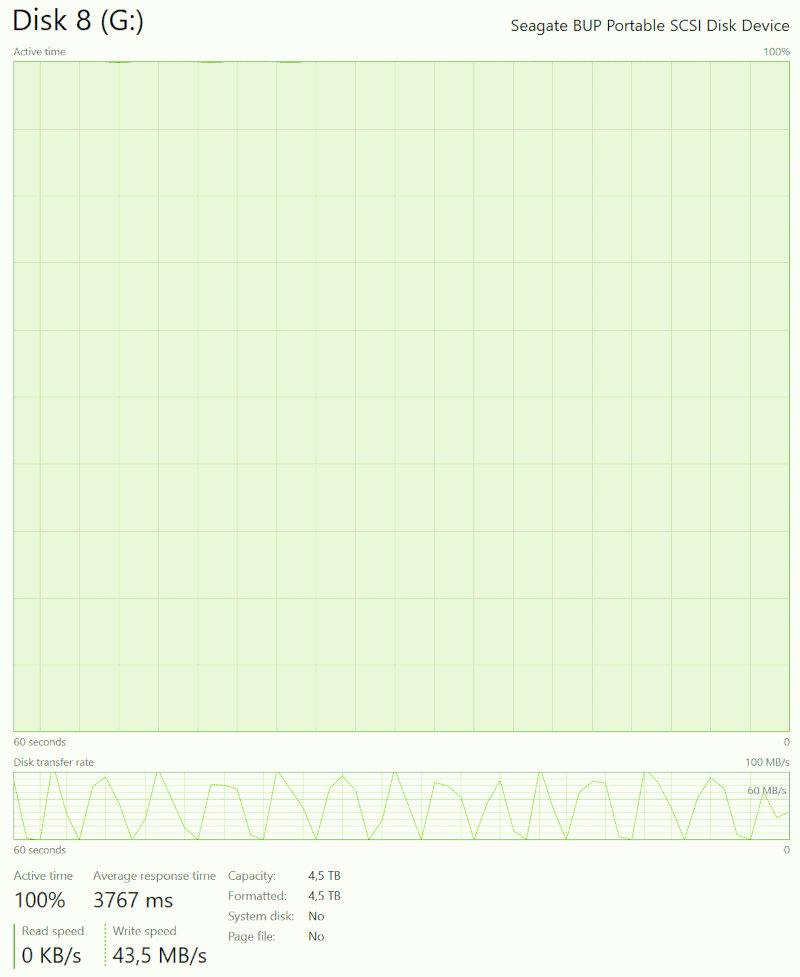

In the second screenshot, it’s the same scenario but with write caching enabled. Now the drive’s cache holds not just a single track but the entire band—or at least it can, depending on the actual band size and how it compares to the cache size, which we don’t know. If the drive were smarter (or at least supported TRIM, like WD’s), it would realize it needs to rewrite the entire band and do exactly that. Instead, it reads the band into cache again, modifies the data, and writes it back. As a result, the write speed swings from zero up to the maximum (about 100 MB/s in this region of the disk).

The screenshots clearly show one of the issues with SMR drives: extremely slow rewrites of large data sets. Given that these disks (the one shown is a Seagate Backup Plus 5TB) are often sold for backup storage, where the data size can range from hundreds of gigabytes to several terabytes, this level of performance on the device’s primary—and essentially only—use case is simply unacceptable.

What about read speed? A well-known issue with SMR is poor seek performance due to the very high track density. As a result, all else equal, random access on SMR models is slower than on non‑shingled disks, unlike sequential access. But the problems don’t end there. In SMR drives without TRIM, just like in SSDs, there’s logical-to-physical address translation. The drive tries to write new data to a small CMR buffer first; once that fills up, it appends to the next free shingled band. Logical addresses are thus remapped to physical ones, which creates internal fragmentation. On SSDs that’s mostly irrelevant, but with mechanical drives you get a double whammy: files are smeared across free bands, and random access is already slow because of tighter head-positioning requirements. In the worst case you end up with a drive where reading data feels like trying to push an elephant through a keyhole.

Not all models are created equal. The external drive mentioned above uses a Seagate Barracuda 5TB (ST5000LM000), one of the first 2.5-inch models with SMR. Its shingled recording implementation is painfully immature, reminiscent of the earliest SSDs that would tank in write speed immediately after the first full-capacity write. In my opinion, a drive in this state should never have been released to market.

So when is it actually viable? Is it even possible to use SMR drives without running into noticeable pain points? It turns out that even within the limits of SMR, you can build quite decent drives (with obvious constraints and a narrow set of use cases) if you get the software side right—namely, the controller firmware. And the first to really nail this wasn’t Seagate, the technology’s pioneer, but its main competitor, Western Digital, the protagonist of this article.

WD’s SMR implementation: TRIM support, just like SSDs

As of today, there’s one crucial difference between Seagate’s and Western Digital’s SMR implementations: WD drives support the TRIM command. Using TRIM changes a lot—if not everything. But does TRIM support actually mean SMR drives are suitable for use in RAID arrays? Let’s dig into the details.

Western Digital’s early experiments with SMR were strictly under wraps: to this day, the company hasn’t acknowledged whether the 4 TB WD My Passport used the technology. Most observers suspect it did—its poor random write speeds and shaky reliability raised predictable doubts. Still, no one is certain, not even the technically savvy folks at AnandTech. SMR is only mentioned in passing as a possibility for that model. Interestingly, that drive’s SMART attributes claim TRIM support, but you can’t actually enable it. By contrast, the newer WD My Passport 5TB (and the WD_Black 5TB) both advertise TRIM and actually support it.

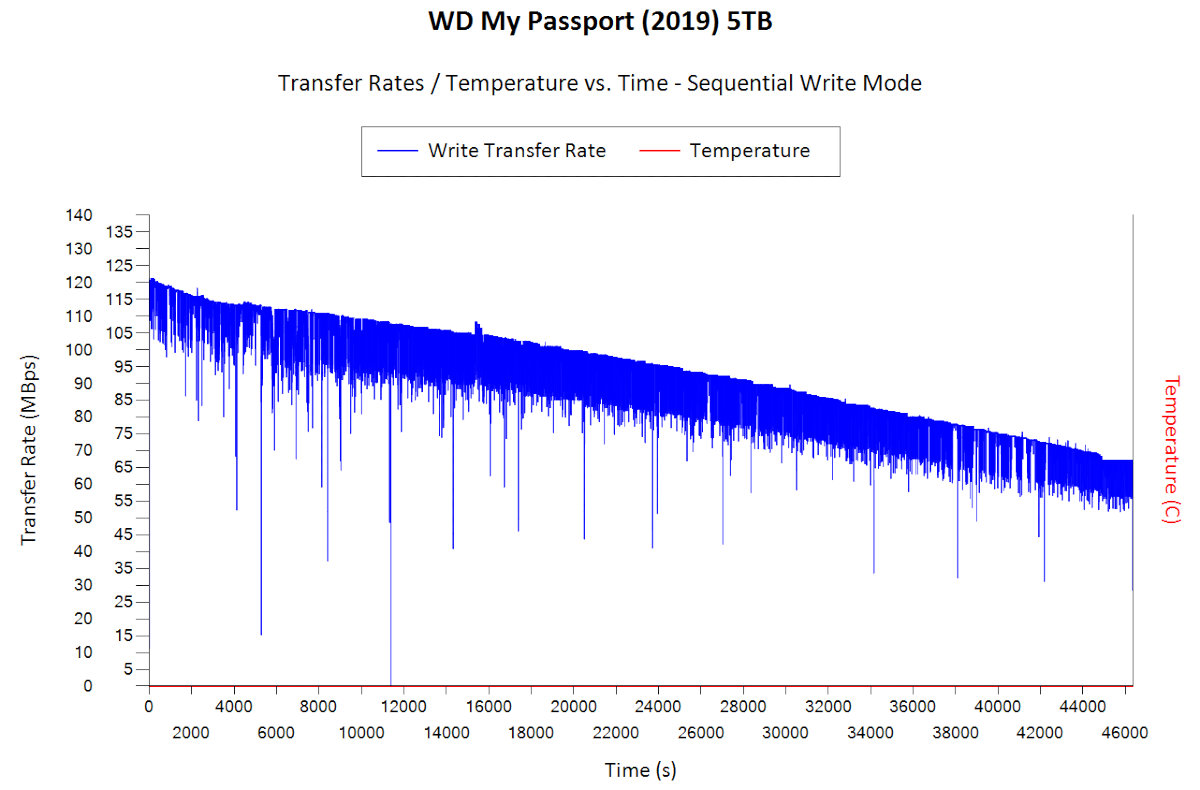

Thanks to TRIM support, sequential writes of large datasets behave as if you’re writing to a brand‑new drive. Here’s what the performance chart looks like for the WD My Passport 5TB (with TRIM support).

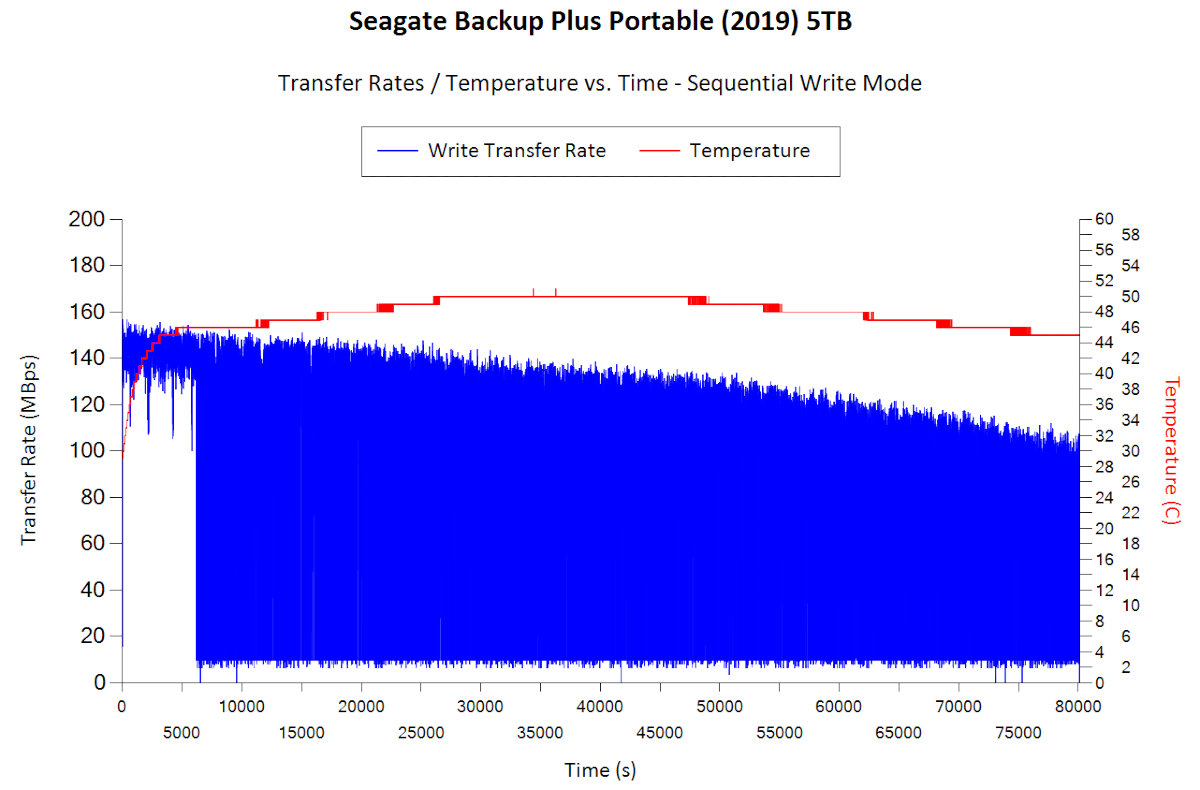

Here’s the performance graph for a comparable Seagate drive (no TRIM support).

As we can see, the Seagate drive spends the first ten minutes writing to its CMR cache, after which it enters an endless compaction/rewriting loop. As a result, the write speed repeatedly drops to about 10 MB/s, recovers to its peak, then drops again. The Western Digital drive did not exhibit this behavior.

How do you check whether a specific drive supports the TRIM command? On the one hand, you can look at its S.M.A.R.T. data. On the other hand, I have a few drives whose parameters claim TRIM support, but it’s actually not available in practice (likely due to the USB controllers the manufacturer used). You can verify it by launching PowerShell with administrative privileges and running the following command, replacing X with the drive letter:

Optimize-Volume -DriveLetter X -ReTrim

If the optimization process starts successfully, the system will perform a TRIM on the drive. If it returns an error, the TRIM feature isn’t supported (and, by the way, it isn’t supported when using any filesystem other than NTFS).

At first glance, what does TRIM—the command traditionally used on solid-state drives to simplify garbage collection—have to do with this?

The article TRIM Command Support for WD External Drives (https://support-en.wd.com/app/answers/detail/a_id/25185) provides a detailed explanation. According to it, the TRIM function is used to optimize garbage collection on WD drives that use shingled magnetic recording (SMR).

TRIM/UNMAP is supported for external (and, incidentally, internal as well—author’s note) hard drives that use SMR recording. It’s used to manage logical-to-physical address mapping tables and to improve SMR performance over time. One of the “advantages” (that’s exactly how it’s phrased—author’s note) of shingled recording is that all physical sectors are written sequentially in the radial direction and only rewritten after a cyclical relocation pass. If you overwrite a previously written logical block, the old data is marked invalid and the logical block is written to the next physical sector. TRIM/UNMAP lets the OS tell the drive which blocks are no longer in use, so the HDD can reclaim them and perform subsequent writes at full speed.
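The mechanism WD describes can be sketched as a tiny log-structured drive. This is a toy model with assumptions of my own: sectors are written strictly in order, the relocation pass compacts all live blocks in one go, and there is no spare area. The class and function names are hypothetical and do not come from WD’s documentation.

```python
class LogStructuredDrive:
    """Toy SMR-style drive: append-only writes plus a relocation pass when full."""

    def __init__(self, physical_sectors: int):
        self.capacity = physical_sectors
        self.mapping = {}       # logical block -> physical sector holding its live copy
        self.next_free = 0      # append-only write pointer
        self.relocated = 0      # live blocks copied by relocation passes (the slow work)

    def _relocate(self) -> None:
        """Compact live blocks to the start of the media, reclaiming stale sectors."""
        live = sorted(self.mapping, key=self.mapping.get)
        for new_sector, lba in enumerate(live):
            self.mapping[lba] = new_sector
        self.relocated += len(live)
        self.next_free = len(live)

    def write(self, lba: int) -> None:
        self.mapping.pop(lba, None)              # any previous copy becomes stale
        if self.next_free == self.capacity:      # no sequential space left
            self._relocate()
        if self.next_free == self.capacity:
            raise RuntimeError("drive is full of live data")
        self.mapping[lba] = self.next_free       # append at the next physical sector
        self.next_free += 1

    def trim(self, lbas) -> None:
        """The OS reports these logical blocks as unused; nothing is copied."""
        for lba in lbas:
            self.mapping.pop(lba, None)


def relocation_cost(use_trim: bool) -> int:
    drive = LogStructuredDrive(physical_sectors=8)
    for lba in range(8):
        drive.write(lba)            # first fill: pure sequential appends
    if use_trim:
        drive.trim(range(8))        # e.g. the volume was reformatted with TRIM
    for lba in range(4):
        drive.write(lba)            # write new data over the old
    return drive.relocated


print(relocation_cost(use_trim=False))  # 28 block copies in this toy model
print(relocation_cost(use_trim=True))   # 0 block copies
```

Without TRIM the drive has to assume every block is still live, so each new write on a full drive drags a relocation pass behind it. With TRIM it knows the old blocks are garbage and can simply start appending again, which matches the “full speed after deleting or formatting” behavior described here.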

What does this mean in practice? If you use such a drive in Windows 10 (and it’s formatted as NTFS), throughput on large data sets will stay high regardless of how many times data has been overwritten. The OS will automatically tell the controller which blocks have been freed and are no longer in use. As a result, write performance will recover automatically after you delete files or format the drive—something Seagate’s drives sorely lack.

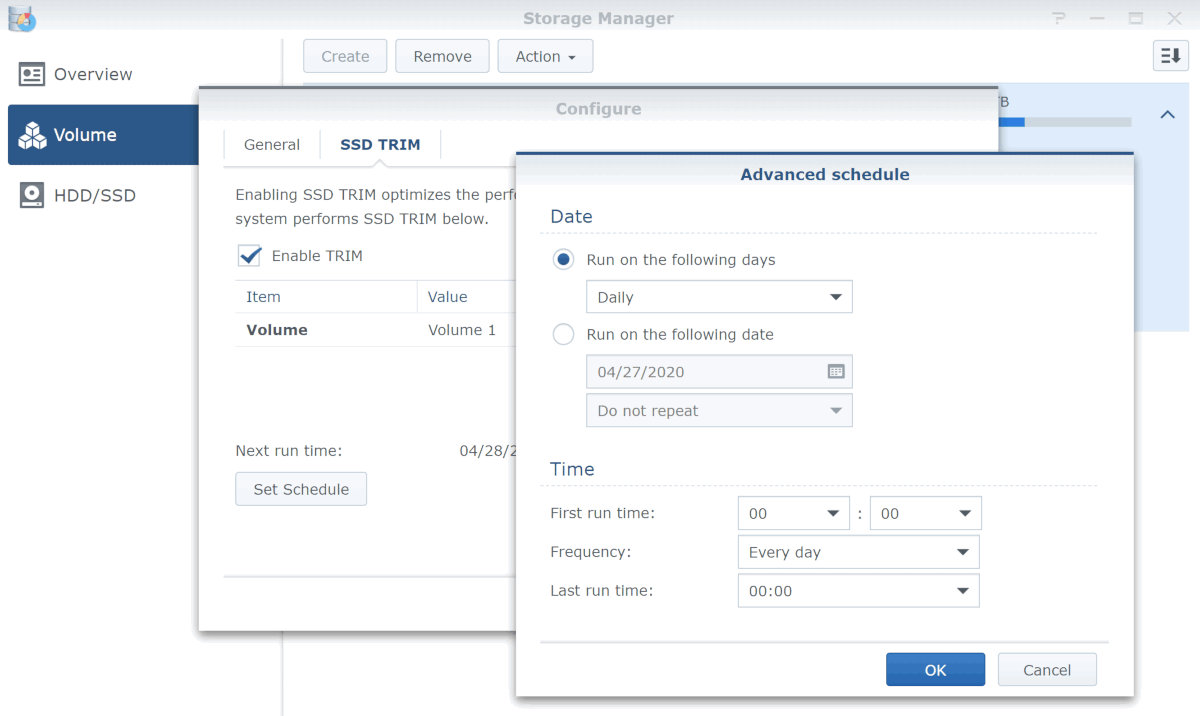

If we’re talking about a 3.5-inch drive, some NAS devices (for example, from Synology) will also detect it as TRIM-capable. You can configure TRIM to run on a schedule.

Don’t let the “SSD Trim” label fool you: the device actually uses a conventional 6 TB mechanical hard drive from WD’s new shingled magnetic recording (SMR) series. That said, this method has a number of limitations; in particular, TRIM doesn’t work on RAID 5/6 arrays. Other NAS systems have similar limitations.

Which brings us to the obvious conclusion: even with drive-level TRIM support, the new Western Digital shingled (SMR) drives are not suitable for use in RAID 5 or RAID 6 arrays (and, based on user reports, in SHR-1 and possibly SHR-2 as well). In such arrays, a continuous stream of small-block writes will quickly (within about forty minutes) fill up the limited CMR cache and saturate the drive’s controller.

You also shouldn’t mix SMR and conventional (CMR) drives in the same RAID array: depending on the workload, an SMR drive can slow down the entire array and even push it into a degraded state if the controller decides the drive is taking too long to respond.

On the other hand, WD’s newer SMR drives are perfectly fine for single-bay NAS units, and they can also be used in JBOD, RAID 1, or RAID 0 arrays in multi-bay NAS enclosures—with the caveat that the last two (RAID 1/0) require every drive in the array to use the same recording technology (either all CMR or all SMR).

And of course, WD shingled magnetic recording (SMR) drives work great as archival storage—so long as you’re using them with Windows and the drive is formatted as NTFS. Using these drives on macOS can lead to unpleasant surprises due to Apple’s implementation of TRIM.

That said, an SMR drive with TRIM support is preferable to a similar drive without TRIM — and the latter category is a long one that includes nearly all of Seagate’s consumer models.

Are SMR drives worth buying?

The price gap between helium‑filled WD My Book or WD Elements Desktop 8TB drives that use conventional magnetic recording (CMR) and the shingled (SMR) Seagate Backup Plus Hub or Seagate Expansion Desktop 8TB is currently about €10–15. The difference between the WD Red 6TB PMR/CMR model (WD60EFRX) and the SMR model (WD60EFAX) used to be the same €10, but after recent reports the price of the conventional model jumped sharply and, unjustifiably, climbed to match the price of the 8TB drive.

Is it worth paying extra for a non‑SMR drive, and is that always an option? In some compact 2.5″ drives starting at 2 TB, Shingled Magnetic Recording (SMR) is used to achieve a thinner enclosure. In 5 TB compact drives, SMR is used simply to make that capacity feasible. Desktop drives at 2, 4, 6, and 8 TB, however, can be built without SMR. Using shingled recording lowers manufacturing costs; some of that saving is passed on to the buyer, but most of it stays in the manufacturer’s pocket.

Ultimately, it’s your call whether to buy a given drive. There are scenarios where SMR is categorically unsuitable—namely RAID 5 and RAID 6 arrays. Using SMR in RAID 0 or RAID 1 is more of an enthusiasts-only choice, but it can work if you follow a simple rule: within an array, use either all CMR drives or all SMR drives. It won’t be optimal, but it’s possible. In a single-bay NAS that supports TRIM, you won’t see much difference between a PMR drive and an SMR drive that supports TRIM. Finally, SMR drives with TRIM are perfectly acceptable for archival use.

If a manufacturer ships SMR drives without TRIM support, they really ought to offer a massive discount. Otherwise, I can’t see why anyone would choose a drive that’s inherently slow—very slow—and has highly inconsistent performance.

Conclusion

In this article, we took a deep dive into SMR (shingled magnetic recording) technology itself, as well as the specifics of its implementation by the industry’s two largest players—Seagate and Western Digital.

Is SMR all bad?

No, it isn’t—so long as the buyer understands the problems and limitations they’re paying for and gets an appropriate discount. But you have to go in with eyes open, and deliberately withholding information is absolutely unacceptable. At the moment, Western Digital seems to have gotten off with a slap on the wrist. I’d very much like to see a class-action lawsuit, a hefty fine, and appropriate compensation paid by the manufacturer for years of deception and omissions.

I’ll grab some popcorn and keep monitoring the situation.