Warning

This article is for informational purposes only. Neither the editors nor the author are responsible for any harm that may result from using the information presented here.

Back in 2004, Nikita Borisov, together with Ian Goldberg, developed a general-purpose cryptographic protocol for instant messaging. The protocol, called OTR (Off-the-Record Messaging), was released as a GPL-licensed library. OTR later became the foundation for other popular protocols that introduced additional security mechanisms—most notably the Signal protocol, formerly known as TextSecure. Many modern messengers are built on Signal as well.

Principles of Messaging Encryption

Conceptually, any cryptographic protection for messaging should guarantee at least two fundamental properties: confidentiality and message integrity. Confidentiality means only the participants can decrypt each other’s messages. Neither the internet service provider, nor the messaging app developer, nor any other third party should have the technical ability to decrypt them within a reasonable amount of time. Integrity protects against accidental corruption and deliberate tampering. Any message altered in transit will be automatically rejected by the recipient as corrupted and no longer trustworthy.
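The integrity half of this can be illustrated with a minimal sketch. It assumes both parties already share a secret key, and it covers only tamper detection, not confidentiality. The function names and sample messages are made up for illustration. Real messengers typically combine encryption and authentication in a single construction rather than using a bare HMAC like this.

```python
import hashlib
import hmac
import os


def tag_message(key: bytes, message: bytes) -> bytes:
    """Return an HMAC-SHA256 tag that authenticates the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_message(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    expected = hmac.new(key, message, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


# Assumption: this key is known only to the two participants.
shared_key = os.urandom(32)

message = b"Meet at noon"
tag = tag_message(shared_key, message)

# Someone in the middle changes the text but cannot forge a matching tag.
tampered = b"Meet at nine"

genuine_ok = verify_message(shared_key, message, tag)
tampered_ok = verify_message(shared_key, tampered, tag)
print(genuine_ok, tampered_ok)
```

The recipient accepts the first message and rejects the second, because anyone without the shared key cannot produce a valid tag for altered text.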

Modern instant messaging protocols also tackle additional requirements that improve usability and security. In the Signal protocol and its closest counterparts, these include asynchronous messaging, forward secrecy, and future secrecy (also called backward secrecy or post-compromise security).

You’ve probably noticed that messaging apps deliver messages you missed. They show up even if you were in a group chat and went offline for a while mid-conversation. That’s asynchronous delivery: messages are encrypted and sent independently of one another. Timestamps and a few additional mechanisms preserve their logical order.

A property known as forward secrecy means that if the encryption key for the current message is compromised, it still can’t be used to decrypt earlier conversations. To achieve this, messaging apps frequently rotate session keys, with each key encrypting only a small batch of messages.

Similarly, backward secrecy (post-compromise security) ensures that future messages remain protected even if the current key is compromised. New keys are derived so that linking them to previous keys is computationally infeasible.

Forward and backward secrecy are implemented in modern key management mechanisms. In Signal, this is achieved with the Double Ratchet (DR) algorithm. It was developed in 2013 by cryptography consultant Trevor Perrin and Open Whisper Systems founder Moxie Marlinspike.

The name alludes to the Enigma mechanical cipher machine, which used ratchet wheels—gears with angled teeth that move only in one direction. This mechanism prevented the machine from returning to a recently used gear configuration.

Similarly, the “digital ratchet” prevents reusing previous cipher states. The DR frequently rotates session keys and ensures older keys can’t be used again. This is what provides forward and backward secrecy (i.e., extra protection for individual messages). Even if an attacker manages to recover a single session key, they can decrypt only the messages protected by that key—and that’s always just a small slice of the conversation.
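The one-way nature of the ratchet can be sketched with a simple key-derivation chain. This is only the symmetric half of the idea and not Signal's actual implementation. The starting secret, the constants `0x01` and `0x02`, and the function name are illustrative assumptions. The Diffie-Hellman step that the real Double Ratchet uses to recover from a compromise is not shown.

```python
import hashlib
import hmac
from typing import Tuple


def ratchet_step(chain_key: bytes) -> Tuple[bytes, bytes]:
    """Derive (message_key, next_chain_key) from the current chain key.

    HMAC is one-way: knowing the outputs does not reveal the chain key
    that produced them, so the chain cannot be walked backwards.
    """
    message_key = hmac.new(chain_key, b"\x01", hashlib.sha256).digest()
    next_chain_key = hmac.new(chain_key, b"\x02", hashlib.sha256).digest()
    return message_key, next_chain_key


# Placeholder starting secret; a real session would agree on it via key exchange.
chain_key = hashlib.sha256(b"demo shared secret").digest()

message_keys = []
for _ in range(3):
    message_key, chain_key = ratchet_step(chain_key)
    message_keys.append(message_key)  # each message gets its own key

for index, key in enumerate(message_keys):
    print(index, key.hex()[:16])
```

Each message key is used once and then discarded along with the chain key that produced it. An attacker who steals the current chain key can step forward but cannot recompute the earlier ones. Protecting future messages requires mixing in fresh key-exchange output, which this sketch omits.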

The Signal protocol also includes many other noteworthy mechanisms that are beyond the scope of this article. The results of its security audit have been published.

Signal and Its Variants

Signal’s end-to-end encryption is used not only in the eponymous messenger from Open Whisper Systems, but also in many third-party apps. WhatsApp, Facebook Messenger, Viber, Google Allo, and G Data Secure Chat all rely on the original Signal Protocol or a lightly modified variant, sometimes under their own branding. For example, the Proteus protocol used by the Wire messenger is essentially Signal with different cryptographic primitives.

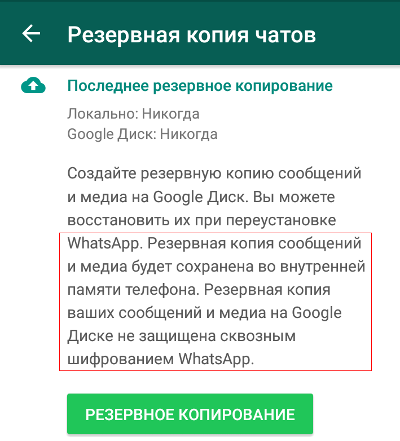

Even with similar implementations of end-to-end encryption, an app can still compromise your data in other ways. For example, WhatsApp and Viber offer chat-history backups. WhatsApp also sends communication statistics to Facebook’s servers. Protection for both local and cloud backups is largely nominal, and the metadata isn’t encrypted at all—this is stated explicitly in the license agreement.

Metadata shows who’s talking to whom and how often, what devices they’re using, where they are, and so on. It’s a huge trove of indirect information that can be used against people who think their communications channel is secure. For example, the NSA doesn’t care about the exact words a suspect used to congratulate Assange on making Obama look foolish, or what Julian replied. What matters is that they’re corresponding at all.
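To see how much metadata alone gives away, here is a small sketch. The records are entirely made up and contain no message text, only who wrote to whom and at what hour. The record layout is an assumption for illustration, not the format any real service uses.

```python
from collections import Counter

# Hypothetical metadata records: (sender, recipient, hour_of_day).
records = [
    ("alice", "bob", 9), ("alice", "bob", 9), ("bob", "alice", 10),
    ("alice", "carol", 23), ("alice", "bob", 22), ("carol", "alice", 23),
]


def contact_frequency(rows):
    """Count messages per unordered pair of correspondents."""
    return Counter(frozenset((sender, recipient)) for sender, recipient, _ in rows)


def late_night_pairs(rows, start_hour=22):
    """Return the pairs that exchange messages at or after start_hour."""
    return {frozenset((s, r)) for s, r, hour in rows if hour >= start_hour}


pairs = contact_frequency(records)
top_pair, top_count = pairs.most_common(1)[0]
night = late_night_pairs(records)

print(sorted(top_pair), top_count)
print(sorted(sorted(pair) for pair in night))
```

Without reading a single message, the observer already knows the closest contact and who talks late at night.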

As noted above, messaging apps periodically rotate their session encryption keys, and that’s normal. The primary key, however, may change if your contact moved to a different device, was offline for a long time… or if someone hijacked the account and started messaging in their name.

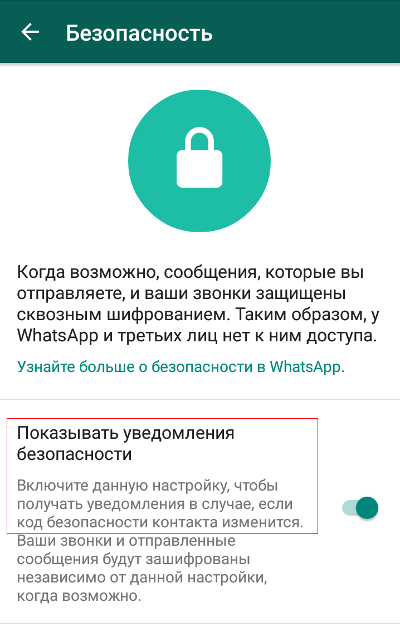

In the official Signal app, all participants in the conversation receive a key change notification in such cases. In WhatsApp and other messengers, this is disabled by default because it doesn’t convey meaningful information to most users. The key may also rotate after the other party has been offline for a long time—arguably both a bug and a feature.
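The logic behind such a notification can be sketched as a trust-on-first-use check. This is a simplified illustration and not how any particular messenger implements it. The storage dictionary, the fingerprint format, and the sample keys are assumptions.

```python
import hashlib
from typing import Dict

# contact name -> fingerprint remembered from an earlier conversation
known_fingerprints: Dict[str, str] = {}


def fingerprint(identity_key: bytes) -> str:
    """Short digest of a contact's public identity key."""
    return hashlib.sha256(identity_key).hexdigest()[:16]


def check_identity(contact: str, identity_key: bytes) -> str:
    """Return 'new', 'unchanged' or 'changed' for the key presented now."""
    current = fingerprint(identity_key)
    previous = known_fingerprints.get(contact)
    if previous is None:
        known_fingerprints[contact] = current  # trust on first use
        return "new"
    if previous == current:
        return "unchanged"
    # The stored value is left alone; the caller should warn the user
    # and only replace it after the user confirms the change.
    return "changed"


first = check_identity("johnny", b"public-key-A")
second = check_identity("johnny", b"public-key-A")
third = check_identity("johnny", b"public-key-B")
print(first, second, third)
```

The important design decision is what happens on `changed`: a cautious client holds outgoing messages until the user confirms, while a convenience-oriented one just re-encrypts and sends.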

As UC Berkeley researcher Tobias Boelter noted, an attacker targeting the service could generate a new key and receive messages intended for the recipient. Moreover, WhatsApp’s own server operators could do the same—for example, at the request of law enforcement.

The Signal protocol developers dispute Boelter’s conclusions and defend WhatsApp. According to them, a key swap only grants access to messages that haven’t been delivered. Not much of a consolation.

You can enable key-change notifications in the settings, but in practice this “paranoid mode” won’t do much. The messenger only alerts you about a key change after you have already sent another message. The stated rationale is that this is more convenient for users.

Ways In

Let’s grant these arguments. As a working assumption, we’ll say the Signal protocol has no practically significant vulnerabilities. So what? The problem remains: in Signal, WhatsApp, and other messengers, end-to-end encryption guarantees confidentiality only if the attacker has nothing beyond intercepted messages in encrypted form.

In practice, during background surveillance the FBI and similar agencies usually rely on communications metadata. If they need the message contents, they obtain them by other means—methods that don’t require breaking strong encryption protocols or factoring long keys.

People often cite the results of “cracking contests” as proof that a given cryptosystem is secure. The logic is: nobody claimed the prize, so it must be unbreakable. That’s a classic false equivalence. Reading a real person’s private messages is one thing; meeting the rules of a contest built around breaking a bot-to-bot dialogue (or messages crafted by messenger developers expecting a trick in every line) is quite another. The rules are usually written so that participants end up facing a task that’s effectively unsolvable within the allotted time.

In the real world, people who want to read your messages don’t play by any rules. They won’t necessarily look for flaws in the end-to-end encryption protocol itself; they’ll go after the easier targets. Think social engineering (the human factor), OS vulnerabilities (there are thousands in Android), buggy drivers, and third-party software: any trick in the book. Real pros take the path of least resistance, and the three-letter agencies are no exception.

With physical access to a smartphone—even briefly and without root—many more attack vectors open up, far beyond the scope of a “hack the messenger” contest. In such cases, attackers can often abuse a supposedly convenient feature the developers left in—“not a bug, a feature”—which ends up making exploitation easier.

Here’s an example. In our lab, it was common for someone to step out for a couple of minutes and leave their smartphone charging. Everyone had a phone, but there weren’t enough outlets. So we set up a dedicated table with a power strip—basically a charging station where, throughout the day, all or almost all of the phones would end up.

Naturally, we walked up to that table dozens of times a day, grabbed our phones (and sometimes someone else’s by mistake), and put them back on the charger. One day I needed to find out what Johnny was writing in his messaging apps. There was a suspicion he was leaking project information, and our security team just shrugged: end-to-end encryption is a brick wall. BYOD never took off here. We also tried banning smartphones and messengers outright, but that went nowhere, since too much of today’s communication depends on them. So, with the security team’s approval (clause 100500: “…has the right in exceptional cases…”), I simply waited for the right moment and did the following:

- I wait until Johnny heads out to get food. That’s at least three minutes; I only need two.

- I calmly take his smartphone and sit back down in my seat.

- The phone is locked, but I know the pattern. He’s used it in front of me hundreds of times—hard not to memorize that Z-shaped swipe.

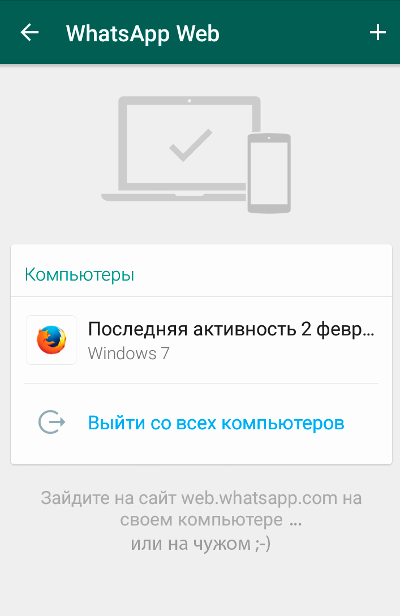

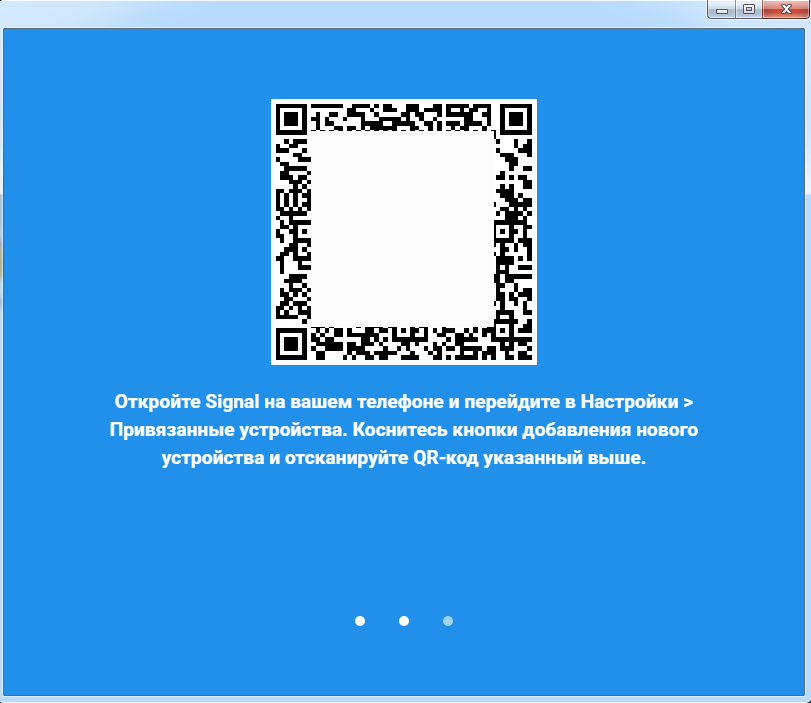

- I open a browser on my computer and go to the WhatsApp Web page. It shows a sync QR code.

- On Johnny’s phone, I open WhatsApp and go to “Chats → Settings → WhatsApp Web.”

- I scan the QR code with the phone.

- Done. Johnny’s entire chat history is now loaded in my browser.

- I wipe the traces and put his phone back where it was.

Now I can see all of Johnny’s past and current conversations. I’ll be able to read them at least until the end of the day, unless WhatsApp rotates the key or Johnny manually terminates the web session. To do that, he’d first have to suspect something’s wrong and then open the same WhatsApp Web menu. There he’ll see a note about the most recent web session… which is basically useless. It only shows the city (via GeoIP), the browser, and the OS. In our case all of those match perfectly (same lab, same network, standard-issue PCs with identical software), so that entry won’t raise any red flags for him.

A web session is convenient for real-time monitoring. You can also back up the chats—for the record.

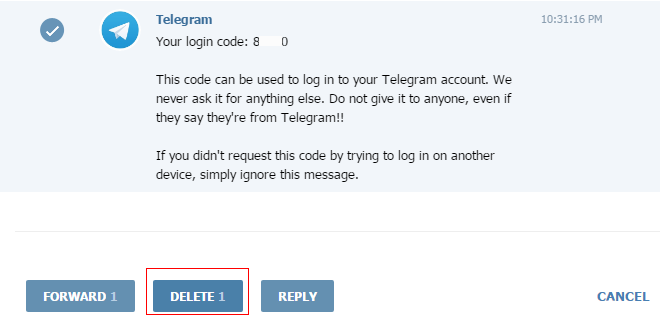

A few days later, Johnny moved over to Telegram. The approach to monitoring his communications was largely the same.

- Take his smartphone, unlock it with his usual “Z” pattern, and open Telegram.

- In your browser, go to the Telegram web app: https://web.telegram.org.

- Enter Johnny’s phone number.

- Grab the verification code that arrives in his Telegram.

- Enter it in your browser window.

- Delete the message and any traces.

Before long, Johnny installed Viber, and I had to pull a new trick.

- Take his smartphone for a couple of minutes.

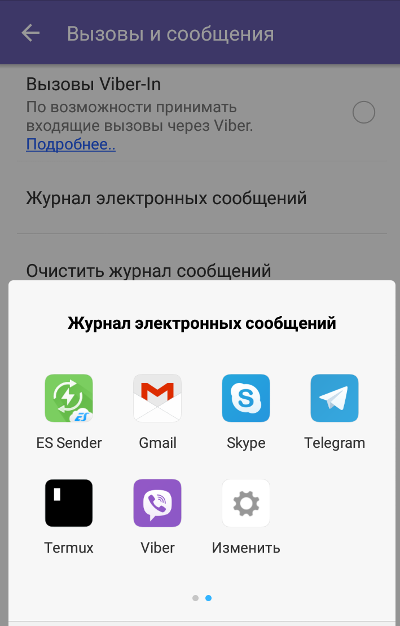

- Open Viber → Settings → Calls and messages → Email message history.

- Copy the archive to a flash drive (OTG) or send it to yourself by any other method—Viber offers dozens of options.

- Return the smartphone and erase any traces.

There’s no web version of Viber. I could have installed the desktop app and linked it to Johnny’s Viber mobile account as well, but I went with the approach that was easier to implement.

Johnny got paranoid and installed Signal. Damn, it’s the gold‑standard messenger, endorsed by Schneier, Snowden, and the Electronic Frontier Foundation. It won’t even let users take chat screenshots. So now what?

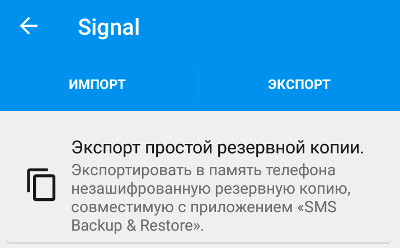

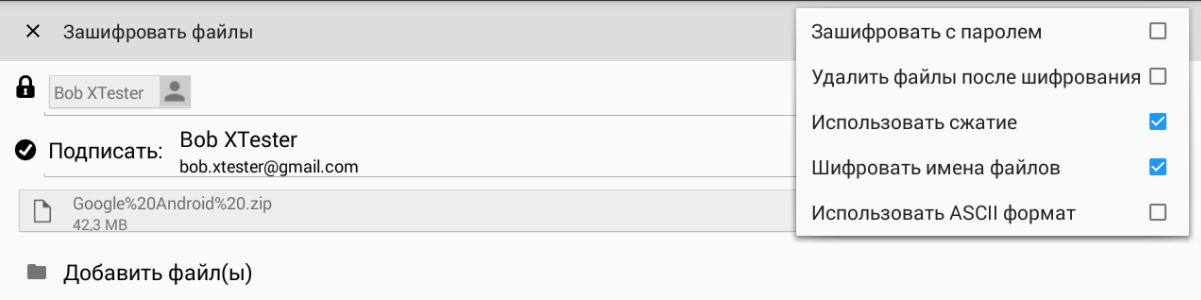

We wait for the right moment again and launch Signal on Johnny’s smartphone. The messenger asks for a passphrase I don’t know… but I do know Johnny. I try his birthday—no luck. I try the combination from our lab briefcase—it works. Almost boring. We head into the messenger’s settings and pause, like a knight at a crossroads. Turns out there are plenty of ways to reach the chats. For example, Signal lets you export the entire conversation history with a single command—but only in unencrypted form.

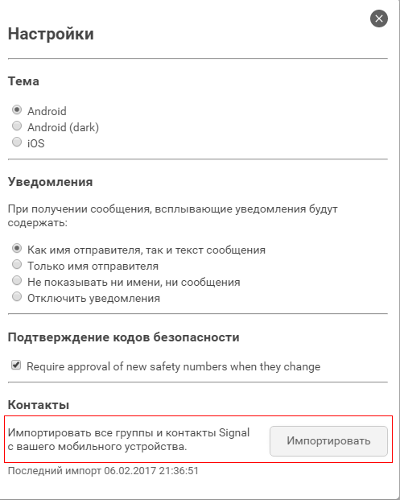

Alternatively, go to Settings → Linked devices and repeat the same trick we used with WhatsApp earlier. Signal likewise opens a web session via a QR code. There’s even a dedicated extension for Google Chrome.

As a bonus, you can exfiltrate all contacts from a Signal web session. Could be useful.

Bottom line: I don’t know Johnny’s encryption keys (and he doesn’t know them either!), but I can read his past and current conversations in every messaging app. Johnny suspects nothing and continues to believe that “end-to-end encryption” guarantees him complete privacy.

With physical access to a smartphone, taking over any messenger is easy—but you don’t even need that to compromise conversations. You can lure the victim to a phishing link and remotely drop a trojan on the device; older Android versions and stock browsers have plenty of vulnerabilities. The trojan can obtain root (these days that’s a routine, automated step), start taking screenshots and memory dumps, or simply make it easy to back up all chats from the target messenger in cleartext.

Telegram

This messenger deserves a separate discussion for a number of reasons. First, it uses a different end-to-end encryption protocol: MTProto. After shedding its early teething problems and publishing most of its source code, it can be considered a promising alternative to the Signal protocol.

Second, the Telegram app doesn’t store local copies of your conversations on the device; everything is fetched from the server. As a result, you can’t export or copy chat logs the way you can in Viber and many other messengers.

Third, Telegram offers Secret Chats with additional protections. For example, push notifications don’t display the content of Secret Chats. If one participant deletes a message, it’s deleted for both. You can even set messages to self-destruct after a specified time. In any case, all Secret Chat messages are removed from Telegram’s servers once they’re delivered.

You can only verify this indirectly by switching devices. On a new smartphone, after signing in to Telegram, you’ll be able to restore your entire chat history except for Secret Chats. That’s because the encryption keys for Secret Chats are tied to a specific device—at least, that’s what the official FAQ states.

What if Johnny is using Telegram Secret Chats with self-destructing messages? One option is to take advantage of an Android quirk known as Screen after Previous Screens.

In short, the method relies on Android caching window snapshots of running apps in RAM for a short time. This lets users switch between apps faster without waiting for their windows to be fully redrawn.

The open-source tool RetroScope can extract the last dozen screen snapshots from a smartphone’s memory (or more, if you’re lucky), and those can include practically anything — from Telegram Secret Chats (even ones already deleted) to Signal conversations, which normally can’t be captured via standard screenshots.

Secure Smartphones

Android is a complex operating system, and any system’s security is only as strong as its weakest component. That’s why “cryptophones”—highly secured smartphones—are appearing on the market. Silent Circle has released two versions of the Blackphone. BlackBerry created the Priv, and last year Macate Group introduced the GATCA Elite.

The paradox is that, in trying to make Android more secure, these companies end up creating a more conservative, more complex—and less secure—version. For example, Blackphone has to use older AOSP app versions that accumulate many known vulnerabilities. You can’t keep them promptly updated by hand, and adding an app store would itself open a hole in the security perimeter.

It got absurd: the preinstalled secure messaging app SilentText had long been using the libscimp library despite a long-known memory corruption vulnerability in it. Sending a specially crafted message was enough to execute code with the privileges of the local user and gain remote access to the contents of the Blackphone.

The Mobile Carrier Problem

Two-factor authentication was meant to make account takeovers harder, but in practice it shifted the security burden onto the weak link—mobile operators—and introduced new attack surface. For example, in April of last year two employees of the Anti-Corruption Foundation (FBK) reported their Telegram accounts had been hacked. Both were using 2FA. They believe the compromise involved MTS, a mobile carrier: allegedly, rogue employees cloned their SIM cards and handed them to attackers, allowing them to receive the SMS confirmation codes needed to log in to Telegram.

Getting a duplicate SIM is a trivial procedure at any carrier’s retail store. I’ve used it multiple times to replace a damaged card—and they didn’t always ask for ID. Moreover, there’s a small window between activating the new SIM and deactivating the old one. I discovered this by accident when I forgot to hand in the damaged SIM and it suddenly sprang back to life even after the new one had been issued.

Security Flaws in Older Versions of Android

Any cryptographic application is only safe to use if it runs in a trusted environment. Android smartphones don’t really meet that bar. WhatsApp, Telegram, and other messengers will run even on the ancient Android 4.0 Ice Cream Sandwich, for which there are countless exploits. If messengers restricted themselves to only the latest Android versions, they’d lose 99% of their users.

Encryption: De Jure vs. De Facto

End-to-end encryption has become the de facto standard in all major messaging apps. Its legal status, however, remains unclear. On the one hand, many countries’ constitutions guarantee the privacy of correspondence and prohibit censorship. On the other, such encryption conflicts with Russia’s “Yarovaya” laws and with anti-terrorism legislation in the United States. Google, Facebook, and other companies must comply with the laws of the countries in which they operate. If they are compelled to grant access to user communications, they will be forced to “cooperate” with the government.

Since there’s still no workable way to compel access, strong encryption in messaging apps is considered a major headache for law enforcement and intelligence agencies worldwide. The FBI Director, France’s Interior Minister, and many other senior officials have said their agencies can’t monitor or access such communications.

In my view, it’s just a publicity stunt. As Br’er Rabbit said, “Please don’t throw me into the briar patch!” Although the Signal Protocol and similar secure transport protocols used by messaging apps are considered robust—and in some cases have undergone serious audits—the real-world cryptographic protection of conversations often ends up being weak due to the human factor and to extra features in the apps themselves. Officially, chat backups, cloud mirroring, device migration, and automatic key rotation were added for convenience… but whose convenience, exactly?

But What About PGP?

The first thing that usually comes to mind when encrypted messaging is mentioned is PGP. But not all implementations of this popular public-key cryptosystem are equally secure. The United States has officially employed various methods to weaken cryptographic strength for export-grade products, and unofficially applied them to mass-market ones as well. After acquiring PGP from Phil Zimmermann and closing the source code of its products, Symantec is effectively obliged to comply with current U.S. legal restrictions and follow the government’s tacit “recommendations.”

For a long time, privacy advocates considered only the original PGP 2.x releases trustworthy—those that used RSA to wrap session keys and IDEA for bulk encryption. But after a 768-bit RSA key was factored in 2010 using the number field sieve within a practical timeframe, that level of security stopped being viewed as sufficiently strong.
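Why factoring the modulus breaks RSA can be shown at toy scale. The sketch below uses the classic textbook key with a 12-bit modulus and plain trial division. The 768-bit result mentioned above required the number field sieve and large computing resources, so this is only an illustration of the principle, not of the actual attack.

```python
from typing import Tuple


def factor_semiprime(n: int) -> Tuple[int, int]:
    """Find p, q with p * q == n by trial division (feasible only for tiny n)."""
    if n % 2 == 0:
        return 2, n // 2
    candidate = 3
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate, n // candidate
        candidate += 2
    raise ValueError("n has no nontrivial factors")


# Toy public key (n, e); the attacker sees only this and the ciphertext.
n, e = 3233, 17
plaintext = 65
ciphertext = pow(plaintext, e, n)

# Step 1: factor the modulus.
p, q = factor_semiprime(n)

# Step 2: rebuild the private exponent from the factors (Python 3.8+).
phi = (p - 1) * (q - 1)
d = pow(e, -1, phi)

# Step 3: decrypt without ever having been given the private key.
recovered = pow(ciphertext, d, n)
print(p, q, d, recovered)
```

Once the factors are known, everything else is simple arithmetic. The security of the whole scheme therefore rests on how hard the modulus is to factor.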

Modern hacktivists and civil-liberties advocates have shifted their focus to open-source PGP implementations. Most of them let you choose among multiple algorithms and generate longer keys. But it’s not that simple. A longer key doesn’t automatically guarantee stronger cryptographic security. That also requires the absence of other weaknesses, and all bits of the key must be uniformly random. In practice, that’s often not the case.

The key’s bit sequence is always generated by some known pseudorandom number generator (PRNG), typically the one built into the operating system or sourced from standard libraries. Accidental or intentional weakening of that PRNG is the most common issue. The once-popular Dual_EC_DRBG (used in most RSA Security products) was developed by the NSA and contained a backdoor. This was discovered seven years later, by which time Dual_EC_DRBG was in widespread use.
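A weak generator makes a long key worthless, as the following sketch shows. It does not model the Dual_EC_DRBG backdoor. It shows the simpler accidental case, where a key is drawn from a non-cryptographic generator seeded with a guessable value. The timestamp, the search window, and the function names are hypothetical.

```python
import random
import secrets


def weak_key(seed: int) -> int:
    """128-bit 'key' from a Mersenne Twister seeded with a guessable value."""
    return random.Random(seed).getrandbits(128)


def recover_seed(key: int, earliest: int, latest: int):
    """Try every seed in a plausible window until the key is reproduced."""
    for guess in range(earliest, latest + 1):
        if weak_key(guess) == key:
            return guess
    return None


# Hypothetical: the key was seeded with the Unix time at creation.
creation_time = 1_500_000_000
victim_key = weak_key(creation_time)

# The attacker knows the key was created within roughly an hour either way.
found = recover_seed(victim_key, creation_time - 3600, creation_time + 3600)
print(found == creation_time)

# For contrast: a key drawn from the operating system's CSPRNG.
strong_key = secrets.randbits(128)
```

The key is 128 bits long on paper, but its real strength is only the few thousand possible seeds in the search window.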

All PGP implementations that conform to the OpenPGP standard (RFC 2440 and RFC 4880) remain broadly interoperable. On Android smartphones, you can add PGP encryption to your email using an app such as OpenKeychain.

OpenKeychain has open source code audited for security by Cure53, integrates seamlessly with the K-9 Mail email client and the Conversations Jabber client, and can even pass encrypted files to the EDS (Encrypted Data Store) app, which we covered in a previous article in this series.

Conclusions

On Android, protection against third-party interception is effectively provided both by traditional email clients (using OpenPGP) and by modern messengers built on end-to-end encryption. That said, message confidentiality only holds as long as the attacker lacks extra advantages—such as physical access to the device or the ability to remotely compromise it with a Trojan.

Debates about how secure the Signal protocol really is, and whether it’s better than Proteus or MTProto, are mostly of interest to cryptographers. For users, they’re largely moot as long as messengers allow unencrypted chat backups and session cloning. Even if every messaging app became as hardened (and as inconvenient) as the original Signal, there would still be plenty of vulnerabilities at the Android OS level—and the human factor isn’t going anywhere.
