In our work, we are faced with very different projects. In one way or the other, many of them could be called “high-load projects”. If you spend some of your spare time on categorizing these projects and discard such ordinary things as second-rate online stores while roughly grouping what is left, you can come up with an approximate classification. It includes four types of high load:

- By the number of requests (banner networks);

- By traffic (video services);

- By logic (complex back-end calculations);

- Mixed (everything that fell into several categories).

Now, let’s have a closer look at them.

Number of Requests

Of course, these banal “rps” come the first. The banner ad networks represent one of the most outstanding examples of systems oriented on a large number of small requests. In its simplest form, a banner network is a web server, a database and a few scripts involved in returning the correct information from the database to appropriate clients based on various criteria (type of device from which came the request, geographical location of the client, time of day, etc.). More complex systems include multiple front-end servers where the load is balanced, for example, at the level of DNS queries. Behind the front-end servers, there may be either just the database servers, or several third-party partner banner networks, which can also provide advertising for your users (traffic).

Bottlenecks in exclusive use of proprietary independent architecture:

- Processing Power. The problem can be solved by proper web server configuration, and optimizing the banner generation code. In a critical situation, this bottleneck can be eliminated by expanding the number of return servers.

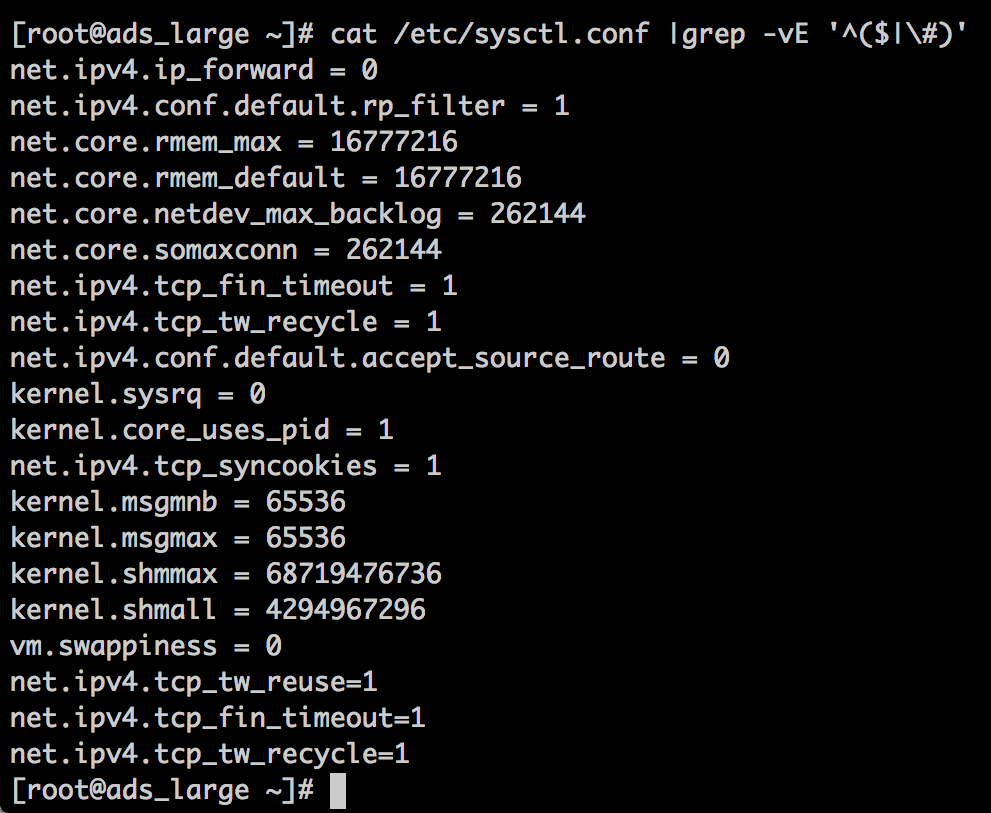

- Proper Configuration of Network Subsystem. If you want to maintain the load of 10–15 thousand rps on the server without choking the processor by ‘sys’, you will have to dig into ‘sysctl’ and properly configure the network subsystem.

Also, there may be some bottlenecks in using partner networks. Of course, this depends on specific partners. But, in general, if you work not alone, there are always some additional problems. Sagging network, banner generation scripts that are too slow, weak servers at the partners, — all this leads to hanging requests that slow down the system and increased load on your own servers. There are not so many ways out of this situation. If this is politically correct, you can cache the banners on your side. If the system is changing too often and too fast, then caching won’t work. In this case, the only thing that you can do is to negotiate with partners.

Traffic

The next category may include projects with a high load that is put not so much on the number of requests, but rather on the amount of data queried in each request. The most obvious example of this type of projects are various video services. Here, the number of proverbial ‘rps’ also plays a role, but not in a pure form as in case of advertising networks. The main problem of such projects is that each client requests a rather large amount of data. This creates a load both on network channels and the disk subsystem used for file storage.

Naturally, there cannot be only one server in case of more or less high load (when viewing videos in 720p, 6–8 Mbps per client means just ~ 130–150 people per one Gigabit channel). Usually, in such cases, there are several groups of servers distributed geographically to diversify the risks of failure. Within each group, the data is synced in a ring, just as between these groups. For syncing, we usually use ‘lsyncd/rsync’. Requests to video storages are also balanced at DNS level. If one or more servers fail, we simply disconnect them from DNS and synchronization ring.

Bottlenecks:

- Network throughput. At the higher loads, the services with heavy traffic are the first to experience the lack of bandwidth to the server. In this case, the 100 Mbps network card is replaced with a 1 Gigabit network card. Then, we add one more. Of course, there are problems with channel aggregation. But, in general, these difficulties definitely can be solved.

- Drive Performance. Even at the full load on one Gigabit channel, regular SATA drives should provide sufficient performance. If there is a need to get a more serious increase of access speed to the server, then it’s time to think about buying 10K SAS drives and using them to form a RAID 10 array.

- Traffic. Naturally, if you want to run a traffic-intensive project, you should choose a host that shows the highest tolerance to large amounts of data sent by your servers.

Logic

Here, you start to encounter something more interesting. Strange as it may seem, I will describe an online store as example for this category. Suppose we have an online store selling alcoholic drinks, for example, 30,000 items. That’s really a lot of alcohol: different years of production, various brands coming from different regions of the world, with a different price. How to search for specific items in this database?

You can certainly make a request based on a dozen conditions. But the time that it will take to run it on an average hardware wouldn’t be acceptable. In the best case, it would take tens of seconds and, in the worst, several minutes. What can you do to solve this problem? You can set up a server park, logically divide the data by different databases, and make sharding for each database. But what if you do not want to spend a tidy sum on the server park? Then, you can solve the issue in a more exciting way. Let’s collect all data in one large temporary table:

Alcohol ID | Wine | Champagne | Whiskey | ... | Price from 1000 to 2000 | Price from 2000 to 3000 | .... | France | Germany, etc.

Overall, this makes about two dozen parameters. For any product, each parameter is either 0 or 1. As a result, we obtain the mask of twenty bits (for example, 00000110000010000011) pointing to a specific product. Next, you can use a separate service to collect these masks in a separate table. When a customer comes and, on the website, specifies the product search parameters, the output is the same mask. This mask, in turn, is sent to aggregator service where it is compared bit by bit with all available data. The search results are provided to the user. This approach allows you to substantially reduce the costs of server infrastructure in a project.

In this case, the bottleneck will come from re-indexing the data. For very large amounts of data, it can take a long time, while creating a rather high additional load. You can solve this problem by specifying an individual small server, to which you would replicate the database for periodic re-indexing without affecting the production server.

Mixed High Load

Finally, I would like to mention some projects that are difficult to include in any particular category. Often, the back-end is used for complex calculations, and there are a lot of requests, while the load is not always evenly distributed. For example, we provide support to a socially popular entertainment project. The idea of the project is that the user uploads two photos (one with a man and one with a woman), and the service uses this data to generate the picture of potential child that would come from such “parents”. If you want to know what a wonderful little daughter you would have with J. Lo, come here 🙂

The main features of this service are that it is quite popular, one can even say “viral”, and shows a huge amount of rps. However, under the hood, you would find not just the return of banners from the database, but a live facial analysis used to generate the new “averaged” face based on aggregate data of both “parents”. The viral nature of this service also adds some specifics: the load on the system is unevenly distributed, there are regular traffic hikes (once, the website was used by a popular Brazilian model who wrote about it in her blog — we had a “brazillion” of new visitors and sweat a lot but this allowed us to come up with a new, current scheme of operation).

The project structure looks like this: the front-end server with a web part, database server, separate storage for uploaded and generated photos, queues service and a bunch of handler servers. The user uploads the photos to the storage, while the scripts send a request for the queues service to “generate a child”. The queues service considers how heavily the generation queue is loaded. If there is almost no load, a new task is simply added to the queue. If the load is higher than a certain limit and processing of tasks begins to slow down, this service just goes and… buys another instance to generate the photo 🙂 When the load decreases, the same service removes extra instances in order not to spend more money for the downtime of unnecessary resources. This is the way for solving the problem of sudden hikes in attendance and budget overruns.

The bottleneck here is the queues service. Before launching into production any complex system that works with finances, you need to carefully check it. And after the launch, monitor all major indicators. In our company, this service went really crazy one day (there was some glitch with API) and began a non-stop ordering of new handler servers. Fortunately, we quickly noticed and cut short this activity 🙂

Conclusion

As you can see, high load is not always a heap of servers and millions of simultaneous connections. High load is also the ability to curb heavy loads, optimize resource consumption and hold back the server infrastructure from unjustified expansion. So, %username%, if you have only 10 rps on your server, but they are doing something more than returning images and forum pages, then this must be something serious 🙂 If you want to talk about some other projects that are relevant for discussing the issue of high loads, feel free to write at dchumak@itsumma.ru. Good luck!