Binary Classification

We’ll teach our neural network to recognize whether a photo shows a cat or a dog. This is a classic example, and TensorFlow Datasets (the tensorflow-datasets package) already provides a labeled training set for it. Otherwise, we’d have to collect and label several thousand photos by hand.

Import the necessary libraries.

    from tensorflow import keras
    from tensorflow.keras import layers
    from tensorflow.keras import Sequential
    from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense, Dropout, Activation, BatchNormalization, AveragePooling2D
    from tensorflow.keras.optimizers import SGD, RMSprop, Adam
    # pip install tensorflow-datasets
    import tensorflow_datasets as tfds
    import tensorflow as tf
    import logging
    import numpy as np
    import time

Let’s build a neural network model.

    def dog_cat_model():
        model = Sequential()
        model.add(Conv2D(32, (3, 3), input_shape=(128, 128, 3), activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Conv2D(32, (3, 3), activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Flatten())
        model.add(Dense(units=128, activation='relu'))
        model.add(Dense(units=1, activation='sigmoid'))
        model.compile(optimizer=Adam(),
                      loss='binary_crossentropy',
                      metrics=['accuracy'])
        return model

Our neural network has a single output, because the result is binary: 1 means dog, 0 means cat.
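
To make the single-output idea concrete, here is a minimal sketch in plain Python of how a sigmoid value can be turned into a label. The 0.5 threshold and the sample input values are assumptions chosen for illustration; they are not taken from the trained model.

```python
import math

LABEL_NAMES = ["cat", "dog"]  # index 0 = cat, index 1 = dog, as in the text


def sigmoid(z):
    """Logistic function: maps a real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def to_label(probability, threshold=0.5):
    """Map the network's single output to a class name."""
    return LABEL_NAMES[1] if probability > threshold else LABEL_NAMES[0]


# Hypothetical pre-activation values of the output neuron
for z in (-2.0, 0.3, 4.0):
    p = sigmoid(z)
    print(f"z={z:+.1f} -> p(dog)={p:.3f} -> {to_label(p)}")
```

The closer the output is to 1, the more confident the network is that the photo shows a dog; values near 0.5 mean the network is unsure.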

Now we need to train the neural network. The original dataset contains 25,000 images. We’ll split it into training and validation sets and preprocess each image. Each image must be 128 × 128 pixels, with pixel values normalized to the [0, 1] range.

    def dog_cat_train(model):
        # 80/10/10 split using the TFDS slicing syntax; the older
        # tfds.Split.TRAIN.subsplit() call is not available in current TFDS releases
        (cat_train, cat_valid, cat_test), info = tfds.load(
            'cats_vs_dogs',
            split=['train[:80%]', 'train[80%:90%]', 'train[90%:]'],
            with_info=True, as_supervised=True)

        def pre_process_image(image, label):
            # Scale pixel values to [0, 1] and resize to the model's input size
            image = tf.cast(image, tf.float32)
            image = image / 255.0
            image = tf.image.resize(image, (128, 128))
            return image, label

        BATCH_SIZE = 32
        SHUFFLE_BUFFER_SIZE = 1000
        # Parentheses are required to continue a method chain across lines
        train_batch = (cat_train.map(pre_process_image)
                       .shuffle(SHUFFLE_BUFFER_SIZE)
                       .repeat().batch(BATCH_SIZE))
        validation_batch = (cat_valid.map(pre_process_image)
                            .repeat().batch(BATCH_SIZE))

        t_start = time.time()
        model.fit(train_batch, steps_per_epoch=4000, epochs=2,
                  validation_data=validation_batch,
                  validation_steps=10,
                  callbacks=None)
        print("Training done, dT:", time.time() - t_start)
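
Because the training dataset is repeated indefinitely, the length of an epoch is set by steps_per_epoch rather than by the dataset size. The arithmetic below is a rough sketch of what that means, assuming the 25,000-image figure quoted above; the number of examples in the copy you actually download may differ.

```python
import math

TOTAL_IMAGES = 25_000    # dataset size quoted in the text (assumption)
TRAIN_PERCENT = 80       # 80/10/10 split
BATCH_SIZE = 32
STEPS_PER_EPOCH = 4000   # value passed to model.fit()

# Images that end up in the training split
train_images = TOTAL_IMAGES * TRAIN_PERCENT // 100

# Batches needed to see every training image once
steps_for_one_pass = math.ceil(train_images / BATCH_SIZE)

# Images consumed in one "epoch" as defined by steps_per_epoch
images_per_epoch = STEPS_PER_EPOCH * BATCH_SIZE
passes_per_epoch = images_per_epoch / train_images

print("training images:", train_images)
print("steps for one full pass:", steps_for_one_pass)
print("images per epoch:", images_per_epoch)
print("passes over the data per epoch:", round(passes_per_epoch, 2))
```

Under these assumptions, each of the two epochs walks through the training split several times, so lowering steps_per_epoch is an easy way to shorten an experiment.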

Now we start training the model and save the results to a file:

    model = dog_cat_model()
    dog_cat_train(model)
    model.save('dogs_cats.h5')

On my machine with a GeForce GTX 1060, training the network took about eight minutes.

We can now use the trained model. Let’s write an image recognition function.

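    def dog_cat_predict(model, image_file):
        label_names = ["cat", "dog"]
        img = keras.preprocessing.image.load_img(image_file,
                                                 target_size=(128, 128))
        # Add a batch dimension and scale pixel values to [0, 1]
        img_arr = np.expand_dims(img, axis=0) / 255.0
        # predict() returns the sigmoid output; threshold it at 0.5 to get the class
        # (predict_classes() is no longer available in recent Keras versions)
        result = (model.predict(img_arr) > 0.5).astype("int32")
        print("Result: %s" % label_names[result[0][0]])

    model = tf.keras.models.load_model('dogs_cats.h5')
    dog_cat_predict(model, "cat.png")
    dog_cat_predict(model, "dog1.png")
    dog_cat_predict(model, "dog2.png")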

This approach can be used for other image recognition tasks as well; the only real challenge is sourcing training data.

Image Classification

Solving the binary task we just discussed is quite straightforward. But in practice you rarely need a computer to tell cats from dogs, so let’s look at a more realistic example: identifying what’s in a photo.

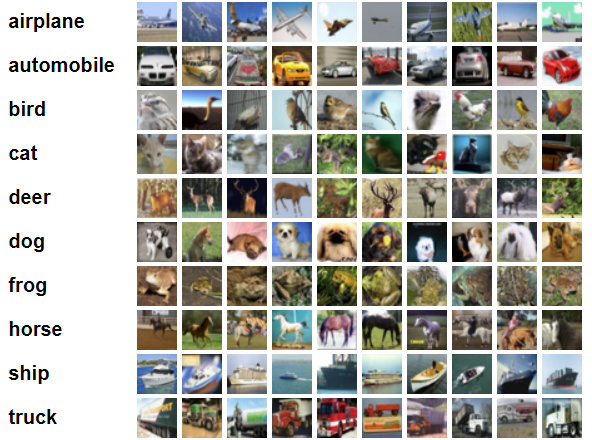

To train this neural network, we’ll use the CIFAR-10 dataset—60,000 images divided into ten classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. The images are 32 × 32 pixels.

Keras has built-in support for this dataset.

The workflow is the same as before: build a neural network model, train it, and test it on a real-world example.

    def cifar10_model():
        model = Sequential()
        model.add(Conv2D(32, (3, 3), padding='same',
                         activation='relu', input_shape=(32, 32, 3)))
        model.add(Conv2D(32, (3, 3), activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.25))
        model.add(Conv2D(64, (3, 3), padding='same', activation='relu'))
        model.add(Conv2D(64, (3, 3), activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.25))
        model.add(Flatten())
        model.add(Dense(512, activation='relu'))
        model.add(Dropout(0.5))
        # Ten outputs, one per CIFAR-10 class
        model.add(Dense(10, activation='softmax'))
        # learning_rate replaces the deprecated lr argument
        model.compile(loss='categorical_crossentropy',
                      optimizer=SGD(learning_rate=0.1, momentum=0.9, nesterov=True),
                      metrics=['accuracy'])
        return model
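
The last layer and the loss function work as a pair: softmax turns ten raw scores into probabilities, and categorical cross-entropy penalizes a low probability on the correct class. The following is a minimal pure-Python sketch of that relationship; the scores are made up for illustration, and the helper functions are simplified stand-ins rather than the Keras implementations.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def one_hot(index, num_classes):
    """Vector with 1.0 at `index` and 0.0 elsewhere."""
    return [1.0 if i == index else 0.0 for i in range(num_classes)]


def categorical_crossentropy(target, predicted, eps=1e-7):
    """Loss for one sample: -sum(target_i * log(predicted_i))."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, predicted))


# Hypothetical scores for the ten classes; index 3 ("cat") is the highest
logits = [0.1, 0.2, 0.0, 3.0, 0.3, 1.5, 0.0, 0.2, 0.1, 0.0]
probs = softmax(logits)

loss_correct = categorical_crossentropy(one_hot(3, 10), probs)  # true class: cat
loss_wrong = categorical_crossentropy(one_hot(8, 10), probs)    # true class: ship
print(f"loss if the image is a cat:  {loss_correct:.3f}")
print(f"loss if the image is a ship: {loss_wrong:.3f}")
```

The loss is small when the network puts most of its probability on the true class and grows quickly when it does not, which is the signal the optimizer uses during training.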

Code for training the network from the Keras example:

    def cifar10_train(model):
        (x_train, y_train), (x_test, y_test) = keras.datasets.cifar10.load_data()
        # One-hot encode the labels, as required by categorical_crossentropy
        y_train = keras.utils.to_categorical(y_train, 10)
        y_test = keras.utils.to_categorical(y_test, 10)
        # Scale pixel values to [0, 1]
        x_train = x_train.astype('float32')
        x_test = x_test.astype('float32')
        x_train /= 255.0
        x_test /= 255.0

        def scheduler(epoch):
            if epoch < 30:
                return 0.01
            if epoch < 50:
                return 0.001
            return 0.0001

        change_lr = keras.callbacks.LearningRateScheduler(scheduler)

        t_start = time.time()
        datagen = keras.preprocessing.image.ImageDataGenerator(
            # randomly rotate images in the range (degrees, 0 to 180); 0 disables rotation
            rotation_range=0,
            # randomly shift images horizontally (fraction of total width)
            width_shift_range=0.1,
            # randomly shift images vertically (fraction of total height)
            height_shift_range=0.1,
            # randomly flip images horizontally
            horizontal_flip=True,
            # do not flip images vertically
            vertical_flip=False)

        # Compute quantities required for feature-wise normalization
        # (std, mean, and principal components if ZCA whitening is applied).
        datagen.fit(x_train)

        # Fit the model on the batches generated by datagen.flow().
        # fit() accepts generators directly; fit_generator() is deprecated.
        model.fit(datagen.flow(x_train, y_train, batch_size=128),
                  epochs=60, callbacks=[change_lr],
                  validation_data=(x_test, y_test))
        print("Training done, dT:", time.time() - t_start)

We use a scheduler to adjust the learning rate, which controls how large a step the optimizer takes on each update. In the later epochs, when accuracy gains between steps are small, a lower learning rate makes the updates more conservative, which improves the final accuracy.
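
A step schedule like this one can also be described as a list of epoch boundaries and a list of rates. The sketch below builds such a schedule with the standard bisect module; make_step_schedule is a hypothetical helper introduced here for illustration, not part of Keras. Keras passes a zero-based epoch index to the schedule function, so epoch 30 is the 31st epoch.

```python
from bisect import bisect_right


def make_step_schedule(boundaries, rates):
    """Build a piecewise-constant schedule.

    rates[i] applies until the epoch index reaches boundaries[i];
    the last rate applies from the final boundary onward.
    """
    if len(rates) != len(boundaries) + 1:
        raise ValueError("need exactly one more rate than boundaries")

    def schedule(epoch):
        # bisect_right counts how many boundaries are <= epoch
        return rates[bisect_right(boundaries, epoch)]

    return schedule


# Same thresholds and rates as the scheduler in the training code
scheduler = make_step_schedule(boundaries=[30, 50], rates=[0.01, 0.001, 0.0001])
for epoch in (0, 29, 30, 49, 50, 59):
    print(f"epoch {epoch:2d}: learning rate {scheduler(epoch)}")
```

Written this way, trying a different decay plan only means changing two lists.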

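We also use the ImageDataGenerator class. It expands the training set by applying random transformations such as shifts, rotations, and flips to existing images. With the settings above, images are shifted by up to 10% of their width or height and flipped horizontally, while rotation is turned off (rotation_range=0). In other words, you can generate additional samples from each original image, which generally improves the model’s training quality.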
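
To show what such augmentation does to the pixels, here is a toy sketch that flips and shifts a tiny "image" stored as a nested list. It is only an illustration of the idea: the function names are made up, and the shift pads with a constant value for simplicity, which may differ from how ImageDataGenerator fills the gap.

```python
import random


def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def shift_horizontally(image, offset, fill=0):
    """Shift pixels right (offset > 0) or left (offset < 0), padding with `fill`.

    Assumes abs(offset) does not exceed the image width.
    """
    width = len(image[0])
    shifted = []
    for row in image:
        if offset >= 0:
            new_row = [fill] * offset + row[:width - offset]
        else:
            new_row = row[-offset:] + [fill] * (-offset)
        shifted.append(new_row)
    return shifted


def augment(image, rng, max_shift=1):
    """Return a randomly flipped and shifted copy of `image`."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    return shift_horizontally(image, rng.randint(-max_shift, max_shift))


image = [[1, 2, 3, 4],
         [5, 6, 7, 8]]
rng = random.Random(0)  # fixed seed so repeated runs give the same variants
for _ in range(3):
    print(augment(image, rng))
```

Each call produces a slightly different variant of the same picture, so the network sees more variety than the original dataset contains.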

Starting the training:

    model = cifar10_model()
    cifar10_train(model)
    model.save('cifar10_32x32.h5')

Training takes about 15 minutes with a GPU and up to an hour without one.

Recognition function:

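    def cifar10_predict(model, image_file):
        labels = ["airplane", "automobile", "bird", "cat",
                  "deer", "dog", "frog", "horse", "ship", "truck"]
        img = keras.preprocessing.image.load_img(image_file, target_size=(32, 32))
        # Add a batch dimension and scale pixel values to [0, 1]
        img_arr = np.expand_dims(img, axis=0) / 255.0
        # predict() returns ten probabilities; take the index of the largest one
        # (predict_classes() is no longer available in recent Keras versions)
        result = np.argmax(model.predict(img_arr), axis=-1)
        print("Result: {}".format(labels[result[0]]))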
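
The prediction step boils down to picking the index with the highest probability among the ten outputs and looking up its name. A minimal sketch of that lookup in plain Python, using a made-up probability vector rather than real model output:

```python
LABELS = ["airplane", "automobile", "bird", "cat",
          "deer", "dog", "frog", "horse", "ship", "truck"]


def decode(probabilities):
    """Return (label, probability) for the most likely class."""
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return LABELS[best], probabilities[best]


# Hypothetical softmax output for a single image
probabilities = [0.01, 0.70, 0.02, 0.03, 0.01, 0.02, 0.01, 0.05, 0.05, 0.10]
label, score = decode(probabilities)
print(f"Result: {label} ({score:.0%})")
```

Returning the probability alongside the label makes it possible to ignore low-confidence answers.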

This neural network can be used for practical tasks—for example, filtering a video stream to keep only frames that contain a car.
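
The frame-filtering idea can be sketched as a small generator. Everything here is hypothetical scaffolding: keep_frames and fake_classify are illustrative names, and the frames are plain strings standing in for images. In a real pipeline, classify would wrap the trained model and return one of the ten class names.

```python
def keep_frames(frames, classify, wanted="automobile"):
    """Yield only the frames whose predicted label equals `wanted`."""
    for frame in frames:
        if classify(frame) == wanted:
            yield frame


def fake_classify(frame):
    """Stand-in classifier for the demo: decides by the frame's name."""
    return "automobile" if frame.startswith("car") else "bird"


frames = ["road", "car_1", "tree", "car_2"]
print(list(keep_frames(frames, fake_classify)))
```

Because the function is a generator, frames are processed one at a time, which suits a stream of unknown length.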

ResNet-50

We’ve hit the limits of what a home PC can do for training neural networks. The previous model processes images of only 32 × 32 pixels, yet training it still takes about fifteen minutes on a machine with a Core i7 CPU and a GeForce GTX 1060 GPU.

At this computational speed, tuning or modifying the network parameters will take a considerable amount of time.

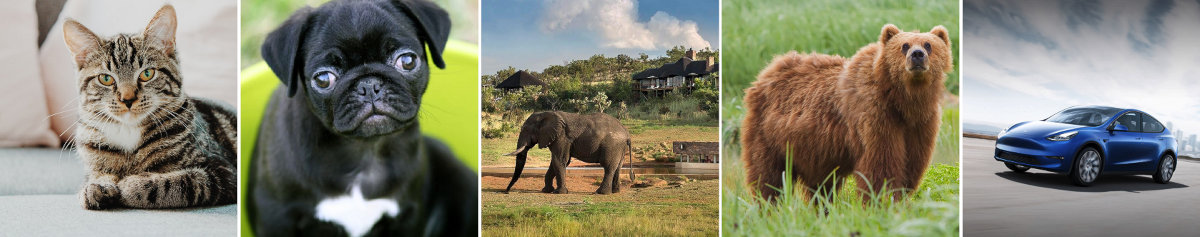

So let’s use an off-the-shelf, pretrained network. As an example, consider ResNet-50, a deep residual network with 50 layers. The ResNet architecture won first place in the ILSVRC 2015 classification task. ResNet-50 can classify images into 1,000 categories, and it expects inputs of size 224 × 224.

Our code becomes much simpler: we don’t need to build or train a model. We can go straight to recognition.

def predict_resnet50(model, image_file):

img = tf.contrib.keras.preprocessing.image.load_img(image_file,

target_size=(224, 224))

img_data = tf.contrib.keras.preprocessing.image.img_to_array(img)

x = tf.contrib.keras.applications.resnet50.preprocess_input(

np.expand_dims(img_data, axis=0))

probabilities = model.predict(x)

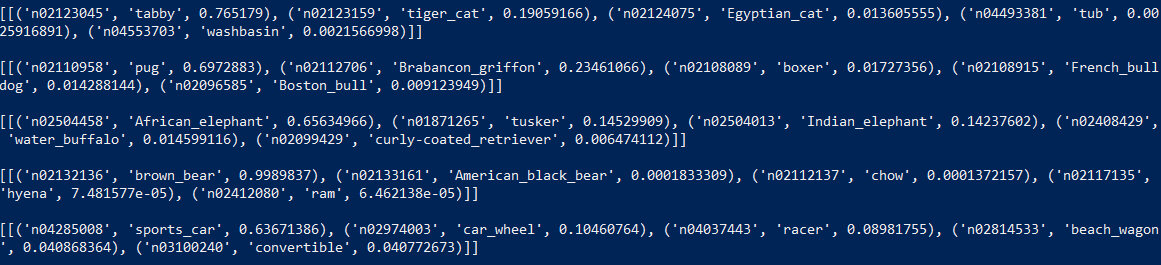

print(tf.contrib.keras.applications.resnet50.decode_predictions(probabilities, top=5))

model = tf.keras.applications.ResNet50(input_shape=(224, 224, 3))

predict_resnet50(model, "cat.png")

predict_resnet50(model, "dog.png")

predict_resnet50(model, "elephant.jpg")

predict_resnet50(model, "bear.png")

predict_resnet50(model, "car.png")

The network recognized a tabby cat, a pug, an elephant, a bear, and a sports car. For each image, it selected the top five matches.
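
Selecting the top five matches amounts to sorting the classes by probability and keeping the first five. The sketch below does that for a toy set of seven classes; the class names and scores are invented for illustration, whereas the real network scores 1,000 categories.

```python
def top_k(probabilities, class_names, k=5):
    """Return the k most likely (name, probability) pairs, best first."""
    ranked = sorted(zip(class_names, probabilities),
                    key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Made-up class names and scores for a single image
names = ["tabby", "tiger_cat", "Egyptian_cat", "lynx",
         "pug", "sports_car", "brown_bear"]
scores = [0.62, 0.21, 0.09, 0.03, 0.025, 0.015, 0.01]

for name, score in top_k(scores, names):
    print(f"{name:14s} {score:.3f}")
```

Looking at several candidates instead of one is useful when the categories are close, as with different cat breeds.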

The network operates completely autonomously. So if you need a standalone alarm that triggers when it spots a bear or an elephant, now you know how to build one.
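
As a rough sketch of the alarm logic, the function below checks one image’s decoded predictions against a watch list. It assumes the input is a list of (class_id, class_name, score) tuples for a single image, which is the shape decode_predictions produces per image. The class IDs are placeholders, and the watch list, class names, and 0.5 threshold are illustrative assumptions.

```python
WATCH_LIST = {"brown_bear", "African_elephant"}  # illustrative class names


def should_alarm(decoded, watch_list=WATCH_LIST, min_score=0.5):
    """Return True if any watched class is predicted with enough confidence.

    decoded: list of (class_id, class_name, score) tuples for one image.
    """
    return any(name in watch_list and score >= min_score
               for _, name, score in decoded)


# Made-up predictions with placeholder class IDs
bear_frame = [("n00000001", "brown_bear", 0.83),
              ("n00000002", "American_black_bear", 0.11)]
cat_frame = [("n00000003", "tabby", 0.62),
             ("n00000004", "tiger_cat", 0.21)]

print("bear frame:", should_alarm(bear_frame))
print("cat frame:", should_alarm(cat_frame))
```

A confidence threshold helps avoid false alarms when a watched class shows up only as a low-probability guess.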