Our primary tools will be the Robot Operating System (ROS), a framework for robotics applications, and the Gazebo simulator. Together they let you test algorithms in a simulated environment without risking damage to real hardware. Both ROS and Gazebo run on Linux; we’ll be using Ubuntu 16.04.

Types of drones

Drones come in many forms. By design, we distinguish several types of drones:

- Multirotor — copters

- Fixed-wing

- Hybrid — takes off vertically, then transitions to wing-borne flight

Drones are also categorized as consumer and commercial.

Consumer drones, as you can imagine, are the ones you can buy off the shelf and use as a flying camera. DJI Phantom and Mavic are solid models. There are cheaper options, but they’re definitely lower in quality. These quadcopters are used to shoot events, buildings, and historical sites. For example, you can capture a series of photos of a building or monument from a drone and then generate a 3D model of the object using photogrammetry.

As a rule, these drones are flown manually; less often they run autonomous missions using GPS waypoints. A single company, DJI, holds more than half of the consumer quadcopter market. They’re tough to compete with because they make a genuinely great product: affordable, capable, and easy to use. That said, in the selfie-drone segment, Skydio is starting to squeeze DJI with its Skydio 2 drone. Its killer feature is true autonomy: for example, it can follow a motorcyclist through the woods. It detects obstacles and plots a safe route in real time while keeping the subject in frame. Really impressive stuff.

Companies use commercial drones to perform specific tasks. Some regularly fly over farmland to monitor crop conditions and capture imagery; others can apply fertilizers with pinpoint accuracy. Drones are also used on construction sites and in quarries. Each day they fly over the site and take photos, which are turned into a cloud-hosted 3D model that helps track changes from day to day.

An example of a Russian company actively applying this technology in the U.S. market is Traceair.

Another use case is pipeline inspection with drones. This is especially relevant for Russia: our gas pipelines span thousands of kilometers, and we need to monitor for leaks and unauthorized taps.

And of course, everyone has heard of drone delivery. I’m not sure whether Amazon Prime Air will ever get off the ground, but even now Matternet is delivering goods in Zurich and several U.S. cities, and Zipline has long been flying medical supplies across Africa. In Russia, progress has been much slower: there was recent news about a Russian Post drone that crashed on its first test, and Sberbank is testing cash delivery by drones.

The companies Volocopter and Ehang already have flying taxi prototypes, while Hoversurf, a company with Russian roots, is developing a flying bike.

There are indoor use cases for commercial drones too, but they aren’t very common yet; this area is seeing intensive R&D. Potential applications for this type of drone include:

- warehouse inventory audits;

- indoor construction-site inspections;

- safety monitoring in underground mines;

- industrial equipment inspections on the shop floor.

Time will tell which projects actually get built and end up disrupting our lives. The ultimate goal is to create a drone control system you could talk to like the protagonist of Blade Runner 2049: “Photograph everything here!”

Autonomous Navigation

Commercial drone deployments typically rely on autonomous flight rather than manual control. The reason is simple: commercial missions are often run repeatedly at the same site using the same flight plan, which can be preprogrammed to reduce pilot/operator costs.

To fly autonomously, a drone must at least know its position in space with high accuracy. In open areas it can rely on GPS, which provides accuracy within a few meters. A ground base station combined with GPS RTK can improve that to a few centimeters. However, a base station isn’t always practical and is expensive. Standard GPS is sufficient to program flight routes over farmland, construction sites, and pipelines, and in these scenarios drones can fly on their own. This capability is available in any modern off-the-shelf drone.

In this mode, it’s only safe to fly in open, obstacle-free airspace. To inspect buildings or pipelines, or to operate indoors, you need additional sensors that measure the distance to nearby objects. Common choices include single-beam sonars and lidars, 2D and 3D lidars, and depth cameras. The drone must also carry an onboard computer that can ingest these sensor feeds in real time, build a 3D model of the surroundings, and plan a safe route through it.

There’s another important issue: when flying in confined spaces or between tall buildings, GPS may be unavailable, so you need an alternative source for the drone’s position. You can estimate your position onboard by processing the video feed from the onboard cameras—ideally using stereo or depth cameras. This approach is known as SLAM (Simultaneous Localization and Mapping).

In the stream of camera frames, the algorithm looks for distinctive points (features), such as tiny corners or other local irregularities. Each point is assigned a descriptor so that if the same point is found in later frames—after the camera has moved—it receives the same descriptor, enabling the algorithm to say: “This frame contains the same point as the previous one.”

The algorithm doesn’t know the 3D coordinates of the feature points or the camera poses at the moments the frames were captured—those are exactly the parameters it needs to estimate. It tracks how the pixel coordinates of the feature points change between frames and finds parameters such that, when the points are projected onto the image plane, their pixel coordinates match the observed (measured) values.
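To make the idea of "projected pixel coordinates match the observed values" more concrete, here is a minimal Python sketch of a reprojection error. It is a toy under strong assumptions, not what any particular SLAM library does: the camera is an ideal pinhole, it only translates (no rotation), and the focal length and image center (`focal_px`, `cx`, `cy`) are made-up values. The function names are mine.

```python
def project(point_cam, focal_px, cx, cy):
    """Project a 3D point given in the camera frame onto the image plane (pinhole model)."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return (focal_px * x / z + cx, focal_px * y / z + cy)


def reprojection_error(points_world, observed_px, cam_translation,
                       focal_px=500.0, cx=320.0, cy=240.0):
    """Sum of squared pixel distances between projected and observed features.

    Assumption: the camera is not rotated, so moving a world point into the
    camera frame is just a subtraction of the camera position.
    """
    tx, ty, tz = cam_translation
    total = 0.0
    for (X, Y, Z), (u_obs, v_obs) in zip(points_world, observed_px):
        u, v = project((X - tx, Y - ty, Z - tz), focal_px, cx, cy)
        total += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return total


# Hypothetical scene: three feature points and a camera shifted 0.2 m along x.
points = [(0.0, 0.0, 5.0), (1.0, 0.0, 5.0), (0.0, 1.0, 4.0)]
true_pose = (0.2, 0.0, 0.0)
observed = [project((X - 0.2, Y, Z), 500.0, 320.0, 240.0) for X, Y, Z in points]

print(reprojection_error(points, observed, true_pose))        # error at the true pose
print(reprojection_error(points, observed, (0.0, 0.0, 0.0)))  # error at a wrong guess
```

A real algorithm searches over camera poses and point coordinates at the same time to drive this error toward its minimum; the sketch only evaluates the error for two candidate poses.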

In the end, you get an estimate of the camera’s motion in 3D space. SLAM algorithms are usually compute‑intensive, but the Intel RealSense T265 camera has a dedicated chip that performs SLAM in hardware.

Enabling autonomous drone control requires solving three key challenges.

- Determine the drone’s position in 3D space. Use a GPS receiver, or compute coordinates onboard by running SLAM on the video feed. Ideally, combine both to obtain global (GPS) and local (SLAM) coordinates.

- Build a 3D map of the drone’s surroundings using sensors such as stereo cameras, depth cameras, and LiDAR.

- Add path-planning software that accounts for the mission objective, current coordinates, and the environment map.
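The third challenge, path planning, can be illustrated with a deliberately simplified Python sketch. I assume here that the map is a small 2D occupancy grid (1 is an obstacle, 0 is free space) and use plain breadth-first search; real planners work in 3D and use more capable algorithms. The function name `plan_path` and the grid are hypothetical.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search over a 2D occupancy grid.

    grid[r][c] == 1 marks an obstacle, 0 marks free space.
    Returns a list of (row, col) cells from start to goal, or None if no path exists.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None


# Hypothetical map: a wall with a single gap on the right-hand side.
grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(plan_path(grid, (0, 0), (2, 0)))
```

The three inputs match the list above: the goal cell is the mission objective, the start cell is the current position, and the grid is the environment map.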

Hands-on

Since we want to test a simple autonomous drone control program without crashing anything, we’ll use a simulator. We’ll need the following software.

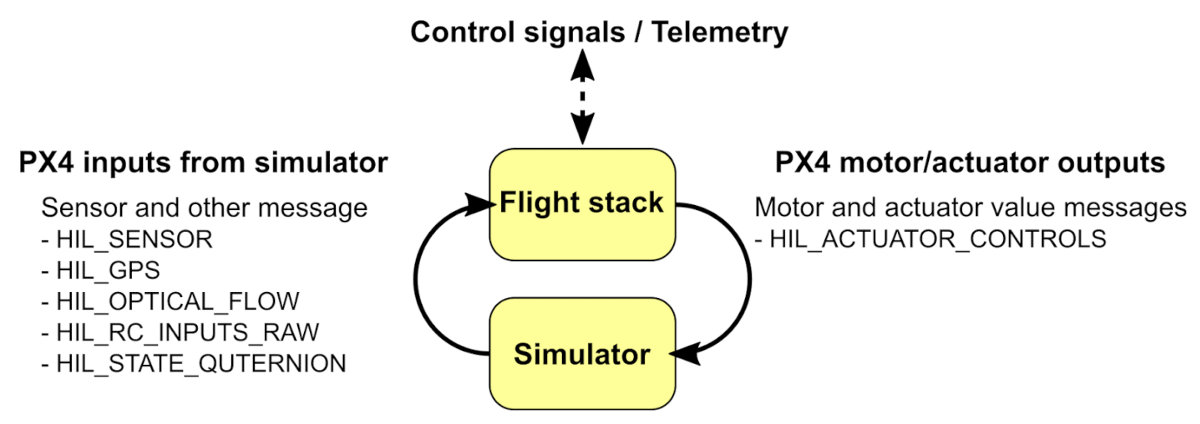

Flight Controller

Motor spin and the drone’s flight are handled by the flight controller—a Pixhawk board with an onboard ARM processor running the PX4 firmware. You can compile PX4 in Software-In-The-Loop (SITL) mode for testing on a personal computer with an Intel x86 CPU. In this mode, PX4 “thinks” it’s running on real flight‑controller hardware, but it actually runs in a PC-based simulation environment and receives simulated sensor data.

Robot Operating System

We’ll need a range of software modules: some to interface with sensors, others to run SLAM, others to build a 3D map, and still others to plan a safe route through it. To build these modules we use the Robot Operating System (ROS), a widely used framework for robotics applications. A ROS application is a collection of interacting packages; the running components are called nodes.

One node, called the master node, is responsible for registering the other nodes in the application. Each node runs as a separate Linux process. ROS provides mechanisms for message passing and synchronization between nodes. There are both standard message types and user-defined ones. Messages can include sensor data, video frames, point clouds, control commands, and parameter updates.
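If the publish/subscribe idea is new to you, the toy below may help. It is not the ROS API: the `ToyBus` class and the topic names are made up for illustration, and everything runs inside one Python process, whereas real ROS nodes are separate processes that exchange typed messages. The sketch only shows the pattern of nodes talking through named topics instead of calling each other directly.

```python
from collections import defaultdict


class ToyBus:
    """In-process stand-in for topic-based messaging (illustration only, not ROS)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        """Register a callback to be invoked for every message on the topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver the message to every callback subscribed to the topic."""
        for callback in self._subscribers[topic]:
            callback(message)


bus = ToyBus()
received = []

# A "planner" component listens for range measurements...
bus.subscribe("/range", received.append)

# ...and a "sensor driver" component publishes them without knowing who listens.
bus.publish("/range", {"distance_m": 2.5})
bus.publish("/pose", {"x": 0.0})  # nobody subscribed, so the message is dropped

print(received)
```

The useful property is decoupling: the publisher does not care whether the data comes from a real sensor or a simulated one, or how many consumers there are.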

ROS nodes can run on different machines; in that case they communicate over the network. ROS includes a dedicated tool called RViz for visualizing messages within the system. For example, you can see how a UAV perceives its surroundings, plot its trajectory, and display the video feed from its camera.

ROS supports creating packages in C++ and Python.

Gazebo: A Real‑World Simulator

All these software components need to ingest data from sensors and control actuators. From the program’s perspective, it doesn’t matter whether the sensors and actuators are real or simulated, so you can test all the algorithms on a computer first, in the Gazebo simulator.

Gazebo simulates how a robot interacts with its environment. Its high-quality 3D graphics let you view the world and the robot almost like in a video game. It includes a built-in physics model and supports various sensors such as cameras and rangefinders. You can extend the available sensors by creating your own plugins. Sensor measurements can be simulated with configurable noise levels.
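To give a feel for what "configurable noise" means, here is a small Python sketch of a noisy rangefinder. This is my own illustration, not Gazebo code: I assume zero-mean Gaussian noise with a standard deviation you choose, and the function name and numbers are hypothetical.

```python
import random


def noisy_range(true_distance_m, noise_stddev_m, rng):
    """Return a simulated rangefinder reading.

    The reading is the true distance plus zero-mean Gaussian noise,
    clamped at zero because a distance cannot be negative.
    """
    return max(0.0, true_distance_m + rng.gauss(0.0, noise_stddev_m))


rng = random.Random(42)  # fixed seed so the run is repeatable
readings = [noisy_range(2.0, 0.05, rng) for _ in range(1000)]
mean_reading = sum(readings) / len(readings)

print(min(readings), max(readings), mean_reading)
```

Individual readings scatter around the true 2.0 m, while their average stays close to it. That is the behavior your filtering and control code has to cope with on real hardware.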

Gazebo comes with ready-made environments and sample tasks, and of course you can create your own. Beyond sensor plugins, you can also write programmatic plugins. For example, you can implement a motor model in code that defines the relationship between the controller signal and the motor’s thrust.
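As an illustration of such a motor model, here is a minimal Python sketch. Real Gazebo plugins are written in C++, so treat this purely as a picture of the math. I assume the common simplification that thrust grows with the square of the rotor speed; the maximum RPM and the thrust coefficient are made-up numbers.

```python
def motor_thrust(command, max_rpm=10000.0, thrust_coeff=1.0e-7):
    """Map a normalized controller command to thrust in newtons.

    Assumptions (illustrative only):
      * command is clamped to the range 0..1,
      * rotor speed is proportional to the command,
      * thrust = thrust_coeff * rpm ** 2.
    """
    command = min(1.0, max(0.0, command))
    rpm = command * max_rpm
    return thrust_coeff * rpm ** 2


for cmd in (0.0, 0.5, 1.0):
    print(cmd, motor_thrust(cmd))
```

Note that halving the command gives only a quarter of the thrust. A nonlinearity like this is exactly why the motor model is worth simulating before you fly.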

Docker Image

To set up the described simulation environment on your computer, you’d need to install a large number of packages, and dependency issues may crop up. To save you time, I’ve built a Docker image with the packages preconfigured and all the software we need for the initial drone test in Gazebo.

You can find the container on my GitHub — go ahead and download it.

How the Flight Control Software Works

In our simplest example, the drone’s flight will be very basic.

- The drone’s coordinates come not from SLAM but from a simulated GPS feed.

- In the simulator, the drone does not build a map of its surroundings.

- The drone flies a predefined flight path.

Not bad for a first test.

Getting Started

First, install Docker and the Python packages.

sudo apt install python-wstool python-catkin-tools -y

Download the disk image and the software.

export FASTSENSE_WORKSPACE_DIR=/home/urock/work/px4

cd $FASTSENSE_WORKSPACE_DIR

mkdir -p catkin_ws/src # This is where we'll clone the ROS control module

mkdir -p Firmware # This is where PX4 code will be compiled in SITL mode

cd catkin_ws/src

git clone git@github.com:FastSense/px4_ros_gazebo.git .

wstool init . # Creates a ROS workspace

Build the image.

cd catkin_ws/src

docker build . -t x_kinetic_img # x_kinetic_img — name of the image being created

Download and build the PX4 code in SITL mode inside a Docker container.

cd catkin_ws/src

./docker/docker_x.sh x_kinetic_img make_firmware

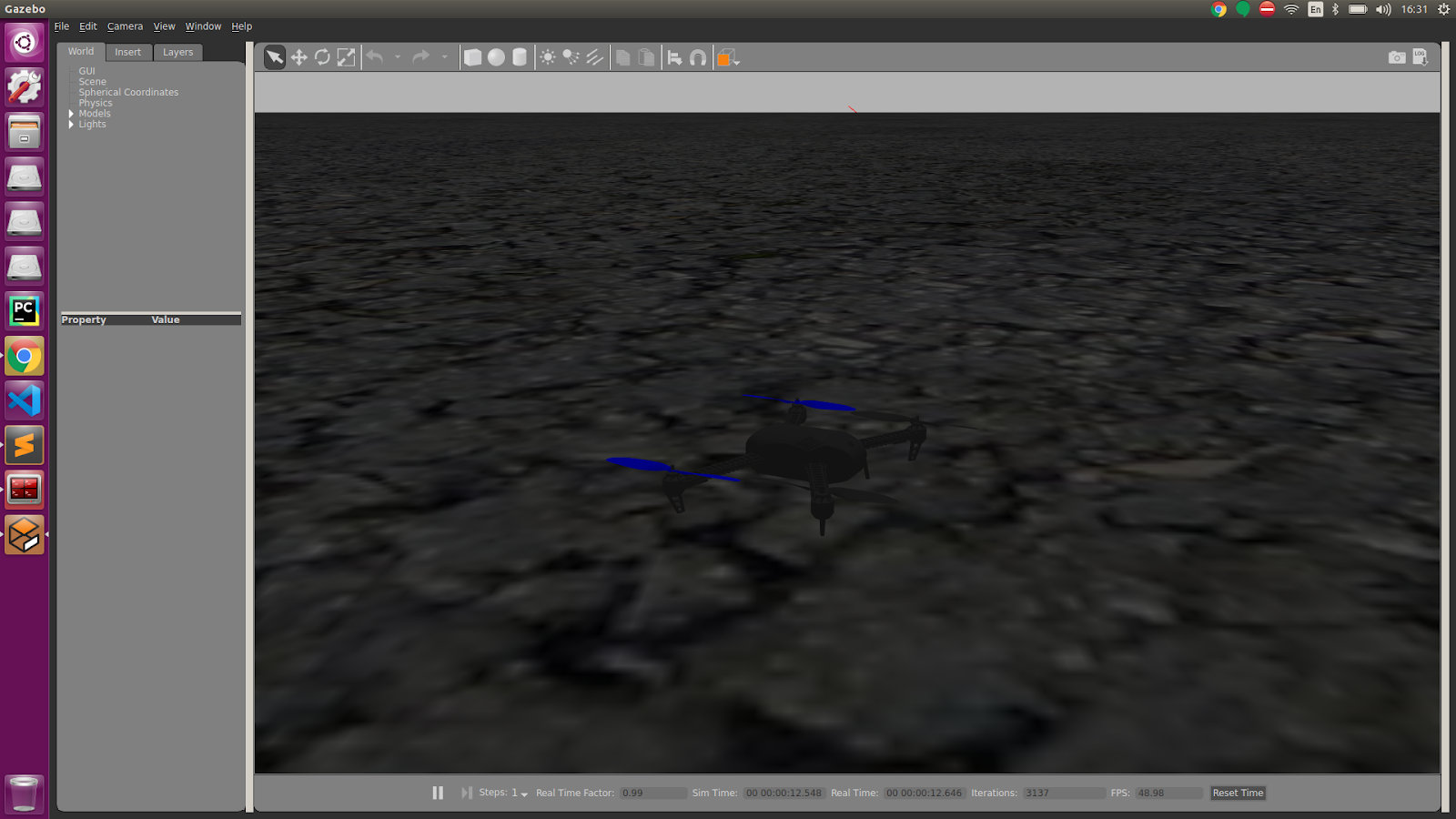

# After compilation, a Gazebo window should open with a drone lying on the asphalt

# After each container run, you have to remove the stopped container

# Sorry, I'm not very comfortable with Docker yet and haven't come up with anything smarter

docker rm $(docker ps -a -q)

Launch the container in a Bash shell, then compile and run the test.

cd catkin_ws/src

./docker/docker_x.sh x_kinetic_img bash

# Inside the docker container

cd /src/catkin_ws/

catkin build

source devel/setup.bash # You only need to do this after the first build

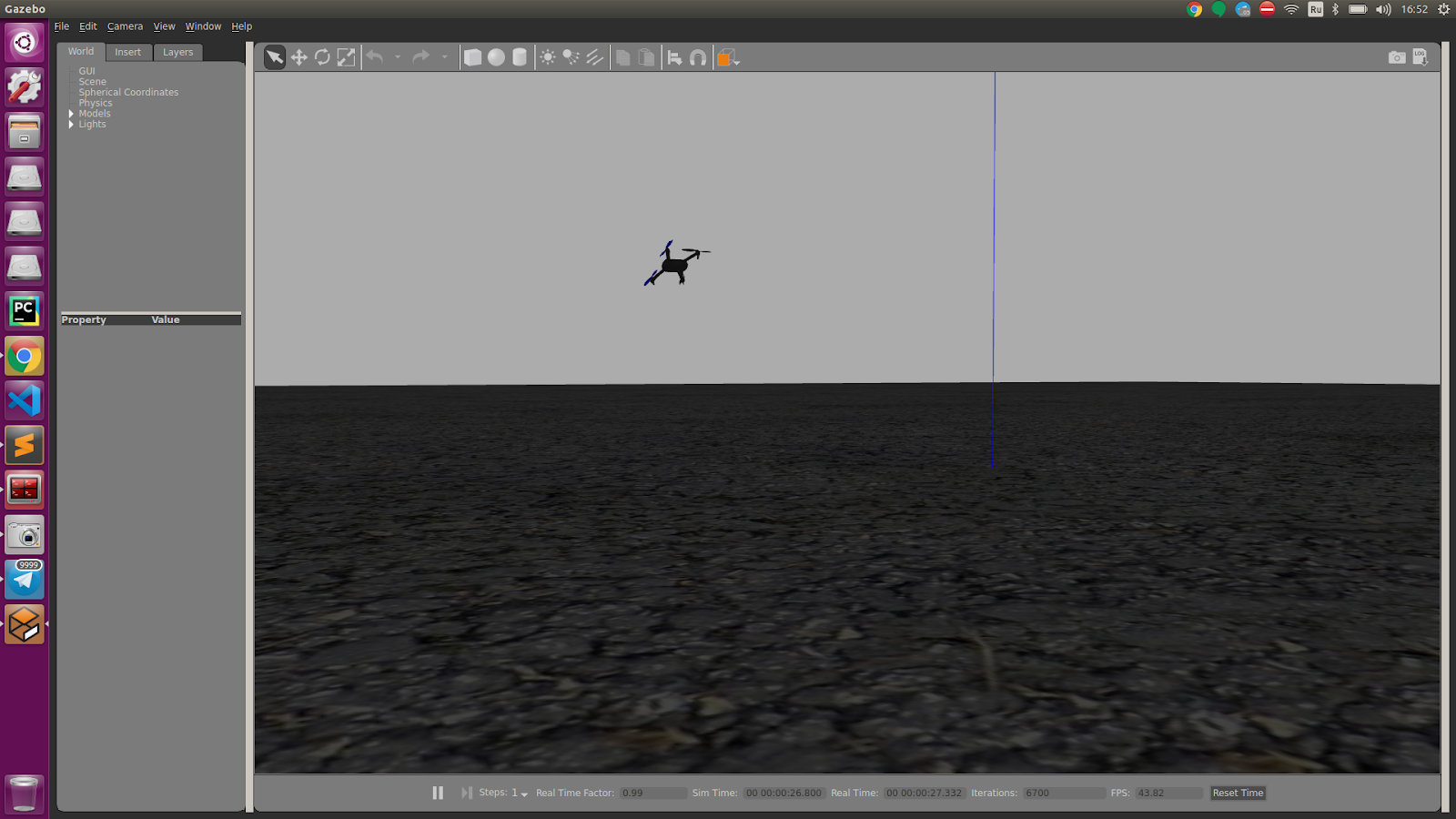

roslaunch simple_goal simple_goal.launch

You should see the same Gazebo window, with the drone starting to fly. The console will show the test’s log output as it runs.

The test should end with a SUMMARY block in the console.

Ignore any errors that PX4 prints to the console along the way. It produces them for reasons I don’t know.

Press Ctrl-C to terminate the process.

Let’s look at the code

My Fast Sense repository is based on materials from the team that developed PX4, the world’s most popular open-source flight controller.

They’ve got their own Docker setup guide, and on their GitHub you’ll find the autonomous control module code, which I migrated to my repository so everything’s in one place.

The Python control code is fairly small; I’m sure you can figure it out on your own. I’ll just note that you should start by reading the test_posctl() function. It lays out the flight logic: the drone is switched to OFFBOARD mode, then armed, takes off, and flies through the waypoints. After that, it lands and disarms.
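If you want the overall shape before opening the repository, here is a self-contained Python sketch of the same sequence. It is not the code from the repository: `FakeDrone`, `fly_mission`, the waypoints, and the tolerance are all hypothetical, and the "drone" just moves a point in memory instead of talking to PX4. The land mode name `AUTO.LAND` is my assumption about how the mode is spelled.

```python
import math


def distance(a, b):
    """Euclidean distance between two 3D points."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


class FakeDrone:
    """Hypothetical stand-in for the vehicle: records commands instead of flying."""

    def __init__(self):
        self.mode = "MANUAL"
        self.armed = False
        self.position = (0.0, 0.0, 0.0)
        self.log = []

    def set_mode(self, mode):
        self.mode = mode
        self.log.append("mode:" + mode)

    def set_armed(self, armed):
        self.armed = armed
        self.log.append("arm" if armed else "disarm")

    def step_towards(self, target, step=1.0):
        """Move at most `step` meters toward the target setpoint."""
        dist = distance(self.position, target)
        if dist <= step:
            self.position = tuple(float(t) for t in target)
        else:
            self.position = tuple(
                p + (t - p) * step / dist for p, t in zip(self.position, target)
            )


def fly_mission(drone, waypoints, tolerance=0.5, max_steps=10000):
    """OFFBOARD -> arm -> fly through the waypoints -> land -> disarm."""
    drone.set_mode("OFFBOARD")
    drone.set_armed(True)
    for waypoint in waypoints:  # the first waypoint above the start acts as takeoff
        steps = 0
        while distance(drone.position, waypoint) > tolerance:
            drone.step_towards(waypoint)
            steps += 1
            if steps > max_steps:
                raise RuntimeError("waypoint not reached: %s" % (waypoint,))
        drone.log.append("reached:%s" % (waypoint,))
    drone.set_mode("AUTO.LAND")  # assumed name of the land mode
    drone.set_armed(False)


drone = FakeDrone()
fly_mission(drone, [(0, 0, 5), (5, 0, 5), (5, 5, 5)])
print("\n".join(drone.log))
```

The real test does the same thing over ROS messages and adds timeouts at every stage, so that a stuck simulation fails the test instead of hanging forever.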

What’s next?

If you’re interested in programming drones, there are plenty of ways to get the right education or learn on your own. Here are a few tips to help you get started.

- You can start getting into robotics software engineering as early as high school.

- Choose a university with a dedicated robotics department. If you can’t make it to Stanford, MIT, or ETH Zurich, consider MIPT, Skoltech, or NSTU (there are certainly other strong Russian universities, but I know solid drone teams at these three).

- Come intern with us at Fast Sense: http://www.fastsense.tech/

- Build a team and compete in COEX hackathons (https://copterexpress.timepad.ru/events/) or in COEX World Skills (https://coex.tech/cloverws).

- And feel free to ask questions in the comments or email me.

See you!