What do we mean by security?

You can’t really discuss a device’s security until you define what you mean by it. Are we talking about the physical security of the data? Protection against low-level forensics like chip-off memory extraction, or just keeping out the curious who don’t know the password and can’t spoof the fingerprint reader? Is sending data to the cloud a security win or a liability? Which cloud exactly—what provider, what region, what data—and does the user know about it and have the option to disable it? And how likely is it, on a given platform, to pick up a trojan and lose not only your passwords but also the money in your account?

The security of mobile platforms can’t be viewed in isolation. Security is an end-to-end discipline that spans every aspect of device use—from communications and application isolation to low-level protections and data encryption.

Today we’ll briefly outline the main strengths and pain points of all modern mobile operating systems with any meaningful adoption. The list includes Google Android, Apple iOS, and Windows 10 Mobile (sadly, Windows Phone 8.1 can no longer be considered modern). As a bonus, we’ll also touch on BlackBerry 10, Sailfish, and Samsung Tizen.

Legacy: BlackBerry 10

Before we dive into the current platforms, a few words about BlackBerry 10, which has already bowed out. Why BlackBerry 10? At one time it was heavily marketed as “the most secure” mobile OS. Some of that was true; some, as usual, was exaggerated; and some things that mattered three years ago are hopelessly outdated today. Overall, we liked BlackBerry’s approach to security—though it wasn’t without its missteps.

Pros:

- The microkernel architecture and trusted boot chain provide real security. In the entire life of the platform, no one has managed to obtain superuser (root) access, despite repeated attempts, including by top-tier security firms (BlackBerry wasn’t always a minor player).

- You can’t bypass the device unlock password: after ten failed attempts, the device is fully wiped.

- No built-in cloud services and no deliberate user tracking. No data is sent off-device unless the user chooses to install a cloud app (optional support for OneDrive, Box.com, and Dropbox).

- Exemplary implementation of enterprise security policies and remote management via BES (BlackBerry Enterprise Service).

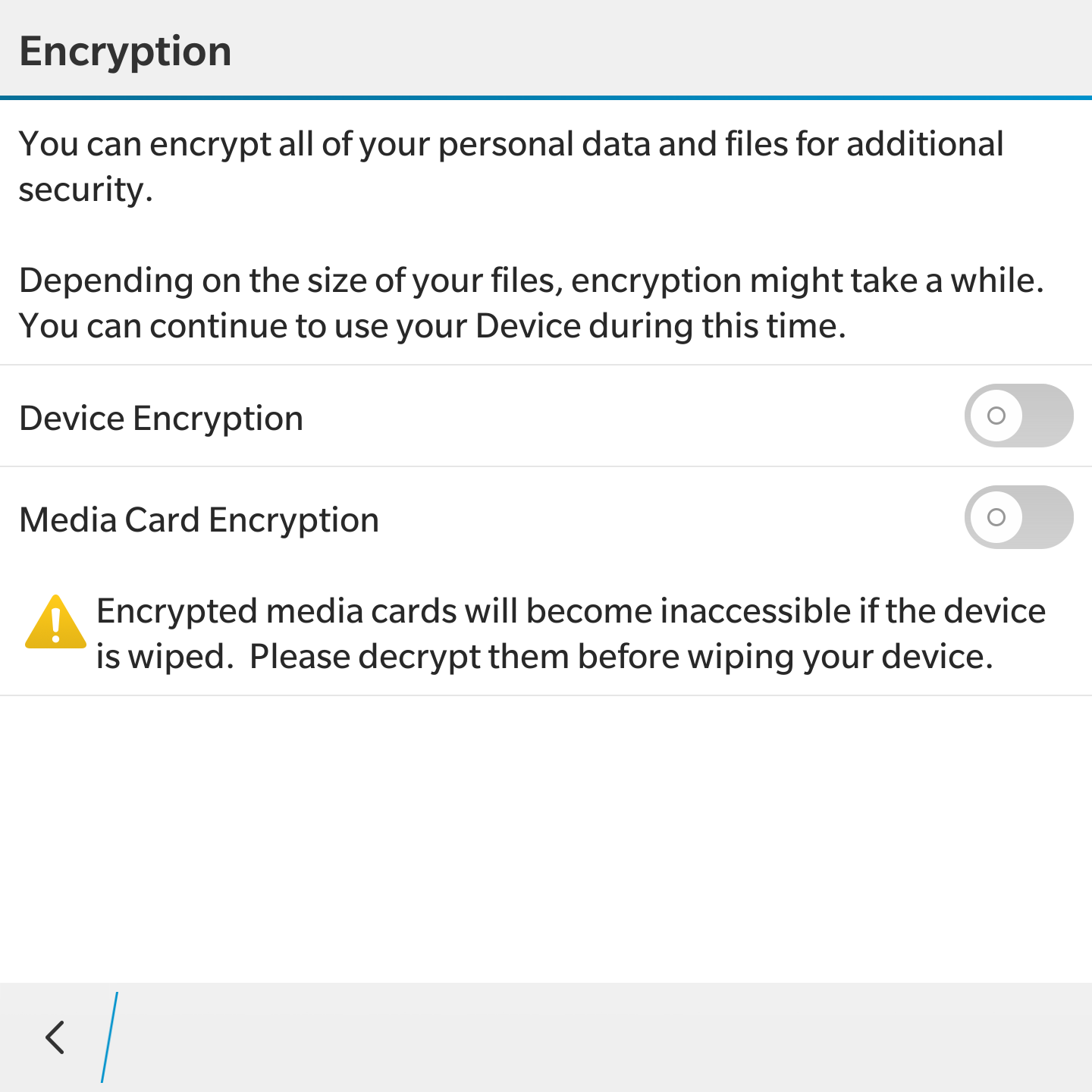

- Strong (optional) encryption for both internal storage and memory cards.

- No cloud backups at all; local backups are encrypted with a secure key tied to your BlackBerry ID.

Cons:

- Data is not encrypted by default. That said, an organization can enable device encryption on employee devices.

- Encryption is block-based and single-tier; there are no data protection classes and nothing akin to the iOS Keychain. For example, Wallet app data can be extracted from a backup.

- BlackBerry ID relies on just a username and password; there’s no two-factor authentication. That’s unacceptable by today’s standards. Moreover, if you know the BlackBerry ID password, you can retrieve the key used to decrypt backups tied to that account.

- Factory reset protection and anti-theft are very weak. They can be bypassed by replacing the BlackBerry Protect app when building an autoloader or—prior to BB 10.3.3—by downgrading the firmware.

- There is no MAC address randomization, enabling tracking of a specific device via Wi‑Fi access points.

Another red flag: BlackBerry readily cooperates with law enforcement, providing as much assistance as possible in tracking down criminals who use BlackBerry smartphones.

Overall, with proper configuration (and BlackBerry 10 users generally do configure their devices properly), the platform can deliver both a reasonable level of security and strong privacy. However, “power users” can negate those benefits by sideloading a hacked build of Google Play Services—inviting all the delights of Big Brother’s oversight.

Niche platforms: Tizen and Sailfish

Tizen and Sailfish are clear market also-rans—more so than Windows 10 Mobile or BlackBerry 10, whose share has dropped below 0.1%. Their security is essentially security through obscurity: we know little about it mostly because so few people care.

You can gauge how justified this approach is from a recently published report, which found roughly forty critical vulnerabilities in Tizen. At this point, the best we can do is restate the obvious.

- Without serious, independent research, you can’t make any claims about a platform’s security. Critical vulnerabilities won’t surface until the platform sees real adoption—by then, it’s too late.

- The absence of malware is simply due to the platform’s low adoption—a kind of security through obscurity.

- The security mechanisms are inadequate, missing, or exist only on paper.

- Certifications only prove the device passed a certification process; they say nothing about its actual security posture.

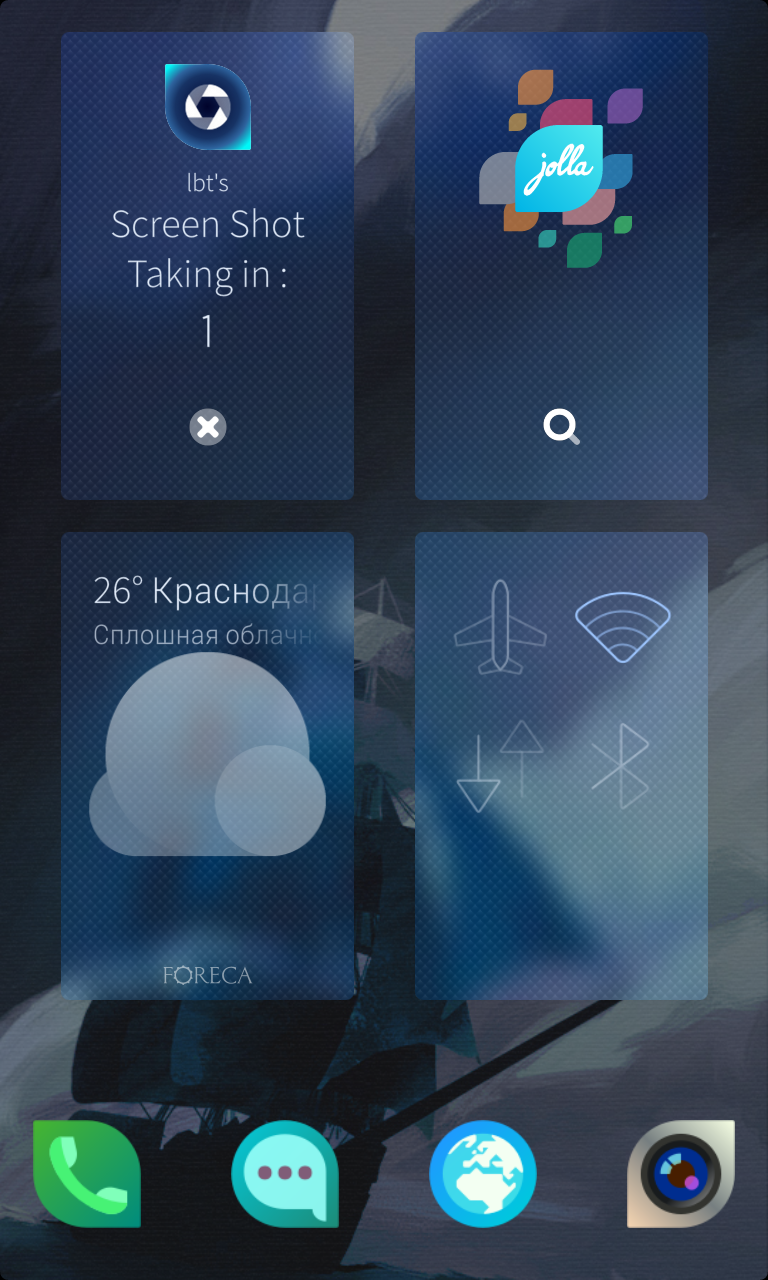

Jolla Sailfish

Sailfish is a mixed bag. On the one hand, the platform is sort of alive: every now and then new devices get announced, and even Russian Post bought a large batch at a sky‑high price. On the other, users are being asked to pay as much as a solid mid‑range Android phone for a Sailfish model with the specs of a bargain‑bin Chinese handset from three years ago. That strategy only makes sense in one scenario: Sailfish phones are bought with public funds and handed out to lower‑level civil servants. Needless to say, in that setup, none of the parties involved is really interested in any supposed “security.”

Even government certifications don’t guarantee anything, and neither does open source. For example, the Heartbleed vulnerability in OpenSSL went unnoticed for about two years. OpenSSL’s source code had been public for well over a decade, and the library ships in countless products, including router firmware. And in Android, which is also open source, new vulnerabilities are discovered on a regular basis.

Exotic operating systems come with no real ecosystem, a very limited selection of hardware and apps, underdeveloped tools for enforcing enterprise security policies, and security that’s questionable at best.

Sailfish OS

Samsung Tizen

Samsung Tizen stands somewhat apart from other “exotic” platforms. Unlike Ubuntu Touch and Sailfish, Tizen is fairly widespread. It powers dozens of Samsung smart TV models, as well as smartwatches and a handful of budget smartphones (Samsung Z1–Z4).

As soon as Tizen saw broader adoption, independent researchers took a hard look at the system. The findings were discouraging: within the first few months, they uncovered about forty critical vulnerabilities. To quote Amihai Neiderman, who conducted a security analysis of Tizen:

Quite possibly the worst code I’ve ever seen. Every possible mistake was made. It’s obvious the code was written or reviewed by someone who doesn’t understand security at all. It’s like asking a schoolkid to write your software.

The takeaway is clear: running an exotic, little-used system in a corporate environment is an open invitation to hackers.

Apple iOS

We’ll give Apple some credit. Yes, it’s a closed ecosystem, and yes, the price tag isn’t in line with the raw specs, but devices running iOS have been—and remain—the most secure among mainstream commercial options. This mainly applies to current models like the iPhone 6s and 7 (and arguably the SE).

Older devices are less resilient. For the aging iPhone 5c, 5s, and 6, there are already ways to unlock the bootloader and attack the device passcode (you can reach out to the vendor, Cellebrite, for details). But even on these legacy models, compromising the bootloader is labor-intensive and expensive—Cellebrite charges several thousand dollars for the service. I doubt anyone would go to those lengths to break into your or my phone.

So, where do we stand today? Let’s start with physical security.

All iPhones and iPads running iOS 8.0 and later use very strong protection mechanisms (iOS 10.3.2, current at the time of writing, is more secure still). Apple itself, both officially and in practice, declines to extract data from locked devices. Independent research (including by Elcomsoft) backs up Apple’s claims.

iOS includes an effective data-protection system for lost or stolen devices. You can remotely wipe data and lock the device. A thief who lacks both the device passcode and the owner’s Apple ID password can neither unlock the device nor resell it. That said, skilled hardware tampering can bypass this on the iPhone 5s and older models; Chinese repair shops have been known to manage it.

The built-in, multilayered encryption is exceptionally well designed and implemented. The data partition is always encrypted with AES, using a unique key for every file. When you delete a file, its key is destroyed, which makes recovering deleted data fundamentally impossible. Keys are protected by the Secure Enclave coprocessor and can’t be extracted, even with a jailbreak (we’ve tried). On startup, data remains encrypted until you enter the correct passcode. Certain data, such as downloaded email, gets an additional protection class. Website passwords are stored in the Keychain, and parts of the Keychain can’t be extracted even with a jailbreak.
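The deleted-file point can be made concrete with a toy Python model of this idea, usually called crypto-shredding. Every file gets its own random key, and deleting a file destroys only that key. The SHA-256 keystream below is a stand-in chosen so the sketch needs only the standard library. It is not a real cipher and not Apple’s AES-based implementation. The `ToyVolume` class is hypothetical.

```python
import hashlib
import secrets


def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: concatenated SHA-256(key || counter) blocks. NOT a real cipher."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor(data: bytes, stream: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(a ^ b for a, b in zip(data, stream))


class ToyVolume:
    """Ciphertext lives on 'disk'; each file's key lives in a separate key store."""

    def __init__(self) -> None:
        self.disk = {}      # file name -> ciphertext (left behind on deletion)
        self.keystore = {}  # file name -> per-file key (stands in for hardware-protected keys)

    def write(self, name: str, plaintext: bytes) -> None:
        key = secrets.token_bytes(32)  # a fresh random key for every file
        self.keystore[name] = key
        self.disk[name] = xor(plaintext, keystream(key, len(plaintext)))

    def read(self, name: str) -> bytes:
        key = self.keystore[name]  # raises KeyError once the key has been destroyed
        ciphertext = self.disk[name]
        return xor(ciphertext, keystream(key, len(ciphertext)))

    def delete(self, name: str) -> None:
        # Only the key is destroyed; the ciphertext stays on "disk",
        # but without its key it cannot be decrypted.
        del self.keystore[name]


vol = ToyVolume()
vol.write("note.txt", b"meet at noon")
print(vol.read("note.txt"))
vol.delete("note.txt")
try:
    vol.read("note.txt")
except KeyError:
    print("key destroyed; the ciphertext is still on disk but unreadable")
```

After deletion, nothing needs to overwrite the data blocks. Losing the 32-byte key is enough to make them meaningless.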

You can’t just plug an iPhone into a computer and pull data off it (photos are the exception). iOS uses a pairing trust model with computers, creating a cryptographic key pair that allows a trusted computer to make backups. Even that can be restricted via enterprise security policy or Apple’s Configurator tool. Backup security can be strengthened with an encryption password; that password is only required to restore from the backup and won’t interfere with day‑to‑day use.
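A backup password helps because the encryption key is derived from it rather than stored anywhere. The sketch below shows the general idea using PBKDF2-HMAC-SHA256 from Python’s standard library. These elements are assumptions for illustration, not Apple’s actual backup format:
- the iteration count
- the salt size
- the key check value
- the `make_backup_header` and `unlock_backup` helpers

```python
import hashlib
import hmac
import secrets
from typing import Optional

# Illustrative parameters only; these are NOT Apple's backup settings.
ITERATIONS = 100_000
SALT_BYTES = 16


def derive_backup_key(password: str, salt: bytes, iterations: int = ITERATIONS) -> bytes:
    """Stretch the backup password into a 256-bit key with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations, dklen=32)


def key_check_value(key: bytes) -> bytes:
    """A digest stored with the backup so a restore tool can recognize the right key."""
    return hashlib.sha256(b"key-check" + key).digest()


def make_backup_header(password: str, iterations: int = ITERATIONS) -> dict:
    """Only the salt, iteration count and check value are stored; the key itself is not."""
    salt = secrets.token_bytes(SALT_BYTES)
    key = derive_backup_key(password, salt, iterations)
    return {"salt": salt, "iterations": iterations, "check": key_check_value(key)}


def unlock_backup(password: str, header: dict) -> Optional[bytes]:
    """Re-derive the key at restore time; return it only if the password is right."""
    key = derive_backup_key(password, header["salt"], header["iterations"])
    if hmac.compare_digest(key_check_value(key), header["check"]):
        return key
    return None


header = make_backup_header("correct horse battery staple")
print(unlock_backup("correct horse battery staple", header) is not None)
print(unlock_backup("wrong guess", header) is not None)
```

The iteration count is the knob that matters. Each password guess costs an attacker the full PBKDF2 computation. The legitimate user pays that cost only once, at restore time.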

iPhone unlocking is implemented at a solid security level. You can use a 4- or 6-digit PIN (six digits has been the default since iOS 9) or a stronger alphanumeric passcode. The only additional unlock method is the fingerprint sensor, and its implementation gives an attacker very little room to abuse it. Fingerprint data is encrypted, and Touch ID is disabled until the passcode is entered in these cases:
- after a power-off or reboot
- after some time if the device hasn’t been unlocked
- after five failed attempts
- after a period if the user hasn’t entered the passcode
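The fallback rules above amount to a small policy check. Here is a minimal sketch under stated assumptions. The five-attempt limit comes from the text. The 48-hour and 156-hour values are our assumptions for the unspecified periods. `LockState` and its fields are hypothetical.

```python
from dataclasses import dataclass

# Five failed attempts comes from the text; the two time limits are assumptions.
MAX_FAILED_FINGERPRINT_ATTEMPTS = 5
MAX_HOURS_SINCE_ANY_UNLOCK = 48
MAX_HOURS_SINCE_PASSCODE = 156


@dataclass
class LockState:
    rebooted_since_passcode: bool  # powered off or restarted since the passcode was last entered
    hours_since_any_unlock: float
    hours_since_passcode: float
    failed_fingerprint_attempts: int


def biometric_unlock_allowed(s: LockState) -> bool:
    """Return False when the device must insist on the passcode instead of a fingerprint."""
    if s.rebooted_since_passcode:
        return False
    if s.hours_since_any_unlock > MAX_HOURS_SINCE_ANY_UNLOCK:
        return False
    if s.failed_fingerprint_attempts >= MAX_FAILED_FINGERPRINT_ATTEMPTS:
        return False
    if s.hours_since_passcode > MAX_HOURS_SINCE_PASSCODE:
        return False
    return True


print(biometric_unlock_allowed(LockState(False, 2.0, 20.0, 0)))
print(biometric_unlock_allowed(LockState(True, 0.1, 0.1, 0)))
```

Every rule fails toward the passcode. That is why a seized phone that has been switched off cannot be opened with the owner’s finger.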

PIN code or fingerprint sensor: your call

iOS has an option to automatically erase data after ten failed passcode attempts. Unlike BlackBerry 10, this is enforced at the OS level; for older versions of iOS (up to 8.2) there were ways to bypass it.
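The erase option boils down to a failure counter. The toy Python model below is hypothetical and in-memory; it is not how iOS stores its counter. It shows one design detail worth getting right: the attempt is counted before the guess is checked. Then cutting power after a wrong result appears cannot skip the increment. Bypasses of this kind on older iOS versions reportedly relied on exactly such a gap.

```python
import hmac


class PasscodeGuard:
    """Toy auto-erase policy: wipe after max_attempts consecutive failures."""

    def __init__(self, passcode: str, max_attempts: int = 10) -> None:
        self._passcode = passcode
        self.max_attempts = max_attempts
        self.failed = 0
        self.wiped = False

    def try_unlock(self, guess: str) -> bool:
        if self.wiped:
            return False
        # Count the attempt *before* comparing, so interrupting the check
        # after seeing a wrong result cannot skip the increment.
        self.failed += 1
        if hmac.compare_digest(guess.encode(), self._passcode.encode()):
            self.failed = 0  # a correct passcode resets the counter
            return True
        if self.failed >= self.max_attempts:
            self._passcode = ""  # stands in for destroying the data-protection keys
            self.wiped = True
        return False


guard = PasscodeGuard("1234")
for guess in ["0000", "1111", "1234"]:
    print(guess, guard.try_unlock(guess), guard.failed)
```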

iOS can restrict app launches with a separate passcode

User tracking and data leaks: where do we stand?

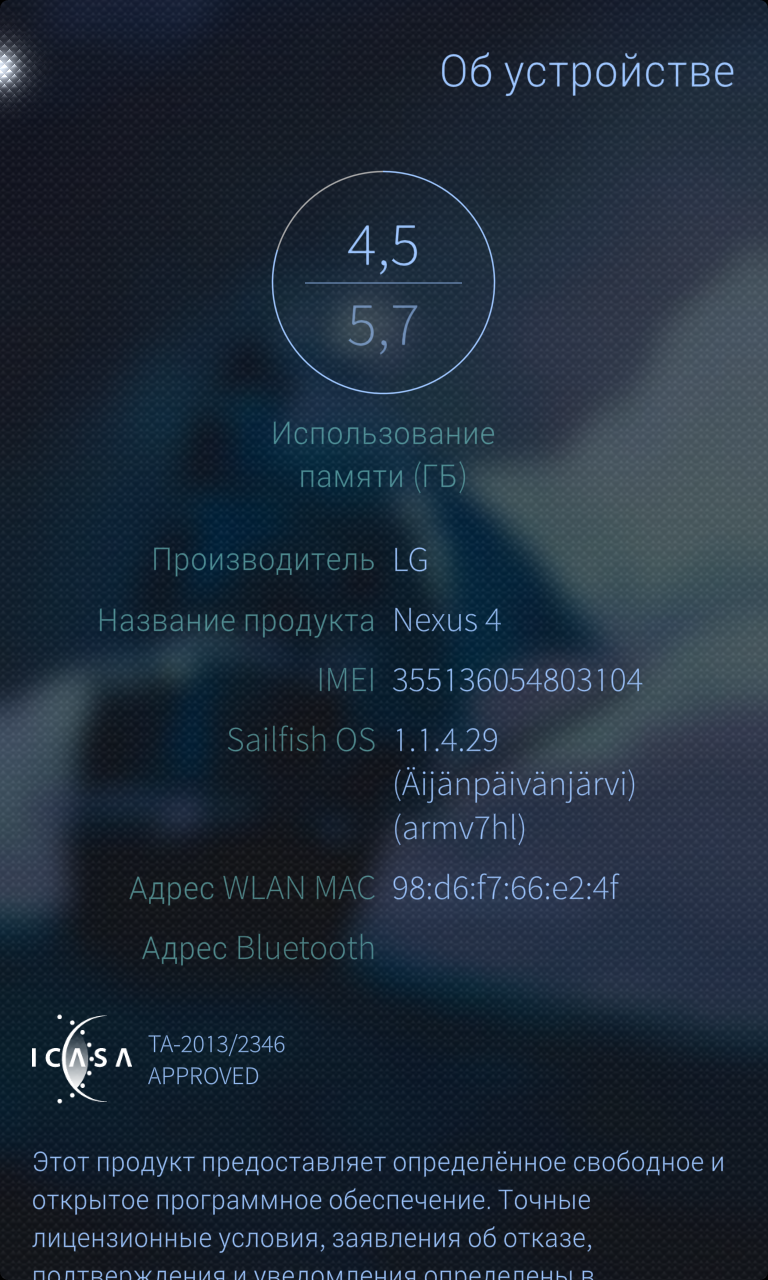

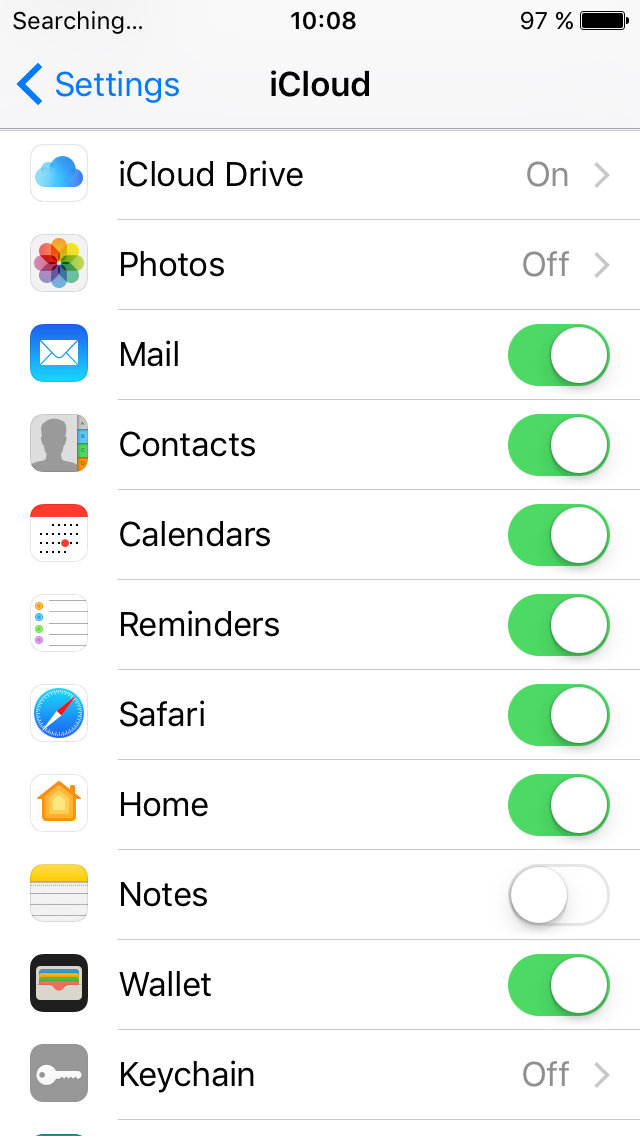

iOS offers optional cloud sync via Apple’s own iCloud service. In particular, iCloud typically stores:

- Device backups

- Synced data — call history, notes, calendars, passwords in iCloud Keychain

- Passwords and browsing history in Safari

- Photos and app data

You can disable all cloud syncing in iOS by turning off iCloud and disabling iCloud Drive. After that, no data will be sent to Apple’s servers. While some of the controls are unintuitive—for example, to stop syncing call history you have to disable iCloud Drive, which is ostensibly for files and photos—switching off Apple’s cloud services completely does disable syncing across the board.

All synchronization can be turned off.

iOS includes an anti-tracking mechanism: the system can present random identifiers for the Wi‑Fi and Bluetooth radios to the outside world instead of their real, fixed ones.
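Randomized identifiers work because the IEEE 802 address format reserves two bits in the first octet:
- one bit marks an address as locally administered (not burned in by the manufacturer)
- one bit marks it as multicast

Below is a minimal Python sketch of generating such an address. It does not model how often iOS rotates its addresses or when it uses them.

```python
import secrets


def random_private_mac() -> str:
    """Random MAC address with the locally administered bit set and the multicast bit cleared."""
    octets = bytearray(secrets.token_bytes(6))
    octets[0] = (octets[0] | 0x02) & 0xFE  # bit 1 = locally administered, bit 0 = unicast
    return ":".join(f"{b:02x}" for b in octets)


def is_locally_administered(mac: str) -> bool:
    """True for unicast addresses whose first octet has the locally administered bit set."""
    first = int(mac.split(":")[0], 16)
    return bool(first & 0x02) and not (first & 0x01)


print(random_private_mac())
print(random_private_mac())
```

An access point that logs these addresses sees a different, meaningless identifier each time instead of the fixed hardware address.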

Okay, but what about malware? On iOS, installing malicious software is almost a non-starter. There have been a few isolated cases—apps built with compromised development toolchains—but they were quickly contained and fixed. Even then, the impact was limited: iOS sandboxes every app, isolating it both from the system and from other apps.

It’s worth noting that iOS has had granular, per‑app permission controls for years. You can individually allow or deny things like background activity (something “stock” Android doesn’t let you do), access to location, notifications, and more. These settings make it much easier to limit tracking by apps that treat data collection as their core business—from Facebook‑class apps to games like Angry Birds.

In addition, Apple regularly updates iOS even on older devices, patching newly discovered vulnerabilities almost immediately by Android standards. Those updates roll out to all users at the same time (again, unlike Android).

Interestingly, starting with iOS 9 the system is protected against man-in-the-middle attacks involving certificate interception and substitution. While Elcomsoft’s lab managed to reverse-engineer the iCloud backup protocol in iOS 8, they couldn’t do the same on newer releases for technical reasons. On the one hand, this provides assurance about the security of data in transit; on the other, we have no reliable way to verify that no “extra” information is being sent to the servers.
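This kind of protection is usually implemented as certificate pinning. The client accepts only certificates (or public keys) it already knows. An interception proxy signed by a custom CA is therefore rejected, even if the device trusts that CA. The sketch below is generic and assumes the pin list holds SHA-256 fingerprints of whole DER-encoded certificates. The text does not describe Apple’s exact mechanism. In a live connection, the DER bytes could come from Python’s `ssl.SSLSocket.getpeercert(binary_form=True)`.

```python
import hashlib
import hmac
from typing import Iterable


def sha256_fingerprint(der_cert: bytes) -> str:
    """Hex SHA-256 digest of a DER-encoded certificate."""
    return hashlib.sha256(der_cert).hexdigest()


def certificate_is_pinned(der_cert: bytes, pinned: Iterable[str]) -> bool:
    """Accept the peer certificate only if its fingerprint is on the pin list.

    Chain validation against the system trust store is assumed to have
    passed already; pinning is an extra check on top of it.
    """
    fingerprint = sha256_fingerprint(der_cert)
    return any(hmac.compare_digest(fingerprint, pin.lower()) for pin in pinned)


server_cert = b"...DER bytes of the expected server certificate..."
PINS = {sha256_fingerprint(server_cert)}
print(certificate_is_pinned(server_cert, PINS))
print(certificate_is_pinned(b"...certificate minted by an interception proxy...", PINS))
```

Pinning also explains the trade-off noted above. The check that keeps attackers out also keeps researchers from inspecting what the device sends.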

Finally, we can’t ignore the mythical “backdoors” in operating systems, which supposedly lead officials to prefer “certified” Sailfish-based devices over polished iPhones. At Elcomsoft, we thoroughly examined dozens of iPhone models, starting from the earliest generations. In the very old models (up to and including the iPhone 4), there were vulnerabilities we were able to exploit. Starting with the iPhone 4s, we have not found vulnerabilities or backdoors of that caliber.

Common sense says that if such tools were widely available, the FBI wouldn’t have had to pay a million dollars to Cellebrite to unlock a single iPhone 5c. Cellebrite, for its part, keeps the vulnerabilities it uses to exploit the bootloader tightly under wraps—above all from Apple. “We spent a year and a half finding this vulnerability. It would take Apple a week and a half to patch it,” a company representative said at a conference. So let’s put the tinfoil-hat theories to rest.

At the same time, Apple fully controls its own cloud infrastructure and will provide cloud data upon law enforcement request. And there’s quite a lot of interesting information stored in the cloud, by the way. Statistics on the number of requests granted are publicly available. You can find more detailed analysis on Elcomsoft’s website.

Overall, iOS 10.3 security on the iPhone SE, 6s, and 7 (including the Plus models) is top-tier. There’s little to fault, and any minor shortcomings can be easily addressed with built-in tools.

Windows 10 Mobile

We’ll save Android for later and start with smartphones running Windows 10 Mobile. Naturally, the main focus is on Microsoft’s own flagships—the Lumia 950 and 950 XL. These devices were originally engineered with enterprise customers in mind.

One of the most interesting things about Windows 10 Mobile is that it’s the only mobile OS not built on a UNIX variant. Its custom kernel and system design, plus tight optimization of the OS and apps for a narrow set of supported chipsets, place Windows 10 Mobile somewhere between the fully locked-down iOS and the fully open Android.

Microsoft operating systems support full‑disk encryption; however, unlike Apple iOS, they don’t provide multi‑layered data protection. Encryption can be enabled or disabled at the user’s or network administrator’s discretion. You can also configure encryption for external media—such as microSD cards, where applicable.

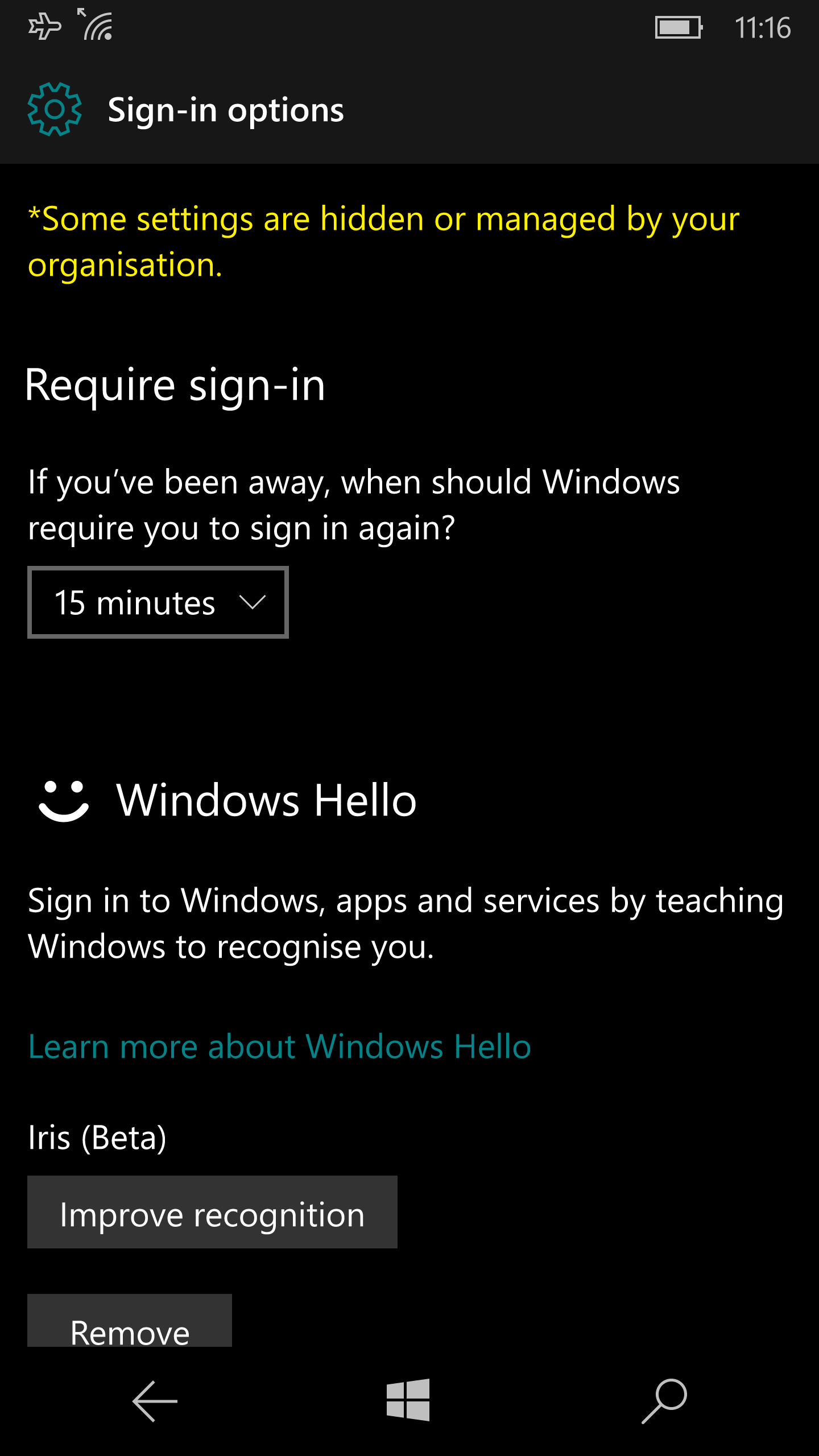

You can unlock the phone with a traditional PIN or via a biometric iris scanner (available only on the Lumia 950 and 950 XL). In terms of speed and convenience, the iris scanner clearly trails fingerprint readers on iOS devices and modern Android phones. Security is solid, though. Unlike Android, Windows 10 Mobile does not offer—or allow—less secure authentication methods.

Iris authentication is enabled in Settings.

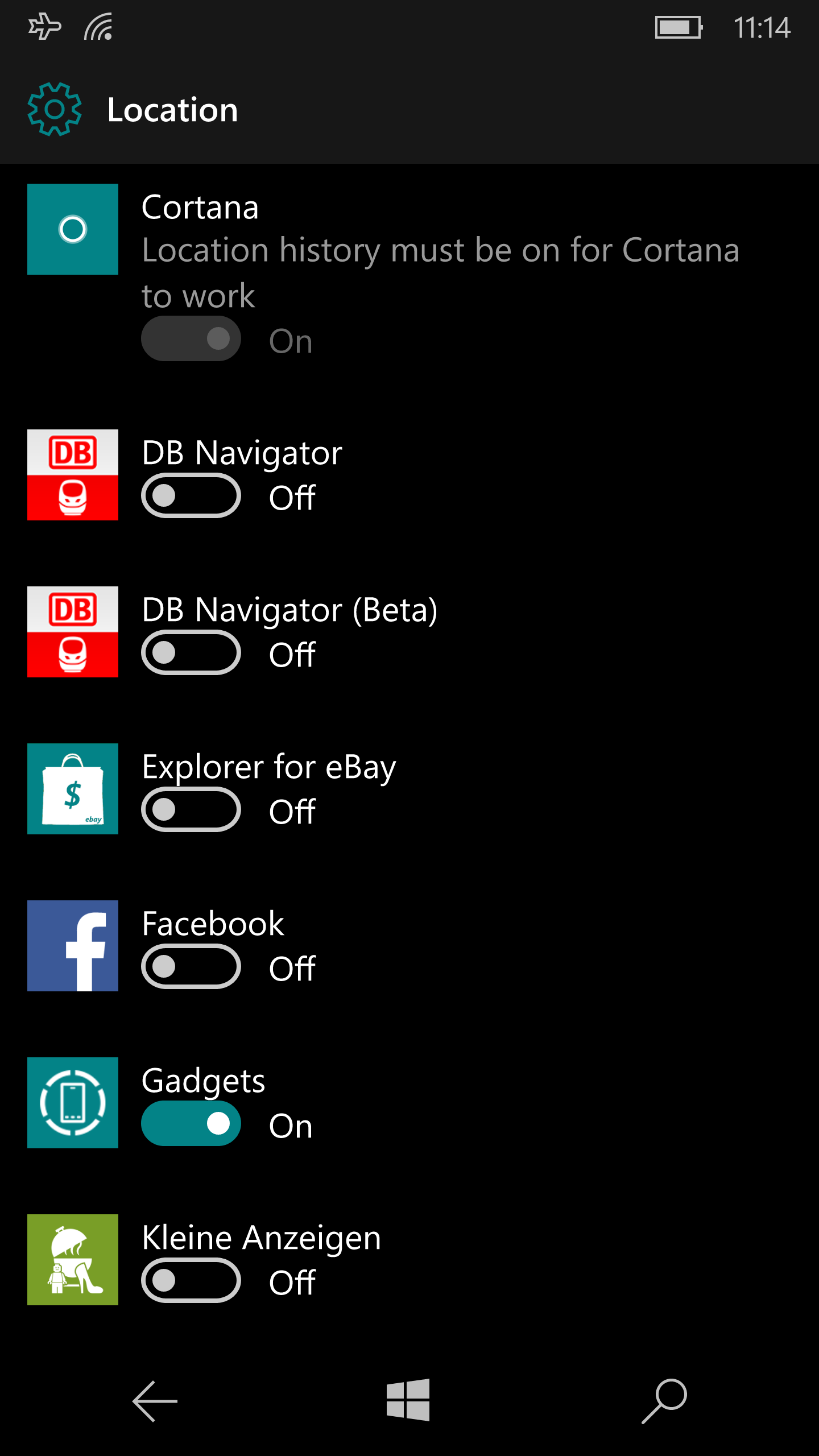

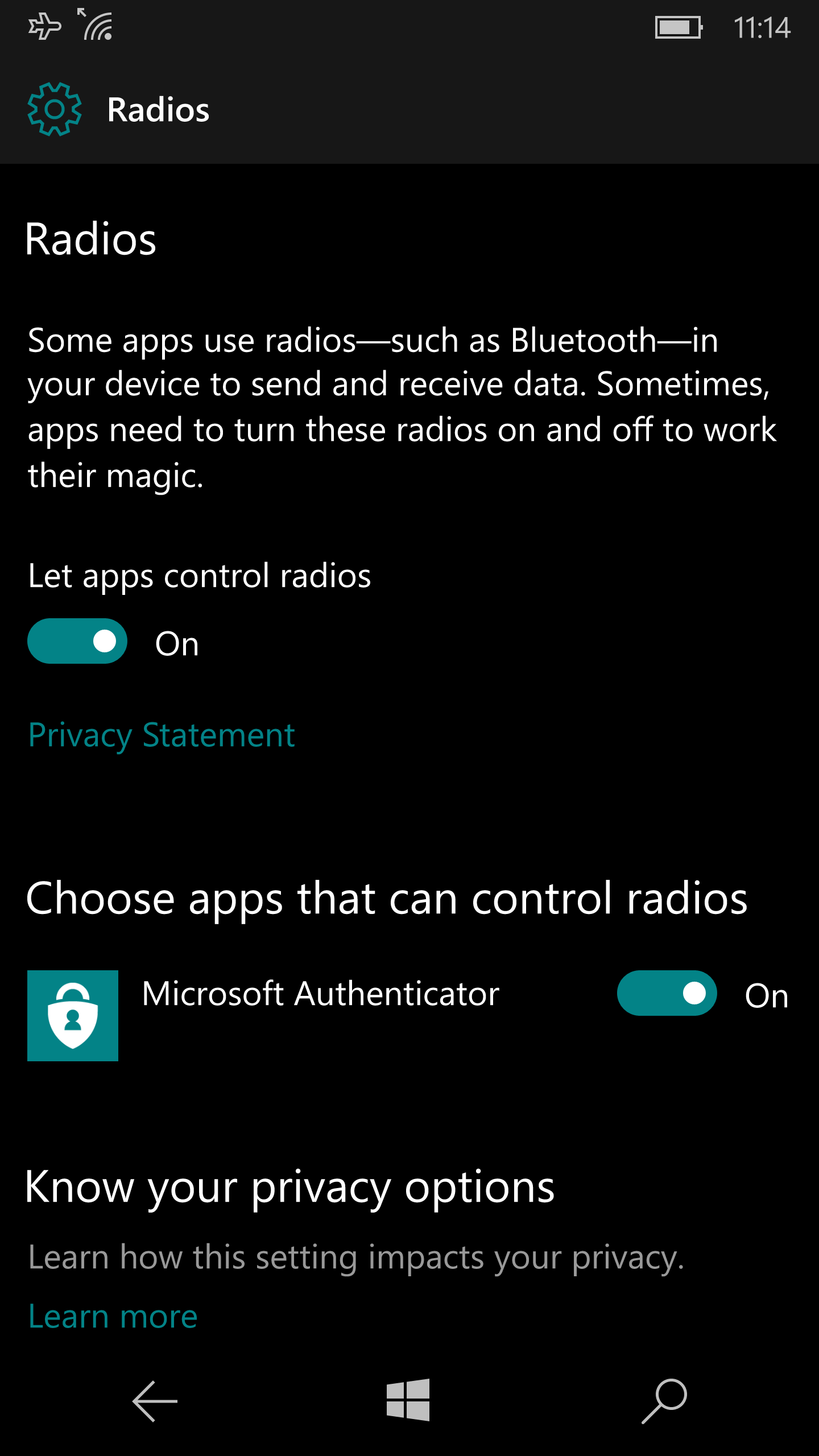

Granular app permission controls are available: you can limit apps’ access to your location and prevent them from running in the background.

Left: apps with access to location data; right: telephony access toggle.

Application sandboxing is in place. Installing apps from third‑party sources is blocked by default and can be further hardened via an enterprise security policy that prevents users from enabling the installation of unsigned apps.

By the way, there’s a jailbreak equivalent for Windows 10 Mobile that gives you elevated privileges, lets you edit the registry (which, incidentally, is almost identical to the registry in desktop Windows 10), and grants access to the file system. Setting it up isn’t exactly straightforward, but it’s done entirely using built‑in developer mode tools—no vulnerability exploitation involved. The method has been known for a long time and continues to work across all versions of Windows 10 Mobile, including the Creators Update.

Whether this is a security win or a risk is hard to say. After all, this kind of “hack” doesn’t affect unlocking the phone or accessing encrypted data—unlike an iOS jailbreak, which is often used to bypass the platform’s built-in security controls.

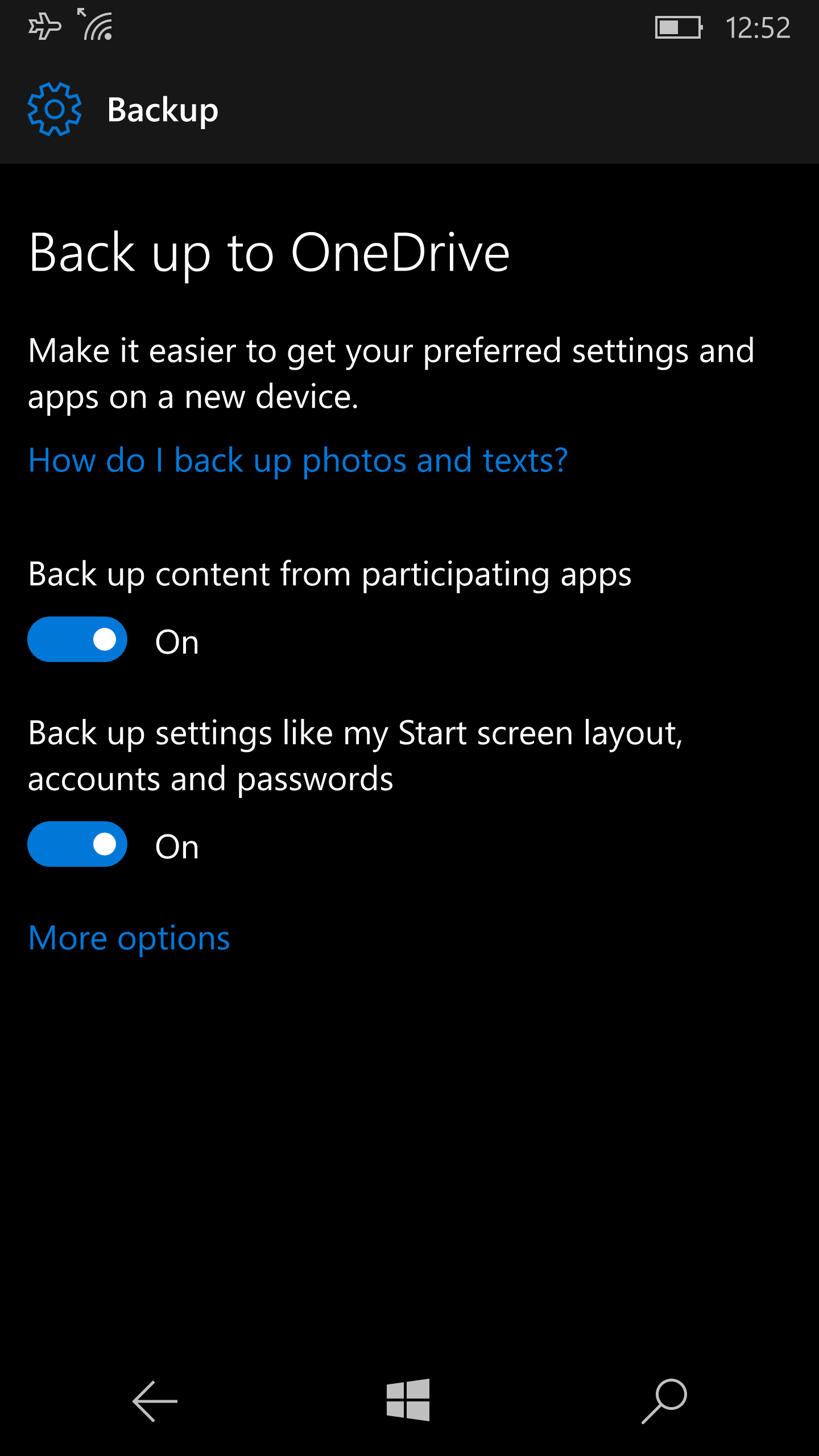

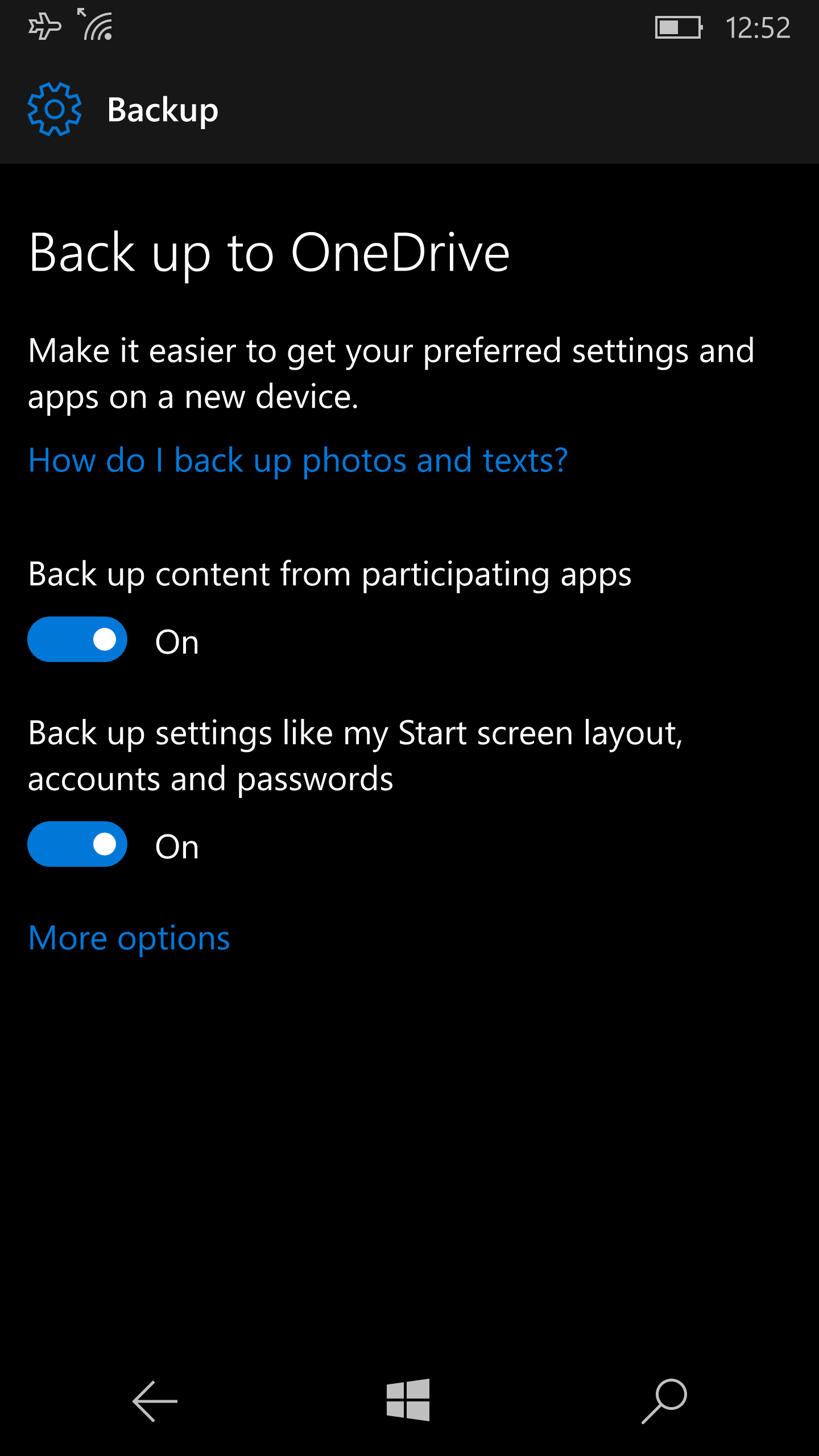

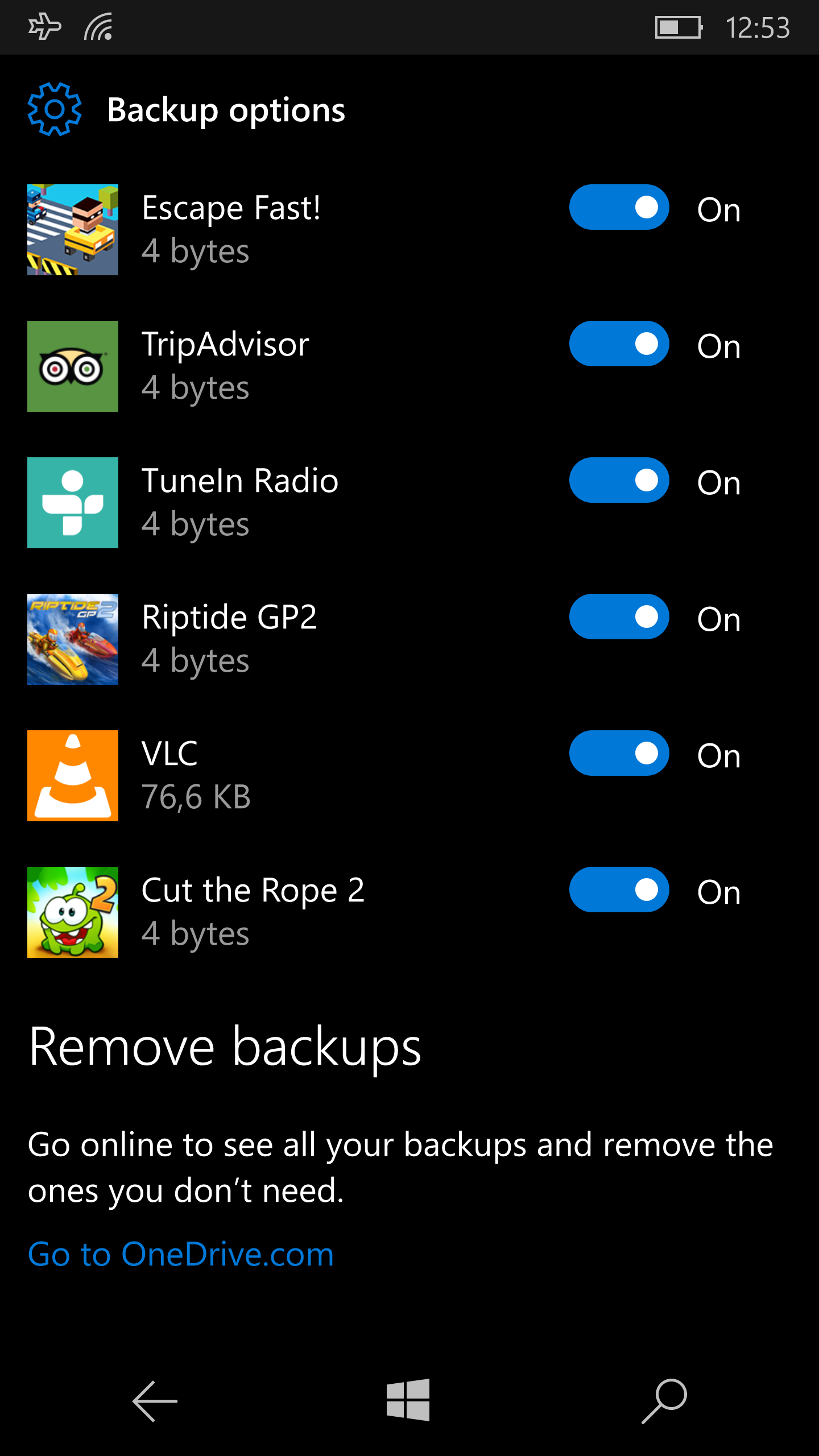

Like Apple, Microsoft collects data from users of its devices. In terms of how much it gathers and the scope of its tracking, the company once again sits somewhere between Apple and Google. Phones back up to Microsoft OneDrive (including app data) and sync call logs and SMS messages there (whereas iOS syncs only call logs, not messages). Passwords and browser history are also synchronized.

You can disable backups for individual apps.

The Find My Phone anti-theft and remote lock service is available, but a feature comparable to Apple’s iCloud Lock or Google’s Factory Reset Protection is only available on models intended for the U.S. market.

As with iOS, all these features are easy to disable, either in the device settings or through corporate security policies.

Like Apple, Microsoft regularly updates its devices and promptly patches discovered vulnerabilities. The update story here is much better than on Android. That said, the platform’s limited adoption and Microsoft’s lukewarm commitment to its future offset many of those advantages.

Google Android

We’ve finally arrived at Android. Android security has been the subject of tens of thousands of articles and hundreds of books. We’re not going to try to cover everything; instead, we’ll take a bird’s‑eye view of the ecosystem as a whole.

Let’s be blunt: Android phones are the most common—and the least secure. Android 4.4 and earlier are basically a sieve. Even Android 5.0–5.1.1 had critical vulnerabilities and gaping security holes. A minimally acceptable security baseline only arrived with Android 6.0, and even then only on devices that shipped with 6.0 from the factory. Android 7 (which still accounts for under 8% of devices) finally delivers reasonably solid on‑device security.

Having called Android a leaky bucket, we should be fair: by the raw count of reported vulnerabilities, iOS is far ahead. The difference is that Apple typically ships fixes within two or three weeks, whereas Android fixes land in the theoretical, semi-mythical world of AOSP. Regular users might get them as part of monthly security updates (and then only on current flagships from select vendors), six to twelve months later, or never at all. The Android version distribution chart suggests that, at best, timely security patches reach about 7% of users.

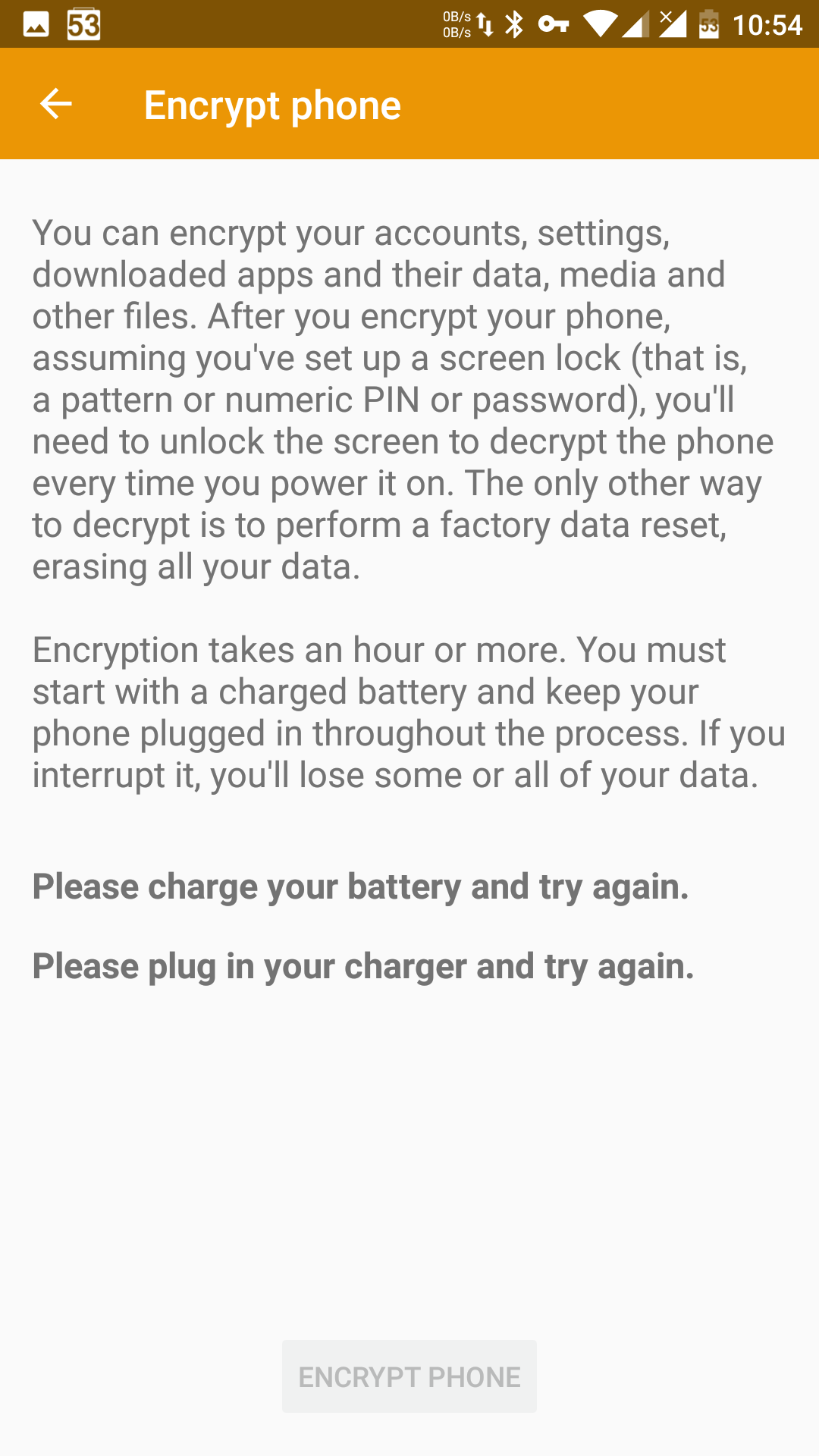

With Android, what matters isn’t just which version you’re running, but also which version the device originally shipped with. For example, devices that come with Android 6.0 out of the box encrypt the user data partition by default, but this doesn’t apply to phones that were upgraded to Android 6 over the air.

How Many Android Phones Are Encrypted?

At Google I/O, it was reported that roughly 80% of devices running Android 7 and about 25% of those on Android 6 are encrypted. What does that mean in practice? Let’s compare the distribution of Android versions with the encryption figures:

- Android 5.1.1 and earlier: ~62% of the market (no encryption data);

- Android 6: 0.31 (31% of the market) * 0.25 = 0.0775 (about 7.8%);

- Android 7: 0.07 (7% of the market) * 0.80 = 0.056 (5.6%).

That gives us roughly 13.4%, the share of Android devices that are definitely encrypted. Most of the credit goes to Google, which required OEMs shipping devices with Android 6 or 7 to enable encryption by default. We don’t have data for older releases, but it’s reasonable to assume many users didn’t want to take the performance hit of turning on optional encryption. With that in mind, the real figure for encrypted Android devices is unlikely to exceed 15%.
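The arithmetic above can be checked in a few lines of Python. Note that 0.31 × 0.25 is exactly 0.0775, so the unrounded total is 13.35%, which the text rounds to 13.4%.

```python
# Shares as quoted in the list above; Android 5.1.1 and earlier have no encryption data.
market_share = {"5.1.1 and earlier": 0.62, "6": 0.31, "7": 0.07}
encryption_rate = {"6": 0.25, "7": 0.80}  # share of each version's devices that are encrypted

encrypted_share = {v: market_share[v] * rate for v, rate in encryption_rate.items()}
total = sum(encrypted_share.values())

for version, share in encrypted_share.items():
    print(f"Android {version}: {share:.2%} of all Android devices")
print(f"Definitely encrypted: {total:.2%}")
```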

Although Android, like iOS, runs apps in sandboxes, it allows much more latitude. Apps can interfere with other apps’ behavior—most notably via Accessibility services, which can even simulate button presses, and through third‑party keyboards that inherently gain the ability to spy on users. Apps can infer a user’s approximate location even without the relevant permissions (the technique is interesting, widely used in practice, and worthy of a separate write‑up). New malware appears every week, and on some phones (we’ve covered such cases) it comes preinstalled in the firmware and can only be removed by reflashing the device.

Yes, this behavior—more precisely, this degree of freedom—is built into the system (it’s a feature, not a bug), and even Accessibility services and third‑party keyboards require a separate confirmation to enable. But trust me, that won’t make life much easier for your kid, your mother‑in‑law, or your grandma, no matter how hard you try to drill into them, “never tap this.”

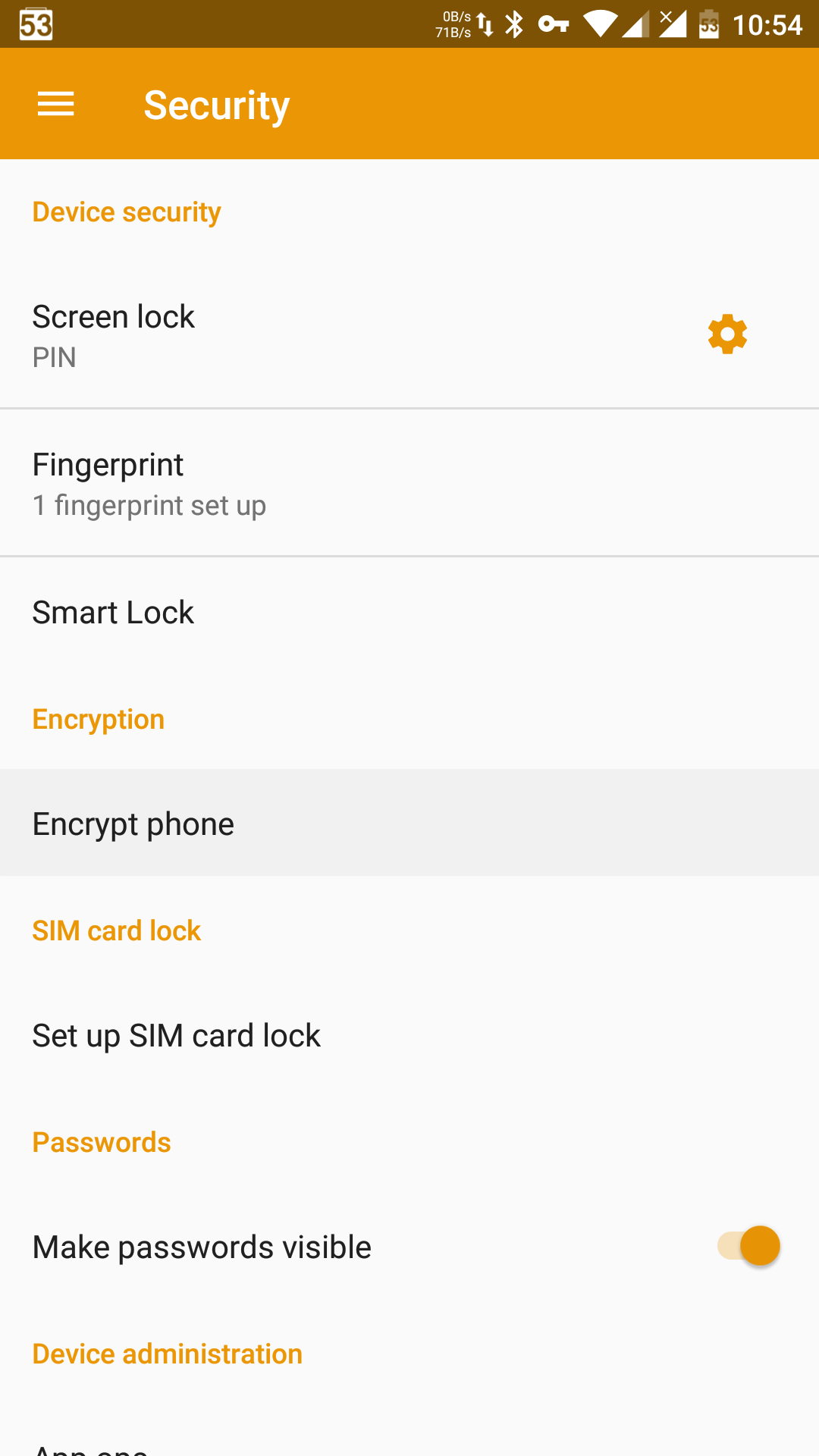

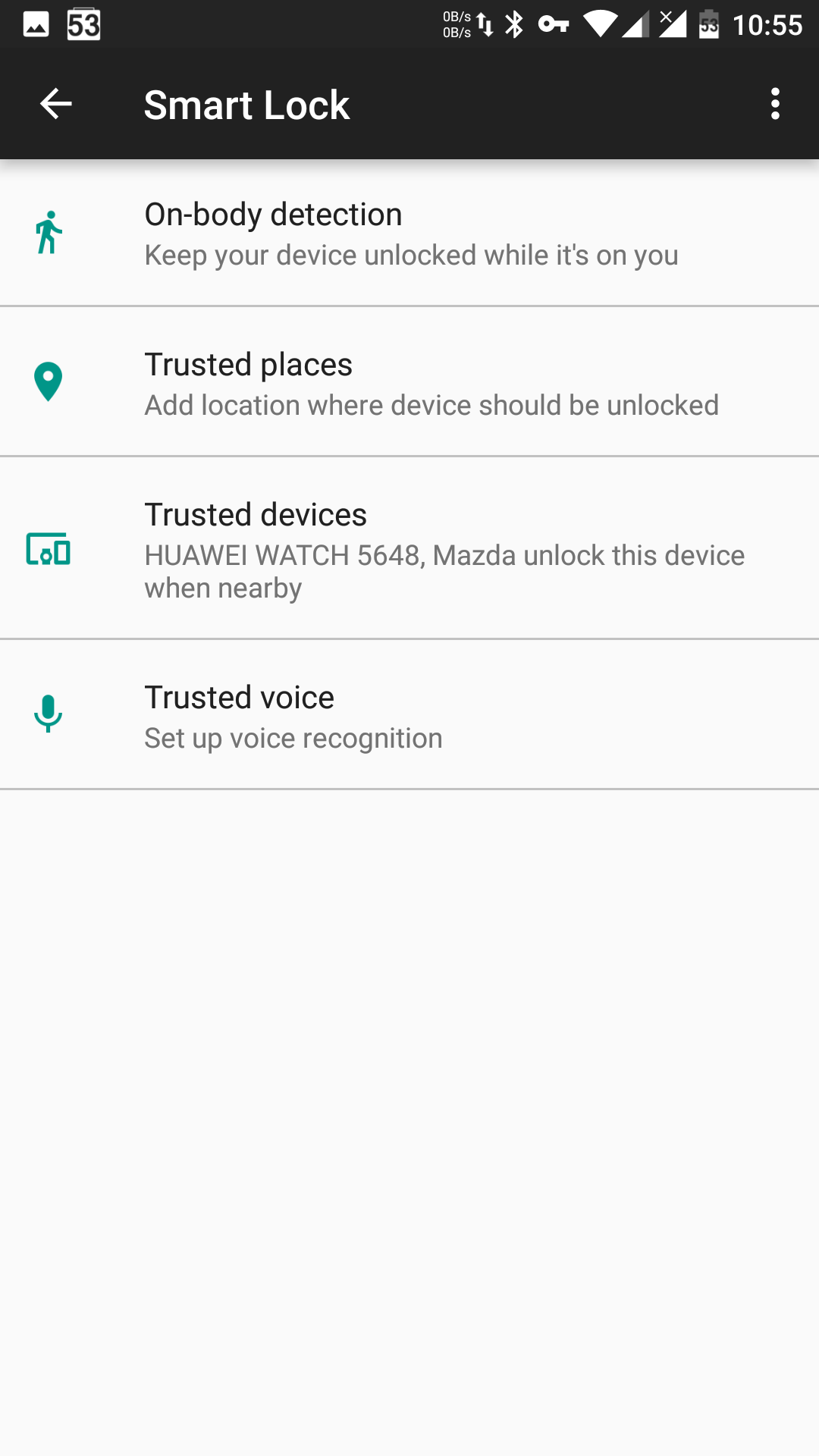

And what about unlocking the phone? You’d think it’s the simplest thing to get right… but no—Android’s developers (specifically AOSP) managed to mess it up. Android offers just about every insecure authentication method you can imagine. Take Smart Lock, which can automatically unlock the phone based on location (picture a scenario where the FBI doesn’t have to spend a million dollars to crack an iPhone 5c—an agent just walks to the suspect’s home). Or unlocking when connected to a “trusted” Bluetooth device (the agent turns on the suspect’s fitness tracker or the car stereo).

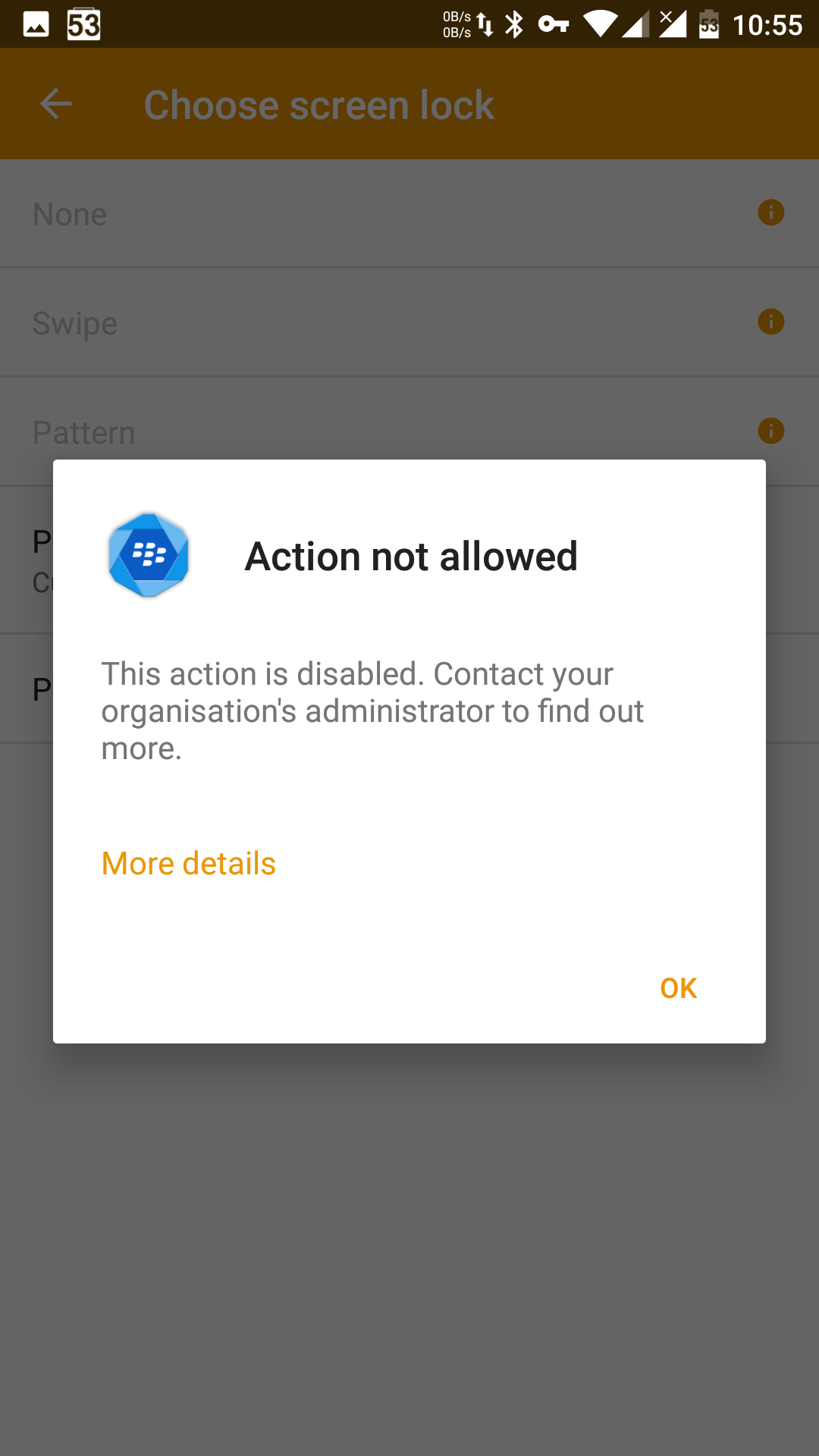

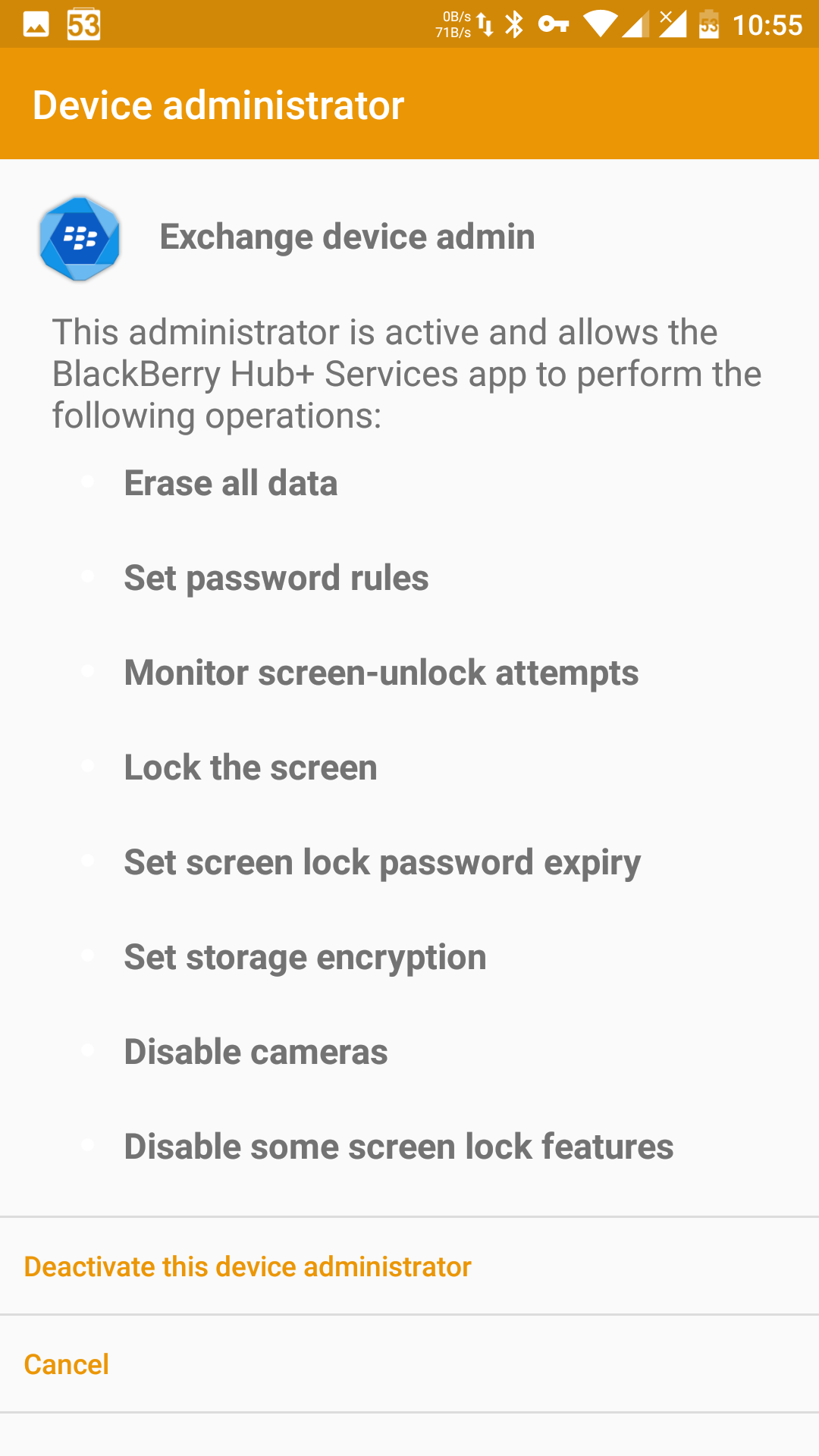

You can also enable face-based sign-in — and in some cases it may even accept a photo. Some methods can be disabled via corporate security policy, but you have to list each one explicitly. Did the sysadmin forget to block Bluetooth-based unlock? Someone will definitely exploit that loophole. And if a new, unsafe unlock method appears, you’ll have to add a separate rule to block it too.

Unlock any way you like
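A toy comparison shows why a blocklist of unlock methods ages badly. The method names below are hypothetical. Android’s real policy call, `DevicePolicyManager.setKeyguardDisabledFeatures()`, likewise takes one flag per disabled feature (for example, `KEYGUARD_DISABLE_TRUST_AGENTS` for Smart Lock). That is the per-method listing criticized above.

```python
# Hypothetical unlock-method names; these are not Android constants.
BLOCKLIST = {"smart_lock_location", "smart_lock_bluetooth", "face_unlock"}  # "deny these"
ALLOWLIST = {"pin", "password", "fingerprint"}  # "permit only these"


def allowed_by_blocklist(method: str) -> bool:
    """Fails open: anything the admin didn't think of is permitted."""
    return method not in BLOCKLIST


def allowed_by_allowlist(method: str) -> bool:
    """Fails closed: anything not explicitly approved is rejected."""
    return method in ALLOWLIST


# An unlock method added by a later OS update, after the policy was written.
new_method = "smart_lock_on_body"
print(allowed_by_blocklist(new_method), allowed_by_allowlist(new_method))
```

A method added after the policy was written slips through the blocklist. The allowlist rejects it without any change to the policy.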

Sure, the intentions were good: at least nudge users of devices without fingerprint sensors to set a lock code. But the downside of these methods is disastrous. They instill a false sense of security, and the “protection” they provide deserves its scare quotes. What’s worse, Smart Lock is still available in the latest Android builds, even on devices that already have fingerprint readers. No one’s forcing you to use Smart Lock, of course, but it’s just so convenient…

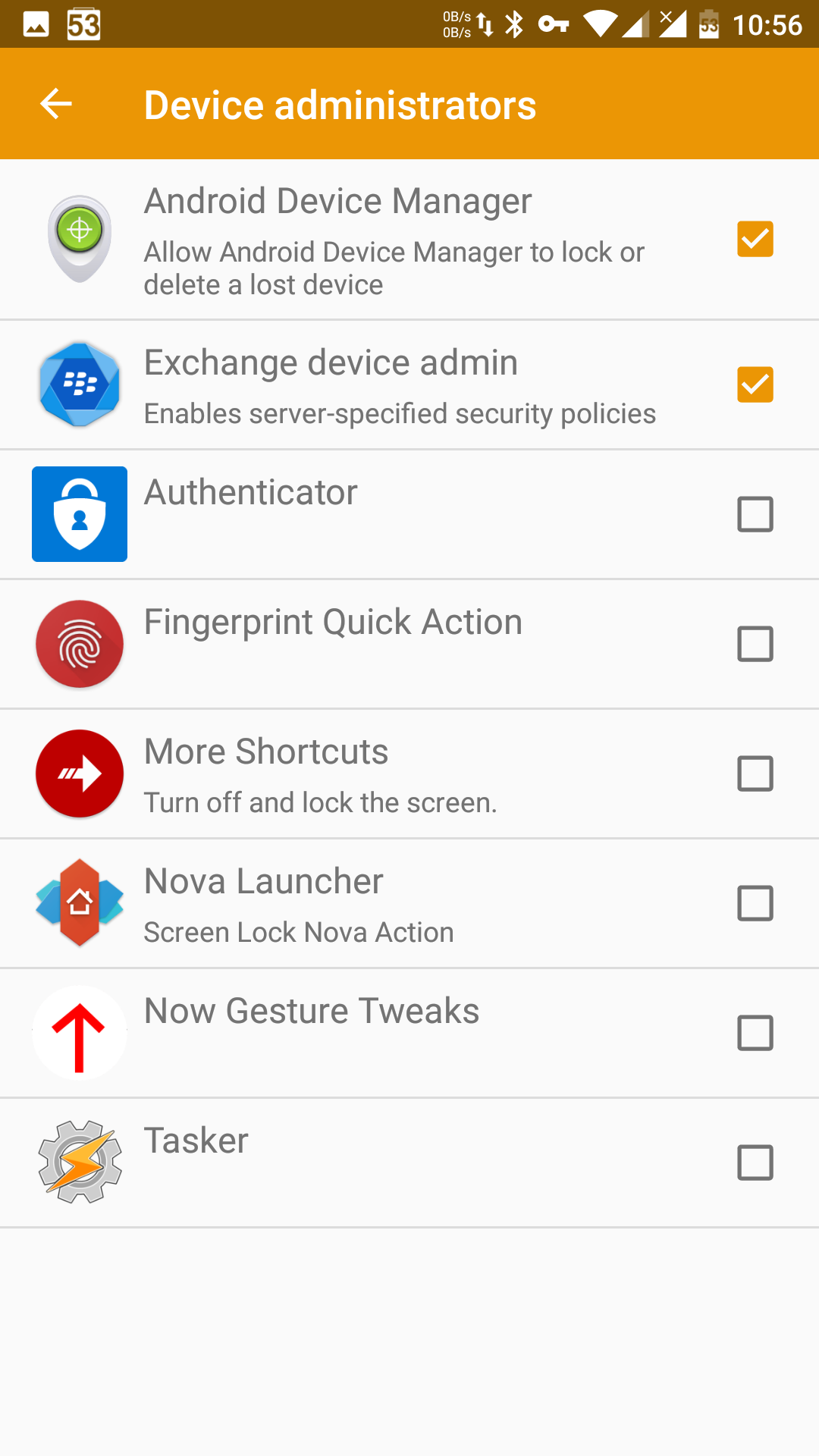

Fortunately, security policies let you administratively restrict certain types of unlocking.

However, there’s no way to prevent the user from disabling such a policy:


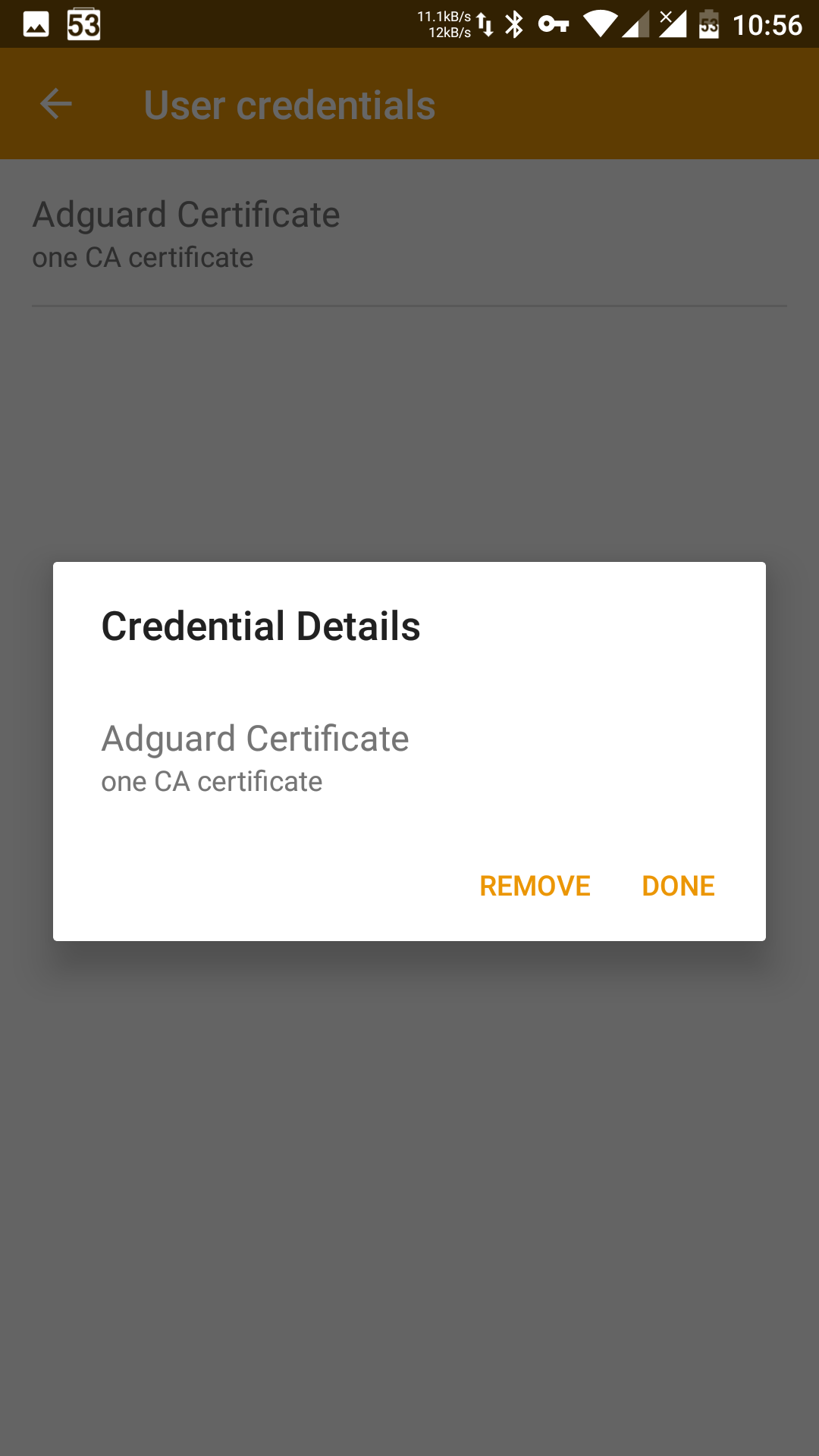

On Android, you can install a custom CA certificate that lets an app intercept and decrypt HTTPS traffic.

Adguard now supports stripping ads from HTTPS traffic. But what appears in their place could be anything.

Big Brother Is Watching You

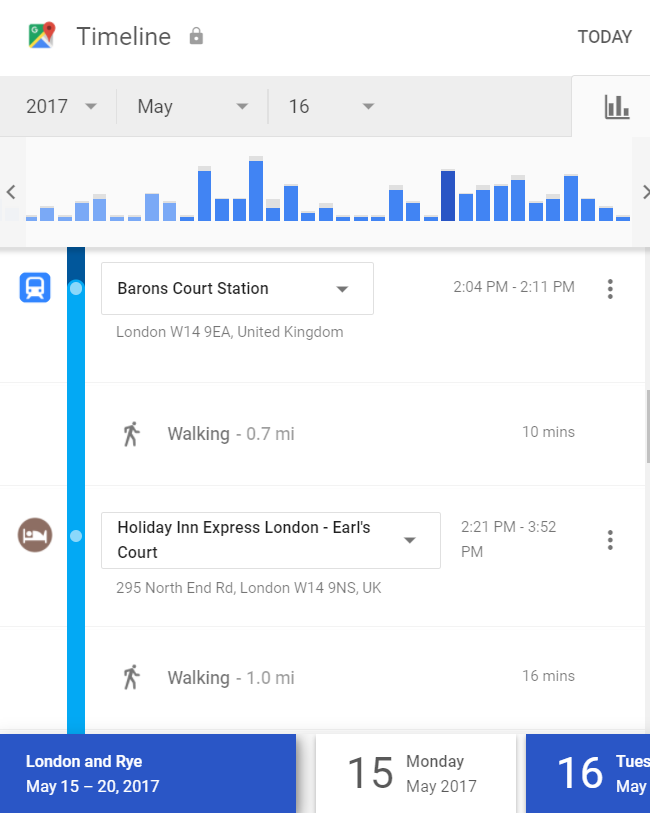

Google collects an enormous amount of information about Android users. The level of detail is astonishing: you can see exactly where you were and what you were doing at a specific time on a specific day over the past six years. Not only does Google know where you were in terms of GPS coordinates; it also infers the exact venue. Hotel, restaurant, bowling alley, theater, park — Google matches your coordinates against map data and the movements of billions of other users. The result: the company can know everything down to how long you sat at a restaurant table waiting for your first course.
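Google’s actual pipeline is not public. Still, the basic step of turning a GPS fix into a named place can be sketched as a nearest-neighbor lookup. Below is a hypothetical Python example using the haversine great-circle distance. The venues, coordinates, and 50 m cutoff are invented for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Optional

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


@dataclass
class Venue:
    name: str
    lat: float
    lon: float


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def guess_venue(
    lat: float, lon: float, venues: List[Venue], max_distance_m: float = 50.0
) -> Optional[str]:
    """Name of the nearest venue within max_distance_m of the fix, or None."""
    best = min(venues, key=lambda v: haversine_m(lat, lon, v.lat, v.lon), default=None)
    if best is None or haversine_m(lat, lon, best.lat, best.lon) > max_distance_m:
        return None
    return best.name


# Invented venues and coordinates, for illustration only.
VENUES = [Venue("Cafe", 55.7558, 37.6173), Venue("Theater", 55.7600, 37.6186)]
print(guess_venue(55.7559, 37.6174, VENUES))
```

Dwell time, as in the restaurant example, could be estimated the same way. Run the lookup over a day’s worth of fixes and add up the time spent at each match.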

You think I’m exaggerating? Not at all. We’ve already covered what data Google collects and how to access it.

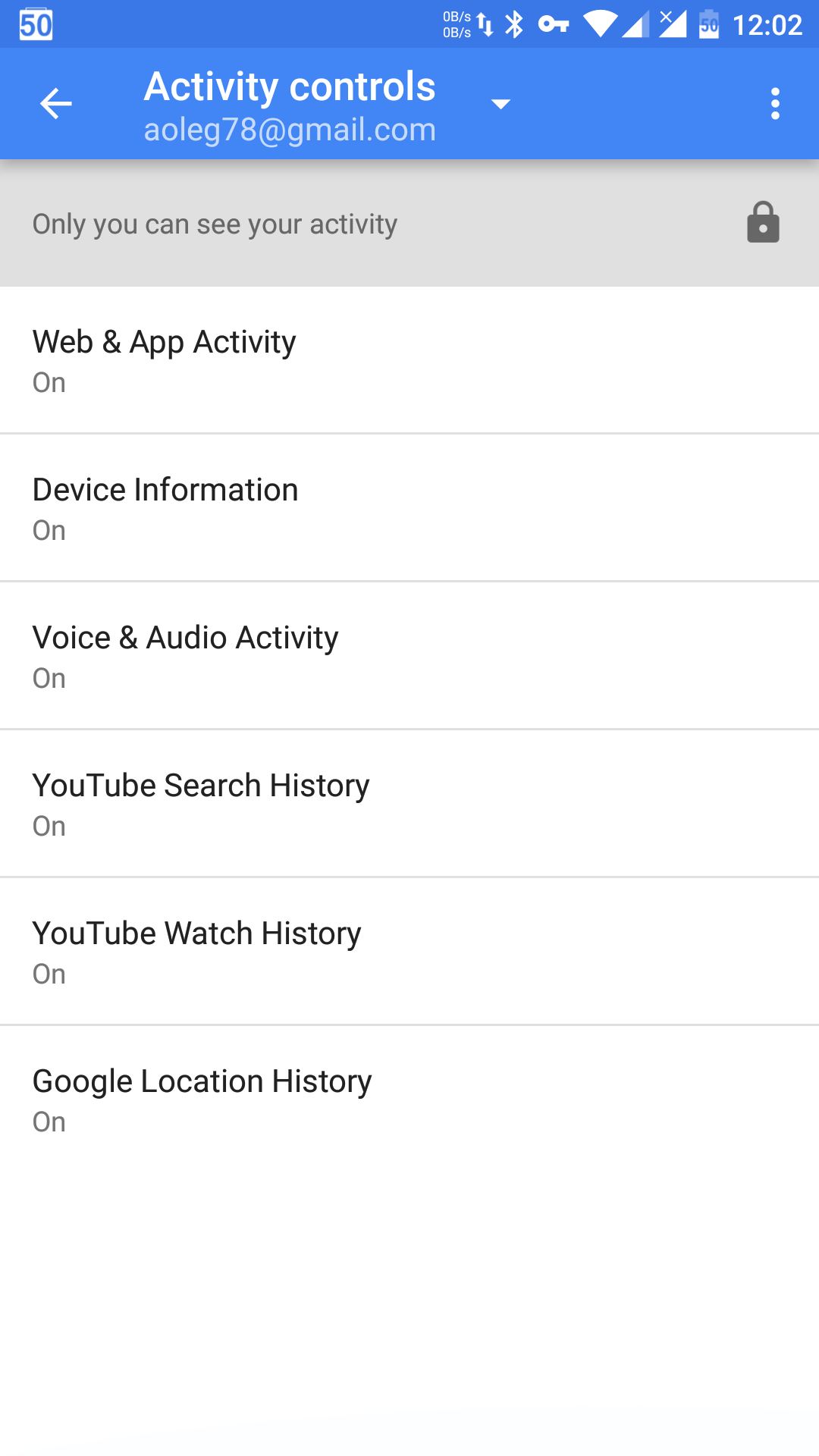

Naturally, Google has data on app launch and usage stats, browser history and search queries, Chrome-synced logins and passwords, the words you type with Gboard, your voice queries, the videos you watch on YouTube, and much, much more.

Yes, you can curb tracking and data collection and delete what’s already been gathered, but the range of data is so broad that just listing it would take pages of fine print. What’s more, Google sometimes enables collection of new data types without warning (a vivid example is syncing call and SMS logs on some devices), and even experts don’t always know how to disable their collection and storage. In short: you can limit data collection, but there’s no guarantee you’ve blocked collection of all your data.

Most Android phones ship with low-level service modes for direct memory access, originally meant for flashing or recovering devices when they malfunction. The same modes can be used to extract data from a device, even if it’s powered off or locked. This is common with most Qualcomm chipsets (modes 9006 and 9008), and is typical for MediaTek, Rockchip, Allwinner, Spreadtrum, and some Samsung Exynos–based devices. LG phones also have a proprietary low-level service protocol, and using it for data extraction is largely a matter of procedure.

Using service mode to steal data leaves no traces. Protecting against this is fairly simple: enable device encryption (Android 6.0 or later is recommended, but even on Android 5 the encryption is already quite reliable).

Granular control over app permissions in Android has long been a sore spot. In stock Android (AOSP), runtime permission control didn’t arrive until version 6.0. Third-party ROMs had something earlier, but it was notoriously unstable. Even now it’s far from perfect: there’s no built-in way to stop an app from running in the background, and limiting access to the Location permission often just means the app will determine your location by other means—using permissions that still can’t be controlled granularly even in recent Android versions. Finally, many apps don’t support per-permission management at all, so users still end up agreeing to a bundle of permissions, some of which are anything but harmless.

Enterprise security policies can block some—though unfortunately not all—ways users might bypass built-in protections. For example, you can require a PIN, disable Smart Lock, and mandate native device encryption. You could also choose a BlackBerry Android phone; at least those receive timely security patches and enforce Verified Boot. That said, BlackBerry’s first‑party software doesn’t protect at all against the user’s own actions, surveillance, or data leaking to the cloud.

Android simply doesn’t have a single, comprehensive security model that protects the device and user data at every layer. You can enable Knox, you can choose a phone with verified boot—but none of that stops a user from installing an app that abuses Android “features” (like screen overlays and Accessibility) to auto-install dangerous malware. Those, in turn, can exploit system vulnerabilities to try to gain root and persist in the system partition.

Editor’s note

It’s worth noting that, while the author is right to ask which OS makes a smartphone more vulnerable to compromise, the discussion is about smartphones as deployed, not Android as a pure operating system. Yes, Android has earned a reputation for being full of holes and a haven for malware, but think about it: what is “Android,” exactly? Is it what ships on LG or Samsung devices—the heavily customized builds that barely resemble stock Android? Is it the firmware from lesser-known brands whose phones go years without updates? Or is it something else entirely?

Technically, Android is the code Google releases publicly as part of the Android Open Source Project (AOSP). That’s the “pure” Android the company ships on Nexus devices and, with slight tweaks, on Pixel phones. Every other firmware build—whether lightly or heavily modified—is considered a fork in open‑source terms. Strictly speaking, that makes them something different, but in practice they’re all still referred to collectively as Android.

Is stock Android safer than manufacturer builds? Yes—many vulnerabilities have been found specifically in OEM-modified Android. Plus, in stock Android these issues are fixed much faster, and after a short delay all Nexus and Pixel users receive the updates simultaneously. The overall support window for these phones is two years of major OS updates and an additional year of security patches.

As Oleg rightly noted, by the total number of discovered critical vulnerabilities Android trails iOS—and over half of those bugs aren’t in Android itself but in proprietary hardware drivers. The smartphone issues listed in the article, like the service mode, aren’t Android problems; they’re device-vendor issues. That said, Nexus devices do expose a service mode—which makes sense, since they’re developer-oriented phones.

Android’s core problem isn’t a “leaky” system or a poorly designed OS architecture. Even version 1.0 shipped with true per‑app sandboxes, inter‑app data exchange over typed channels with permission checks, multilayer validation of requests to system data and functions, and a memory‑safe Java runtime that eliminated the vast majority of potential vulnerabilities. And that’s before later releases added SELinux mandatory access control, seccomp-based sandboxes, ASLR, trusted/verified boot, encryption, and a whole host of other hardening technologies.

Android’s big headache is fragmentation: anything built on AOSP gets called “Android,” even when it’s no longer really Android. A company ships a phone on Android, skips updates, puts users at risk—and Android takes the blame. Samsung tweaks Android and botches the lock screen—Android is at fault. Chinese vendors ship phones with an unlocked bootloader—and suddenly “Android is insecure.”

We also shouldn’t forget that the platform offers far more capabilities than iOS. All those Accessibility features, overlays, and other components the author portrays as vulnerabilities are standard parts of Android available to third‑party apps. The more functionality a system exposes, the more opportunities there are to abuse it.

Comparing iOS (a platform with heavily restricted functionality, no sideloading, and a closed ecosystem) to open Android isn’t fair. Android offers far more flexibility, and anyone can download and modify it. It’s like comparing a full-fledged OS such as Windows with Chrome OS.

Is it possible to make Android truly secure?

If the phone is used by a child, a grandparent, or anyone who cares about anything except mobile OS security — then no. In their hands, an Android device will never be truly secure, even if it’s a Samsung with Knox enabled or a BlackBerry with the latest security patch. Why? There’s too much malware and too much latitude for the average user: they can disable “annoying” security controls, turn off boot-time encryption, enable app installs from unknown sources, and do a bunch of other ill-advised things you probably wouldn’t even think of.

If you hand the device to a specialist—and it’s a current flagship from one of the few vendors that push monthly security patches—then, with proper hardening, it’s good enough for a typical user. That means disabling Smart Lock; enabling full‑disk encryption with a mandatory boot-time password (not to be confused with the lock‑screen PIN); enforcing a policy that completely blocks installs from unknown sources; disabling screen overlays and Accessibility services; and constantly policing what data you allow to be collected and by whom. That said, officials, presidents, terrorists, and criminals still won’t take that risk; their go-to choices remain BlackBerry 10 or iOS.
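The hardening steps above read like a checklist, so a compliance check can be expressed as a simple comparison. This is a sketch under loud assumptions. The setting names and the `audit` helper are hypothetical; a real MDM would query the device through its own APIs. The last item on the list, policing data collection, can’t be reduced to a boolean.

```python
from typing import Dict, List

# Hypothetical setting names and required values, mirroring the checklist above.
REQUIRED: Dict[str, bool] = {
    "smart_lock_enabled": False,
    "encryption_enabled": True,
    "boot_password_required": True,
    "unknown_sources_allowed": False,
    "screen_overlays_allowed": False,
    "accessibility_services_enabled": False,
}


def audit(settings: Dict[str, bool]) -> List[str]:
    """Return the checklist items the device fails; a missing setting counts as a failure."""
    return [name for name, wanted in REQUIRED.items() if settings.get(name) != wanted]


device = dict(REQUIRED, smart_lock_enabled=True, unknown_sources_allowed=True)
print(audit(device))
```

Treating a missing setting as a failure is deliberate. As with unlock policies, anything the audit cannot confirm should count against the device.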

Traditionally, the last section is about updates and security patches. The reality of Android is that manufacturers may or may not update their devices to current Android versions and security patch levels. Discovered vulnerabilities might get fixed—or not; in most cases, they don’t. Flagships from major brands get somewhat regular updates, but even then the support window is noticeably shorter than on Apple or Microsoft devices. Android phones shouldn’t be placed on the same security tier as iOS, or even Windows 10 Mobile. The cheaper the device, the worse its security posture becomes just a few months after release. Ultra‑budget models carry additional risks: no updates (including fixes for critical vulnerabilities), hardware-level weaknesses, and “bonus” malware preloaded into the firmware.

Conclusion

If we rank mobile platforms by security, the lineup looks like this: Apple iOS (iPhone SE, 6s/7), Windows 10 Mobile (Lumia 950 and 950 XL), and Android (best on recent flagships from Samsung, BlackBerry, and other major vendors; worst on low-end devices from some Chinese manufacturers, with everything else spread across that spectrum). Which one should you pick? It depends on your priorities. For most people, the modest level of security Android offers will be sufficient—provided you have a PIN and a fingerprint sensor enabled (but only if you’re on Android 6.0 or later and the device is Google-certified); there’s no root access and the bootloader is locked; full-disk encryption is enabled; and the insecure Smart Lock methods are turned off; if… Well, that list is long enough for a separate article.

With Apple, it’s much simpler: recent flagship devices running the latest iOS versions are secure. If you’re concerned about privacy, just disable iCloud. You don’t need to do anything else.

The exotic also-rans—Sailfish, Tizen, Ubuntu Touch, and the like—should be treated as insecure until proven otherwise. We’ve already reached a verdict on Tizen; Sailfish is next.