What Is HDR?

To fully grasp how HDR+ works, you first need to understand regular HDR.

The fundamental limitation of smartphone cameras is their tiny sensors, or more precisely their small photosites, which restrict the dynamic range they can capture. HDR (High Dynamic Range) was developed to compensate. The camera first takes a frame at the scene’s normal exposure, then an underexposed frame that preserves highlight detail, and finally an overexposed frame that reveals shadow detail at the cost of blowing out everything else. These frames are then aligned and merged by specialized algorithms, and how well that works depends on the camera software. The result is an image with well-preserved detail in both the shadows and the bright areas.
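
To make the merge step concrete, here is a minimal sketch of one simple way to blend a bracketed set: weight each pixel by how well exposed it is, in the spirit of Mertens-style exposure fusion. This is a swapped-in, simplified technique, not the algorithm of any particular camera app. It assumes the frames are already aligned, grayscale, and normalized to [0, 1]; the function names and the `sigma=0.2` default are illustrative.

```python
import math


def well_exposedness(value, sigma=0.2):
    # Gaussian weight centered on mid-gray: pixels near 0.5 are trusted most,
    # nearly black or nearly clipped pixels get little weight.
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_exposures(frames, sigma=0.2):
    """Blend aligned exposures (lists of rows of floats in [0, 1]) pixel by pixel."""
    height, width = len(frames[0]), len(frames[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            values = [frame[y][x] for frame in frames]
            weights = [well_exposedness(v, sigma) for v in values]
            total = sum(weights) or 1.0  # guard against all-zero weights
            fused[y][x] = sum(w * v for w, v in zip(weights, values)) / total
    return fused
```

For a sky pixel that reads 0.95 in the normal frame, 0.60 in the underexposed one, and 1.0 in the overexposed one, the result lands near 0.65, because the mid-gray reading from the underexposed frame receives most of the weight.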

HDR has some obvious downsides: the extended capture time means moving subjects will show ghosting, and even slight camera shake will blur the shot.

What Is HDR+?

Some clever minds came up with an algorithm that avoids HDR’s drawbacks. That said, the only thing it has in common with HDR is the name.

HDR+ stands for High Dynamic Range plus Low Noise. It became known for a set of standout features: the algorithm suppresses noise with almost no loss of detail and improves color fidelity, which matters most in low light and near the edges of the frame, while dramatically expanding a photo’s dynamic range. Unlike standard HDR, HDR+ is far more tolerant of hand shake and of motion in the scene.

The first smartphone to support HDR+ was the Nexus 5. Due to its less-than-ideal white balance and small aperture (f/2.4), its camera was considered merely average. That changed with the Android 4.4.2 update, which introduced HDR+ and dramatically improved night shots. They weren’t particularly bright across the frame, but thanks to HDR+ they were almost noise-free, retained fine detail, and delivered excellent color (for a 2013 smartphone).

The Story Behind HDR+

How did a company with no prior experience in cameras come up with an algorithm that works wonders using the Nexus and Pixel cameras, which are fairly ordinary by flagship standards?

It all started in 2011, when Sebastian Thrun, head of Google X (now simply X), was looking for a camera for the Google Glass augmented reality headset. The size and weight constraints were extremely strict. The image sensor had to be even smaller than those used in smartphones, which would severely degrade dynamic range and introduce a lot of noise in photos.

The only option left was to enhance photos in software, using algorithms. This task fell to Marc Levoy, a Stanford computer science professor and an expert in computational photography who worked on software-based image capture and processing technologies.

Marc assembled a team known as GCam to explore an Image Fusion method—combining a series of shots into a single frame. Photos processed this way came out brighter, sharper, and with less noise. In 2013, the technology debuted in Google Glass, and later that same year, rebranded as HDR+, it shipped on the Nexus 5.

How HDR+ Works

HDR+ is an extremely complex technology that we can’t fully break down in this article. Instead, we’ll cover the general principles without getting into the details.

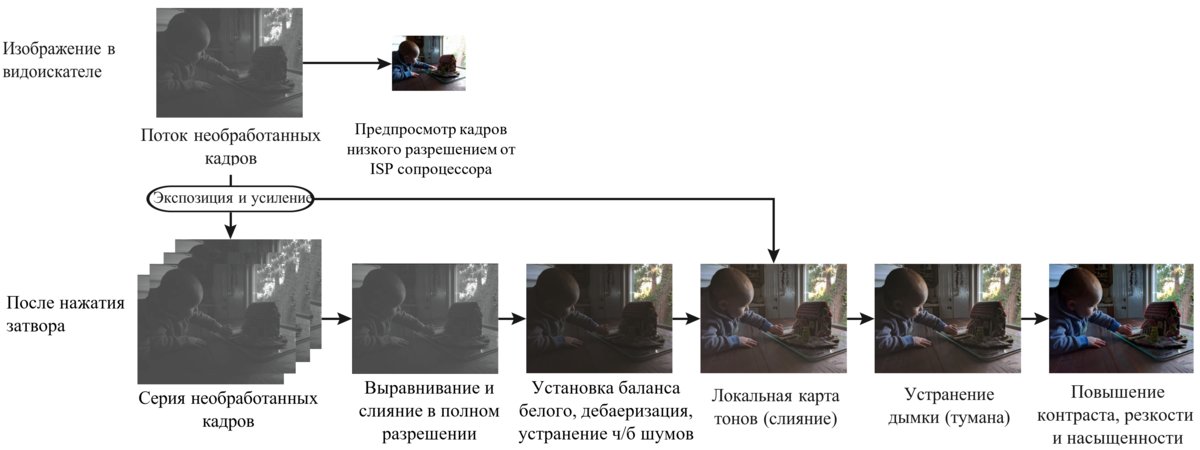

The Core Principle

When you press the shutter button, the camera captures a burst of short, deliberately underexposed frames so that highlights don’t clip and as much detail as possible is preserved. The number of frames depends on how challenging the lighting is: the darker the scene, or the more shadow detail that needs to be recovered, the more frames the smartphone will shoot.
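
Why does a darker scene call for more frames? If each frame carries independent noise of similar strength, averaging N frames reduces that noise by a factor of about √N, so the signal-to-noise ratio (SNR) grows by the same factor. The hypothetical helper below turns that rule of thumb into a frame count; the SNR figures are assumed to come from some scene-metering step, and the 2 to 10 bounds are illustrative, not Google's actual limits.

```python
import math


def frames_needed(single_frame_snr, target_snr, min_frames=2, max_frames=10):
    """Hypothetical heuristic: how many frames to average to reach target_snr.

    Assumes each frame carries independent, zero-mean noise of the same
    strength, so averaging N frames improves SNR by a factor of sqrt(N).
    """
    if single_frame_snr <= 0:
        return max_frames  # no usable signal estimate: shoot as many as allowed
    n = math.ceil((target_snr / single_frame_snr) ** 2)
    return max(min_frames, min(max_frames, n))
```

A scene metered at an SNR of 10 needs `frames_needed(10, 30)`, or 9 frames, to reach an SNR of 30, while a bright scene at 20 needs only 3; a very dark scene at 5 would need 36 and is capped at 10.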

Once the burst is captured, it’s merged into a single shot. The short exposure (fast shutter speed) helps here by keeping each frame relatively sharp. Of the first three frames, the one with the best combination of sharpness and detail is chosen as the base. The frames are then split into patches, and for each patch the system determines whether, and with what shift, it can be aligned with the corresponding patch of the base frame. If a patch contains an unwanted object (for example, something that moved between frames), it is discarded and a similar patch from another frame is used instead. The assembled image is then processed with a specialized algorithm based on lucky imaging, a method mostly used in astrophotography to reduce blur caused by atmospheric turbulence (the same effect that makes stars twinkle).
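
The base-frame selection and patch merging described above can be sketched as follows. This is a simplified illustration that assumes square tiles aligned by pure translation, a brute-force search over small shifts, and grayscale frames stored as lists of rows; the sharpness measure (gradient energy), the tile size, the search radius, and the rejection threshold are all hypothetical choices, not Google's. Unlike the description above, a rejected tile is simply skipped rather than replaced with a patch from another frame, and the lucky-imaging step is omitted.

```python
def sharpness(frame):
    # Gradient energy: sum of squared differences between neighboring pixels.
    # Crisper frames have stronger local differences and score higher.
    h, w = len(frame), len(frame[0])
    energy = 0.0
    for y in range(h):
        for x in range(w):
            if x + 1 < w:
                energy += (frame[y][x + 1] - frame[y][x]) ** 2
            if y + 1 < h:
                energy += (frame[y + 1][x] - frame[y][x]) ** 2
    return energy


def pick_base(frames, candidates=3):
    # The sharpest of the first few frames becomes the reference frame.
    pool = frames[:candidates]
    return max(range(len(pool)), key=lambda i: sharpness(pool[i]))


def tile_error(base, frame, y0, x0, size, dy, dx):
    # Mean absolute difference between a base tile and the same tile in
    # `frame` shifted by (dy, dx).
    total = 0.0
    for y in range(y0, y0 + size):
        for x in range(x0, x0 + size):
            total += abs(base[y][x] - frame[y + dy][x + dx])
    return total / (size * size)


def best_offset(base, frame, y0, x0, size, search):
    # Brute-force search over shifts in [-search, search] that keep the tile
    # inside the frame; returns (error, dy, dx) for the best match.
    h, w = len(frame), len(frame[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            if not (0 <= y0 + dy and y0 + dy + size <= h
                    and 0 <= x0 + dx and x0 + dx + size <= w):
                continue
            err = tile_error(base, frame, y0, x0, size, dy, dx)
            if best is None or err < best[0]:
                best = (err, dy, dx)
    return best


def merge_burst(frames, size=4, search=1, reject_threshold=0.1):
    """Average aligned tiles into the base frame, skipping tiles that don't match."""
    base_index = pick_base(frames)
    base = frames[base_index]
    h, w = len(base), len(base[0])
    merged = [row[:] for row in base]  # pixels outside full tiles keep base values
    for y0 in range(0, h - size + 1, size):
        for x0 in range(0, w - size + 1, size):
            sums = [[base[y][x] for x in range(x0, x0 + size)]
                    for y in range(y0, y0 + size)]
            count = 1
            for i, frame in enumerate(frames):
                if i == base_index:
                    continue
                err, dy, dx = best_offset(base, frame, y0, x0, size, search)
                if err > reject_threshold:
                    continue  # too different (e.g. a moving subject): leave it out
                for ty in range(size):
                    for tx in range(size):
                        sums[ty][tx] += frame[y0 + ty + dy][x0 + tx + dx]
                count += 1
            for ty in range(size):
                for tx in range(size):
                    merged[y0 + ty][x0 + tx] = sums[ty][tx] / count
    return merged, base_index
```

Averaging only the tiles that pass the threshold is what keeps a subject who moved between frames from turning into a ghost: their tiles differ too much from the base and never enter the average.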

Next, a sophisticated noise-reduction pipeline kicks in. It combines a simple multi-shot pixel color averaging method with a component that predicts where noise is likely to appear. The algorithm is very gentle around tonal transitions to minimize detail loss, even if that means leaving a bit of noise in those areas. In regions with uniform texture, however, the denoiser smooths the image to an almost perfectly even tone while preserving shade gradations.
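
The "gentle near edges, aggressive in flat areas" behavior can be illustrated with a bilateral filter, a textbook edge-aware smoother swapped in here for illustration; it is not the denoiser HDR+ actually uses. Each pixel becomes a weighted average of its neighbors, but neighbors whose values differ sharply from it get almost no weight, so a tonal transition is not smeared. The sketch works on a single row of values in [0, 1], and the parameter values are arbitrary.

```python
import math


def bilateral_1d(row, radius=2, sigma_space=1.5, sigma_range=0.1):
    """Edge-aware smoothing of one row of pixel values in [0, 1]."""
    out = []
    for i, center in enumerate(row):
        num = den = 0.0
        for j in range(max(0, i - radius), min(len(row), i + radius + 1)):
            # Closer pixels count more (spatial term)...
            w_space = math.exp(-((i - j) ** 2) / (2 * sigma_space ** 2))
            # ...and pixels with a very different value count much less (range
            # term), which is what keeps edges from being smeared.
            w_range = math.exp(-((row[j] - center) ** 2) / (2 * sigma_range ** 2))
            w = w_space * w_range
            num += w * row[j]
            den += w  # always >= 1 because the center pixel has weight 1
        out.append(num / den)
    return out
```

On a row like `[0.2, 0.22, 0.18, 0.21, 0.19, 0.8, 0.82, 0.78, 0.81, 0.79]`, the left half flattens to values within about 0.01 of each other, while the jump from roughly 0.2 to 0.8 stays sharp.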

What about extending the dynamic range? As we already know, using a short exposure prevents blown highlights. What remains is to remove the noise in the dark areas with the algorithm described earlier, after which the shadows can be brightened without the noise becoming objectionable.
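
Brightening the shadows once they are clean is a tone-mapping step. As a deliberately simple stand-in for whatever tone mapping HDR+ really performs, the hypothetical function below applies a global log-shaped curve to a linear pixel value: dark tones are boosted strongly, bright tones are compressed, and black and white stay fixed. The `strength` parameter is an illustrative assumption.

```python
import math


def lift_shadows(value, strength=8.0):
    """Map a linear pixel value in [0, 1] through a log-shaped tone curve.

    Dark values are boosted strongly, bright values are compressed, and the
    end points 0 and 1 stay fixed.
    """
    return math.log1p(strength * value) / math.log1p(strength)
```

With `strength=8.0`, the slope near black is 8 / ln 9 ≈ 3.6, so the darkest tones, and any noise left in them, are amplified about 3.6 times. That is why the denoising has to come first.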

At the final stage, the image undergoes post-processing: the algorithm reduces vignetting caused by light hitting the sensor at an oblique angle, corrects chromatic aberration by replacing pixels along high-contrast edges with neighboring ones, boosts greens, shifts blue and magenta hues toward cyan, sharpens the image, and performs several other steps to improve overall image quality.
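
Two of these post-processing steps are easy to sketch: vignetting correction, modeled here as a radial gain that rises toward the corners, and sharpening via an unsharp mask, which adds back the difference between the image and a blurred copy. Both are generic textbook versions; the quadratic falloff model, the `falloff` and `amount` values, and the 3-tap blur are assumptions, not the parameters Google uses.

```python
import math


def correct_vignetting(image, falloff=0.3):
    """Brighten pixels toward the corners with a radial gain of 1 + falloff * r^2.

    r is the distance from the image center, normalized so the corners sit at
    r = 1. The quadratic model and the falloff value are illustrative assumptions.
    """
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    max_r = math.hypot(cy, cx) or 1.0  # avoid division by zero for 1x1 images
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            r = math.hypot(y - cy, x - cx) / max_r
            row.append(min(1.0, image[y][x] * (1 + falloff * r * r)))
        out.append(row)
    return out


def unsharp_mask_1d(row, amount=0.5):
    """Sharpen a row by adding back the difference from a 3-tap box blur."""
    out = []
    for i, v in enumerate(row):
        left = row[max(0, i - 1)]  # edge pixels reuse themselves as neighbors
        right = row[min(len(row) - 1, i + 1)]
        blurred = (left + v + right) / 3
        out.append(min(1.0, max(0.0, v + amount * (v - blurred))))
    return out
```

On the row `[0.2, 0.2, 0.8, 0.8]`, the unsharp mask pushes the two pixels next to the edge apart (to 0.1 and 0.9), which is exactly the local contrast boost that reads as extra sharpness.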

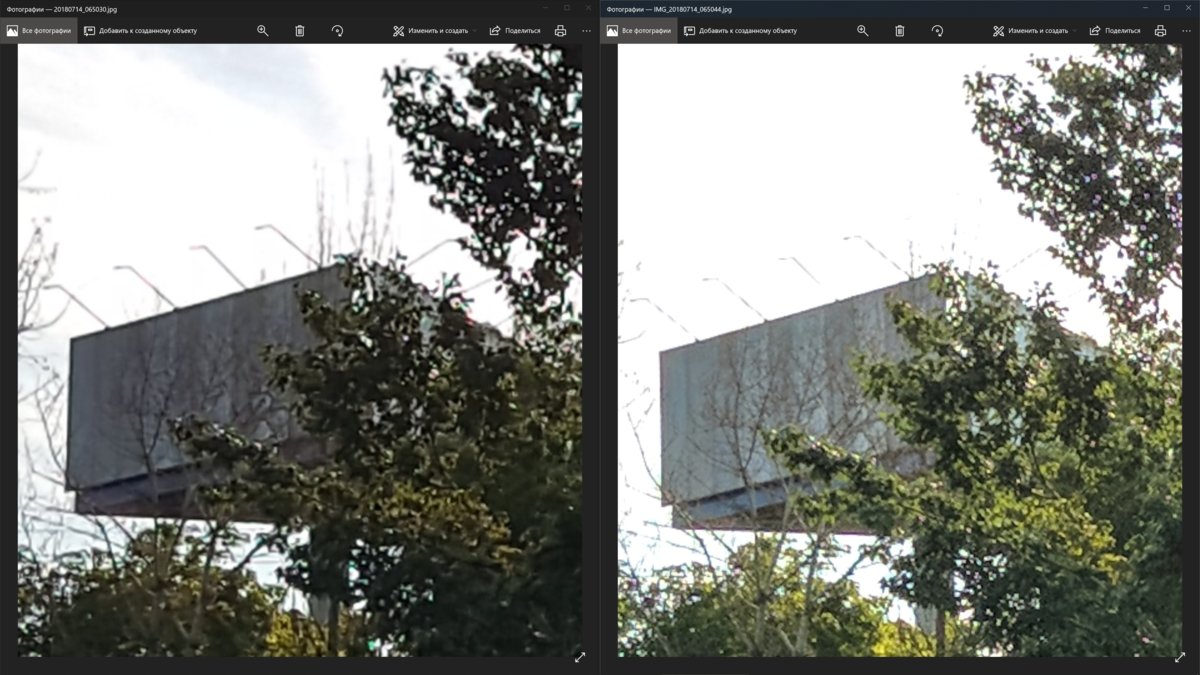

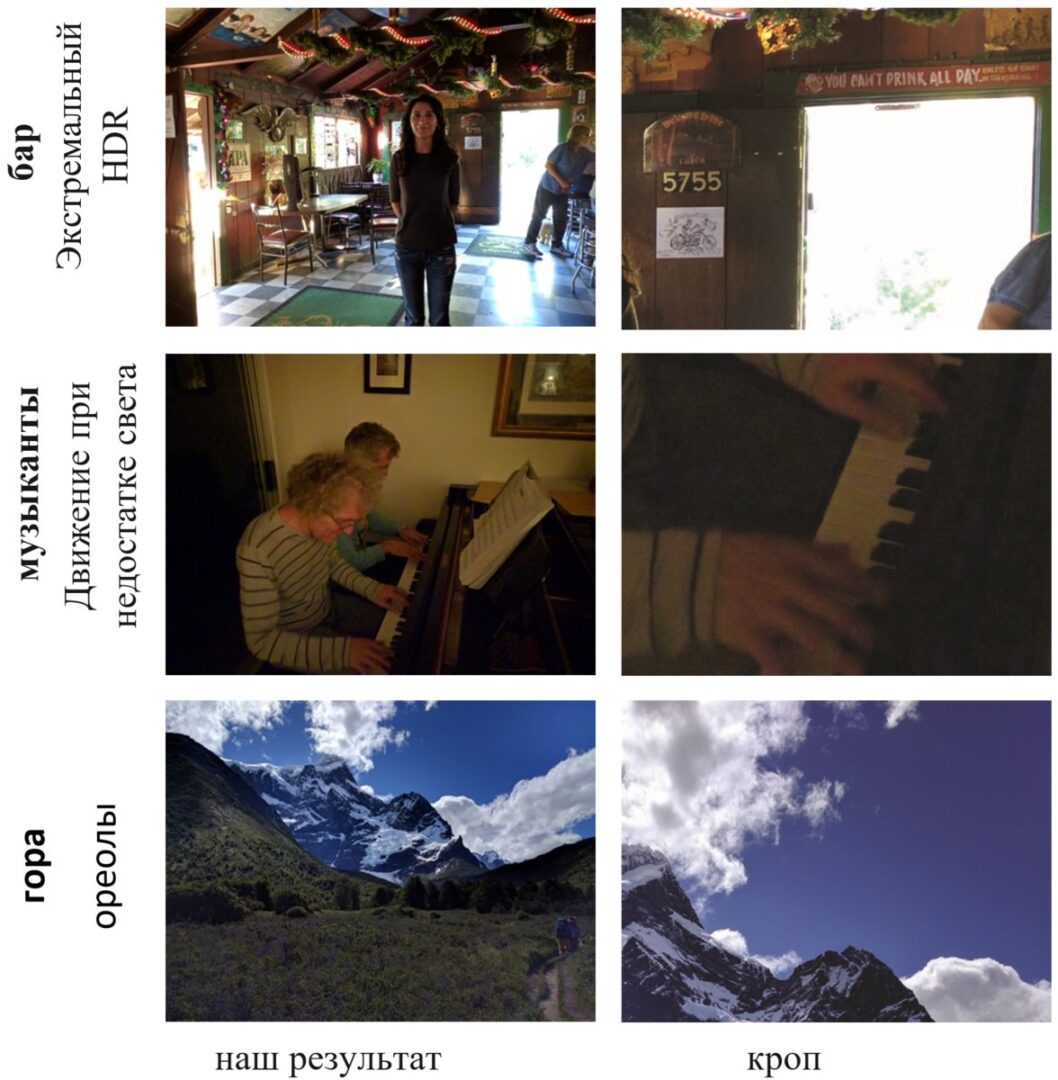

On the left is an HDR photo from Samsung’s stock camera; on the right is an HDR+ image produced with GCam. You can see the algorithm sacrificed sky detail to better render objects on the ground.

HDR+ on the Google Pixel: What’s changed

On Google Pixel, the algorithm has undergone significant changes (source). The phone now starts capturing frames as soon as the camera opens, taking anywhere from 15 to 30 frames per second depending on the lighting. This technology is called ZSL (Zero Shutter Lag) and was originally designed to enable instant captures. Pixel repurposes it for HDR+: when you press the shutter, the phone selects 2 to 10 frames from the ZSL buffer (depending on lighting and motion in the scene). It then picks the best of the first two or three frames as the base, and, as in the previous algorithm, stacks the remaining frames on top of it.
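
A zero-shutter-lag buffer is essentially a ring buffer: the newest frame overwrites the oldest, so when the shutter is pressed, the burst already exists. The sketch below is hypothetical (the class and method names are invented); frames can be any objects, the `score` callback stands in for whatever sharpness metric the camera uses, and the capacity of 15 is an arbitrary choice.

```python
from collections import deque


class ZslBuffer:
    """Hypothetical zero-shutter-lag ring buffer holding the most recent frames."""

    def __init__(self, capacity=15):
        # A deque with maxlen silently drops the oldest frame when it is full.
        self.frames = deque(maxlen=capacity)

    def push(self, frame):
        # Called for every frame the sensor delivers while the viewfinder is open.
        self.frames.append(frame)

    def on_shutter(self, burst_size, score, base_candidates=3):
        """Return (burst, base_index) using frames that were already captured.

        burst holds the last burst_size frames, oldest first; base_index points
        at the best-scoring frame among the first base_candidates of the burst.
        """
        if burst_size < 1:
            raise ValueError("burst_size must be at least 1")
        if not self.frames:
            raise ValueError("no frames captured yet")
        burst = list(self.frames)[-burst_size:]
        pool = burst[:base_candidates]
        base_index = max(range(len(pool)), key=lambda i: score(pool[i]))
        return burst, base_index
```

Using `deque(maxlen=...)` means there is no copying or manual index arithmetic while the viewfinder runs; the only work at shutter time is slicing off the last few frames and scoring a handful of base candidates.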

In addition, there are now two modes: HDR+ Auto and HDR+. The latter captures as many frames as possible to produce the final image, resulting in richer, brighter photos.

HDR+ Auto captures fewer frames, which reduces motion blur, lessens the impact of camera shake, and produces a photo almost instantly after you press the shutter button.

In the Pixel 2/2 XL build of Google Camera, HDR+ Auto was renamed to HDR+ On, and HDR+ is now called HDR+ Enhanced.

The second-generation Google Pixel introduced a dedicated coprocessor called the Pixel Visual Core. For now, the chip is only used to accelerate HDR+ photo processing and to enable third-party apps to capture in HDR+. Its presence doesn’t affect the quality of photos taken with the Google Camera app.

Google uses HDR+ to work around hardware issues, too. Because of a design flaw, the Pixel/Pixel XL could produce photos with pronounced lens flare. Google released an update that leverages HDR+ to mitigate it by blending multiple frames.

Pros and cons

Key benefits of HDR+:

- The algorithm does an excellent job reducing noise in photos while barely affecting fine detail.

- Colors in low-light scenes are much richer than with single-frame shooting.

- Moving subjects are less prone to ghosting than in standard HDR mode.

- Even in low light, the risk of blur from camera shake is kept to a minimum.

- Dynamic range is wider than without HDR+.

- Color reproduction generally looks more natural than with a single exposure (not on every phone), especially toward the edges of the frame.

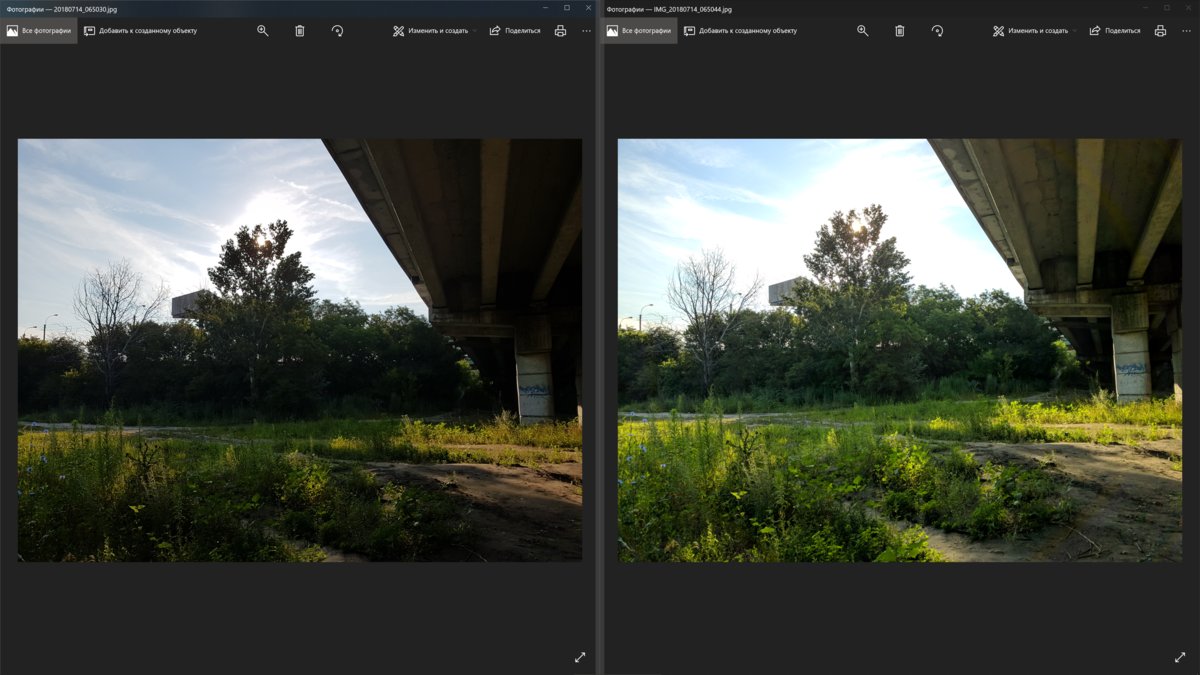

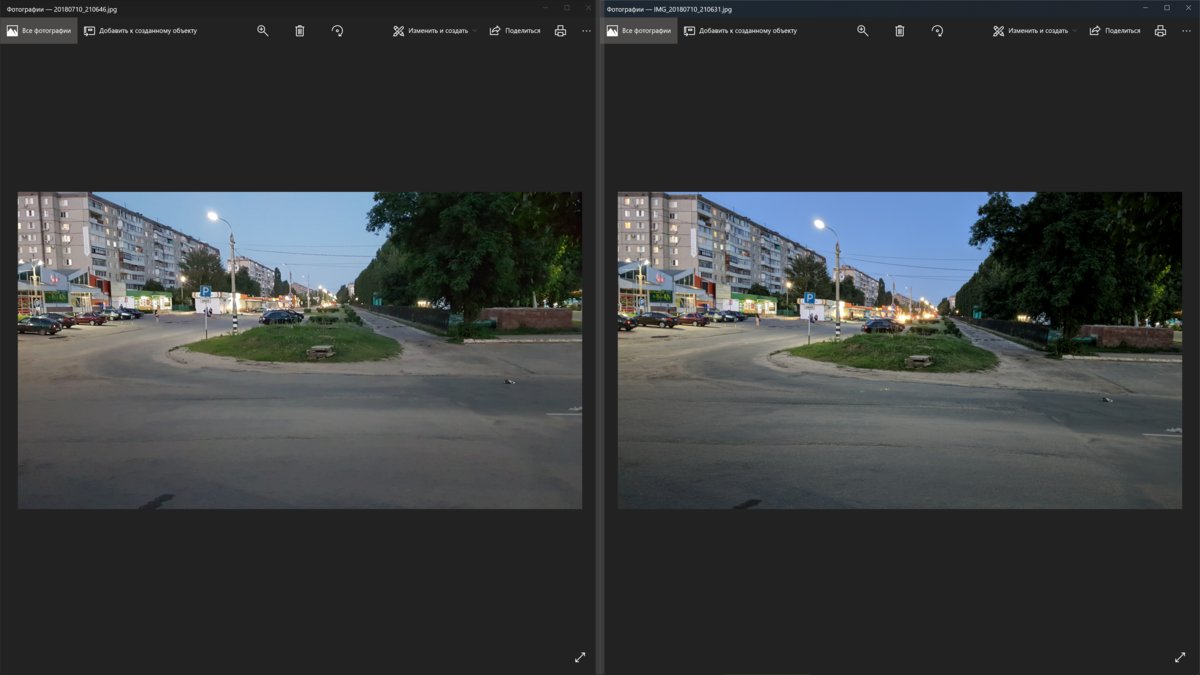

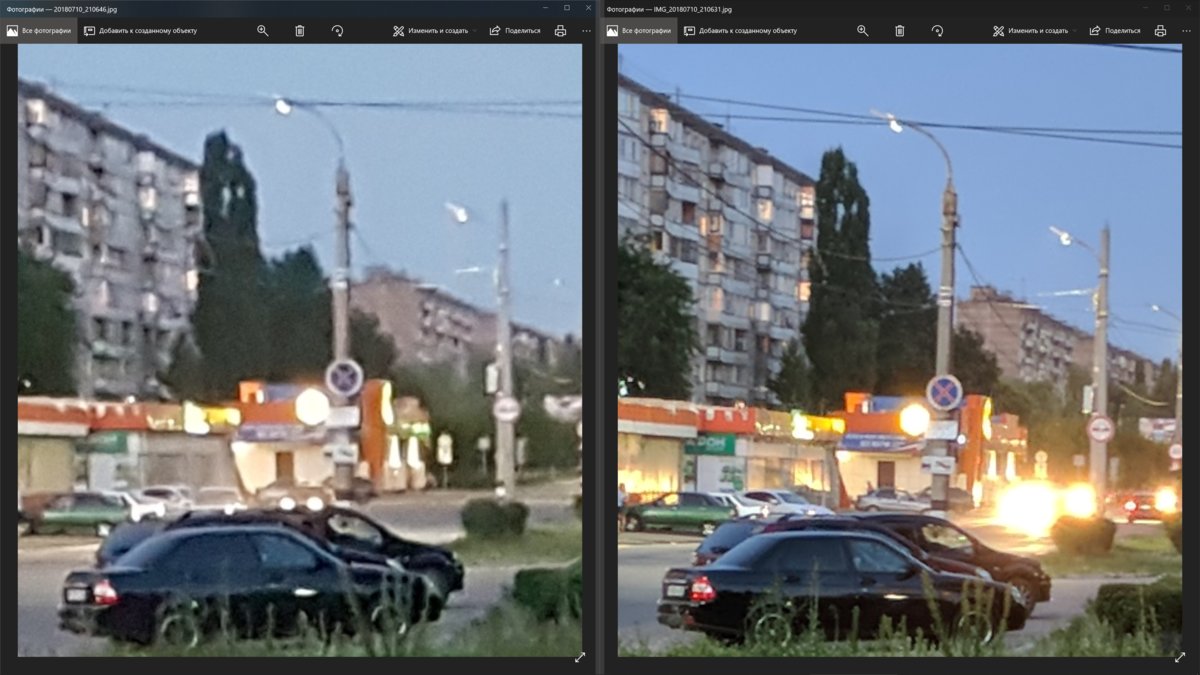

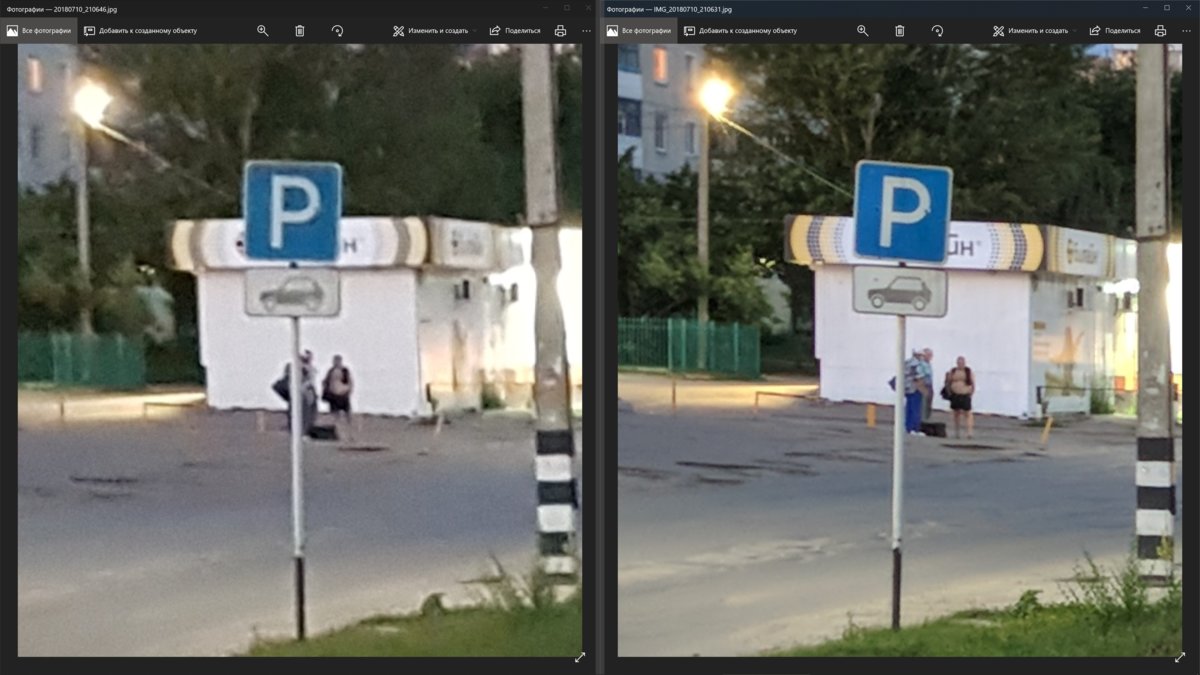

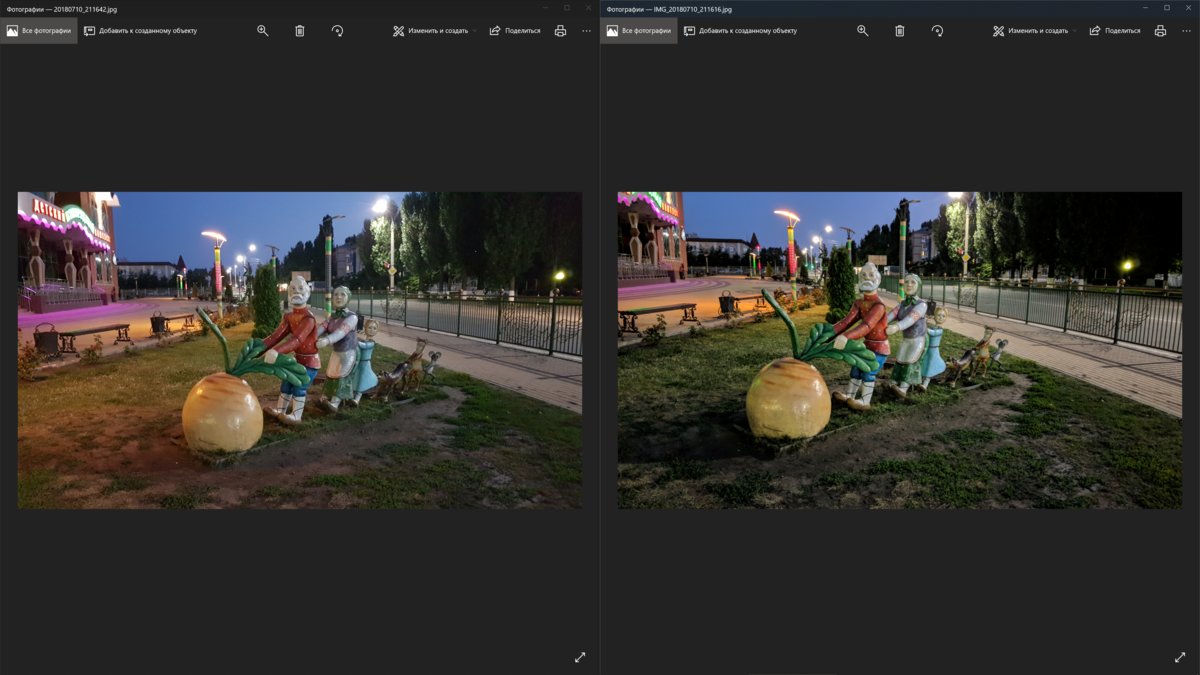

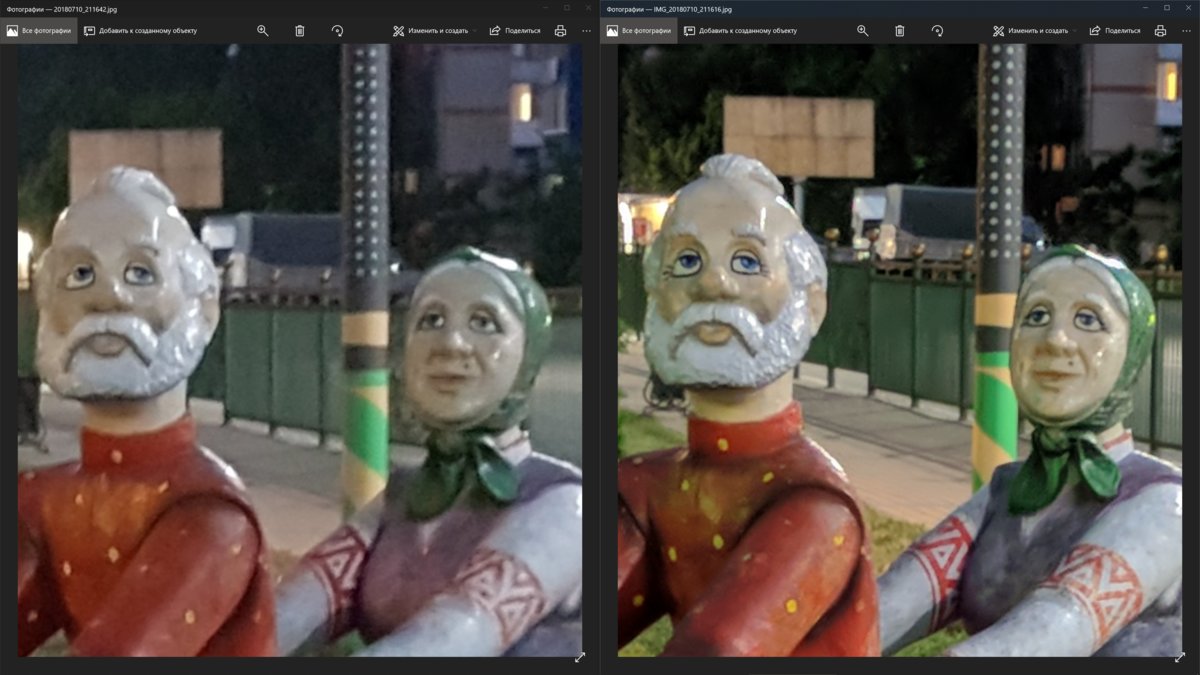

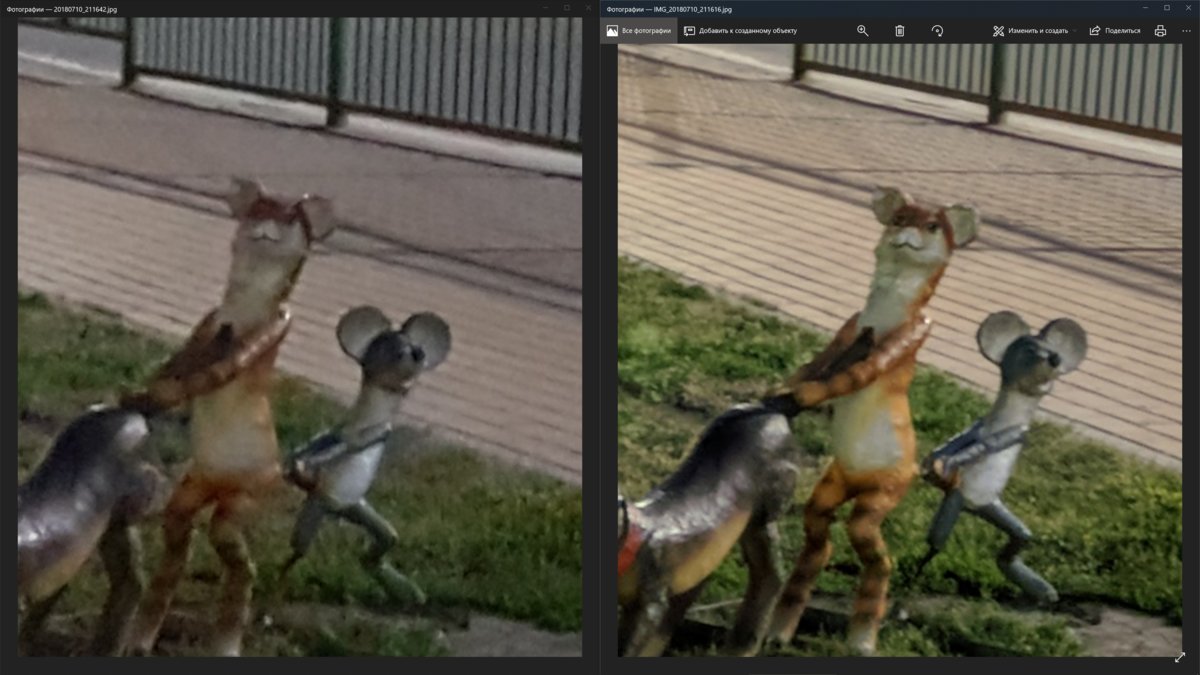

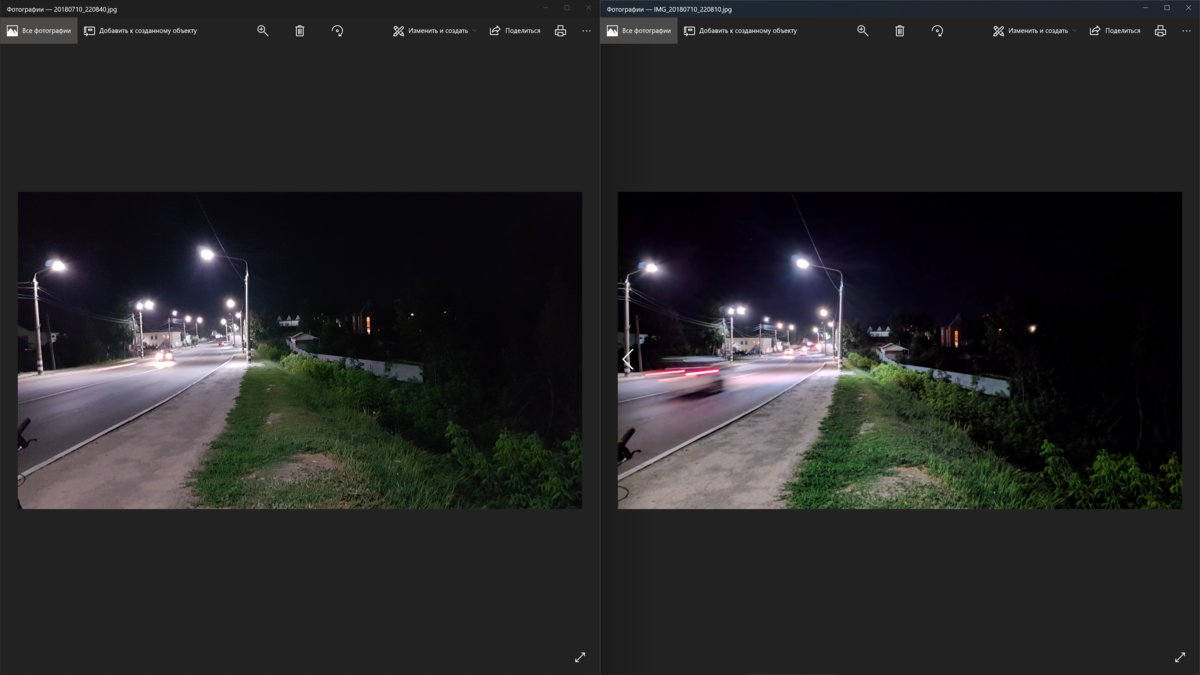

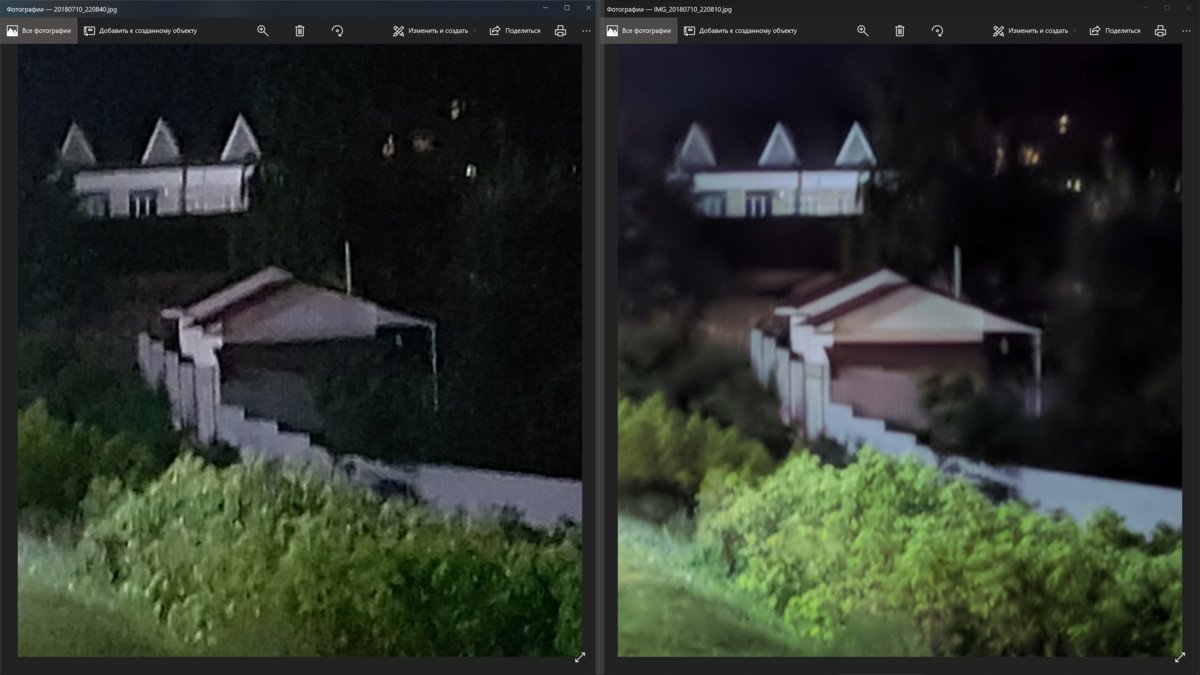

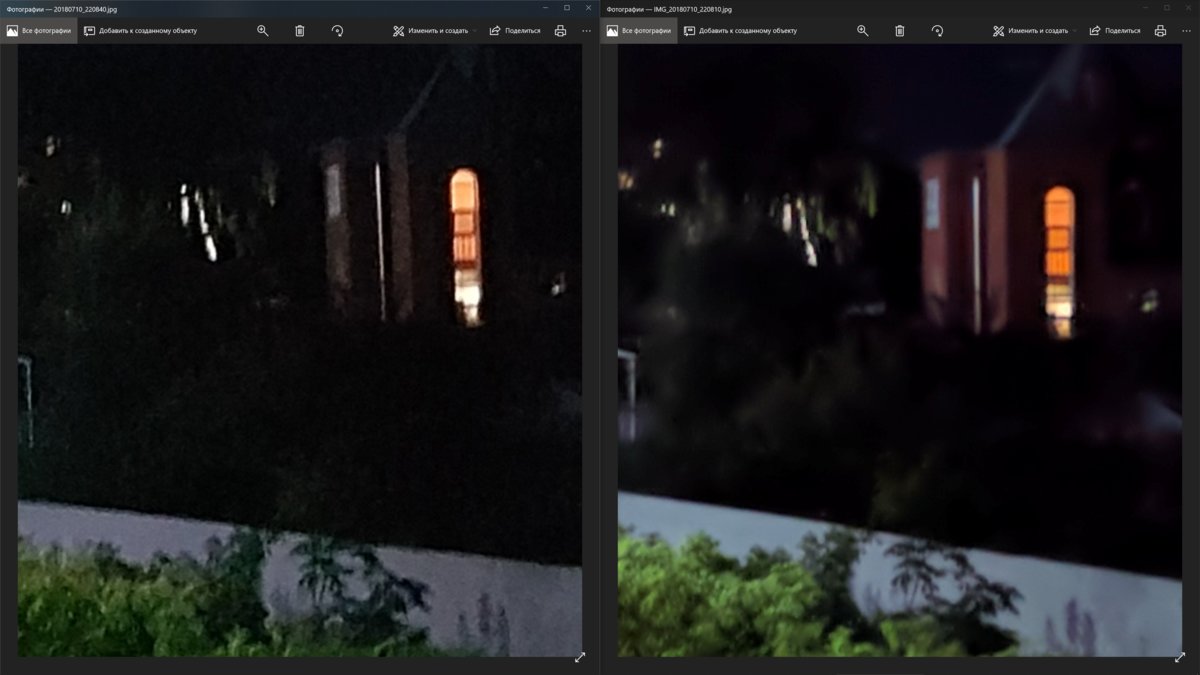

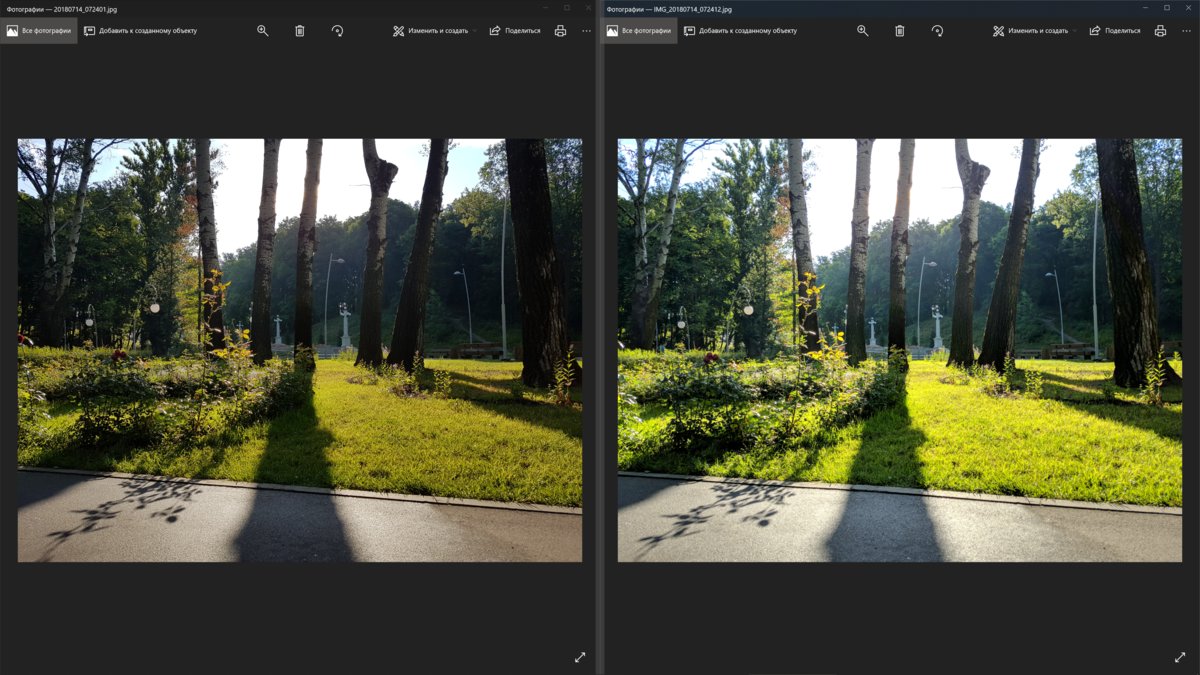

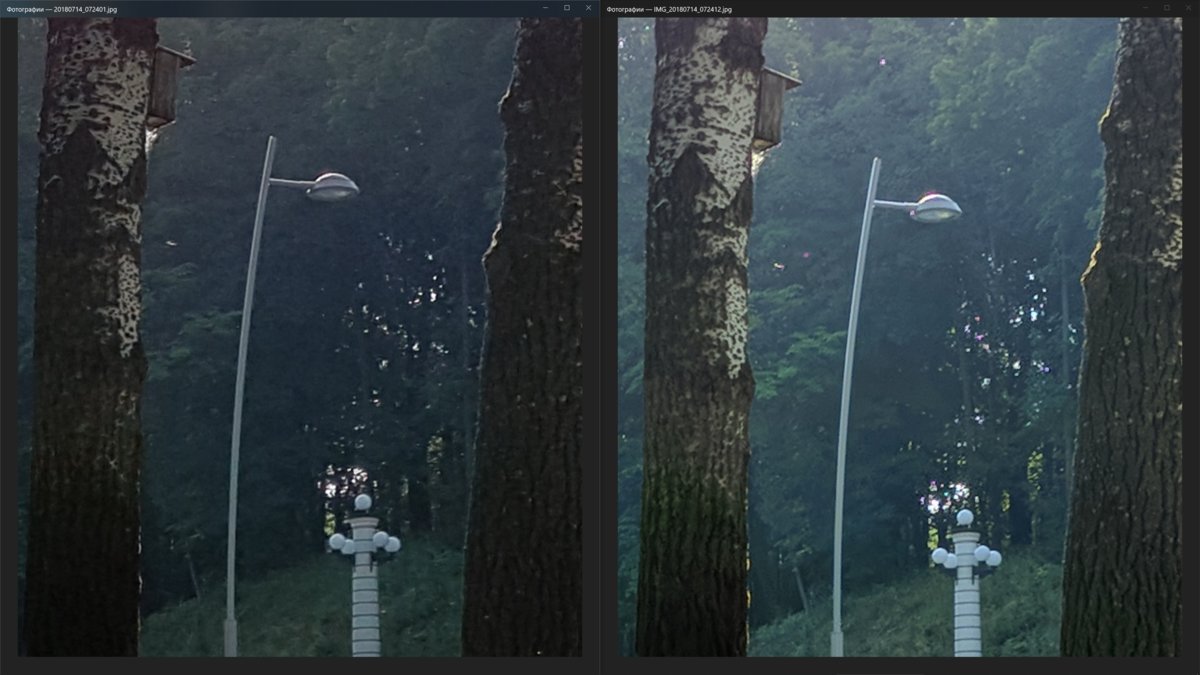

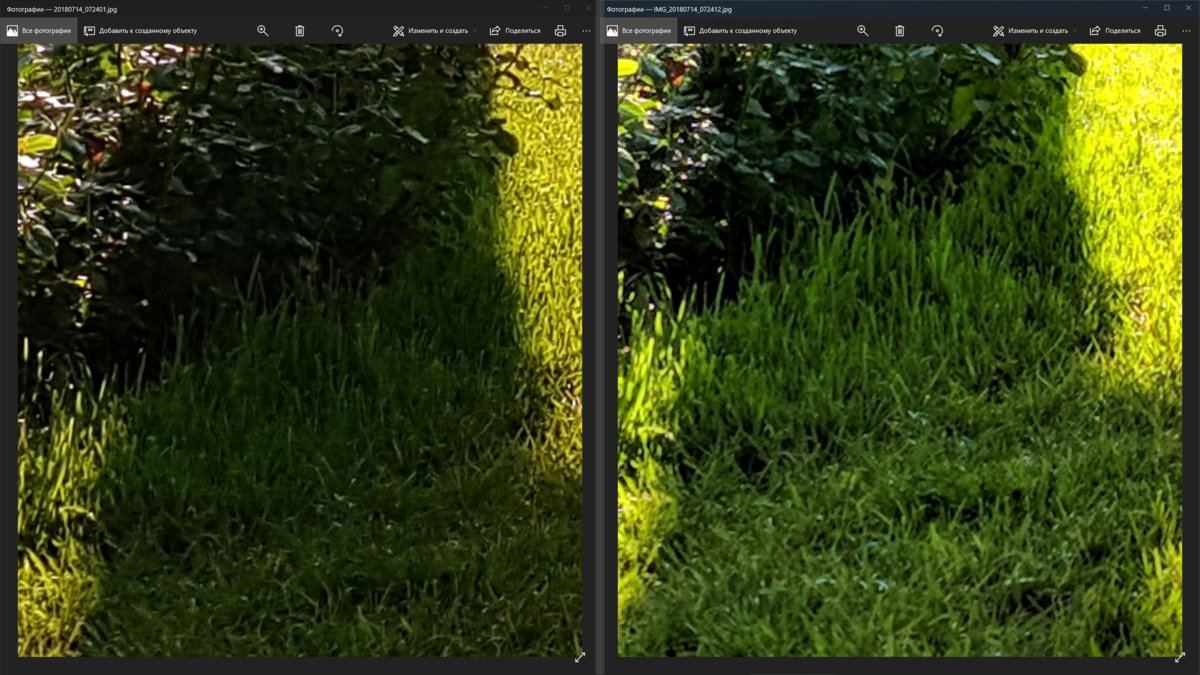

In the illustrations above, the photo on the left is from the Galaxy S7’s stock camera app, while the one on the right is an HDR+ shot taken with Google Camera on the same device.

Nighttime city photos. It’s clear that HDR+ lets us capture a crisp image of the group of people standing under the Beeline sign. The sky looks clean, and the road sign is sharp. The grass is properly green. The Beeline sign shows accurate colors. Balconies, wires, and tree canopies are rendered clearly. Important note: detail in the shadowed trees on the right is somewhat worse with HDR+ than with the stock camera.

Notice the detail on the sculptures’ faces, the richness of the clothing colors, and the lack of objectionable noise. That said, detail in shadow areas is still lacking.

The city’s outskirts. The dim glow of the streetlights is enough for HDR+ to bring out detail on the building’s wall.

Morning photos. In challenging morning shooting conditions with strong backlighting, colors look natural, the texture on the tree trunks is crisp, and details of the bush and the grass in the tree’s shade remain visible deep into the shadows.

HDR+ has few downsides, and for most scenes they’re minor. First, producing an HDR+ photo is CPU- and RAM‑intensive, which leads to a number of drawbacks:

- Power consumption goes up and the device heats up when merging a series of shots.

- You can’t quickly take multiple photos.

- No instant preview; the photo only appears in the gallery after processing, which takes up to four seconds on a Snapdragon 810.

Some of these issues have already been addressed with the Pixel Visual Core, but that coprocessor will most likely remain an advantage exclusive to Google Pixel phones.

Second, the algorithm needs at least two images to work, and in practice it captures four to five frames on average. Therefore:

- There will inevitably be situations where the algorithms fall short or fail.

- HDR+ trails classic HDR slightly in overall dynamic range coverage.

- For action scenes, capturing a single frame and processing it with a fast ISP co-processor is preferable, as it helps avoid ghosting and smearing when shutter speeds aren’t high.

Which devices support HDR+

In theory, HDR+ can run on any smartphone with Android 5.0 or newer (it requires the Camera2 API). But for marketing reasons—and because some optimizations depend on specific hardware components, like the Hexagon coprocessor in Snapdragon—Google deliberately disabled HDR+ on anything but Pixel devices. Still, this is Android: enthusiasts have found ways to get around that limitation.

In August 2017, a 4PDA user managed to modify the Google Camera app so that HDR+ could be used on any smartphone with a Hexagon 680+ DSP (Snapdragon 820+) and the Camera2 API enabled. Initially, the mod didn’t support ZSL (zero shutter lag) and was generally rough around the edges. Even so, it was enough to boost the photo quality of devices like the Xiaomi Mi5S and OnePlus 3 to previously unattainable levels, and it even let the HTC U11 compete on equal footing with the Google Pixel.

Over time, more developers joined the effort to bring Google Camera to third-party phones. Before long, HDR+ was working even on devices with Snapdragon 808 and 810. Today, there’s a port of Google Camera for virtually every Snapdragon ARMv8-based smartphone running Android 7 or newer (and in some cases Android 6) that supports the Camera2 API. These ports are often maintained by a single enthusiast, though in many cases several developers contribute.

In early January 2018, XDA user miniuser123 managed to get Google Camera with HDR+ running on a Galaxy S7 with an Exynos processor. A bit later it turned out that Google Camera also worked on the Galaxy S8 and Note 8. The first Exynos builds were unstable, frequently crashing and freezing, with optical image stabilization (OIS) and zero shutter lag (ZSL) not working. Version 3.3 is now stable enough, supports OIS and ZSL, and includes all Google Camera features except Portrait mode. The list of supported devices now also includes several Samsung A-series smartphones.

How to enable HDR+ on your device

If you have an Exynos-based smartphone, your options are limited. Head to the discussion thread on XDA, expand the V8.3b Base section (for Android 8) or the Pixel2Mod Base section (for Android 7), and download the latest build. You can also join the Telegram group, where Google Camera updates are posted promptly.

Owners of smartphones with Qualcomm processors will need to do some digging. Enthusiasts actively maintain Google Camera (GCam) builds with HDR+ for a wide range of phones. Here are the most popular models:

If your model isn’t on the list, check the camera and device discussion threads on 4PDA and XDA. At the very least, you’ll find users who have tried to get HDR+ working.

Additionally, there’s a page that aggregates nearly all Google Camera versions where you can conveniently test different GCam builds on lesser-known devices.

Conclusion

The HDR+ algorithm is a vivid demonstration of what mobile computational photography can do. Arguably, it’s the most effective image-processing algorithm available today. HDR+ needs only a single camera module to produce shots that outclass the dual‑camera systems on some devices.