The beginning

First, let’s see how protection was implemented in early versions of the OS and how it has changed over time.

Android 1.0 was released in fall 2008 together with the HTC Dream smartphone (also known as T-Mobile G1). The device had five security subsystems.

1. Lock screen PIN code protecting against unauthorized physical access.

2. Sandboxes isolating the app data and code execution from other apps. Each app was run on behalf of a Linux user specially created for it. The app had full control over the files in its own sandbox (/) but couldn’t get access to system files and files of other apps. The only way to escape from the sandbox was to gain root privileges.

3. Each app had to specify the permissions required for its operation in the manifest file. These permissions allowed it to use various system APIs (access to the camera, microphone, network, etc.). The permissions were enforced at several levels, including the Linux kernel; however, the app never had to ask the user for them explicitly. It automatically received all the permissions listed in its manifest, and the user’s only choice was between installing the app (thus granting it all the requested permissions) and not installing it.

The permission-based access control did not apply to memory cards and USB drives: their FAT file system does not support file permissions. As a result, any app could read the contents of a memory card.

4. Every app had to be signed with a developer’s key. When a new version of an app was installed, the system verified the digital signatures of the old and new versions and refused the installation if they did not match. This approach protected the user from phishing and data theft (e.g. a Trojan pretending to be a legitimate app and getting access to the files of the original app after an ‘update’).

5. The Java language and its virtual machine provided protection against many types of attacks affecting apps written in memory-unsafe languages, such as C and C++. It’s simply impossible to cause a buffer overflow or reuse freed memory in Java.
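The update check from item 4 can be sketched in a few lines. This is a simplified model, not the actual package manager code: the `Package` structure, the `PackageDb` class, and the use of a certificate fingerprint string are all assumptions made for illustration.

```cpp
#include <string>
#include <unordered_map>

// Hypothetical record of a package: its name and a fingerprint of the
// certificate it was signed with (for example, a hex-encoded digest).
struct Package {
    std::string name;
    std::string certFingerprint;
};

enum class InstallResult { Installed, Updated, RejectedSignatureMismatch };

// Toy package database keyed by package name.
class PackageDb {
public:
    InstallResult install(const Package& incoming) {
        auto it = installed_.find(incoming.name);
        if (it == installed_.end()) {
            installed_.emplace(incoming.name, incoming);
            return InstallResult::Installed;
        }
        // An update must be signed with the same key as the installed version.
        if (it->second.certFingerprint != incoming.certFingerprint) {
            return InstallResult::RejectedSignatureMismatch;
        }
        it->second = incoming;
        return InstallResult::Updated;
    }

private:
    std::unordered_map<std::string, Package> installed_;
};
```

In this model, a Trojan that reuses the package name of a legitimate app but is signed with a different key is rejected, so it never inherits the original app’s sandbox.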

On the other hand, many components of the OS, including system services, the virtual machine, multimedia libraries, graphics rendering system, and all network subsystems, were written in the above-mentioned insecure C and C++ languages and operated with root privileges. A vulnerability in any of these components could be exploited to gain full control over the OS or perform a DoS attack. For instance, HTC Dream was hacked because the preinstalled Telnet service was running with root privileges. After finding a way to launch Telnet, the hacker could connect to the smartphone over the network and get shell access with superuser rights.

Speaking of standard apps, they didn’t have root rights and ran under the protection of a virtual machine in their own sandboxes, but they still had very broad capabilities typical of desktop operating systems. If a third-party app had the required privileges, it could do a lot: read the lists of text messages and calls, read any files on a memory card, get a list of installed apps, run in the background indefinitely, display graphics over windows of other apps, and receive information about nearly all system events (app installation, turning the screen on/off, ringing, charging, etc.).

Technically, none of these APIs were vulnerabilities per se. Quite the opposite, they provided great opportunities for programmers and, accordingly, users. Back in 2008, these opportunities didn’t cause any real problems because the smartphone market was primarily focused on enthusiasts and businessmen. However, a few years later, smartphones became widespread among all social groups, and it became clear that the apps’ capabilities had to be restricted.

Permissions

There are two ways to restrict the app’s rights and prevent it from affecting the system and user data.

- Restrict the capabilities of all apps to a minimum (as was done in J2ME); or

- Enable the user to control the capabilities of each app (the iOS path).

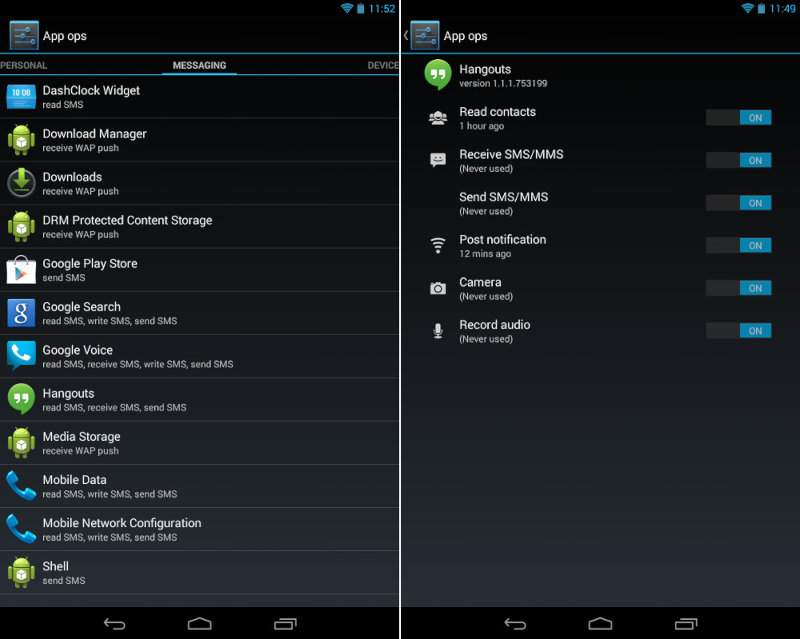

Despite its efficient privilege separation system operating at the OS level, Android did not allow the user to apply either of these methods. Granular permission control was first introduced only in Android 4.3, together with the hidden App Ops menu.

Using these settings, the user could disable any of the app’s permissions. However, the system was neither handy nor user-friendly: the management of permissions was too granular, and you had to navigate through dozens of obscure permissions. Revoking any permission could crash the application because Android had no API that told programmers whether their app had permission to perform a certain action. As a result, the App Ops system was removed from Android as early as version 4.4.2. The permission system used today was first introduced in Android 6.

This time, Google engineers grouped related permissions together, creating seven meta-permissions that can be requested while the app is running. The system is based on a new API that enables programmers to check which permissions are available to an app and request them if necessary.

A side effect of this innovative approach was… its total inefficiency. The new system worked only for apps designed for Android 6.0 and higher. A developer could set the targetSdkVersion directive in the build rules to 22 (i.e. Android 5.1), and the app automatically received all the permissions listed in its manifest.

Google had to implement the system this way to maintain compatibility with old software. As a result, the new system actually came into effect only two years later, after the introduction of the minimum SDK requirements on Google Play (in other words, Google stopped accepting applications built for outdated OS versions). The issue was finally resolved in Android 10: it became possible to revoke permissions from programs created for Android 5.1 and older.
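The targetSdkVersion loophole described above can be modelled as follows. This is a deliberately simplified sketch: the `App` structure and both functions are hypothetical, the real logic lives in the Android framework, and the model does not distinguish ‘dangerous’ permissions from harmless ones.

```cpp
#include <set>
#include <string>

// API level 23 corresponds to Android 6.0, where runtime permissions appeared.
constexpr int kRuntimePermissionsApi = 23;

struct App {
    int targetSdkVersion;
    std::set<std::string> manifestPermissions;  // declared in the manifest
    std::set<std::string> granted;              // currently held
};

// Install-time behaviour: legacy apps get everything they declared,
// modern apps start with nothing and must ask at run time.
void installApp(App& app) {
    if (app.targetSdkVersion < kRuntimePermissionsApi) {
        app.granted = app.manifestPermissions;
    } else {
        app.granted.clear();
    }
}

// Run-time request: only declared permissions can be granted, and only
// if the user agrees (userApproves stands in for the system dialog).
bool requestPermission(App& app, const std::string& permission, bool userApproves) {
    if (app.manifestPermissions.count(permission) == 0) return false;
    if (userApproves) app.granted.insert(permission);
    return app.granted.count(permission) > 0;
}
```

An app with `targetSdkVersion` 22 leaves `installApp` holding every declared permission, which is exactly why the runtime dialogs had no effect until old targets were banned from Google Play.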

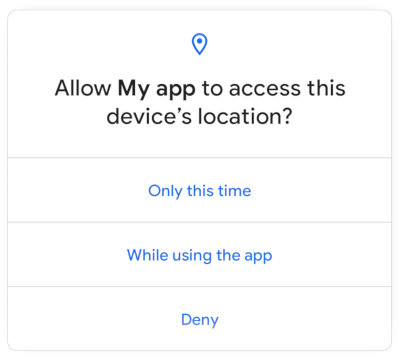

In Android 11, the permission system was modified again: it now allows you to grant the permissions required for a specific operation on a one-time basis. Once the application is minimized, it loses such permissions and has to request them again.

Restrictions

However, the permission management system was just a part of the solution. The second part involved prohibiting the use of dangerous APIs. One could argue whether third-party applications should be allowed to read the phone’s IMEI number using their permissions or prohibited from doing so. For Google, with its obsession with privacy, the answer was obvious.

The company had already restricted some APIs in the past (take, for instance, the ban on enabling flight mode or the confirmation of text messages sent to short numbers in Android 4.2), but in 2017, it launched a full-scale offensive.

Starting with version 8, Android hides many device identifiers from apps and other devices. The Android ID (Settings.) is now different for each installed app. The device serial number (android.) is not available to apps built for Android 8 and higher. The net. variable is empty, while the DHCP client never sends the hostname to the DHCP server. In addition, some system variables became unavailable, including ro.runtime.firstboot (the last boot time).

Starting with Android 9, applications can no longer read the device serial number without the READ_PHONE_STATE permission. Android 10 restricts access to IMEI and IMSI: the READ_PRIVILEGED_PHONE_STATE permission, which is unavailable to third-party applications, is now required to access this information.

Starting with Android 8, the LOCAL_MAC_ADDRESS permission is required to get the Bluetooth MAC address, while the Wi-Fi MAC address is randomized when the device is checking for available networks (to prevent tracking of users, e.g. shoppers in shopping malls).
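To illustrate what a randomized MAC address looks like, here is a minimal sketch. It is not Android’s implementation; the function name is hypothetical, and `std::mt19937` is used only to keep the example short (a real implementation would need an unpredictable random source). The only fixed part is the standard address format: the lowest bit of the first octet is cleared (unicast) and the next bit is set (locally administered).

```cpp
#include <array>
#include <cstdint>
#include <random>

using MacAddress = std::array<std::uint8_t, 6>;

// Generates a random MAC address. In the first octet, bit 0 is cleared
// (unicast) and bit 1 is set (locally administered), which marks the
// address as not assigned by a hardware vendor.
MacAddress randomMac(std::mt19937& rng) {
    std::uniform_int_distribution<int> byte(0, 255);
    MacAddress mac{};
    for (auto& octet : mac) {
        octet = static_cast<std::uint8_t>(byte(rng));
    }
    mac[0] = static_cast<std::uint8_t>((mac[0] & 0xFC) | 0x02);
    return mac;
}
```

Because a fresh address can be generated for every scan, an observer counting probe requests in a shopping mall cannot link them to one device by its hardware address.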

In Android 9, Google went further and prohibited the use of the camera, microphone, and any sensors when the application is running in the background (only foreground services can use the camera and microphone). Android 10 prohibits access to the current location in the background (the ACCESS_BACKGROUND_LOCATION permission is required) and prohibits clipboard reading in the background (the READ_CLIPBOARD_IN_BACKGROUND permission is required).

Another important innovation introduced in Android 9 is the complete ban on HTTP without TLS (i.e. without encryption) for all apps created for newer Android versions. It is possible, however, to circumvent this restriction by specifying the list of allowed domains in the network security configuration file (network_security_config.).

Android 10 prohibits background apps from launching activities (i.e. other apps). The exceptions are bound services (e.g. accessibility and autocomplete). Apps using the SYSTEM_ALERT_WINDOW permission and apps that receive the name of the activity from the system’s PendingIntent can launch activities in the background, too. Also, apps can no longer run binaries from their own private directories (this already caused problems with the popular Termux app).

Starting with Android 11, apps can no longer directly access the memory card, either internal or external, using the READ_EXTERNAL_STORAGE and WRITE_EXTERNAL_STORAGE permissions. Instead, an app must use either its own directory located in / (it is created automatically and does not require permissions) or the Storage Access Framework preventing access to other apps’ data.

Interestingly, Google imposes restrictions on third-party apps using not only the OS mechanisms, but other means as well. In late 2018, a requirement was introduced on Google Play: all apps using permissions to read text messages and call logs must fall under one of the permitted app types and undergo mandatory manual premoderation. As a result, many useful tools were removed from the Market: their authors were unable to justify the need to use certain permissions to Google engineers.

Data Encryption

Permissions and other runtime restrictions provide decent protection, but only until the attacker gains physical access to the device. What if a smartphone lost by its user falls into the hands of a skilled hacker? At the time of the first OS versions, many devices had bootloader-related vulnerabilities or didn’t lock the bootloader at all. Therefore, dumping the NAND memory was a piece of cake.

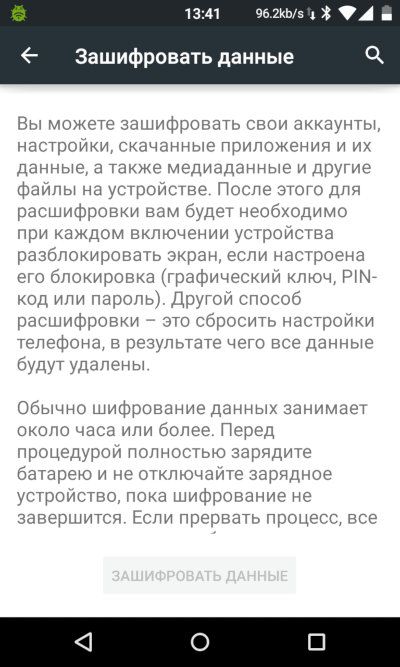

To fix this problem, Android 3.0 introduced a built-in data encryption feature based on the time-tested dm-crypt Linux kernel module. Starting with Android 5, this feature became mandatory for all devices supporting hardware encryption (primarily, 64-bit ARM processors).

The encryption system was pretty standard. The userdata partition was encrypted with the dm-crypt module using the AES-128 algorithm in the CBC mode, with the ESSIV:SHA256 function used to obtain initialization vectors (IV). The encryption key was protected with a key-encryption-key (KEK) that could be either derived from the PIN code using the scrypt function or generated randomly and stored in the TEE. In Android 5.0 smartphones, the encryption was activated by default, and the PIN set by the user was used to generate the KEK.

Starting with Android 4.4, the scrypt function has been used to derive the key from the PIN. It replaced the PBKDF2 algorithm, which was vulnerable to brute-forcing with a GPU (using hashcat, a six-digit PIN was cracked in ten seconds, and a six-character alphabetic password in four hours). According to the scrypt developers, this function increases the brute-forcing time by a factor of about 20,000 and is poorly suited to GPUs due to its high memory requirements.

However, despite all Google’s efforts, the Full Disk Encryption (FDE) system still suffered from a number of conceptual flaws.

- FDE did not allow using different encryption keys for different data areas and users. For example, it was impossible to encrypt the Android for Work section using a corporate key or decrypt data critical for the smartphone functionality without entering the user password.

- The sector-by-sector encryption negated all driver-based performance optimizations. The device continuously decrypted sectors and encrypted them again, thus modifying the partition content. Therefore, FDE drastically reduced the performance and the battery life of the device.

- FDE did not support sector content authentication: a smartphone has too many sectors, and bad ones appear on a regular basis and are reassigned to the spare area by the controller.

- The AES-CBC-ESSIV cryptographic scheme was vulnerable to data leaks because it allowed the identification of data change points, thus making it possible to perform substitution and movement attacks.
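The last flaw can be demonstrated with a toy model. With ESSIV, the IV of a sector depends only on the sector number, so two snapshots of the same sector are encrypted with the same IV, and in CBC every ciphertext block before the first modified plaintext block stays identical. The sketch below assumes a toy 64-bit keyed mixer in place of AES; it is not secure and all names are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Toy 64-bit "block cipher": a keyed bijective mixer (the SplitMix64
// finalizer). It is NOT secure and only stands in for AES here.
std::uint64_t toyEncryptBlock(std::uint64_t block, std::uint64_t key) {
    std::uint64_t x = block ^ key;
    x ^= x >> 30; x *= 0xbf58476d1ce4e5b9ULL;
    x ^= x >> 27; x *= 0x94d049bb133111ebULL;
    x ^= x >> 31;
    return x;
}

// CBC: each plaintext block is XORed with the previous ciphertext block
// (or the IV for the first block) before encryption.
std::vector<std::uint64_t> cbcEncrypt(const std::vector<std::uint64_t>& plain,
                                      std::uint64_t key, std::uint64_t iv) {
    std::vector<std::uint64_t> cipher;
    std::uint64_t prev = iv;
    for (std::uint64_t p : plain) {
        prev = toyEncryptBlock(p ^ prev, key);
        cipher.push_back(prev);
    }
    return cipher;
}

// Index of the first block where two ciphertext snapshots differ,
// or the common length if they are identical.
std::size_t firstChangedBlock(const std::vector<std::uint64_t>& a,
                              const std::vector<std::uint64_t>& b) {
    std::size_t i = 0;
    while (i < a.size() && i < b.size() && a[i] == b[i]) ++i;
    return i;
}
```

An attacker who captures two images of the same sector can call something like `firstChangedBlock` on them and learn where the plaintext was modified without knowing the key.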

The file-based encryption (FBE) system introduced in Android 7 solved the above issues. FBE uses the encryption feature built into the ext4 and F2FS file systems and encrypts each file separately using the AES algorithm, but in the XTS mode. This mode was developed specifically for encrypting block devices, and it is not plagued by the typical CBC vulnerabilities. For instance, XTS does not expose data change points the way CBC does, and it is resistant to data leaks, spoofing, and movement attacks.

Until recently, Google allowed smartphone manufacturers to use various encryption mechanisms; however, starting with Android 10, FBE is mandatory. Furthermore, starting with Android 9, encryption is mandatory for all devices, whether or not they support hardware encryption.

This became possible thanks to the Adiantum encryption mechanism developed by Google. Adiantum is based on the fast NH hash function, the Poly1305 message authentication code (MAC), and the XChaCha12 stream cipher. On the ARM Cortex-A7 processor, Adiantum encrypts data five times faster than AES-256-XTS.

Trusted Execution Environment

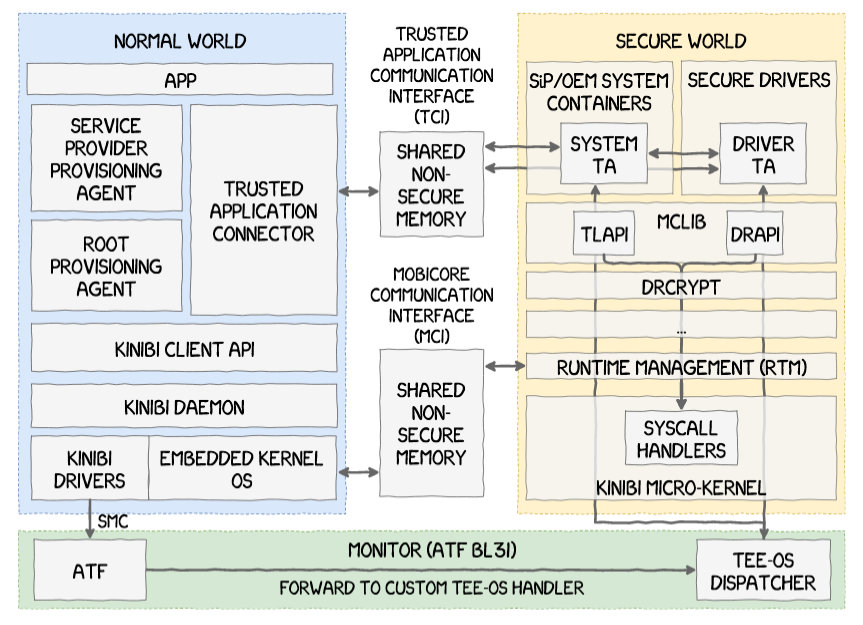

An important innovation introduced in the encryption system of Android 5 is the ability to store the encryption key in a Trusted Execution Environment (TEE). TEE is a dedicated microcomputer located inside or near the mobile chip. This computer has its own OS, and it is solely responsible for data encryption and storage of encryption keys. The micro-PC can be accessed only by a small service inside the main system; as a result, the keys remain secure even if the system is compromised.

The best-known TEE implementation is TrustZone used in Qualcomm chipsets, and it has already been hacked. Other manufacturers use their own developments. For instance, in Samsung smartphones, TEE is implemented under the control of either Kinibi OS developed by Trustonic (Samsung Galaxy S3-S9) or the in-house TEEGRIS system (Samsung Galaxy S10 and up).

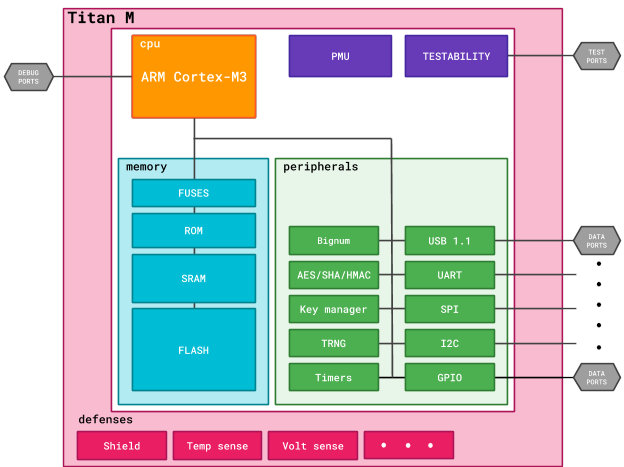

Pixel smartphones (starting with Pixel 3/3XL) use a dedicated Titan M chip designed and manufactured by Google. This is a mobile version of the Titan server chip; it stores, among other things, encryption keys and the rollback counter used by the Verified Boot system (see below). The chip also supports the Android Protected Confirmation function that makes it possible to prove mathematically that the user has actually seen the confirmation dialog and that the response to that dialog was not intercepted or altered. Titan M has a direct electrical connection with the side keys of the smartphone, and it blocks them in case of a hacking attempt.

Titan M is roughly analogous to the Secure Enclave that Apple has been building into its smartphones for several years. It is a dedicated microcomputer based on the ARM Cortex-M3 core and separate from the main processor; as a result, it’s resistant to Rowhammer, Spectre, and Meltdown attacks.

Verified Boot

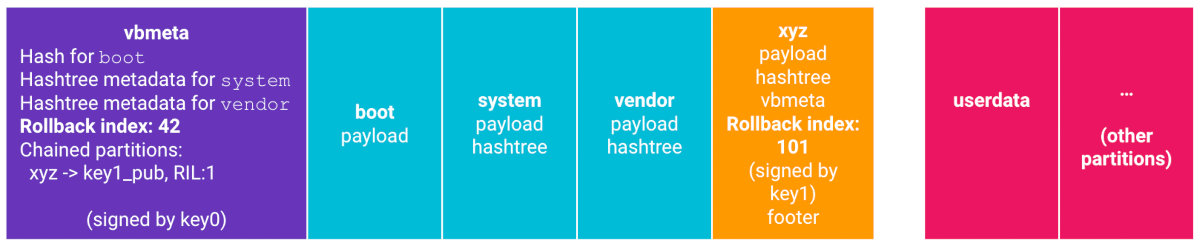

The Verified Boot mechanism was first implemented in Android 4.4. At each boot stage (primary bootloader → secondary bootloader → aboot → Linux kernel → Android system), the current component checks the integrity of the next one (bootloaders by their digital signatures, the kernel by its checksum, and the system by the FS checksum) and takes action if any of the components was altered.

The mechanism was in development for a long time. Only since Android 7 has it relied on the hardware keystore (TEE) and refused to boot if any of the components was compromised (this depends on the device manufacturer).
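The chain-of-trust idea can be sketched as follows. This is a conceptual model only: a real boot chain relies on digital signatures and cryptographic digests, while the sketch uses the non-cryptographic FNV-1a hash as a stand-in, and the `BootStage` structure and `verifyChain` function are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// FNV-1a, used here only as a stand-in for a cryptographic digest
// or signature check.
std::uint64_t fnv1a(const std::string& data) {
    std::uint64_t hash = 0xcbf29ce484222325ULL;
    for (unsigned char c : data) {
        hash ^= c;
        hash *= 0x100000001b3ULL;
    }
    return hash;
}

// One boot stage: its image and the digest it expects for the next stage.
struct BootStage {
    std::string name;
    std::string image;
    std::uint64_t expectedNextDigest;  // ignored for the last stage
};

// Walks the chain; returns the index of the first stage that fails
// verification, or chain.size() if every stage checks out.
std::size_t verifyChain(const std::vector<BootStage>& chain) {
    for (std::size_t i = 0; i + 1 < chain.size(); ++i) {
        if (fnv1a(chain[i + 1].image) != chain[i].expectedNextDigest) {
            return i + 1;
        }
    }
    return chain.size();
}
```

Each stage vouches only for its immediate successor, so the whole chain is trustworthy only if the first stage cannot be replaced.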

Starting with Android 8, Verified Boot officially supports the rollback protection function (the system prohibits the installation of older firmware versions).

A downgrade can be used to get unauthorized access to the smartphone by ‘reviving’ old bugs. For instance, a person steals a phone and then realizes that it is encrypted, password-protected, and its bootloader is locked. The malefactor cannot install custom firmware (because the certificates do not match), but can roll back the smartphone to an older version of the official firmware containing a bug that allows bypassing the screen lock (as was the case with the Samsung firmware, for example).
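Rollback protection boils down to a monotonic counter kept in tamper-resistant storage. A minimal sketch of the idea, with a hypothetical `RollbackStore` structure standing in for that storage:

```cpp
#include <cstdint>

// Stand-in for the rollback counter kept in tamper-resistant storage.
struct RollbackStore {
    std::uint64_t minimumIndex = 0;
};

// An image boots only if its rollback index is not lower than the stored
// minimum; after a successful boot the minimum is raised to match.
bool tryBoot(RollbackStore& store, std::uint64_t imageRollbackIndex) {
    if (imageRollbackIndex < store.minimumIndex) {
        return false;  // older than what has already run: refuse
    }
    store.minimumIndex = imageRollbackIndex;
    return true;
}
```

Once firmware with index 5 has booted, an official but older image with index 4 is refused even though its signature is perfectly valid.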

Stack-smashing protection

Data encryption and the trusted boot mode can protect your phone from an attacker who is unable to bypass the lock screen and tries either to dump a powered-off device or boot it with alternative firmware. But these mechanisms won’t protect a phone against exploitation of its vulnerabilities while the system is running.

Vulnerabilities existing in the kernel, drivers, and system components are often used to gain root privileges. Accordingly, Google started working on these components immediately after the release of the first version of Android.

Starting with Android 1.5, the system components have been using the safe-iop library. This library implements functions that securely perform arithmetic operations with integers (protection against integer overflow). The dlmalloc implementation, extended to hinder double-free and chunk consolidation attacks, was adopted from OpenBSD, as well as the calloc function that checks for a possible integer overflow during each memory allocation operation.

Starting with version 1.5, the low-level Android code is compiled using the GCC ProPolice mechanism, which inserts stack-smashing checks into the code at the compilation stage. Starting with Android 2.3, the code uses hardware stack-smashing protection mechanisms (e.g. the No-eXecute (NX) bit available starting with ARMv6).

In Android 4.0, Google implemented the Address Space Layout Randomization (ASLR) technology, which randomly arranges the executable code, heap, and stack in the address space of a process. This hinders many attack types because the hacker must guess the jump addresses in order to perform the attack. In addition, starting with version 4.1, Android uses the RELRO (Read-only Relocations) mechanism that protects system components from attacks overwriting sections of the ELF file loaded into memory. Starting with Android 4.2, the OS supports the FORTIFY_SOURCE mechanism that detects buffer overflows in functions copying memory and strings. Starting with Android 8, ASLR protects the kernel as well.

Starting with the Linux 3.18 kernel, Android includes a software version of the PAN (Privileged Access Never) function, which restricts direct access from kernel space to the memory of user-space processes. Although the kernel itself normally does not access memory this way, poorly written drivers can do so, thus creating potential vulnerabilities.
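The two ideas mentioned here, overflow-aware arithmetic and an allocation function that validates its size computation, can be sketched as follows. These are not the safe-iop or OpenBSD functions themselves; `checkedAdd` and `checkedCalloc` are hypothetical names for a minimal illustration of the technique.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <limits>

// Addition that reports overflow instead of silently wrapping.
// Returns false and leaves *out untouched if a + b does not fit.
bool checkedAdd(std::int32_t a, std::int32_t b, std::int32_t* out) {
    const std::int32_t kMax = std::numeric_limits<std::int32_t>::max();
    const std::int32_t kMin = std::numeric_limits<std::int32_t>::min();
    if (b > 0 && a > kMax - b) return false;
    if (b < 0 && a < kMin - b) return false;
    *out = a + b;
    return true;
}

// calloc-style allocation that refuses requests whose total size
// (count * size) would not fit in size_t.
void* checkedCalloc(std::size_t count, std::size_t size) {
    if (size != 0 && count > std::numeric_limits<std::size_t>::max() / size) {
        return nullptr;
    }
    return std::calloc(count, size);
}
```

Without the check in `checkedCalloc`, a huge `count` could wrap the multiplication around to a small number, and the caller would then write past the end of an undersized buffer.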

All kernels starting with 3.18 include the Post-init read-only memory function. After the kernel initialization, it marks memory areas that were available for writing during the kernel initialization as read-only.

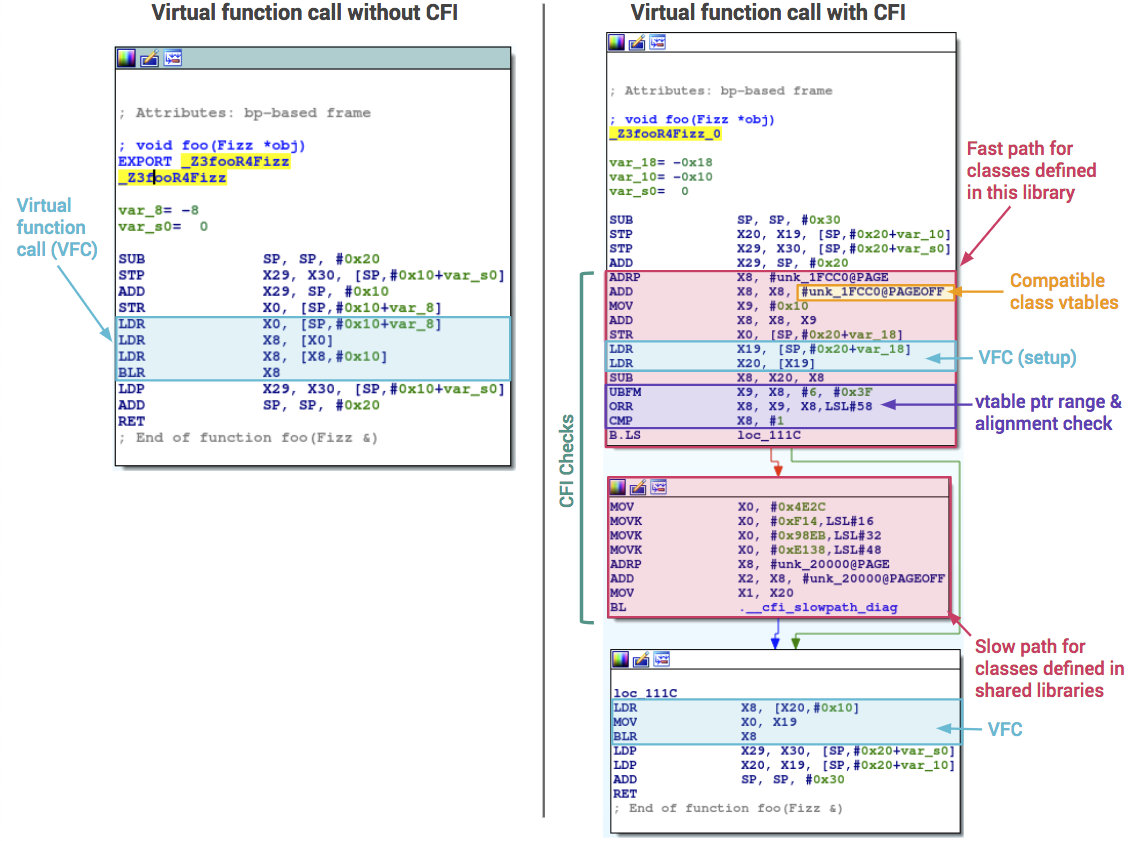

Starting with Android 8, the OS is compiled using the Control Flow Integrity (CFI) technology protecting against exploits based on the Return Oriented Programming (ROP) technique. When CFI is enabled, the compiler builds a function call graph and injects code that checks against this graph prior to each function call. If a call is made to an address that is not present in the graph, the app is terminated.
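Conceptually, the injected check resembles the hand-written guard below. This is only a model: real CFI is generated by the compiler and aborts the process, whereas this sketch uses an explicit list of valid targets and returns `false` so that the behavior is easy to observe. All function names are hypothetical.

```cpp
#include <algorithm>
#include <vector>

using Handler = int (*)(int);

int doubleIt(int x) { return 2 * x; }
int negateIt(int x) { return -x; }
int notInGraph(int x) { return x + 1000; }  // never registered as a valid target

// The set of targets recorded for this call site.
const std::vector<Handler>& validTargets() {
    static const std::vector<Handler> targets{doubleIt, negateIt};
    return targets;
}

// Checked indirect call: returns false instead of calling when the target
// is not in the recorded set (real CFI terminates the process here).
bool checkedCall(Handler target, int arg, int* result) {
    const std::vector<Handler>& targets = validTargets();
    if (std::find(targets.begin(), targets.end(), target) == targets.end()) {
        return false;
    }
    *result = target(arg);
    return true;
}
```

An exploit that overwrites a function pointer with the address of an arbitrary code fragment fails the membership test before control is ever transferred.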

In Android 9, the CFI functionality has been extended: now it covers media frameworks as well as the NFC and Bluetooth stacks. In Android 10, CFI is embedded in the kernel.

The Integer Overflow Sanitization (IntSan) technology works in a similar way. The compiler embeds control functions into the resultant application code to make sure that the performed arithmetic operation won’t cause an overflow.

The technology was originally introduced in Android 7 to protect the media stack from the family of remotely exploitable Stagefright vulnerabilities discovered in it. In Android 8, it also protects the following components: libui, libnl, libmediaplayerservice, libexif, libdrmclearkeyplugin, and libreverbwrapper. In Android 10, the checks are applied to 11 media codecs and the Bluetooth stack. According to the developers, the checks embedded in Android 9 made it possible to neutralize 11 vulnerabilities.

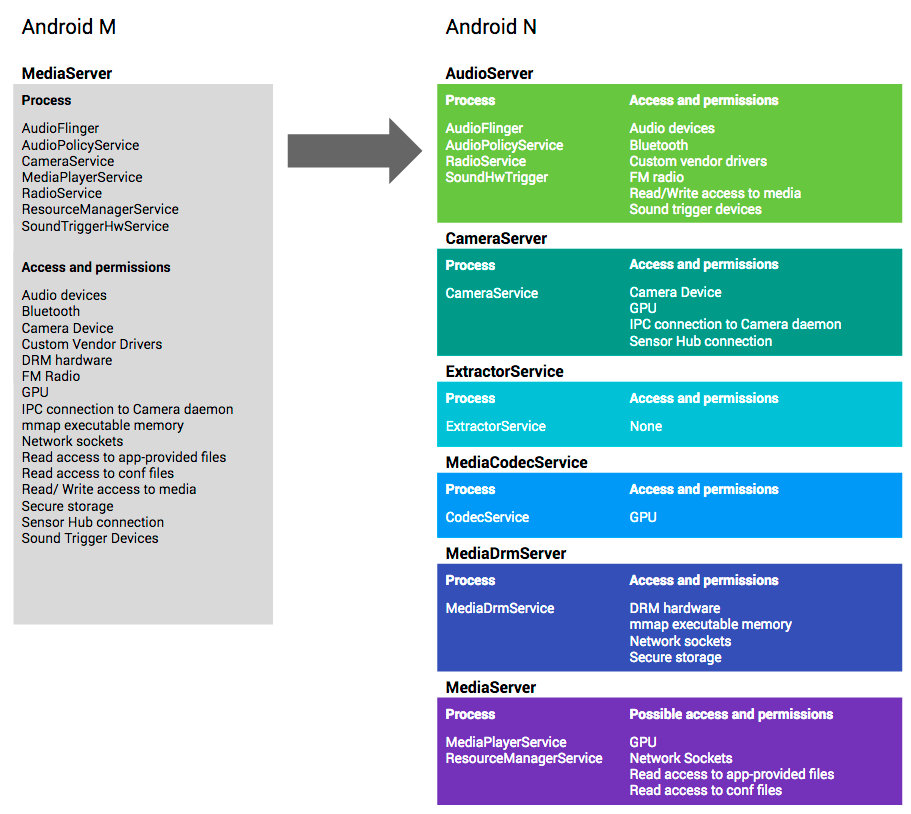

In Android 7, the media stack has been split into numerous independent services, and each of these services only has the permissions it really needs. The point is that the media codecs where the Stagefright vulnerabilities were discovered don’t have Internet access anymore and, accordingly, cannot be exploited remotely. More information on this topic can be found in the Google blog.

Starting with Android 10, media codecs use the Scudo memory allocator hindering such attacks as use-after-free, double-free, and buffer overflow.

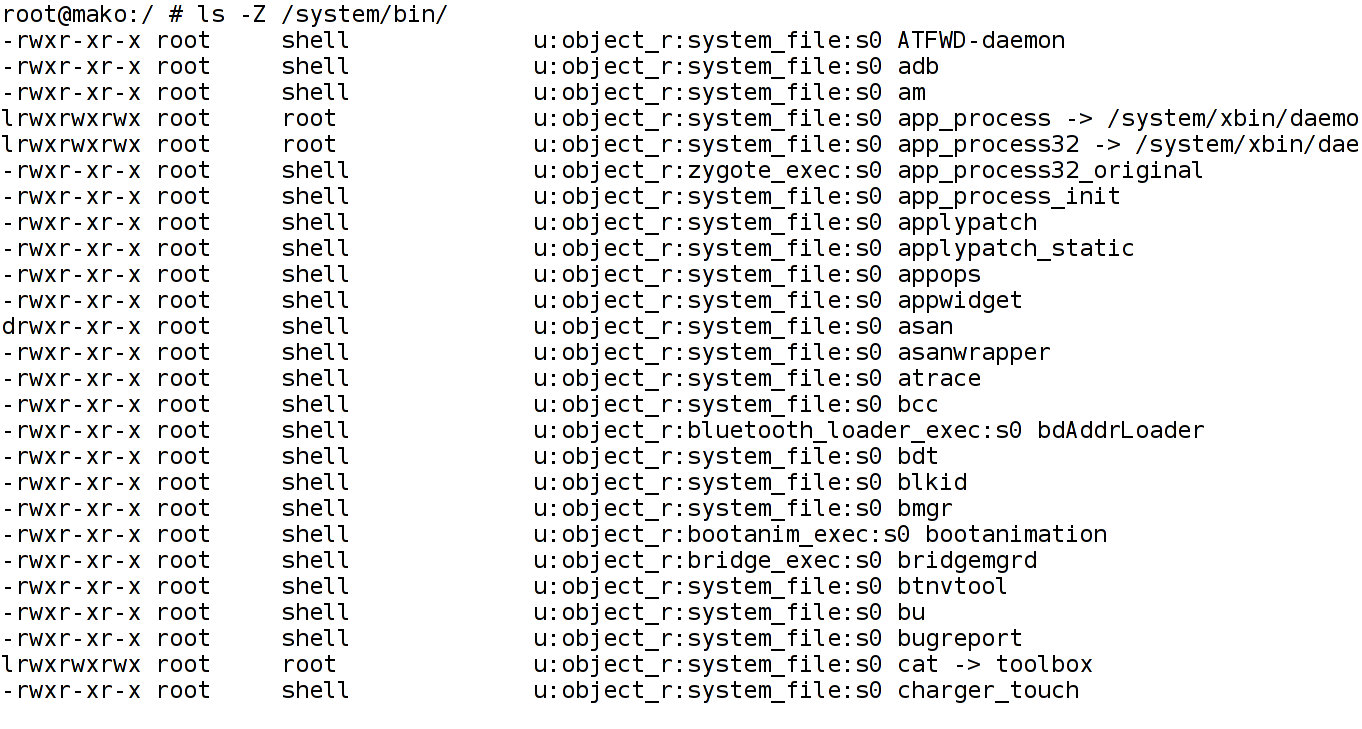

SELinux

The SELinux technology was another important step towards protecting Android users from potential vulnerabilities in OS components.

Developed by the US National Security Agency, SELinux has been included in many corporate and desktop Linux distributions to protect them against a broad range of attacks. One of the main SELinux functions is to prevent apps from accessing OS resources and data belonging to other apps.

For instance, SELinux can restrict powers of a web server so that it has access only to certain files and port ranges, cannot run binaries except for the specified ones, and has limited access to system calls. In fact, SELinux puts the app into a sandbox, thus, significantly limiting the capacity of a malefactor who managed to hack this app.

Shortly after the release of Android, SELinux developers launched the SEAndroid project. Its purpose was to port the system to the mobile OS and develop SELinux rules to protect its components. Starting with version 4.2, the practical achievements of this project have been incorporated into Android, although initially (versions 4.2-4.3) they were used only to collect information about the behavior of system components (in order to create rules on the basis of this information). In version 4.4, Google switched the system to the active mode (although with slight restrictions for some system daemons: installd, netd, vold, and zygote). Only in Android 5 did SELinux start operating at its full capacity.

Android 5 includes more than 60 SELinux domains (i.e. restriction rules) for almost every system component: from the init process to user apps. As a result, many attack vectors formerly used against Android, both by users willing to get root privileges and by malicious programs, became ineffective.

For instance, the CVE-2011-1823 vulnerability present in all Android versions older than 2.3.4 (used by the so-called Gingerbreak exploit, which corrupted memory in the vold daemon and then transferred control to a shell with root privileges) could not be used against OS versions starting with Android 5 because, according to SELinux rules, vold is no longer allowed to run other binaries. The same applies to CVE-2014-3100 (a buffer overflow in the keystore daemon on Android 4.3) and 70% of other vulnerabilities.

SELinux significantly reduces the risk that an attacker seizes control over the device by exploiting vulnerabilities in low-level system components (i.e. daemons written in C and C++ and run on behalf of root). At the same time, SELinux makes it much more difficult for the user to gain root privileges. Furthermore, root privileges no longer guarantee complete control over the system because SELinux makes no distinction between an ordinary user and a superuser.
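The default-deny logic behind this can be modelled with a simple rule table. The sketch below is a toy, not the SELinux implementation: the `Policy` class is hypothetical, and the domain, type, and class names are illustrative rather than copied from the real Android policy.

```cpp
#include <set>
#include <string>
#include <tuple>

// One allow rule: source domain, target type, object class, permission.
using Rule = std::tuple<std::string, std::string, std::string, std::string>;

// Toy policy: anything not explicitly allowed is denied. Note that the
// check never looks at the UID, so running as root changes nothing.
class Policy {
public:
    void allow(const std::string& domain, const std::string& type,
               const std::string& cls, const std::string& perm) {
        rules_.insert(Rule(domain, type, cls, perm));
    }

    bool isAllowed(const std::string& domain, const std::string& type,
                   const std::string& cls, const std::string& perm) const {
        return rules_.count(Rule(domain, type, cls, perm)) > 0;
    }

private:
    std::set<Rule> rules_;
};
```

If the policy contains no rule letting the daemon’s domain execute a shell, a hijacked daemon cannot spawn one, regardless of the privileges its process holds.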

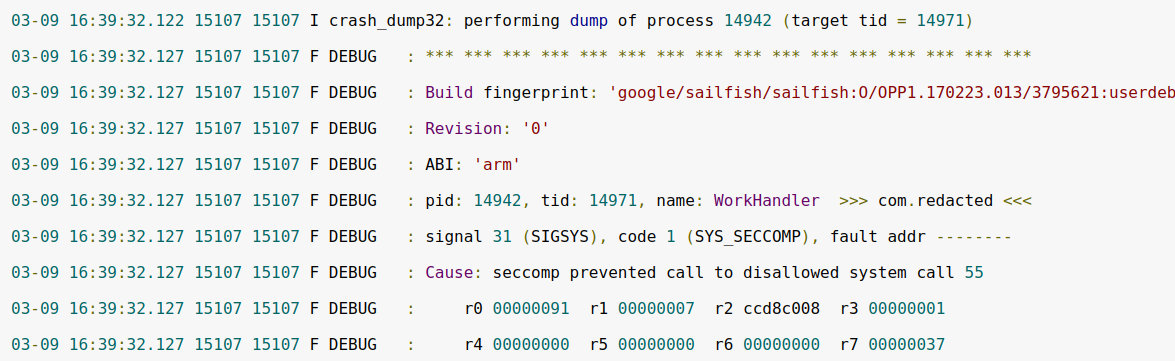

Seccomp-bpf

Seccomp is another Linux kernel technology; it restricts the list of potentially dangerous system calls available to an app. Using seccomp, you can, for instance, prohibit an app from using the execve system call (that is frequently used in various exploits) or block the listen system call (that enables the attacker to create a backdoor on a network port). Chrome’s tab isolation system for Linux is also based on seccomp.

The technology has been included in Android since version 7, but initially it affected only the system components. In Android 8, a seccomp filter was embedded in Zygote (the process that spawns the processes of all apps installed on the device).

The developers have identified the set of system calls required to load the OS and run most apps and then cut off the unnecessary ones. As a result, 17 system calls out of the 271 on ARM64 and 70 system calls out of the 364 on ARM have been blacklisted.

The example below demonstrates how seccomp is used in MediaExtractor:

    #include <libminijail.h>

    static const char kSeccompFilePath[] =
        "/system/etc/seccomp_policy/mediaextractor-seccomp.policy";

    int MiniJail()
    {
        struct minijail *jail = minijail_new();
        // Forbid the process from gaining new privileges
        minijail_no_new_privs(jail);
        // Log the system calls rejected by the filter
        minijail_log_seccomp_filter_failures(jail);
        // Enable seccomp filtering and load the policy file
        minijail_use_seccomp_filter(jail);
        minijail_parse_seccomp_filters(jail, kSeccompFilePath);
        // Apply the restrictions to the current process
        minijail_enter(jail);
        minijail_destroy(jail);
        return 0;
    }

File mediaextractor-seccomp.:

    ioctl: 1
    futex: 1
    prctl: 1
    write: 1
    getpriority: 1
    mmap2: 1
    close: 1
    munmap: 1
    dup: 1
    mprotect: 1
    getuid32: 1
    setpriority: 1
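To make the meaning of the policy more tangible, here is a sketch of how such a list could be turned into an allowlist. It is not the minijail parser: both functions are hypothetical, and the sketch handles only the simplest `name: 1` form shown in the policy above (real policies can also contain argument filters).

```cpp
#include <set>
#include <sstream>
#include <string>

// Parses a policy in the "name: 1" form and returns the set of allowed
// system call names. Lines that do not match this form are skipped.
std::set<std::string> parseAllowedSyscalls(const std::string& policyText) {
    std::set<std::string> allowed;
    std::istringstream lines(policyText);
    std::string line;
    while (std::getline(lines, line)) {
        const std::string::size_type colon = line.find(':');
        if (colon == std::string::npos) continue;
        const std::string name = line.substr(0, colon);
        std::istringstream rest(line.substr(colon + 1));
        int value = 0;
        if (!name.empty() && (rest >> value) && value == 1) {
            allowed.insert(name);
        }
    }
    return allowed;
}

// A system call that is absent from the allowlist is rejected.
bool isSyscallAllowed(const std::set<std::string>& allowed, const std::string& name) {
    return allowed.count(name) > 0;
}
```

With such a policy, a system call like execve is simply absent from the list, so compromised media-parsing code cannot use it to start a shell.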

Google Play Protect

In February 2012, Google joined the fight against malicious apps by launching Bouncer, an online app verification service. Bouncer runs every app published on Google Play in an emulator and subjects it to numerous tests in search of suspicious behavior.

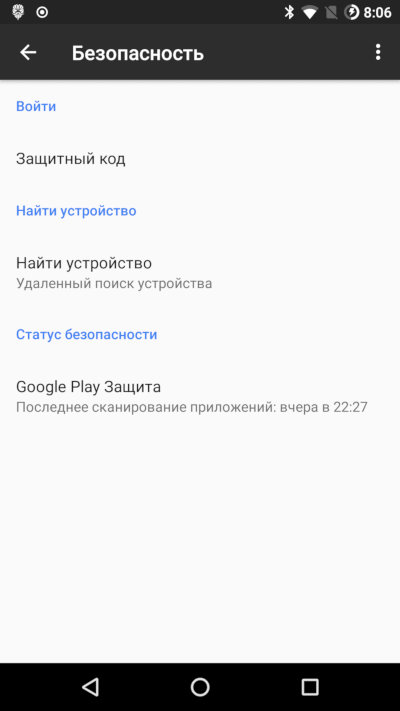

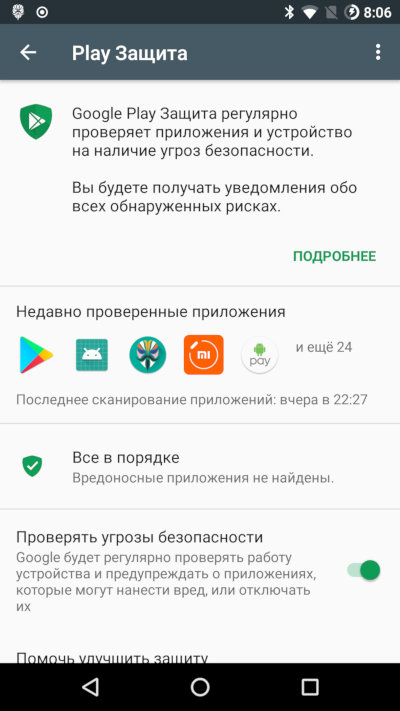

In November 2012, Google launched Verify Apps, an online service that scans software installed on smartphones for viruses. Initially, it only worked on Android 4.2, but by July 2013, Verify Apps had been integrated into Google Play Services and became available for all devices starting with version 2.3. Since April 2014, the scan has been performed not only at the app installation stage, but also on a regular schedule; since 2017, the checks can be monitored using the Google Play Protect interface (the Security section).

In addition to the new interface, Google introduced several new antivirus indicators. Starting with Android 8, the Play Store displays the app verification status on the application page, as well as a nice green shield in the list of installed apps.

Google Play Protect in Android 8

The only problem is that, according to a March 2020 antivirus test, Google Play Protect is ranked the worst antivirus product because it detects only 47.8% of new (up to four weeks old) viruses. This is definitely not good, even considering that Google cannot use the heuristic algorithms implemented in other such programs (for most antiviruses, even apps having the permission to send text messages are considered suspicious).

Smart Lock

Modern users are accustomed to fingerprint sensors and face scans used to unlock a smartphone; however, six years ago, it was difficult to convince people to protect their smartphones with PINs and passwords. Therefore, Google engineers created a not very reliable (in some cases, totally unreliable) but effective system called Smart Lock.

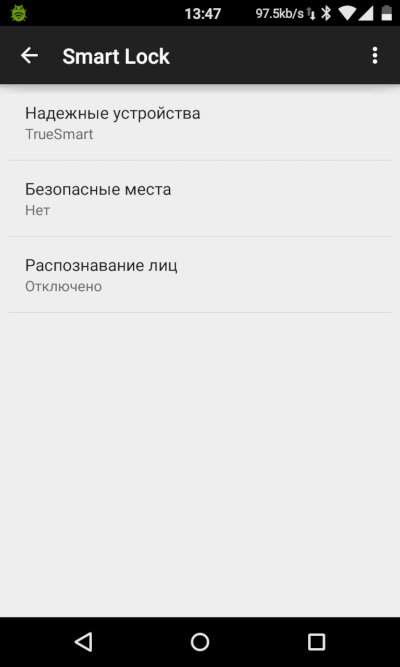

Introduced in Android 5, Smart Lock is a mechanism automatically disabling the lock screen protection after connecting to a trusted Bluetooth device (smart watch, car radio, TV box, etc.), in a trusted location, or after taking a snapshot of the user’s face. In fact, this is the official implementation of functions unofficially supported by third-party apps (for instance, SWApp Link used a Pebble watch for unlocking) and firmware from some manufacturers (e.g. Motorola’s Trusted Bluetooth).

Now these functions are available in Android; all the user has to do is set a PIN, pattern, or password on the lock screen, activate Smart Lock in the security settings, and add trusted Bluetooth devices, places, and faces.

According to Google, Smart Lock has doubled the usage of screen lock passwords by users. However, it is necessary to keep in mind that only one unlocking method available in Smart Lock is more or less reliable: connection to a trusted Bluetooth device. And even this method can be considered reliable only if your goal is to protect the stolen device: it won’t help you to defend your right to privacy before the police.

Currently, Android uses three screen unlocking mechanisms with different degrees of reliability and, accordingly, access levels:

- Password or PIN code is considered the most reliable mechanism that grants full control over the device without any restrictions;

- Fingerprint or face snapshot is less reliable; the system asks for a password after each phone reboot and every 72 hours; and

- Smart Lock is the least reliable method having the same restrictions as the biometric method. In addition, Smart Lock does not grant access to the Keymaster authentication keys (e.g. those used for payments), and the password is requested not every 72 hours, but every four hours.
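The three tiers above can be summarized in a small decision function. This is a sketch of the rules as listed, not Android’s actual lock screen logic; the enum and both functions are hypothetical, and the exact boundary handling (for example, what happens at precisely 72 hours) is an assumption.

```cpp
enum class UnlockMethod { PinOrPassword, Biometric, SmartLock };

// Hours after which the system falls back to the PIN or password.
// The values mirror the list above; 0 means "no timeout".
int reauthIntervalHours(UnlockMethod method) {
    switch (method) {
        case UnlockMethod::Biometric: return 72;
        case UnlockMethod::SmartLock: return 4;
        case UnlockMethod::PinOrPassword: return 0;
    }
    return 0;
}

// Decides whether the given method may unlock the device right now.
bool mayUnlock(UnlockMethod method, bool rebootedSinceLastPin, int hoursSinceLastPin) {
    if (method == UnlockMethod::PinOrPassword) return true;
    if (rebootedSinceLastPin) return false;  // a reboot always requires the PIN
    return hoursSinceLastPin < reauthIntervalHours(method);
}
```

The weaker the method, the shorter the window in which it is accepted without the primary credential.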

WebView

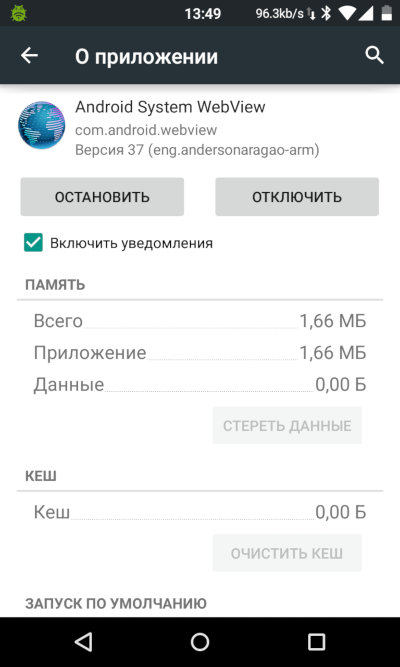

The first versions of Android included the WebKit-based WebView component allowing third-party apps to use the HTML/JS engine to display content. The majority of third-party browsers were based on this component.

In Android 4.4, WebView was heavily redesigned, and its engine was replaced by one developed within the Chromium project (version 33 in Android 4.4.3). Starting with version 5, not only is WebView based on Chromium, but it can also be updated via Google Play automatically (i.e. invisibly to the user). In other words, Google can now patch vulnerabilities in the engine as quickly as vulnerabilities in Google Chrome for Android. The user just needs a smartphone with Android 5 or higher connected to the Internet.

Starting with Android 8, the WebView rendering process has been isolated using seccomp. It runs in a very small sandbox that prohibits access to the permanent storage and network functions. In addition, WebView can now use the Safe Browsing technology familiar to all Chrome users. Safe Browsing warns about potentially unsafe sites and requires you to confirm that you really want to visit such a site.

SafetyNet

Starting with Android 7, Google Play Services include an API called SafetyNet that checks whether the device is authentic (by verifying its serial numbers), whether its firmware was altered, and whether the user has root privileges. Using this API, developers can write apps that won’t run on modified or unpatched firmware (judged by its security patch level).

For a long time, SafetyNet assessed the reliability of devices using heuristic methods that could be easily fooled. For instance, for many years, Magisk was used to hide the presence of root access and an unlocked bootloader from SafetyNet.

Recently, SafetyNet stopped relying on a simple bootloader check (which can be tricked by Magisk); instead, it uses a private encryption key from the secure KeyStore to validate the transmitted data. You can bypass this protection only by gaining access to the private key stored in the dedicated cryptographic coprocessor (TEE), which is almost impossible to do.

This means that very soon, modified Google-certified devices with Android 8 and higher won’t be able to pass the SafetyNet checks, and Magisk will be useless for working with banking clients and other apps using SafetyNet.

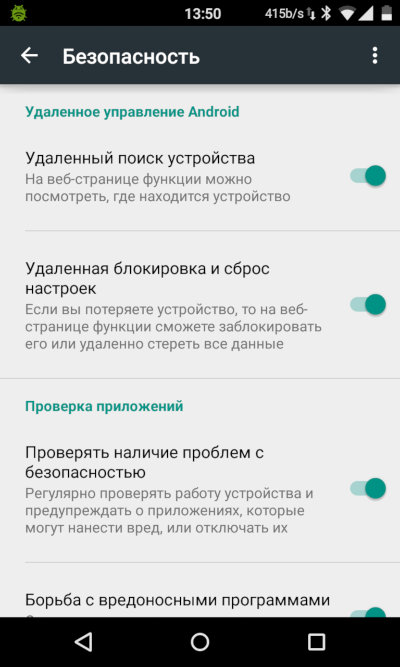

Kill Switch

In August 2013, Google launched Android Device Manager, a web service enabling users to lock their smartphones or revert them to factory settings. The service uses Google Play Services as a client part installed on the smartphone; this component is updated via Google Play; so, the function is available on any device starting with Android 2.3.

Starting with Android 5, the service includes the Factory Reset Protection function. When it’s activated, the ability to reset the device to factory settings becomes locked by a password. Google believes that this makes a stolen smartphone less useful and harder to resell (a smartphone once linked to a Google account cannot be unlinked without reverting its settings).

The only problem is that bootloaders of most smartphones can be unlocked perfectly legitimately. And after doing so, you can completely reflash the device.

Conclusions

The efforts of Google engineers have ultimately paid off. Today, the majority of vulnerabilities in your smartphone originate not from Android, but from the device’s drivers and firmware. The total number of vulnerabilities in Android is already less than that in iOS, and the rewards for Android vulnerabilities detected in the framework of the Bug Bounty Program exceed rewards for iOS vulnerabilities.

A modern Android smartphone (unless, of course, it’s a cheap made-in-China device) is well-protected from nearly all directions. Its memory cannot be dumped, and it cannot be bricked, downgraded, or hacked by bypassing the lock screen. And these built-in protection mechanisms can be disabled only by the phone manufacturer.